Watch On-Demand

Explore new AI insights from the 2026 Software Engineering Benchmarks Report – backed by 8.1M+ PRs across 4,800 engineering teams and 42 countries.

Speakers

Rob Zuber

CTO

CircleCI

Smruti Patel

SVP

Apollo GraphQL

Yishai Beeri

CTO

LinearB

About the workshop

No fluff. Just data-driven insights from millions of data points and a 35-minute roundtable discussion breaking down:

- State of the Market: Survey results and reflections from our 2026 AI in Engineering Leadership survey.

- 2026 Benchmarks: This year’s benchmarks include 20 metrics spanning the entire SDLC – plus 3 all-new AI metrics.

- [NEW] AI insights: A brand-new segment breaking down the impact AI tools are having on delivery velocity, code quality, and team health.

Your next read

Report

2026 Software Engineering Benchmarks Report

Created from a study of 8.1M+ PRs from 4,800 engineering teams across 42 countries.

Demo

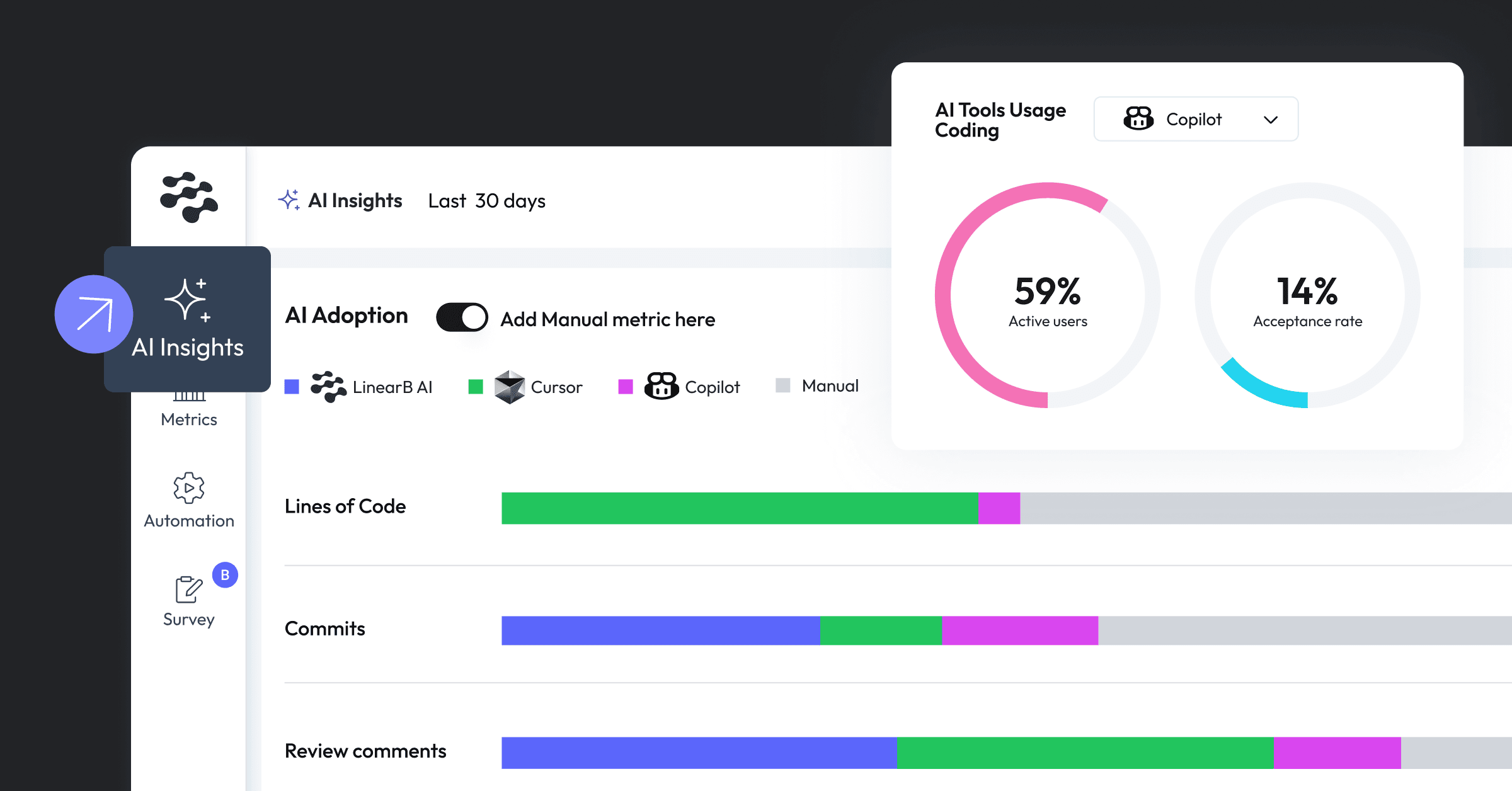

Understand AI adoption and developer impact with the AI Insights Dashboard

This demo guides you through the AI Insights Dashboard, demonstrating how to track and analyze trends in AI tool adoption, rule coverage, and code quality....

Demo

Using the MCP Server with Claude

This demo walks you through connecting Anthropic’s Claude to LinearB data, querying insights and recommendations, and building repeatable metrics dashboards –...