AI code review tools: 2025 evaluation guide

We built the industry’s first controlled evaluation framework to compare leading AI code review tools. Inside you’ll find:

Benchmark results: CodeRabbit vs. LinearB vs. Copilot

Tactical guidance on how to run the experiment yourself with real injected bugs

Tool fit guide to help your team choose the right tool based on your unique priorities

AI code review tools: 2025 evaluation guide

Download your free copy

Benchmark results

We ran a head-to-head benchmark of 5 leading AI code review tools using real-world code and seeded bugs. You’ll find the results broken down by:

Clarity: Did each tool catch the bug, propose a fix, and explain why?

Composability: Which tools have a high signal-to-noise ratio?

DevEx: Is there minimal friction during set-up and a seamless DevEx?

How to run the experiment yourself

Our benchmark was designed to be fully reproducible. Inside you’ll find step-by-step guidance on how to run the test yourself with the following resources:

All code changes, injected bugs, and review artifacts

Evaluation scripts, documented and preserved in a version-controlled repository

Detailed documentation for replicating the complete testing methodology

Tool fit guide

Beyond test scores, selecting the right AI code review tool also involves evaluating your team’s unique priorities. This section includes:

A comparative overview of features across tools, including strengths & trade-offs

Tool fit suggestions, according to different team sizes and workflows

Guidance on what to consider during vendor evaluations

Download your free copy

More resources

Report

2026 Software Engineering Benchmarks Report

Created from a study of 8.1+ M PRs from 4,800 engineering teams across 42 countries.

Workshop

2026 Benchmarks Insights

Explore new AI insights from the 2026 Software Engineering Benchmarks Report – backed by 8.1M+ PRs across 4,800 engineering teams and 42 countries.

Demo

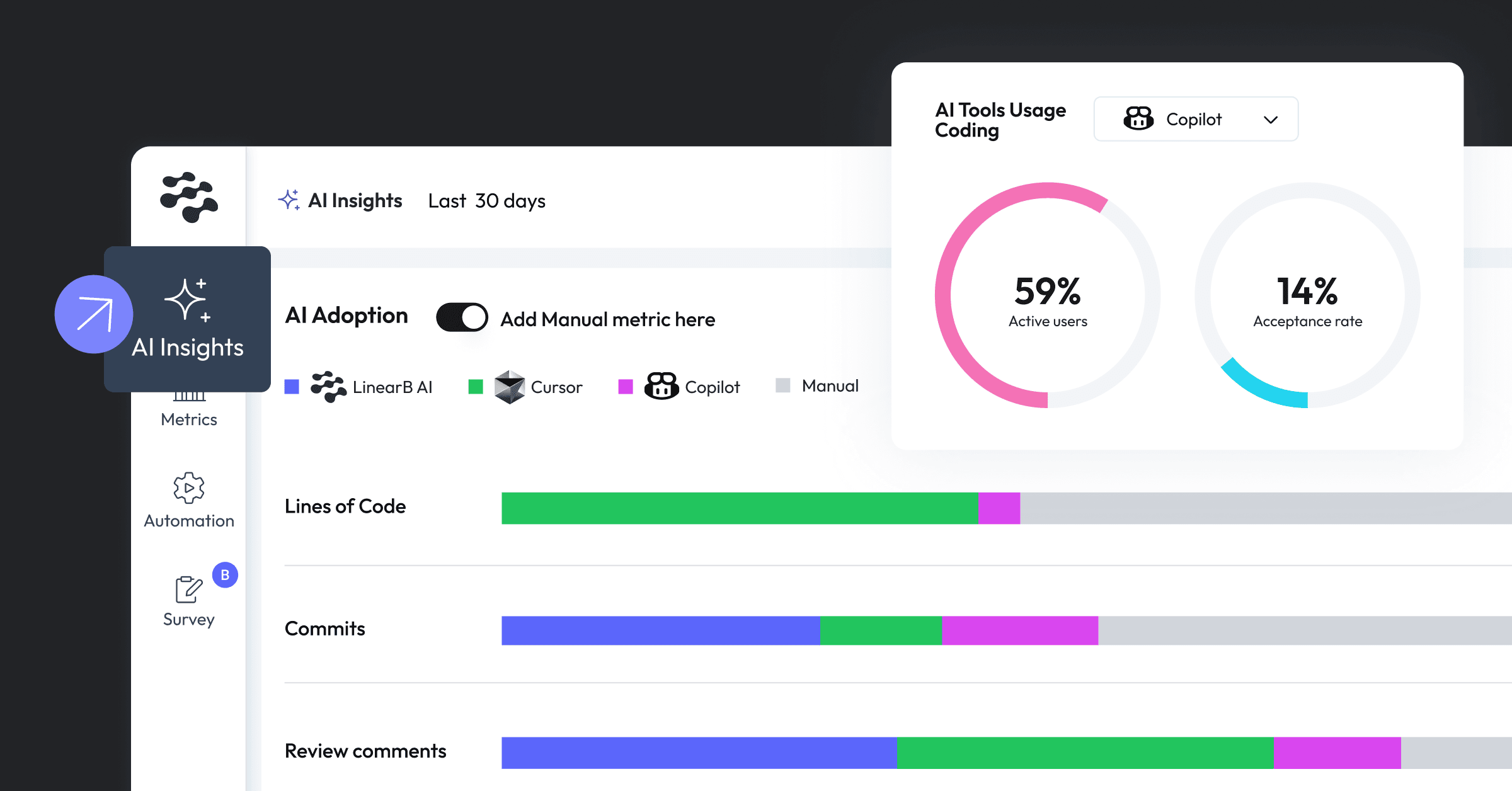

Understand AI adoption and developer impact with the AI Insights Dashboard

This demo guides you through the AI Insights Dashboard, demonstrating how to track and analyze trends in AI tool adoption, rule coverage, and code quality....