TL;DR: We’re launching the LinearB MCP server, AI Insights dashboard, and DevEx surveys, all included in our new Essentials plan starting at $29/month. These tools are designed to help you understand and improve AI’s real impact on delivery and developer experience.

AI has changed how software is built. Your continued investment in AI speeds up code creation, but that doesn’t mean you’re actually shipping more features. Now more than ever, teams need to be able to test and adopt new tools quickly, with clear insight into how those tools affect their engineering operations.

That’s why we’re launching the Essentials package, designed to make measuring and improving AI productivity accessible to every engineering organization. Starting at $29 per month, it gives you everything you need to measure and manage your AI adoption responsibly.

What’s included?

For engineering teams ready to scale AI adoption and productivity, the Essentials plan combines three new features (the LinearB MCP server, the AI Insights dashboard, and developer surveys) with Git metrics, AI code reviews, PR automations, release detection, and more. You’ll get answers to questions like:

- Which AI tools are actually being adopted?

- How is AI impacting delivery?

- How are developers experiencing these changes?

- Where should I invest next?

LinearB is the only platform that enables engineering leaders to track a wide variety of AI tools out of the box and take action to ship faster. Because it is vendor-agnostic and future-proof, LinearB gives engineering leaders both the visibility and the control they need to adopt AI quickly and responsibly.

Now let’s dive into new capabilities available in all paid plans, including the new Essentials plan.

A new way to connect with your engineering data

We’re excited to introduce the LinearB Model Context Protocol (MCP) server. Easily access and interact with all your engineering signals, from code activity to delivery performance to AI usage. Instead of digging through reports, use natural language or our library of pre-built prompts to surface inefficiencies, spot opportunities, and align delivery with business goals in minutes. Some examples include:

- Building custom reports with access to team, group, and metrics data

- Uncovering system-level bottlenecks, trends, and benchmarks without manual analysis

- Drilling down for hyper-specific context on any subject

Imagine building tailored reports, or receiving timely insights and next-best actions proactively in your inbox or in Slack or Microsoft Teams, without prompting or searching. This is just the beginning of how AI helps you run a more innovative, efficient engineering organization. Explore more use cases in this blog post.

Understand how AI influences your engineering flow

The MCP server becomes even more powerful when paired with our new AI Insights dashboard and developer surveys. Together, they give you a complete picture of how AI tools shape your delivery flow and developer experience.

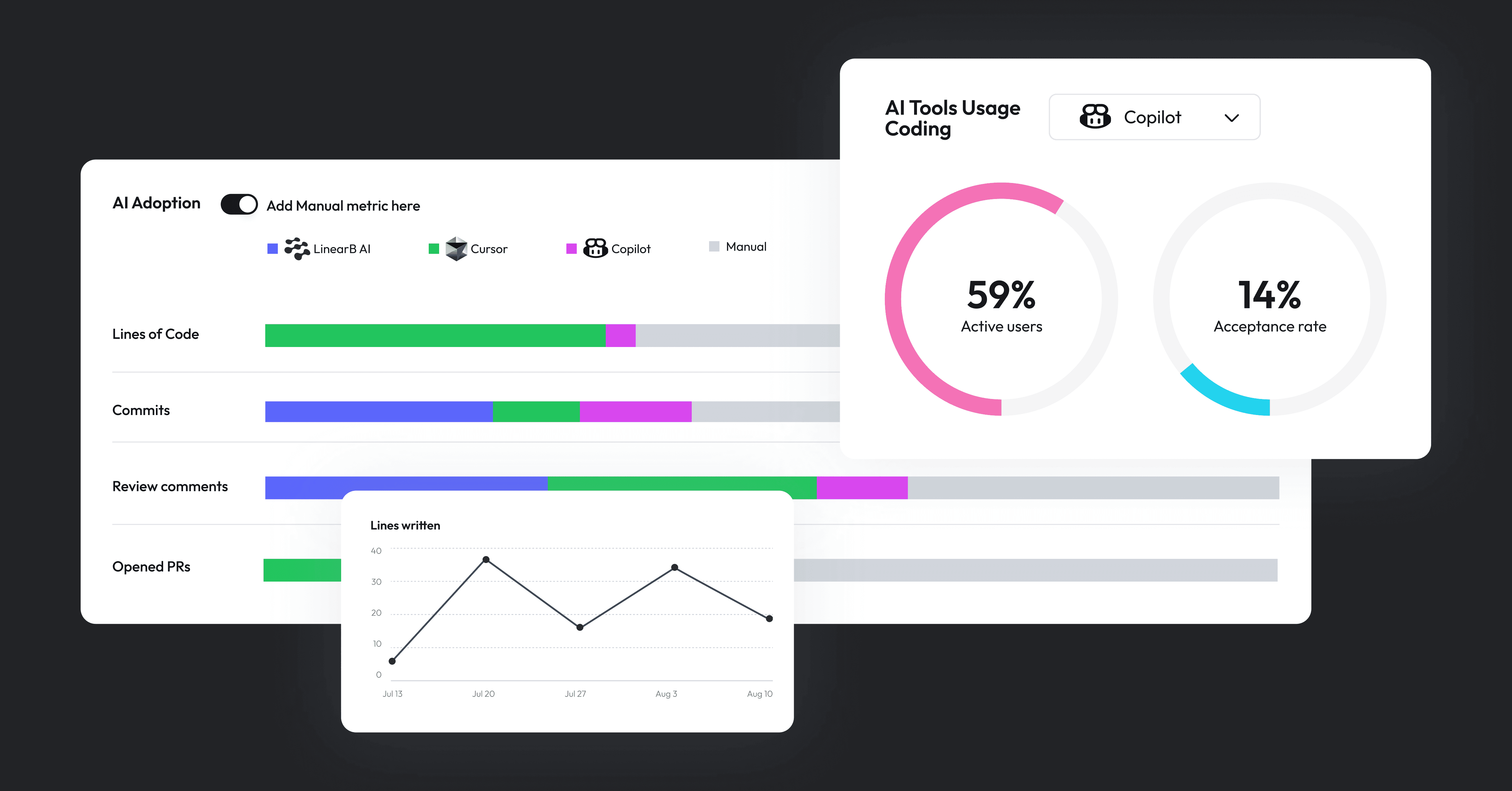

Use the AI Insights dashboard to see how different AI tools influence your development workflow. From adoption across repos to the impact of AI code reviews, code commits, and opened PRs, you get a unified view of how widely and effectively AI tools are used across your organization.

As a vendor-agnostic AI productivity platform, LinearB tracks over 24 AI tools, down to repo-level usage, through a simple GitHub integration. Supported tools include:

| | | | |
|---|---|---|---|
| Aider | CodeRabbit | GitHub Copilot | OpenCode |
| Atlassian Code Reviewer for Bitbucket | Codex | GitLab Duo | Qodo |
| Atlassian Rovo | Cursor | Google Jules | Sourcegraph |
| Bito | Devin | Graphite | Tabnine |
| Claude Code | Ellipsis Dev | Greptile | Tusk |
| CodeAnt AI | Gemini | Korbit | Windsurf |

Here are some ways to turn dashboard signals into meaningful analysis:

- Surface issues identified by AI code reviews, such as bugs, security concerns, and maintainability issues.

- Understand how AI is used across the stages of the delivery process, comparing activity assisted by different AI agents with manual activity.

- Measure AI adoption and governance maturity across your entire repo library with out-of-the-box tracking for over 24 leading AI tools.

- Evaluate AI trust signals by tracking how many developers actively use AI and how often they accept AI-generated suggestions.

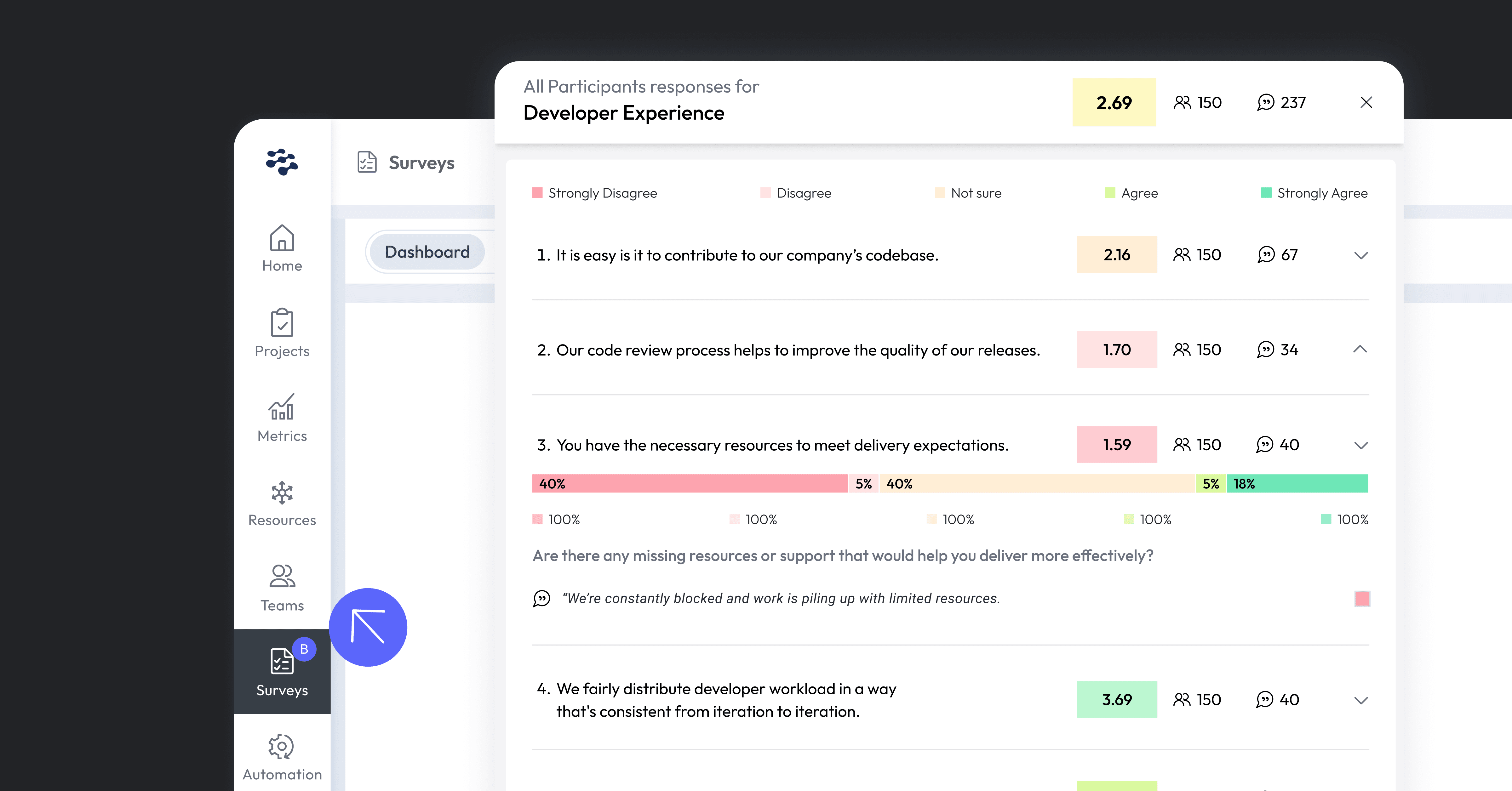

Developer surveys add the human layer, capturing how engineers feel about their workflows and tools: insights that hard metrics may not surface. With out-of-the-box questions, you can quickly collect anonymous responses from your team to measure:

- Trust in AI-assisted development

- Satisfaction with engineering processes and tools

- Cultural friction and team collaboration

Our surveys feature both quantitative (Likert-scale) and qualitative questions to measure developer productivity and satisfaction. Combining developer sentiment with metrics lets you make more informed decisions and drive targeted improvements.

Drive real change with AI and automation

AI adoption is just the beginning. The real challenge is connecting AI usage to outcomes such as velocity, quality, and developer experience. By combining engineering metrics with human insight and layering in automation that helps you act before bottlenecks stall delivery, LinearB enables you to ship faster, improve developer experience, and confidently lead in today’s AI era.

Try it out!

The LinearB MCP server, AI Insights dashboard, and developer surveys are available in the new Essentials package. If you’re an existing subscriber, you get access at no additional cost: just reach out to your LinearB representative or contact us, and we’ll get you set up.

Want to dive deeper? Register for our upcoming workshop on evaluating AI productivity and closing the AI gap with executives. Learn how to turn executive expectations into outcomes while leading your engineering team with confidence.