By the end of 2024, Generative AI is projected to generate 20% of all code – or 1 in every 5 lines.

Nearly every engineering team is thinking about how to integrate GenAI into their processes, and many are already investing in tools like Copilot, CodeWhisperer, or Tabnine to kickstart this initiative.

In fact, our recent GenAI Code Report revealed that 87% of participants are likely or highly likely to invest in a GenAI coding tool in 2024.

As with any new tech rollout, the next question quickly becomes: how do we measure the impact of this investment? It’s every engineering leader’s responsibility to their board, executive team, and developers alike to zero in on an answer and report their findings.

LinearB’s approach to measuring the impact of GenAI code starts with PR labels. Every pull request that includes GenAI code is labeled, allowing metric tracking for this type of work. From there, you can compare success metrics against the unlabeled PRs.

To help you get started, we put together the following quickstart guide:

- Create a LinearB Account and connect your git repos.

- Install gitStream in your git organization and auto-apply labels that mark PRs supported by generative AI tools.

- Use your LinearB dashboard to measure and track the impact of generative AI initiatives.

This guide will take about 10 minutes to complete. Let’s get started!

Step 1: Create A LinearB Account

If you don’t already have a LinearB account, you first need to create one. As part of the onboarding process, you’ll need to connect LinearB to your git repos so it can begin to track your metrics. If you don’t have administrative permissions for your git repositories, it would be a good idea to plan ahead by contacting the appropriate individuals at your organization. You’ll also need these privileges in the next step, so it might be easier to batch these requests.

Once you’ve connected LinearB to your git repositories, your dashboard will populate with data. If you want, you can also take this moment to connect LinearB to your project management solution to get all your metrics, but it isn’t necessary to follow this guide.

Step 2: Set Up gitStream

Now that you have LinearB set to track your metrics, it’s time to set up gitStream to handle workflow automations. gitStream is a workflow automation tool for code repositories that enables you to handle a wide range of tasks automatically via YAML configurations and JavaScript plugins. In this guide, gitStream serves the role of automatically labeling PRs that are supported by generative AI tools so you can filter them inside LinearB.

Like LinearB, gitStream is a GitHub and GitLab app, so you’ll need someone with admin privileges on your git repos to install it. Head over to the docs to find installation instructions for GitHub and GitLab. We recommend installing the generative AI gitStream automations at the organization level so they are applied consistently. With gitStream installed, you have three options for tracking generative AI usage: a list of known users, PR tags, or prompts in GitHub comments.

For this guide, we’ll show examples for GitHub Copilot, but you can easily adapt them to other generative AI tools. To use any of the examples, create a new CM file inside the cm repo for your organization (if you installed at the organization level) and copy and paste the configurations you want from this guide into that file.

Label Based on Known User List

If you have an opt-in program for developers to adopt generative AI tools, a good solution to track the impact is to label based on the list of opted-in users. The following automation example shows how to use a pre-determined list to automatically label PRs based on whether the author has adopted generative AI tools.

    # -*- mode: yaml -*-

    manifest:
      version: 1.0

    automations:
      label_genai:
        # For all PRs authored by someone who is specified in the genai_contributors list
        if:
          - {{ pr.author | match(list=genai_contributors) | some }}
        # Apply a label indicating the user has adopted Copilot
        run:
          - action: add-label@v1
            args:
              label: '🤖 Copilot'

    genai_contributors:
      - username1
      - username2
      - etc

If you want to pull the list of opted-in generative AI users from a centralized source, you can leverage gitStream plugins to connect to external data sources.
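As a rough illustration of the logic such a plugin might contain, here is a minimal JavaScript sketch that turns a roster from a central source into a lookup set and checks a PR author against it. The roster format (a JSON array of usernames) and the function names `parseRoster` and `isGenAiContributor` are assumptions made for this example, not part of gitStream. How a function like this is registered as a plugin is described in the gitStream plugin docs and is not shown here.

```javascript
// Hypothetical helpers; the names and the roster format are assumptions.

// Parse a roster delivered as a JSON array of usernames, e.g. '["alice", "Bob"]'.
// Names are trimmed and lowercased because GitHub logins are case-insensitive.
function parseRoster(jsonText) {
  const parsed = JSON.parse(jsonText);
  if (!Array.isArray(parsed)) {
    throw new TypeError("roster must be a JSON array of usernames");
  }
  const names = parsed
    .map((name) => String(name).trim().toLowerCase())
    .filter((name) => name.length > 0);
  return new Set(names);
}

// Return true when the PR author appears in the opted-in roster.
function isGenAiContributor(author, roster) {
  return roster.has(String(author).trim().toLowerCase());
}

// Usage with an inline roster standing in for the external source.
const roster = parseRoster('["username1", " Username2 ", ""]');
console.log(isGenAiContributor("USERNAME2", roster)); // true
console.log(isGenAiContributor("someone-else", roster)); // false
```

Keeping the lookup in a plain function like this makes it easy to test the roster handling separately from the automation that applies the label.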

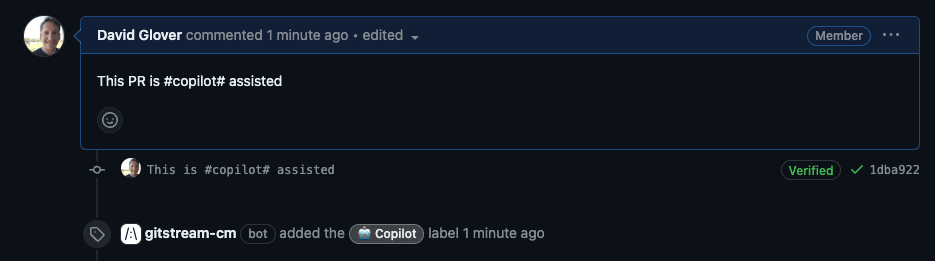

Label Based on PR Tags

If your developers are less consistent with using generative AI tools, an option to track the impact of generative AI adoption is to include a special tag in the PR description or comment that informs gitStream that the PR author used generative AI support to write the code. For example, you could require developers to include a #copilot# tag in the PR description and use gitStream to detect the presence of the tag and automatically label the PR.
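The detection itself is just a text search across the PR's fields. The sketch below shows that idea in plain JavaScript, outside of gitStream. The PR object shape (`title`, `description`, `comments`, `commitMessages`) and the function name `hasCopilotTag` are assumptions for illustration only.

```javascript
// Hypothetical PR shape for illustration; gitStream supplies its own context.
const COPILOT_TAG = "#copilot#";

// Return true when the tag appears in the title, description,
// any comment, or any commit message.
function hasCopilotTag(pr) {
  const texts = [
    pr.title,
    pr.description,
    ...(pr.comments ?? []),
    ...(pr.commitMessages ?? []),
  ];
  return texts.some(
    (text) => typeof text === "string" && text.includes(COPILOT_TAG)
  );
}

const examplePr = {
  title: "Add retry logic",
  description: "Wrote the backoff loop with help. #copilot#",
  comments: [],
  commitMessages: ["add retry"],
};
console.log(hasCopilotTag(examplePr)); // true
```

The gitStream configuration below performs the same check inside the automation.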

    # -*- mode: yaml -*-

    manifest:
      version: 1.0

    automations:
      label_copilot:
        # Detect PRs that contain the text '#copilot#' in the title, description, comments, or commit messages
        if:
          - {{ copilot_tag.pr_title or copilot_tag.pr_desc or copilot_tag.pr_comments or copilot_tag.commit_messages }}
        # Apply a label indicating the user has adopted Copilot
        run:
          - action: add-label@v1
            args:
              label: '🤖 Copilot'

    copilot_tag:
      pr_title: {{ pr.title | includes(regex=r/#copilot#/) }}
      pr_desc: {{ pr.description | includes(regex=r/#copilot#/) }}
      pr_comments: {{ pr.comments | map(attr='content') | match(regex=r/#copilot#/) | some }}
      commit_messages: {{ branch.commits.messages | match(regex=r/#copilot#/) | some }}

Prompt Users to Indicate Generative AI Usage

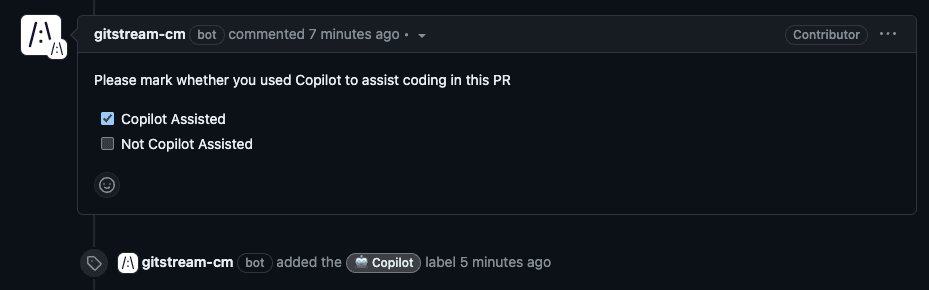

One of gitStream’s best features is its ability to create complex, highly configurable automations that respond to the changing conditions of a PR. If the first two options for tracking generative AI contributions don’t work for you, you can create an automation that posts a comment asking the PR author to indicate whether they used generative AI to help write the code in the PR. gitStream will automatically apply the label if the author indicates yes.

The comment prompt requires two separate automations. The first creates the comment with the prompt, and the second labels the PR when someone indicates they used generative AI. You'll need to create a separate CM file for each because they have different execution triggers.
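To make the second automation's condition concrete, here is a small JavaScript sketch of the check it performs: look only at comments posted by the gitStream bot and test whether the "Copilot Assisted" box is ticked. The comment shape (`commenter`, `content`) mirrors the attributes used in the configuration, but the function name `authorConfirmedCopilot` and the substring match on the commenter name are assumptions for this example.

```javascript
// Pattern from the labeling automation: a checked "Copilot Assisted" box.
// It does not match "- [x] Not Copilot Assisted", because "Not" sits
// between the checkbox and the words the pattern expects.
const CHECKED_BOX = /- \[x\] Copilot Assisted/;

// Hypothetical comment shape: { commenter, content }.
function authorConfirmedCopilot(comments, botName = "gitstream-cm") {
  return comments
    .filter((c) => String(c.commenter).includes(botName))
    .some((c) => CHECKED_BOX.test(c.content));
}

const prompt = "Please mark whether you used Copilot to assist coding in this PR\n\n";
const unchecked = prompt + "- [ ] Copilot Assisted\n- [ ] Not Copilot Assisted";
const checkedYes = prompt + "- [x] Copilot Assisted\n- [ ] Not Copilot Assisted";
const checkedNo = prompt + "- [ ] Copilot Assisted\n- [x] Not Copilot Assisted";

console.log(authorConfirmedCopilot([{ commenter: "gitstream-cm", content: unchecked }])); // false
console.log(authorConfirmedCopilot([{ commenter: "gitstream-cm", content: checkedYes }])); // true
console.log(authorConfirmedCopilot([{ commenter: "gitstream-cm", content: checkedNo }])); // false
```

Restricting the check to the bot's own comment means a checkbox pasted into an unrelated comment by another user does not trigger the label.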

The first CM file posts the prompt:

    # -*- mode: yaml -*-

    manifest:
      version: 1.0

    on:
      - pr_created

    automations:
      comment_copilot_prompt:
        # Post a comment for all PRs to prompt the PR author to indicate whether they used Copilot to assist coding in this PR
        if:
          - true
        run:
          - action: add-comment@v1
            args:
              comment: |
                Please mark whether you used Copilot to assist coding in this PR

                - [ ] Copilot Assisted
                - [ ] Not Copilot Assisted

The second CM file applies the label:

    # -*- mode: yaml -*-

    manifest:
      version: 1.0

    on:
      - comment_added
      - commit
      - merge

    automations:
      # You should use this automation in conjunction with comment_copilot_prompt.cm
      label_copilot_pr:
        # If the PR author has indicated that they used Copilot to assist coding in this PR,
        # apply a label indicating the PR was supported by Copilot
        if:
          - {{ pr.comments | filter(attr='commenter', term='gitstream-cm') | filter(attr='content', regex=r/\- \[x\] Copilot Assisted/) | some }}
        run:
          - action: add-label@v1
            args:
              label: '🤖 Copilot'

Step 3: Track Impact Over Time With Your LinearB Dashboard

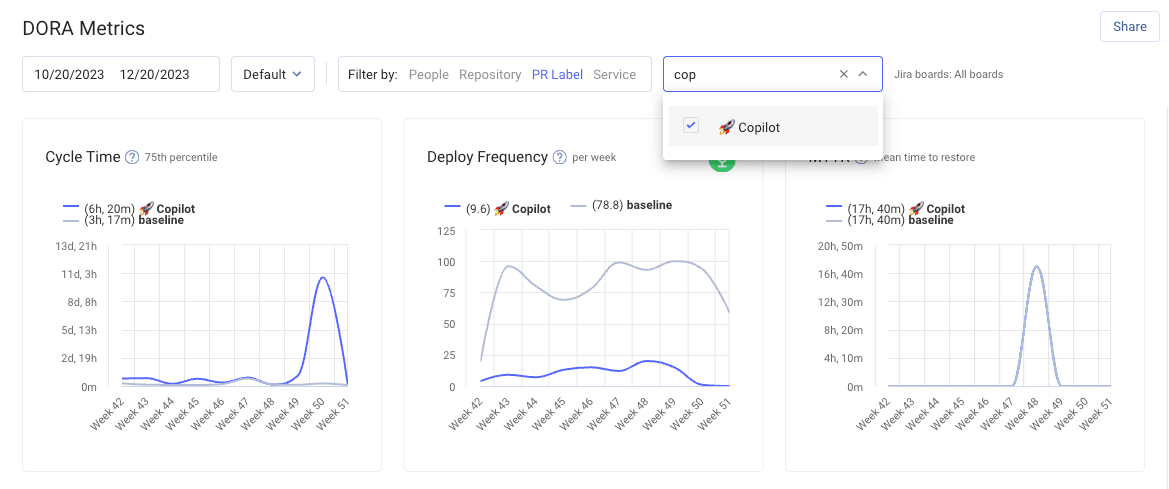

Once gitStream labels your PRs, you can log into your dashboard and use the filter capability at the top of any metrics dashboard. This will show you the metrics for all of your developers using generative AI tools and enable you to easily compare their cycle time, deployment frequency, change failure rate, and more against the baseline of your entire organization.
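Conceptually, the comparison is a split of your PRs by label followed by the same metric computed on each group. The JavaScript sketch below illustrates that idea only; it is not how LinearB computes its metrics. The record shape (`labels`, `cycleTimeHours`), the function names, and the sample numbers are all made up for the example.

```javascript
// Hypothetical PR records; field names and numbers are invented for illustration.
const LABEL = "🤖 Copilot";

// Arithmetic mean, or null when there is nothing to average.
function mean(values) {
  if (values.length === 0) return null;
  return values.reduce((sum, v) => sum + v, 0) / values.length;
}

// Compare labeled PRs against the baseline of all PRs for one numeric metric.
function compareToBaseline(prs, label, metric) {
  const labeled = prs.filter((pr) => pr.labels.includes(label));
  return {
    labeledCount: labeled.length,
    totalCount: prs.length,
    labeledMean: mean(labeled.map((pr) => pr[metric])),
    baselineMean: mean(prs.map((pr) => pr[metric])),
  };
}

const samplePrs = [
  { labels: ["🤖 Copilot"], cycleTimeHours: 10 },
  { labels: [], cycleTimeHours: 30 },
  { labels: ["🤖 Copilot", "bug"], cycleTimeHours: 20 },
  { labels: ["docs"], cycleTimeHours: 40 },
];

const result = compareToBaseline(samplePrs, LABEL, "cycleTimeHours");
console.log(result.labeledMean, result.baselineMean); // 15 25
```

Reporting the group sizes alongside the averages matters here: a difference based on a handful of labeled PRs deserves less weight than one based on hundreds.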

The Future of Initiative Tracking

As exciting as it is to talk about the future of the software delivery landscape, the reality is that GenAI is likely just one initiative that you as an engineering leader have in flight right now.

Your mind is probably also spinning about your developer experience initiative, your agile coaching initiative, your merge standards initiative, your test coverage initiative, your new CI pipeline initiative, etc, etc.

Universal label tracking with gitStream allows you to measure the impact of any initiative you’ve kicked off with your team. This way, you can answer questions like:

- What is the ROI on this new 3rd party tool we bought?

- Should we roll this agile coaching initiative out to the rest of the organization?

- Is changing up my CI pipeline allowing us to speed up our delivery? By how much?

Advocating for more headcount or even for another funding round is much more effective when you can point to a dashboard with tangible engineering results that you can trace back to any given initiative, GenAI or otherwise.

LinearB metrics and workflow automation have already saved developers thousands of hours, with the average repo seeing a 61% decrease in Cycle Time.

You can start tracking the impact of your GenAI initiative today with a free forever account! Click here to schedule a personalized demo.