Chaos Engineering might sound like a buzzword, but take it from someone who used to joke that his job title was Chief Chaos Engineer (more on that later): it is much more than buzz or a passing fad. It’s a practice.

The world can be a scary place, and more and more companies are turning to Chaos Engineering to proactively poke and prod their systems. In doing so, they are improving their reliability and guarding against unexpected failures in production and unplanned downtime.

During my career I have dealt with my fair share of outages, including one that caught me mid-song during a bout of karaoke and far too many that woke me up at 02:00. As co-founder and CTO of Gremlin, I do my best to make sure no other engineers have to suffer sleepless nights worrying about their product.

But the question remains: what is Chaos Engineering, and where did it come from?

A Short History

Chaos Engineering’s spiritual predecessor goes by a much more widely recognized name: disaster recovery. The goal when that practice was introduced was much the same as it is today: proactively suss out production problems by injecting failure.

Netflix’s Chaos Monkey is probably the most widely publicized Chaos Engineering tool, and it arguably kickstarted the adoption of Chaos Engineering outside of large companies. Its fame, however, has led to the erroneous belief that Netflix invented the practice. In fact, the practice was already in wide use among the titans of technology.

Over a decade ago, during my time as a Lead Software Engineer at Amazon, we implemented several crude practices designed to inject failure into our systems. The most rudimentary was employed by a man called Jesse Robbins, who earned the nickname “Master of Disaster” by running through data centers pulling out cables.

Let’s just say the practice has evolved a lot since those early days, and your data center cables are much safer now.

What is Chaos Engineering?

“What Chaos Engineering really is, is the art, if you want to call it that, of introducing controlled chaos.”

- 2:16 on the Dev Interrupted podcast

At its core, Chaos Engineering is a disciplined approach to identifying potential failures before they have an opportunity to become customer-facing outages.

It is a practice that lets you safely test your assumptions about how your systems will behave under duress by actually exercising their resilience mechanisms in a controlled fashion. You literally "break things on purpose" to validate and build resiliency. The end goal of Chaos Engineering is not to inject arbitrary failure into a system, but to strategically inject turbulence that enhances the stability and resiliency of your systems.
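To make “controlled” concrete, here is a minimal sketch of a single experiment, assuming you can supply three callables of your own: a steady-state check (for example “p99 latency stays under 300 ms”), a fault injection, and a rollback. The names `run_experiment`, `steady_state`, `inject`, and `rollback` are hypothetical and are not Gremlin’s API; the point is only the shape: verify a healthy baseline, inject, watch, stop early if the hypothesis breaks, and always undo the fault.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class ExperimentResult:
    hypothesis_held: bool
    aborted: bool


def run_experiment(
    steady_state: Callable[[], bool],  # hypothetical health check supplied by you
    inject: Callable[[], None],        # hypothetical fault injection (e.g. added latency)
    rollback: Callable[[], None],      # hypothetical undo for that fault
    duration_s: float = 30.0,
    check_interval_s: float = 1.0,
) -> ExperimentResult:
    """Run one controlled experiment: verify, inject, observe, always roll back."""
    # Never start from an unhealthy baseline; the result would be meaningless.
    if not steady_state():
        return ExperimentResult(hypothesis_held=False, aborted=True)

    inject()
    try:
        deadline = time.monotonic() + duration_s
        while time.monotonic() < deadline:
            if not steady_state():
                # Hypothesis disproved: stop early instead of prolonging the impact.
                return ExperimentResult(hypothesis_held=False, aborted=True)
            time.sleep(check_interval_s)
        return ExperimentResult(hypothesis_held=True, aborted=False)
    finally:
        # The operator stays in control: the fault is removed on every exit path.
        rollback()
```

Putting `rollback()` in a `finally` clause is the design choice that matters here: whether the experiment passes, fails, or raises, the injected fault does not outlive it.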

How Chaotic is Chaos Engineering?

I always tell people that Chaos Engineering is a bit of a misnomer, because it’s actually as far from chaotic as you can get. When it is performed correctly, everything is under the operator’s control. That mentality is the reason our core product principles at Gremlin are safety, simplicity, and security. True chaos can be daunting and can cause harm. But controlled chaos fosters confidence in the resilience of systems and allows operators to sleep a little easier knowing they’ve tested their assumptions. After all, the laws of entropy guarantee the world will keep throwing randomness at you and your systems. You shouldn’t have to help with that.

How Do I Start?

One of the most common questions I receive is: “I want to get started with Chaos Engineering, where do I begin?” Unfortunately, there is no one-size-fits-all answer. You could start by validating your observability tooling, ensuring auto-scaling works, testing failover conditions, or one of a myriad of other use cases. The one thing that applies across all of these use cases is to start slow, but not be slow to start.

In other words, start by testing on just a few nodes rather than impacting your entire fleet. We refer to the impacted area (the number of systems affected) as the “blast radius,” and we highly recommend starting with a small blast radius and increasing it over time.
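One way to keep the blast radius small is to express it as a percentage of the fleet and then cap it with a hard limit. The sketch below assumes you already have a list of host names; `pick_targets`, `blast_radius_pct`, and `max_hosts` are hypothetical names chosen for illustration, not part of any particular tool.

```python
import math
import random
from typing import List, Optional, Sequence


def pick_targets(
    hosts: Sequence[str],
    blast_radius_pct: float,
    max_hosts: int = 1,          # start with a single node by default
    seed: Optional[int] = None,  # fix the seed to make a run reproducible
) -> List[str]:
    """Pick a small random subset of hosts to run an experiment against."""
    if not 0 < blast_radius_pct <= 100:
        raise ValueError("blast_radius_pct must be in (0, 100]")
    # Round up so a tiny percentage still targets at least one host,
    # then cap the count so a typo cannot take out the whole fleet.
    count = min(math.ceil(len(hosts) * blast_radius_pct / 100), max_hosts, len(hosts))
    rng = random.Random(seed)
    return rng.sample(list(hosts), count)
```

For example, `pick_targets(hosts, 5, max_hosts=10)` on a 100-host fleet selects five hosts. The explicit cap is deliberate: even if someone mistypes the percentage, the experiment cannot grow past the limit you agreed to.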

By starting small, you gain confidence in both the experiments you are running and your systems. Of course, your organization’s risk tolerance also factors into how large a blast radius it will use.

For instance, a large banking institution with millions of customers has a much lower risk tolerance than a tech startup with a couple hundred customers. A bank like that would want to run experiments programmatically and be very explicit in communicating to the rest of the organization which tests will run and when, to avoid any unplanned 02:00 or 03:00 disasters.

Ultimately, you want to reach the point where all of this is automated, a process we refer to as “continuous chaos.” Starting small with automation could be as simple as taking out a single node, then five nodes, then ten, and so on, until the process runs automatically at a level you are comfortable with.
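The one-node, five-node, ten-node progression can be sketched as a loop that widens the blast radius only while each stage passes. This assumes a hypothetical `run_stage` callback that attacks the given number of nodes and reports whether the system stayed healthy; the function and parameter names are illustrative, not a real scheduler’s API.

```python
from typing import Callable, Iterable


def ramp_blast_radius(
    stages: Iterable[int],
    run_stage: Callable[[int], bool],
) -> int:
    """Widen the blast radius stage by stage and stop at the first failure.

    `run_stage` is a hypothetical callback that attacks `node_count` nodes and
    returns True if the system stayed healthy. Returns the largest node count
    that passed, or 0 if the very first stage failed.
    """
    largest_passed = 0
    previous = 0
    for node_count in stages:
        # Only ever grow the blast radius; a shrinking schedule is likely a config mistake.
        if node_count <= previous:
            raise ValueError("stages must be strictly increasing positive node counts")
        previous = node_count
        if not run_stage(node_count):
            # Stop widening and hand the finding to a human before going further.
            break
        largest_passed = node_count
    return largest_passed
```

Called as `ramp_blast_radius([1, 5, 10, 20], run_stage)`, it returns the largest stage your systems survived, which is a reasonable candidate for the level at which to run continuous chaos until the weakness found at the next stage is fixed.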

“Ultimately you want to be able to handle any of this random chaos being thrown at you, because that's what the world is, it's entropy, it's degradation”

- 7:35 on the Dev Interrupted podcast

No Tolerance for Downtime

When I founded Gremlin, it was just my co-founder and me developing the first iteration of the product. The business looked very different then, and I jokingly referred to myself as the “Chief Chaos Engineer,” responsible for implementing code that was mostly used by enterprise companies. Many of these companies came to us because they had reliability requirements thrust upon them by the US government or top-down reliability standards, and they wanted a tool to help them shore up their systems.

As the company began to evolve, so did the customer base. These days it’s not just Fortune 500 companies that care about reliability; it’s everybody. Planned downtime is a relic of days gone by. It is no longer acceptable to build planned maintenance windows into development lifecycles, and customers have no patience for products they rely on being unavailable for any length of time. Companies recognize this dynamic, and it’s a hard one to miss.

Our appetite for technology seems to have grown exponentially while our ability to stomach downtime has drastically decreased. Customers expect your product to always be working, always running. If your product is down because of an outage, there are ten similar products waiting in the wings to take your customers’ money.

Making Lives Better

Visibility is high these days, and companies don’t need the publicity that comes with unforced errors, let alone with errors not of their own making. No one wants to be blown up on Twitter because their product isn’t working or because one of their downstream dependencies or their cloud provider had an unexpected outage.

By preparing for the worst, we can be at our best as an industry and ready when disaster eventually comes knocking. That way, when an unexpected outage or production failure does occur, customers will never even know it happened.

I often joke that we are the engineers’ engineers, because many of us know the feeling of being jolted from a dream at 03:00 by our pagers, groggily wiping our eyes, and whipping out the laptop to dig through a sea of monitoring dashboards and logs. It’s not fun, and it’s exactly why I founded Gremlin: there is a better way to approach operations than sitting back on our haunches waiting for the next outage. Chaos Engineering not only helps protect against the randomness of the world, but also teaches people how to build more reliable software. And if enough people build more reliable software, we build a more reliable internet.

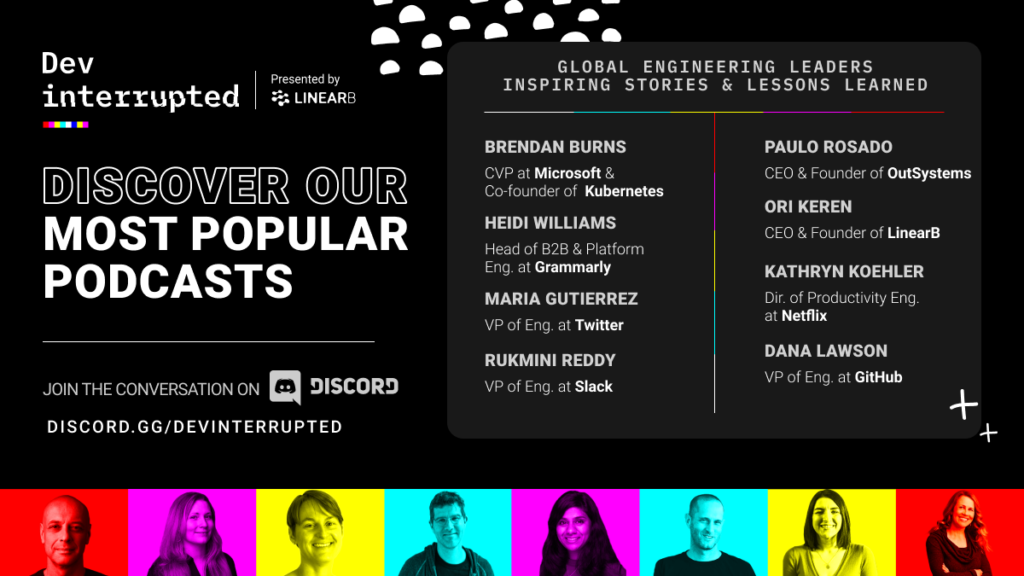

Starved for top-level software engineering content? Need some good tips on how to manage your team? This article is inspired by Dev Interrupted, the go-to podcast for engineering leaders.

Dev Interrupted features expert guests from around the world to explore strategy and day-to-day topics ranging from dev team metrics to accelerating delivery. With new guests every week, from Google to small startups, the Dev Interrupted podcast is a fresh look at the world of software engineering and engineering management.

Listen and subscribe on your streaming service of choice today.