Measuring the impact of AI workflows in software engineering has become a critical but often elusive goal. As AI-enabled tools spread across the software development lifecycle (SDLC), AI enablement leaders are under pressure to quantify their success. Are developers actually using the tools? Is quality improving? Can we show a measurable return on investment?

At LinearB, we’ve spent years helping engineering leaders gain clarity into their workflows. Today, we’re excited to introduce a feature that brings that same visibility to one of the most important AI use cases to emerge in recent years: AI code reviews.

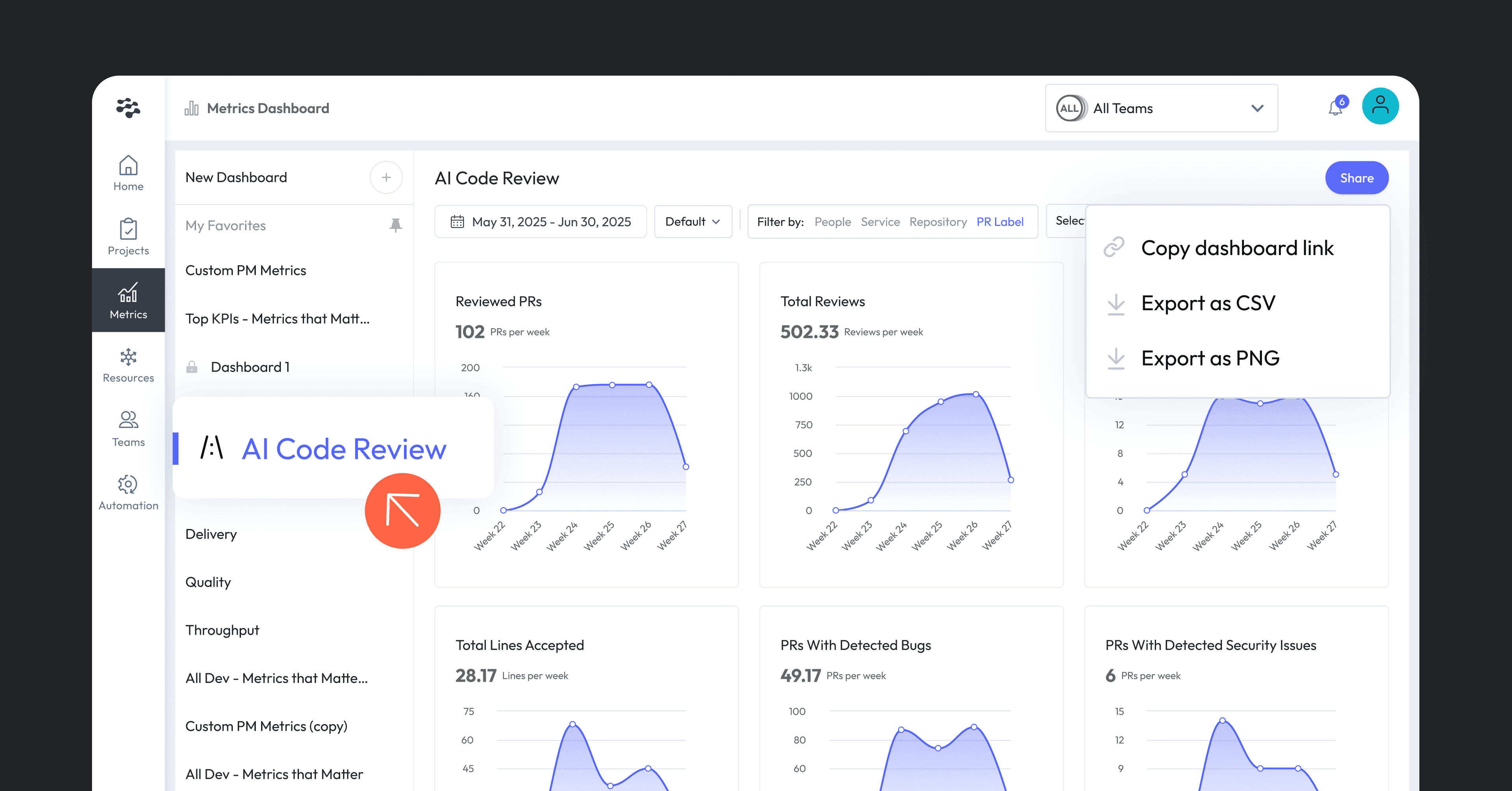

The new AI Code Review Metrics Dashboard gives teams a complete view into the performance, adoption, and impact of AI code reviews at scale.

Before we dive into the new dashboard, let’s take a quick look at the pull request automation that enterprise engineering teams are adopting en masse.

About AI code reviews

AI code review applies artificial intelligence and machine learning algorithms to automate and enhance code evaluation for quality, security, and best practices. Unlike manual reviews, AI systems analyze large volumes of code rapidly, identifying patterns and issues with precision and consistency. That means no more nitpicking over line breaks; AI’s got it.

For engineering teams, AI code review offers strategic advantages. Organizations using these tools report up to 40% shorter review cycles and fewer production defects, resulting in faster deployments and more reliable software. The technology has evolved quickly, with enterprise adoption moving from experimentation to full implementation.

Instead of wasting time on minor issues, human reviewers can focus on higher-level concerns like architecture, business logic, and edge cases. The result is a more consistent, streamlined review process that accelerates delivery while maintaining code quality.

You can dive deeper into how AI code reviews work in practice with LinearB.

Introducing the AI Code Review Metrics Dashboard

LinearB's new dashboard surfaces detailed metrics that track review activity, issue detection, and suggestion adoption. It gives engineering leaders their first real visibility into how AI code reviews are being used and what they’re delivering.

Below, we break down each key metric and the insight it unlocks:

Reviewed PRs

Tracks the total number of pull requests reviewed by the AI.

Why it matters:

It’s the baseline for measuring adoption and gives leaders an at-a-glance view of how widely the AI reviewer is being used across their organization.

Each bar in the graph represents one day, helping teams identify trends over time and correlate review activity with deployments, changes in workflow, or AI rollout efforts.

Total reviews

Shows the total number of AI-generated review comments across all PRs.

Why it matters:

Unlike the PR count, which measures breadth of coverage, this metric reflects depth — how actively the AI is engaging in each review.

It includes all review events, regardless of whether developers ultimately accept the suggestions. That makes it a valuable indicator of how thoroughly gitStream is analyzing changes and providing feedback.

A steady or growing number here can signal increased confidence in the AI’s capabilities and richer code coverage across teams. It also helps quantify AI’s contribution to the overall review process.

Total suggestions accepted

The number of AI-generated suggestions that were accepted and committed.

Why it matters:

This is your trust signal. When developers accept AI suggestions, it shows alignment between machine recommendations and human judgment.

The chart includes a hover feature to show daily counts, enabling teams to identify when and where developers are most likely to adopt AI suggestions.

Over time, a high acceptance rate demonstrates that the AI reviewer is not just being used — it’s providing suggestions that developers consider valid, helpful, and worth incorporating into their code.
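The dashboard reports counts, so an acceptance rate has to be derived from them. Here is a minimal sketch of one way to do that, assuming you have copied daily values for total reviews and total suggestions accepted out of the charts by hand. The `DailyCounts` structure, the field names, and the sample numbers are all hypothetical and not part of any LinearB API. Also note that not every review comment necessarily contains a committable suggestion, so treat the ratio as an approximation.

```python
from dataclasses import dataclass


@dataclass
class DailyCounts:
    """One day of hand-exported dashboard counts (hypothetical structure)."""
    day: str
    total_reviews: int          # AI review comments posted that day
    suggestions_accepted: int   # AI suggestions accepted and committed


def acceptance_rate(days: list[DailyCounts]) -> float:
    """Accepted suggestions divided by total reviews over the period."""
    reviews = sum(d.total_reviews for d in days)
    accepted = sum(d.suggestions_accepted for d in days)
    # Avoid dividing by zero when the AI posted nothing in the period.
    return accepted / reviews if reviews else 0.0


# Illustrative numbers only, not real dashboard data.
sample = [
    DailyCounts("2025-01-06", total_reviews=40, suggestions_accepted=10),
    DailyCounts("2025-01-07", total_reviews=60, suggestions_accepted=20),
]
print(f"Acceptance rate: {acceptance_rate(sample):.0%}")
```

Summing over the whole period before dividing, rather than averaging daily rates, keeps low-volume days from skewing the result.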

Total lines accepted

Total number of lines of code added or changed based on accepted AI suggestions.

Why it matters:

While accepted suggestions reflect the number of ideas adopted, this metric goes a level deeper: measuring the total number of code lines that were changed or added based on AI input.

This allows teams to understand the scope and scale of AI-generated contributions. Are they mostly small formatting fixes, or do they involve larger logic or structural changes?

By comparing this with overall code churn, leaders can see how much of the delivered code is influenced by AI, and better assess the technology’s role in driving developer output.
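The comparison with code churn comes down to a simple ratio. The sketch below assumes you already have two numbers for the same period: total lines accepted from the dashboard, and total lines changed from whatever source you use to measure churn. The function name and the sample values are hypothetical.

```python
def ai_share_of_churn(ai_lines_accepted: int, total_lines_changed: int) -> float:
    """Fraction of changed lines that came from accepted AI suggestions."""
    # With no churn in the period there is nothing to attribute to the AI.
    if total_lines_changed <= 0:
        return 0.0
    return ai_lines_accepted / total_lines_changed


# Illustrative numbers only: 150 AI-suggested lines out of 5,000 changed lines.
share = ai_share_of_churn(ai_lines_accepted=150, total_lines_changed=5000)
print(f"AI-influenced share of churn: {share:.1%}")
```

Both inputs need to cover the same repositories and date range, or the ratio will be misleading.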

PRs with detected bugs

Counts PRs where gitStream flagged potential logic or correctness issues.

Why it matters:

Catching these issues early can prevent production incidents. More specifically, this metric tracks bugs such as improper control flow, missing validations, or faulty error handling.

By monitoring this metric over time, teams can assess the AI's effectiveness in identifying potential defects early, and also target areas for improved testing or training.

PRs with detected security issues

Highlights PRs where the AI flagged potential vulnerabilities, such as missing validation, insecure communication, or broken access control.

Why it matters:

Security leaders can use this as a signal for how well secure coding practices are being followed across teams, and where enforcement may need to be strengthened.

If high volumes of security issues appear repeatedly in certain teams or repositories, it may be time to reevaluate onboarding, review workflows, or even training programs.
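One way to spot those repositories is to compare the share of reviewed PRs that had a security finding, rather than raw counts, so that busy repositories are not flagged just for being busy. The sketch below assumes per-repository counts collected by applying the dashboard's repository filter. The repository names, the 20% threshold, and the minimum sample size are hypothetical choices to adapt to your own baseline.

```python
def flag_repos(
    counts: dict[str, tuple[int, int]],
    threshold: float = 0.2,
    min_prs: int = 10,
) -> list[str]:
    """Return repos whose share of PRs with security issues meets the threshold.

    counts maps repo name -> (reviewed PRs, PRs with detected security issues).
    """
    flagged = []
    for repo, (reviewed, with_security_issues) in counts.items():
        # Skip repos with too few reviewed PRs to say anything meaningful.
        if reviewed < min_prs:
            continue
        if with_security_issues / reviewed >= threshold:
            flagged.append(repo)
    return sorted(flagged)


# Illustrative numbers only, not real dashboard data.
per_repo = {
    "payments-api": (50, 15),    # 30% of reviewed PRs had a security finding
    "web-app": (120, 6),         # 5%
    "internal-tools": (4, 3),    # too few PRs to judge
}
print(flag_repos(per_repo))
```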

PRs with detected performance issues

Shows PRs where the AI flagged inefficiencies such as excessive memory use or poor network handling.

Why it matters:

These findings help engineering leaders catch potential performance regressions before they impact users. Even better, they provide a data-driven basis for optimization initiatives.

Over time, tracking this metric can help teams identify common patterns that slow down code and inform performance best practices across the org.

PRs with readability issues

Captures complexity or clarity problems: unclear naming, poor structure, etc.

Why it matters:

Clean code is maintainable code. High readability issue counts can indicate the need for better documentation, clearer internal style guides, or targeted coaching.

Reducing this count not only enhances code maintainability but also accelerates onboarding for new engineers and reduces review cycle friction.

PRs with maintainability issues

Surfaces problems like duplication, tight coupling, or poor modularity.

Why it matters:

This is your technical debt early warning system. Addressing these concerns early reduces the risk of long-term technical debt and improves the team’s ability to move quickly on future changes.

For DevEx and AI enablement leaders, this metric provides a feedback loop into how AI is helping reinforce sound engineering practices across the codebase.

All charts in the dashboard can be filtered by repository, contributor, or date range, and shared easily with collaborators for review or reporting.

Why this matters for DevEx and AI enablement leaders

The AI Code Review Metrics Dashboard is more than just an analytics feature — it’s a strategic enabler. For the first time, engineering leaders can answer high-stakes questions about the tools they’re rolling out:

- Is AI being used in our development process?

- Is it making our code better?

- Where are we seeing real impact?

Quantifying these answers helps drive smarter decisions, whether it’s prioritizing AI adoption in specific teams, tuning prompts and models, or reporting results to executive stakeholders.

Beyond visibility, the dashboard also enables meaningful change. By analyzing these metrics, leaders can:

- Demonstrate ROI: Show concrete value from AI initiatives in the language of metrics and outcomes.

- Guide DevEx strategy: Use insights to refine tooling, support underperforming teams, and reinforce effective behaviors.

- Improve model quality: Identify where AI is succeeding — and where it’s falling short — to improve prompts or model tuning.

- Prioritize security and maintainability: Focus attention where risk is emerging and ensure engineering practices scale with quality.

Conclusion: From black box to baseline

AI code reviews are no longer just experimental — they’re becoming core to how modern engineering teams work. But until now, they’ve been a black box, with no way to track impact or adoption.

LinearB’s AI Code Review Metrics Dashboard changes that. It gives leaders the insight they need to measure what matters, make smarter decisions, and ensure that AI adoption in the SDLC is delivering on its promise.

This is how engineering organizations go from AI in theory to AI in practice — and from data blindness to data-driven improvement.