What is AI Code Review?

AI code review applies artificial intelligence and machine learning algorithms to automate and enhance code evaluation for quality, security, and best practices. Unlike manual reviews, AI systems analyze large volumes of code rapidly, identifying patterns and issues with precision and consistency. That means no more nitpicking over line breaks; AI’s got it.

For engineering teams, AI code review offers strategic advantages. Organizations using these tools report up to 40% shorter review cycles and fewer production defects, resulting in faster deployments and more reliable software. The technology has evolved quickly, with enterprise adoption moving from experimentation to full implementation.

AI code review integrates into modern engineering workflows as a complement to human expertise. By handling routine checks and pattern recognition, these tools allow experienced developers to concentrate on architecture and complex logic evaluations. When connected with version control, CI pipelines, and project management tools, AI code review significantly boosts engineering productivity.

Forward-thinking organizations now leverage these capabilities to maintain code quality at scale while accelerating delivery. Understanding how to effectively implement and measure AI code review impact has become crucial for competitive advantage in today's development environment.

From Manual to AI-Powered Code Reviews

Manual code reviews slow teams down, burn reviewers out, and miss things that machines catch in seconds. They depend heavily on reviewer availability, technical knowledge, and focus. This often leads to inconsistent results, bottlenecks in delivery pipelines, and increased cognitive load on team members who must context-switch between their own work and review tasks.

AI transforms this process by introducing speed, consistency, and scalability. Where human reviewers might spend hours examining complex changes, AI systems can analyze thousands of lines in seconds. The technology reduces common review problems such as fatigue-driven oversights and personal bias, while providing standardized feedback across all code submissions.

Key Features of AI Code Review Tools

Understanding the technical foundation of AI code review helps engineering teams evaluate solutions and implement them effectively. Modern systems combine multiple analysis approaches to deliver comprehensive results.

Static analysis examines code without execution, identifying syntactic errors, style violations, and potential bugs. AI enhances this process by learning from code patterns across vast repositories, enabling sophisticated detection of issues that might escape rule-based systems. These capabilities help catch problems early, saving valuable debugging time later.
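As a minimal sketch of what "examines code without execution" means in practice, the Python example below uses the standard-library `ast` module to parse source into a syntax tree and flag two classic bug patterns: mutable default arguments and bare `except:` clauses. Nothing is ever run. The rule names and the `check_source` helper are illustrative, not any tool's real identifiers; an AI-assisted analyzer would add learned patterns on top of explicit checks like these.

```python
import ast
from dataclasses import dataclass


@dataclass
class Finding:
    line: int
    rule: str
    message: str


class StaticChecker(ast.NodeVisitor):
    """Walks a parsed syntax tree; the checked code is never executed."""

    def __init__(self) -> None:
        self.findings: list[Finding] = []

    def visit_FunctionDef(self, node) -> None:
        # Mutable defaults ([], {}, set literals) are created once and shared across calls.
        for default in node.args.defaults + node.args.kw_defaults:
            if isinstance(default, (ast.List, ast.Dict, ast.Set)):
                self.findings.append(Finding(
                    default.lineno, "mutable-default",
                    f"'{node.name}' uses a mutable default argument"))
        self.generic_visit(node)  # keep walking into the function body

    visit_AsyncFunctionDef = visit_FunctionDef  # same rule for async functions

    def visit_ExceptHandler(self, node: ast.ExceptHandler) -> None:
        # A bare 'except:' also swallows KeyboardInterrupt and SystemExit.
        if node.type is None:
            self.findings.append(Finding(
                node.lineno, "bare-except", "bare 'except:' hides unexpected errors"))
        self.generic_visit(node)


def check_source(source: str) -> list[Finding]:
    checker = StaticChecker()
    checker.visit(ast.parse(source))
    return checker.findings


sample = """
def add_item(item, items=[]):
    try:
        items.append(item)
    except:
        pass
    return items
"""

for f in check_source(sample):
    print(f.line, f.rule, f.message)
```

Running it on `sample` reports the mutable default on line 2 and the bare `except:` on line 5.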

Dynamic analysis observes application behavior during execution, identifying performance bottlenecks, memory leaks, and runtime errors by analyzing program flow. This approach reveals problems that are difficult to detect through static analysis alone, such as race conditions or edge-case bugs that only appear under specific runtime conditions.

Rule-based components provide the foundation for enforcing coding standards and best practices, while AI builds upon these with learning capabilities that adapt to project-specific patterns. This creates a system combining the reliability of explicit rules with the flexibility of machine learning.

Natural Language Processing (NLP) and Large Language Models (LLMs) form the most advanced elements of AI code review. These models process programming languages much as they process human languages, recognizing semantic meaning beyond syntax. They can suggest improvements, generate explanatory comments, and infer the intent behind implementation choices.

The integration of these technologies creates powerful review systems that address code quality across multiple dimensions, providing comprehensive analysis that no single approach could achieve alone.

Making the Business Case for AI Code Review

Implementing AI code review delivers tangible business benefits beyond technical improvements. Engineering leaders must understand these advantages to justify investment and measure success.

Teams implementing AI code review can expect productivity improvements, most visibly in shorter review cycles. That speed translates directly into revenue impact through quicker feature delivery, particularly for organizations where deployment velocity affects market competitiveness.

Security vulnerabilities caught early cost significantly less to fix. AI review tools excel at identifying common security issues including injection flaws and sensitive data exposure that human reviewers might miss due to fatigue or knowledge gaps. By catching problems before deployment, teams dramatically reduce security risk exposure without adding specialized personnel.
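To illustrate how sensitive data exposure can be caught before merge, here is a deliberately simple sketch that scans only the added lines of a unified diff for hardcoded credentials. The two regular expressions and the `scan_diff` helper are assumptions for illustration; the `AKIA` prefix reflects the well-known format of AWS access key IDs, while the credential pattern is a crude heuristic that real scanners refine considerably.

```python
import re

# Illustrative patterns only; production secret scanners ship much larger, tuned rule sets.
SECRET_PATTERNS = {
    "aws-access-key-id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "hardcoded-credential": re.compile(
        r"""(?i)\b(password|passwd|secret|api_key|token)\s*=\s*["'][^"']{4,}["']"""
    ),
}


def scan_diff(diff: str) -> list[tuple[int, str]]:
    """Return (diff line number, rule) for every added line matching a pattern."""
    hits = []
    for lineno, line in enumerate(diff.splitlines(), start=1):
        # Only lines the change adds matter; skip removals, context, and the '+++' header.
        if not line.startswith("+") or line.startswith("+++"):
            continue
        for rule, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                hits.append((lineno, rule))
    return hits


diff = """\
--- a/settings.py
+++ b/settings.py
@@ -1,2 +1,3 @@
 DEBUG = False
-API_KEY = os.environ["API_KEY"]
+api_key = "sk-live-1234abcd"
+AWS_KEY = "AKIAABCDEFGHIJKLMNOP"
"""

for lineno, rule in scan_diff(diff):
    print(f"line {lineno}: possible {rule}")
```

Scanning only `+` lines keeps feedback focused on what the pull request introduces instead of re-reporting existing debt.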

Developer satisfaction directly impacts recruitment, retention, and productivity. AI code review enhances this by providing immediate, constructive feedback rather than delayed human reviews. New team members benefit particularly through consistent guidance on project-specific patterns and practices, accelerating their onboarding and reducing dependency on senior developers for routine reviews.

Common Challenges and Limitations

AI excels at pattern recognition but lacks the contextual understanding and project history knowledge of experienced developers. Finding the right balance between automation and human judgment remains essential. Teams must establish clear guidelines for when human review takes precedence and create escalation paths for ambiguous issues.

AI systems trained on generic code repositories may struggle with organization-specific practices, legacy systems, or unique architectural considerations. This limitation requires careful tool configuration and supplementation with human reviews for areas where contextual understanding proves critical.

Managing Expectations During AI Adoption

One of the most significant challenges in AI code review implementation is effectively handling false positives (flagging non-issues) and false negatives (missing actual problems). Without proper management, these errors can erode team trust and undermine adoption efforts.

Pro Tip: Start with AI review on routine, low-risk code changes. Build trust before rolling it out to mission-critical systems.

Advanced workflow orchestration tools enable teams to systematically track and label detected issues throughout their entire lifecycle—from initial detection to production deployment. By connecting these labeled results with actual outcomes, teams create valuable feedback loops that continuously refine detection accuracy.

The most sophisticated implementations use dedicated orchestration platforms to categorize findings by confidence level, automatically filter known false positives, and maintain a centralized knowledge base of validated patterns. This approach transforms what could be an irritation into a powerful learning mechanism that gets smarter with every code change.
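A minimal sketch of confidence-based triage with a known-false-positive filter might look like the following. It assumes the review tool reports a 0 to 1 `confidence` per finding; the 0.8 and 0.5 thresholds, the bucket names, and the `fingerprint` scheme (a hash of rule, path, and whitespace-normalized snippet) are hypothetical choices, not a specific platform's behavior.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    path: str
    rule: str
    snippet: str
    confidence: float  # assumed: a 0-1 score reported by the review tool


def fingerprint(f: Finding) -> str:
    # Hash rule + path + whitespace-normalized snippet, so the key survives line shifts.
    key = f"{f.rule}|{f.path}|{' '.join(f.snippet.split())}"
    return hashlib.sha256(key.encode()).hexdigest()[:16]


def triage(findings, known_false_positives: set, high: float = 0.8, low: float = 0.5) -> dict:
    buckets = {"block": [], "comment": [], "log_only": [], "suppressed": []}
    for f in findings:
        if fingerprint(f) in known_false_positives:
            buckets["suppressed"].append(f)  # previously validated as noise
        elif f.confidence >= high:
            buckets["block"].append(f)       # surface prominently on the pull request
        elif f.confidence >= low:
            buckets["comment"].append(f)     # non-blocking suggestion
        else:
            buckets["log_only"].append(f)    # kept only for later accuracy analysis
    return buckets


findings = [
    Finding("api/db.py", "sql-injection", 'cursor.execute(f"SELECT * FROM t WHERE id={uid}")', 0.93),
    Finding("api/util.py", "unused-import", "import os", 0.62),
    Finding("tests/test_db.py", "hardcoded-credential", 'password = "test-only"', 0.88),
]
# Knowledge base: a reviewer earlier marked the test-fixture password as a false positive.
known_fps = {fingerprint(findings[2])}
result = triage(findings, known_fps)
print({bucket: len(items) for bucket, items in result.items()})
```

Hashing the snippet instead of the line number lets a suppression survive unrelated edits elsewhere in the file.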

Rather than letting false positives simply disappear from view after code merges, leading organizations actively track them through deployment and runtime monitoring. This end-to-end visibility allows correlation between AI predictions and actual production behavior, creating unprecedented insight into review effectiveness while dramatically reducing noise over time.
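Once findings are labeled against real outcomes, accuracy can be computed per period to show whether noise is actually falling. The sketch below assumes each outcome is labeled `tp` (flagged, real), `fp` (flagged, not real), or `fn` (missed, surfaced later in review, QA, or production); the sample labels are made up purely to show the arithmetic.

```python
from collections import defaultdict


def accuracy_by_period(outcomes):
    """outcomes: iterable of (period, label) pairs, label in {'tp', 'fp', 'fn'}.

    tp: AI flagged a real issue; fp: AI flagged a non-issue;
    fn: AI stayed silent but the issue surfaced later (review, QA, or production).
    """
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "fn": 0})
    for period, label in outcomes:
        counts[period][label] += 1  # an unknown label raises KeyError on purpose
    report = {}
    for period in sorted(counts):
        c = counts[period]
        flagged = c["tp"] + c["fp"]  # everything the AI raised
        actual = c["tp"] + c["fn"]   # every real issue found by any means
        report[period] = {
            "precision": c["tp"] / flagged if flagged else None,  # share of flags that were real
            "recall": c["tp"] / actual if actual else None,       # share of real issues flagged
        }
    return report


# Made-up labels for two months, only to show the calculation.
outcomes = (
    [("2024-01", "tp")] * 6 + [("2024-01", "fp")] * 4 + [("2024-01", "fn")] * 2
    + [("2024-02", "tp")] * 8 + [("2024-02", "fp")] * 2 + [("2024-02", "fn")] * 2
)
for period, stats in accuracy_by_period(outcomes).items():
    print(period, stats)
```

Precision tracks noise (false positives) and recall tracks misses (false negatives); watching both prevents tuning away noise at the cost of letting real problems through.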

Developer skepticism toward automated recommendations can undermine adoption. Overcoming this resistance requires a transparent explanation of how AI systems make judgments, what training data they use, and clear examples of their effectiveness. Teams should introduce AI review gradually, allowing members to validate results and build confidence in the system.

Engineers trust what they understand. Transparency beats “black box” magic every time.

How to Compare AI Code Review Tools

Selecting the right AI code review solution requires evaluating several factors based on organizational needs.

When assessing tools, consider integration capabilities with existing development environments, supported programming languages, customization options, and learning capabilities. The ideal solution should fit seamlessly into current workflows while providing clear improvement metrics.

Look beyond feature lists to evaluate real-world performance. Request proof-of-concept implementations with your actual codebase, solicit feedback from engineers who will use the system daily, and prioritize solutions that demonstrate measurable impact on your specific pain points.
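One way to keep the comparison grounded is a weighted scoring matrix over the criteria above, with proof-of-concept results scored on your own codebase. The weights, 1 to 5 ratings, and tool names below are placeholders, not assessments of real products.

```python
WEIGHTS = {  # hypothetical weights; tune to your own priorities (they sum to 1.0)
    "integration": 0.30,
    "language_support": 0.20,
    "customization": 0.15,
    "learning": 0.15,
    "poc_results": 0.20,  # measured on your own codebase during the trial
}


def score(ratings: dict) -> float:
    """Weighted average of 1-5 ratings; a missing criterion raises KeyError."""
    return sum(WEIGHTS[c] * ratings[c] for c in WEIGHTS)


candidates = {
    "Tool A": {"integration": 5, "language_support": 3, "customization": 4, "learning": 3, "poc_results": 4},
    "Tool B": {"integration": 3, "language_support": 5, "customization": 3, "learning": 4, "poc_results": 3},
}
ranked = sorted(candidates, key=lambda name: score(candidates[name]), reverse=True)
for name in ranked:
    print(f"{name}: {score(candidates[name]):.2f}")
```

Agreeing on the weights before the trial starts keeps the final choice from drifting toward whichever demo impressed most.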

Integration Architecture and Platform Ecosystem

The most effective implementations connect AI code review with broader development processes through integration with version control systems, CI/CD pipelines, and issue tracking platforms. This ensures findings translate into actionable items within normal workflows, maximizing value.

Leading organizations are moving toward integrated engineering platforms that provide unified visibility across the entire software delivery lifecycle. These platforms connect review insights with deployment metrics, engineering analytics, and business KPIs, showing how code quality affects organizational outcomes.

Cost Structure and ROI Considerations

Cost structures vary widely, from open-source tools with limited features to enterprise solutions with comprehensive capabilities. Consider per-seat licensing versus repository-based pricing, and evaluate how costs will scale as your organization grows. Some platforms offer tiered pricing that allows starting with basic features before committing to full implementation.

When calculating ROI, look beyond license costs to include implementation effort, ongoing maintenance, and most importantly, the business value of accelerated delivery and improved quality. The most comprehensive workflow platforms may carry premium pricing but often deliver superior returns through automation, policy enforcement, and outcome measurement capabilities that basic tools simply cannot match.
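A back-of-the-envelope annual ROI calculation might look like this sketch. Every input is a placeholder to be replaced with your own baseline data, and the model counts only reviewer hours saved; the value of fewer defects and faster delivery is left out because it is organization-specific, so the result is likely a conservative estimate.

```python
from dataclasses import dataclass


@dataclass
class RoiInputs:
    # Every value here is a placeholder; substitute your own baseline measurements.
    prs_per_month: int
    review_hours_saved_per_pr: float
    loaded_hourly_cost: float
    seats: int
    license_per_seat_month: float
    implementation_hours: float     # one-time setup and configuration
    maintenance_hours_month: float  # ongoing tuning and false-positive triage


def annual_roi(x: RoiInputs) -> dict:
    # Benefit: reviewer time saved only (quality and delivery value not modeled).
    benefit = 12 * x.prs_per_month * x.review_hours_saved_per_pr * x.loaded_hourly_cost
    license_cost = 12 * x.seats * x.license_per_seat_month
    people_cost = (x.implementation_hours + 12 * x.maintenance_hours_month) * x.loaded_hourly_cost
    cost = license_cost + people_cost
    return {"benefit": benefit, "cost": cost, "roi": (benefit - cost) / cost}


example = RoiInputs(prs_per_month=400, review_hours_saved_per_pr=0.25, loaded_hourly_cost=100,
                    seats=50, license_per_seat_month=30, implementation_hours=80,
                    maintenance_hours_month=10)
print(annual_roi(example))
```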

How to Implement AI Code Reviews

Successful implementation follows a structured approach focused on incremental improvement and clear metrics.

- Begin by documenting existing review workflows, identifying bottlenecks, and establishing baseline metrics. Track cycle time, review depth, change failure rate, and reviewer satisfaction to measure current performance against future improvements. This assessment reveals where AI review can deliver the greatest initial impact.

- Choose tools that address your specific pain points while integrating with existing systems. Consider programming language support, customization options, and learning curve for your team. Prioritize solutions that offer transparency into how they generate recommendations and align with your development culture rather than selecting based solely on feature lists.

- Minimize disruption by integrating AI code review into current processes rather than requiring workflow changes. Start with low-risk, high-value areas to demonstrate quick wins, integrate seamlessly into pull request processes, and configure smart notification systems to highlight critical issues. The most successful implementations feel like gaining a helpful collaborator rather than adding an enforcement mechanism.

- Introduce AI review gradually, starting with non-critical code areas to build confidence. Provide clear documentation on how to interpret and respond to AI recommendations, and establish regular feedback sessions to address concerns. Treating AI review as a developer productivity tool rather than a compliance requirement accelerates adoption as engineers experience how it makes their work easier.

- Establish clear guidelines for when AI recommendations can be automatically accepted versus when human review remains mandatory. Define escalation paths for disagreements with AI suggestions and create feedback mechanisms for improving system accuracy over time. This governance framework ensures consistent application while continuously enhancing the system's value.
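The governance guidelines above can be encoded as an explicit routing policy that runs on every pull request. The sketch below is one hypothetical version: the path patterns, auto-accept categories, 50-line limit, and the three outcomes (`ai-only`, `human-required`, `escalate`) are assumptions to adapt, not a standard.

```python
from fnmatch import fnmatch

# Hypothetical policy values; encode your own team's guidelines here.
CRITICAL_PATHS = ["src/auth/*", "src/payments/*", "infra/*", "*.sql"]
AUTO_ACCEPT_CATEGORIES = {"style", "formatting", "docs"}
MAX_AUTO_LINES = 50


def review_route(changed_files, lines_changed, finding_categories, disputed=False):
    """Return 'escalate', 'human-required', or 'ai-only' for a pull request."""
    if disputed:
        # The author disagrees with an AI suggestion: a named code owner decides.
        return "escalate"
    # fnmatch's '*' also matches '/', so 'src/auth/*' covers nested files too.
    touches_critical = any(fnmatch(path, pattern)
                           for path in changed_files for pattern in CRITICAL_PATHS)
    if touches_critical or "security" in finding_categories:
        return "human-required"
    if lines_changed <= MAX_AUTO_LINES and set(finding_categories) <= AUTO_ACCEPT_CATEGORIES:
        return "ai-only"  # small change whose findings are all cosmetic
    return "human-required"


print(review_route(["src/api/views.py"], 12, ["style"]))
print(review_route(["src/payments/refund.py"], 8, []))
```

Keeping the policy in code, and under review itself, makes the rules visible to every developer, which supports the transparency that adoption depends on.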

Measuring the Impact of AI Code Review

Quantifying the benefits of AI code review requires tracking specific metrics that demonstrate business and technical value. A comprehensive measurement approach combines process efficiency, code quality, and business impact indicators.

Metric Improvements: Review Time and Pickup Time

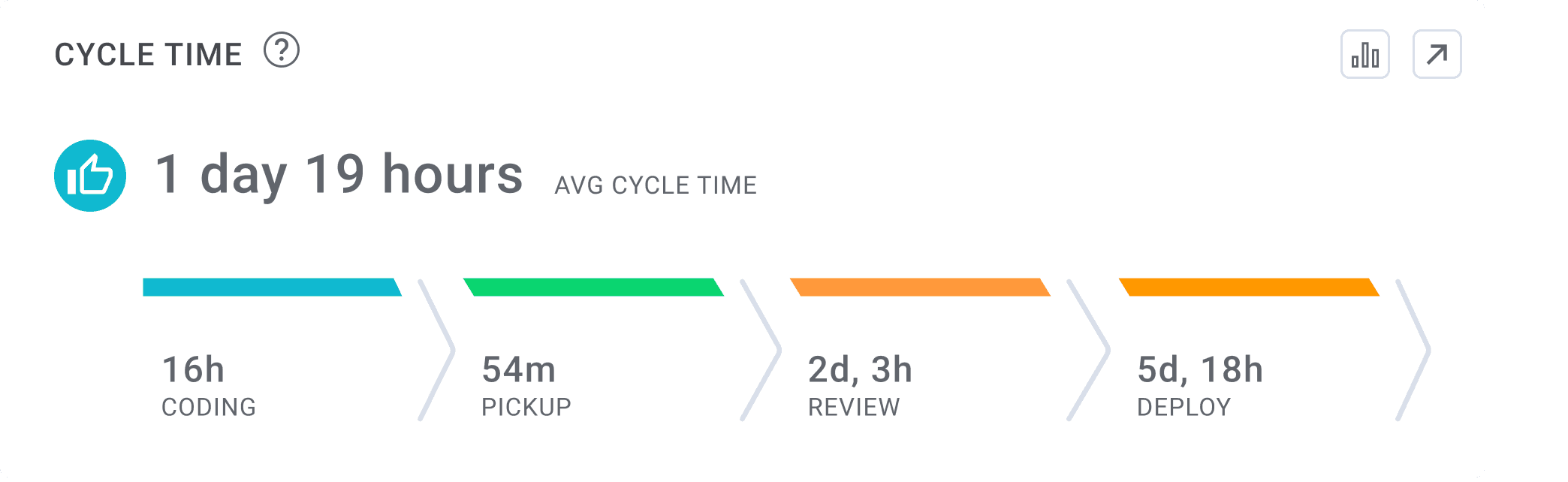

The most immediate impact of AI code review appears in review efficiency metrics. Measure how quickly reviews start after submission (pickup time) and how long they take to complete (review time).
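As a minimal sketch, both metrics can be derived from pull request timestamps. The definitions here are assumptions (pickup time runs from opening to first review; review time runs from first review to merge), the timestamp format and field names are hypothetical, and the three pull requests are made-up examples.

```python
from datetime import datetime
from statistics import median

FMT = "%Y-%m-%dT%H:%M"  # assumed timestamp format


def hours_between(start: str, end: str) -> float:
    delta = datetime.strptime(end, FMT) - datetime.strptime(start, FMT)
    return delta.total_seconds() / 3600


def review_metrics(prs) -> dict:
    """Median pickup and review time in hours.

    Assumed definitions: pickup = opened -> first review; review = first review -> merged.
    """
    pickup = [hours_between(p["opened"], p["first_review"]) for p in prs]
    review = [hours_between(p["first_review"], p["merged"]) for p in prs]
    return {"pickup_h": median(pickup), "review_h": median(review)}


prs = [
    {"opened": "2024-03-04T09:00", "first_review": "2024-03-04T13:00", "merged": "2024-03-05T10:00"},
    {"opened": "2024-03-04T15:00", "first_review": "2024-03-05T09:00", "merged": "2024-03-05T12:00"},
    {"opened": "2024-03-06T10:00", "first_review": "2024-03-06T10:30", "merged": "2024-03-06T16:30"},
]
print(review_metrics(prs))
```

Medians are used because review times tend to be skewed by a few long-running pull requests, which would inflate an average.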

Effective AI implementation typically reduces review initiation time because automated checks begin immediately upon submission. More importantly, total review duration often decreases by 30-50% as human reviewers can focus on strategic aspects rather than routine checks. These time savings compound across your organization, creating substantial capacity for higher-value work.

Leading organizations track these metrics by repository, team, and code category to identify optimization opportunities. Look for platforms that automatically capture these metrics and provide trend analysis rather than requiring manual tracking, which becomes unsustainable at scale.

Metric Improvements: Cycle Time and Review Depth

End-to-end cycle time—from first commit to production deployment—provides the most comprehensive view of AI review's impact on your delivery pipeline. This metric encompasses coding time, review time, and deployment processes, revealing how quality improvements affect overall delivery speed.

AI review typically reduces overall cycle time while maintaining or improving review depth. The most sophisticated implementation approaches measure review depth through coverage analysis, examining what percentage of code changes receive substantive feedback rather than simply counting comments.
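The sketch below shows one way to compute both measures. Cycle time is simply first commit to deployment; review depth is approximated as the share of changed files that received at least one substantive comment. The `is_substantive` heuristic (at least five words, excluding rubber-stamp replies like "LGTM") and the sample data are hypothetical.

```python
from datetime import datetime

TRIVIAL = {"lgtm", "+1", "nit", "looks good", "ship it"}


def is_substantive(comment: str, min_words: int = 5) -> bool:
    # Hypothetical heuristic: ignore rubber-stamp replies and very short remarks.
    text = comment.strip().lower().rstrip("!.")
    return text not in TRIVIAL and len(text.split()) >= min_words


def review_depth(changed_files, comments) -> float:
    """Share of changed files with at least one substantive (path, body) comment."""
    if not changed_files:
        return 0.0
    covered = {path for path, body in comments if is_substantive(body)}
    return len(covered & set(changed_files)) / len(set(changed_files))


def cycle_time_hours(first_commit: datetime, deployed: datetime) -> float:
    return (deployed - first_commit).total_seconds() / 3600


changed = ["a.py", "b.py", "c.py", "d.py"]
comments = [
    ("a.py", "LGTM!"),
    ("b.py", "This loop re-reads the file on every iteration; hoist the read."),
    ("b.py", "nit"),
    ("c.py", "Consider handling the empty-list case before indexing."),
]
print(review_depth(changed, comments))
print(cycle_time_hours(datetime(2024, 3, 4, 9), datetime(2024, 3, 6, 15)))
```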

Look for platforms that automatically capture these metrics within your normal workflow rather than requiring separate tracking systems. The most effective solutions connect cycle time improvements directly to business outcomes like feature delivery predictability and time-to-market advantages.

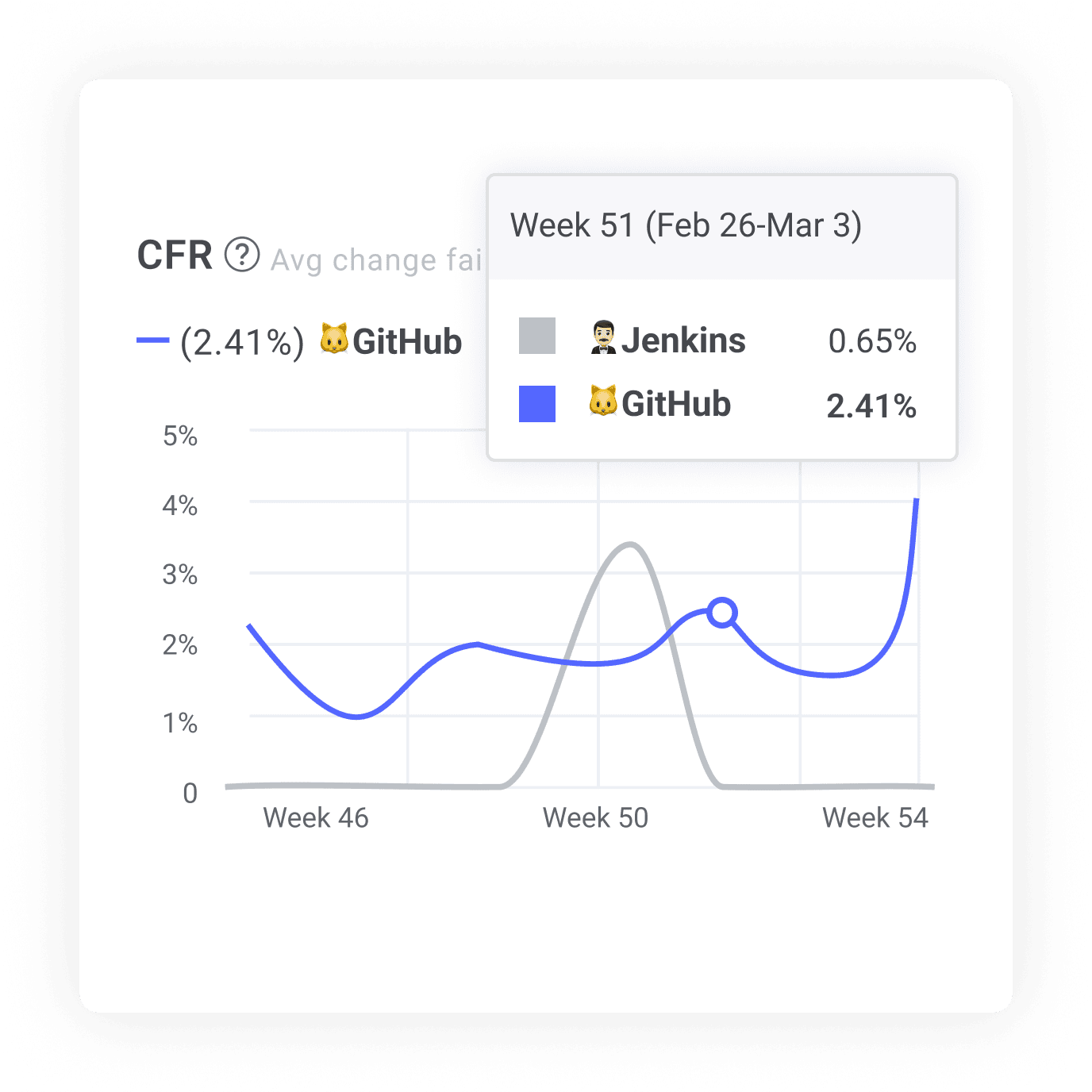

Metric Improvements: Change Failure Rate (CFR) and Rework Rate

The true measure of code review effectiveness lies in production quality. Monitor change failure rate (the percentage of deployments requiring remediation) and rework rate (the frequency of changes requiring revision after review).
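Both rates reduce to simple ratios once the underlying events are recorded. This sketch follows the definitions above and assumes each deployment carries a `remediated` flag and that rework shows up as commits pushed after approval; the field names and sample data are hypothetical.

```python
from datetime import datetime


def change_failure_rate(deployments):
    """Share of deployments that needed remediation (rollback, hotfix, or patch)."""
    if not deployments:
        return 0.0
    return sum(1 for d in deployments if d["remediated"]) / len(deployments)


def rework_rate(prs):
    """Share of PRs that received new commits after approval, i.e. revised after review."""
    if not prs:
        return 0.0
    reworked = sum(1 for p in prs if any(t > p["approved_at"] for t in p["commit_times"]))
    return reworked / len(prs)


# Made-up inputs: 20 deployments, 3 of which needed a fix.
deployments = [{"remediated": i < 3} for i in range(20)]
prs = [
    {"approved_at": datetime(2024, 3, 4, 10),
     "commit_times": [datetime(2024, 3, 4, 9), datetime(2024, 3, 4, 11)]},
    {"approved_at": datetime(2024, 3, 5, 12),
     "commit_times": [datetime(2024, 3, 5, 8)]},
]
print(f"CFR: {change_failure_rate(deployments):.0%}, rework: {rework_rate(prs):.0%}")
```

Other teams define rework differently (for example, by how soon recently written code is rewritten), so state the definition alongside the number.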

Organizations implementing AI review typically see fewer production incidents and fewer post-review changes. These improvements directly affect business continuity, customer satisfaction, and engineering capacity by reducing emergency responses and rework.

The most valuable measurement approaches track these metrics over time, correlating them with code complexity, developer experience, and AI review coverage. This analysis reveals which types of issues AI catches most effectively and where human review remains essential, enabling continuous optimization of your review strategy. Nobody wants to track CFR by hand, so pick a tool that shows it to you.

Learning Curve Acceleration

An often-overlooked benefit of AI code review is its impact on skill development. Measure how quickly new team members reach productivity parity with experienced developers and how effectively knowledge spreads across your organization.

AI-assisted teams often see shorter onboarding times (measured as time to first commit), as consistent feedback accelerates learning of project-specific patterns and practices. The most advanced platforms provide personalized insights to developers, highlighting growth opportunities and tracking improvement over time.

Look for solutions that quantify these learning effects through metrics like error rate reduction by developer, knowledge sharing patterns, and code quality trends across experience levels. These insights help you build more resilient teams and reduce dependency on individual experts.

Maximizing AI Code Review Benefits with Best Practices

Optimize your implementation through proven approaches from successful engineering organizations. Document what constitutes acceptable code, which issues must be addressed versus suggested improvements, and who makes final decisions when AI and human reviewers disagree. These clear standards prevent inconsistent application of recommendations.

Configure review tools to focus on issues relevant to your codebase and suppress known false positives. Determine which code areas benefit most from AI review versus human attention: critical security components and complex business logic warrant additional human scrutiny, while routine changes can rely more heavily on automated assessment.

Review system performance regularly, adjusting rule sensitivity and prioritization based on real-world effectiveness. Create structured processes for developers to provide feedback on AI suggestions, helping train systems to better understand your specific codebase patterns and reduce false positives over time. These continuous improvement practices ensure your implementation evolves alongside your organization's needs.
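A simple version of this feedback loop aggregates developers' accept or dismiss reactions per rule and flags rules that are mostly dismissed. The minimum sample size, the 30% acceptance threshold, and the rule IDs below are hypothetical starting points.

```python
from collections import defaultdict


def tune_rules(feedback, min_samples=20, demote_below=0.3):
    """feedback: iterable of (rule_id, accepted) pairs from developers' reactions.

    Returns a suggested action per rule; the thresholds are hypothetical starting points.
    """
    stats = defaultdict(lambda: [0, 0])  # rule_id -> [accepted, total]
    for rule, accepted in feedback:
        stats[rule][0] += int(accepted)
        stats[rule][1] += 1
    actions = {}
    for rule, (acc, total) in sorted(stats.items()):
        if total < min_samples:
            actions[rule] = "insufficient-data"  # too few reactions to judge yet
        elif acc / total < demote_below:
            actions[rule] = "demote"  # mostly dismissed: probably noise for this codebase
        else:
            actions[rule] = "keep"
    return actions


feedback = (
    [("unused-import", True)] * 18 + [("unused-import", False)] * 2
    + [("line-length", False)] * 25 + [("line-length", True)] * 5
    + [("sql-injection", True)] * 4
)
print(tune_rules(feedback))
```

The sketch demotes rather than disables noisy rules, so findings stay visible at lower priority; security-related rules may deserve an exemption from automatic demotion entirely.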

The Future of Engineering Productivity

The evolution of AI code review continues to accelerate, with emerging systems now generating test cases, suggesting refactoring approaches, and producing documentation based on code analysis. Future tools will likely offer increasingly sophisticated code generation capabilities alongside review functions, becoming part of comprehensive development platforms rather than standalone tools.

As AI handles more routine review tasks, engineering teams are shifting focus toward higher-value activities like architecture design and business logic implementation. This transition may lead to flatter team structures with less emphasis on traditional senior-junior review hierarchies. Organizations gaining the most advantage position AI code review as part of broader engineering excellence initiatives, connecting code quality improvements directly to business outcomes and customer value delivery.

You’ve Survived!

Wow, what a journey! You scaled the cliffs of confusion, crossed the swamp of skepticism, and battled the bugs. Now it’s time to build your AI code review basecamp: start small, move smart, and conquer the pipeline. AI code review represents a transformative opportunity for engineering teams to improve quality, accelerate delivery, and enhance developer experience simultaneously. The most significant advantages include faster delivery cycles, higher code quality, reduced security vulnerabilities, and improved developer satisfaction.

Begin with a pilot project in a single team or repository, establish baseline metrics before implementation, and measure results systematically. Start with simpler use cases like style checking and common bug detection before progressing to more sophisticated analysis. Explore vendor documentation, community forums, and implementation case studies to learn from others' experiences.

The competitive advantage of AI code review grows with early adoption and organizational learning. Begin your implementation journey now to realize these benefits sooner and position your organization at the forefront of modern development practices.

Get Started with AI Code Review

Implement AI-powered code review today and experience immediate improvements in engineering velocity, code quality, and team productivity. With LinearB’s AI Code Reviews, you get a complete workflow automation platform that connects code quality to measurable business outcomes.

Stop wasting developer time on manual reviews and start focusing on what matters: building great software that delivers value.

Set up a meeting with one of our workflow automation experts to learn more about using AI workflows to improve your code review process.

![Automated PR review suggestion from LinearB AI via gitStream, highlighting a bug in a Python file. The issue involves unsafe type coercion with astype(str), applied inconsistently across different DataFrames. The suggested fix ensures proper column reference and consistent string conversion. The review includes a code snippet with a corrected assignment for df_issues_summaries["issue_provider_id"]. Enhancing code quality and reducing errors in data processing workflows](https://assets.linearb.io/image/upload/v1740677729/git_Stream_AI_code_review_84a71b56e5.png)