In just over a year, the Model Context Protocol (MCP) has gone from an intriguing experiment to a critical piece of the AI infrastructure puzzle. But for large, security-focused organizations, adopting such a fast-moving technology can be daunting. How do you embrace innovation while maintaining enterprise-grade stability and security?

Brendan Irvine-Broque, Director of Product at Cloudflare, offers a clear blueprint based on their successful MCP implementation. The key to their approach is a deeply ingrained philosophy of internal dogfooding. As he explains, "When we build products, we're building for our own internal customer zero first." This principle ensures that by the time a product reaches an external customer, it has already been battle-tested by Cloudflare's own engineering teams.

This article explores Cloudflare's journey with MCP, from their "customer zero" adoption strategy and high-value use cases like observability, to the common challenges they've navigated and their vision for an agent-to-agent future.

The adoption journey: a philosophy of internal dogfooding

Cloudflare's journey with MCP began about a year ago, with the team's "ears to the ground on what developers wanna build." Initial experiments with the local-only protocol from Anthropic quickly accelerated in early 2025 as developer enthusiasm exploded. The technology was a natural fit: it offered an elegant answer to the service communication challenges their teams were already considering for a new era of AI clients that are becoming, as Irvine-Broque puts it, "the new web browsers."

The core of their strategy was their "customer zero" approach. Instead of just building MCP for external customers, they built it for themselves first, creating a powerful, real-time feedback loop. This internal-first philosophy allowed them to rapidly test, refine, and harden the technology—especially around security and the complexities of remote authentication—before it ever reached an external customer. "I wouldn't feel good as a product leader," Irvine-Broque notes, "if I'm telling a customer... they should go and adopt something if we're not really using it ourselves."

This tight integration between the platform builders and their internal users proved invaluable. For example, engineering teams began using their own MCP servers to debug Cloudflare Workers and investigate observability issues. When they encountered challenges or identified opportunities, they could immediately collaborate with the teams building those platform features. This ability for engineers to go "back and forth really, really quickly in fast cycles" is what allows Cloudflare to build products that are not only powerful but also practical and reliable.

A killer use case: enterprise observability

Observability has emerged as one of the most compelling and high-value starting points for MCP adoption. Engineering organizations often use four or five different tools for logs, traces, and metrics, creating a significant pain point during incident response. Engineers are forced to "pull up six or seven different tabs in Chrome and like, correlate different things just to figure out, okay, it's this."

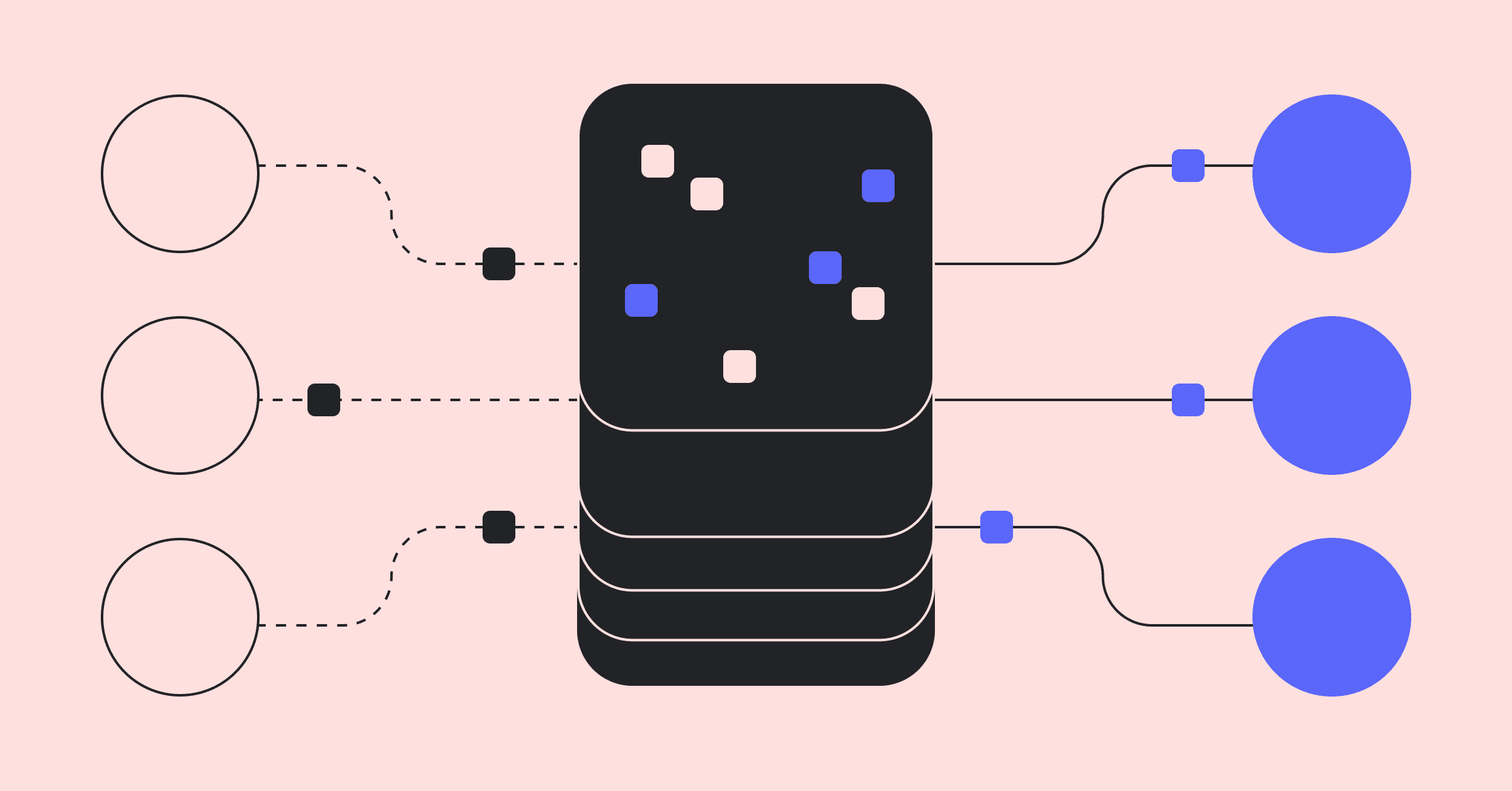

MCP servers address this problem by giving an AI agent a consistent way to reach each system, so the agent itself can act as the correlation engine. An engineer can now ask an agent to query logs from one system and metrics from another in the same session. As Irvine-Broque explains, the agent can "look at correlation and look at what might be happening here and piece the two things together." This turns a manual, multi-tab investigation into a single question posed to an agent.
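The correlation step described above can be sketched in a few lines of Python. This is a minimal illustration, not Cloudflare's implementation: the `LogEntry` and `MetricPoint` types, the `correlate` function, the one-minute window, and the sample data are all assumptions made for the example. In practice the logs and metrics would come from two separate tool calls, and the agent would do the reasoning.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class LogEntry:
    timestamp: datetime
    service: str
    level: str
    message: str


@dataclass(frozen=True)
class MetricPoint:
    timestamp: datetime
    service: str
    name: str
    value: float


def correlate(logs, metrics, threshold, window=timedelta(minutes=1)):
    """Pair each metric point above `threshold` with ERROR logs from the
    same service that fall within `window` of that point."""
    findings = []
    for point in metrics:
        if point.value <= threshold:
            continue
        nearby = [
            entry for entry in logs
            if entry.service == point.service
            and entry.level == "ERROR"
            and abs(entry.timestamp - point.timestamp) <= window
        ]
        if nearby:
            findings.append((point, nearby))
    return findings


# Hypothetical results from two different systems (a log store and a metrics store).
t0 = datetime(2025, 1, 1, 12, 0, 0)
logs = [
    LogEntry(t0 + timedelta(seconds=20), "api", "ERROR", "upstream timeout"),
    LogEntry(t0 + timedelta(minutes=5), "api", "ERROR", "upstream timeout"),
    LogEntry(t0 + timedelta(seconds=10), "auth", "INFO", "token refreshed"),
]
metrics = [
    MetricPoint(t0, "api", "p99_latency_ms", 2400.0),
    MetricPoint(t0, "auth", "p99_latency_ms", 80.0),
]

findings = correlate(logs, metrics, threshold=1000.0)
for point, entries in findings:
    print(point.service, point.name, point.value, [e.message for e in entries])
```

The point of the sketch is the shape of the work: two independent data sources joined on service and time, which is the join engineers otherwise do by eye across browser tabs.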

This use case is an ideal entry point for enterprise adoption for three key reasons. First, it's a universal problem that nearly every engineering team faces. Second, it's a focused implementation that doesn't require exposing every possible tool at once. Third, and most importantly, it's fundamentally a read-only operation. This dramatically reduces the security concerns associated with AI agents that can mutate data, allowing organizations to gain significant value while maintaining a strong security posture.
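One way to picture the read-only constraint is a server-side registry that only ever exposes tools marked as non-mutating. The sketch below is an assumption-laden illustration: the `Tool` and `ReadOnlyToolset` classes, the `read_only` flag, and the two sample tools are hypothetical names invented for this example, not part of any MCP SDK.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Tool:
    name: str
    description: str
    read_only: bool
    handler: Callable[..., object]


class ReadOnlyToolset:
    """Keeps only tools flagged read-only and refuses calls to anything else."""

    def __init__(self, tools):
        self._tools = {tool.name: tool for tool in tools if tool.read_only}

    def names(self):
        return sorted(self._tools)

    def call(self, name, **kwargs):
        tool = self._tools.get(name)
        if tool is None:
            raise PermissionError(f"tool {name!r} is not exposed by this read-only server")
        return tool.handler(**kwargs)


# Hypothetical tools: one that reads, one that mutates.
all_tools = [
    Tool("search_logs", "Search log lines by keyword.", True,
         lambda query: [f"matched: {query}"]),
    Tool("delete_log_stream", "Permanently delete a log stream.", False,
         lambda stream: f"deleted {stream}"),
]

toolset = ReadOnlyToolset(all_tools)
print(toolset.names())
print(toolset.call("search_logs", query="timeout"))

try:
    toolset.call("delete_log_stream", stream="prod")
    blocked = False
except PermissionError:
    blocked = True
print("mutation blocked:", blocked)
```

Filtering at registration time, rather than trusting the agent to avoid mutating tools, means the mutating tool is never visible to the model at all.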

The future: from APIs to agent-to-agent communication

While MCP started as a way for agents to talk to APIs, Irvine-Broque is most excited about its future as a "protocol for agents to communicate" with each other. This vision of a more dynamic, interconnected AI ecosystem is where he sees the true potential of MCP. Cloudflare's Durable Objects, which provide stateful Worker instances, proved to be a natural architectural fit for this vision.

The MCP specification requires managing long-lived, stateful connections between clients and servers, a task that can be complex on other platforms. For Cloudflare, however, Durable Objects provided a ready-made solution. "There was just this like very natural fit for us," Irvine-Broque notes. This architectural alignment gave them a head start in building scalable and reliable remote MCP servers.

This technical foundation is now enabling teams to explore more advanced capabilities, such as agents that have their own memory and can persist context across different sessions and platforms. This tackles a key question in the AI space: will a user's memory be controlled by a single provider like OpenAI, or can it be decentralized? Irvine-Broque sees a future where MCP lets "people bring memory across platforms," creating more coherent and personalized AI experiences that are not locked into a single ecosystem.

Common implementation challenges to avoid

As organizations race to adopt MCP, Irvine-Broque identifies several common pitfalls. The most frequent is blowing up the context window. In their excitement, teams often expose too many tools at once, and every tool's name and description takes up space in the model's context. "You've given it 25 tools that all start with the word 'update'," he says, and the LLM "is quite confused." The result is poor tool selection and developer frustration.
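A simple lint-style check can flag this pattern before a server ships. The sketch below is only a heuristic under stated assumptions: it presumes snake_case tool names whose first word is the verb, and the `crowded_prefixes` function, the limit of five, and the sample tool names are all hypothetical.

```python
from collections import defaultdict


def crowded_prefixes(tool_names, limit=5):
    """Group tool names by their leading word and return the groups that
    contain more than `limit` tools."""
    groups = defaultdict(list)
    for name in tool_names:
        prefix = name.split("_", 1)[0]
        groups[prefix].append(name)
    return {prefix: names for prefix, names in groups.items() if len(names) > limit}


# Hypothetical tool list for illustration.
tool_names = [
    "update_dns_record",
    "update_firewall_rule",
    "update_page_rule",
    "update_worker_route",
    "update_zone_settings",
    "update_account_member",
    "get_zone",
    "list_zones",
]

crowded = crowded_prefixes(tool_names, limit=5)
for prefix, names in crowded.items():
    print(f"{len(names)} tools start with {prefix!r}: consider splitting or merging them")
```

A check like this does not measure model confusion directly; it only surfaces tool sets that are likely candidates for curation.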

Another critical, often overlooked, challenge is the quality of tool descriptions. Engineers are used to naming methods clearly, but often forget that for an AI, the natural language description is the primary source of information for how and when to use a tool. Irvine-Broque has seen teams develop "mini evals frameworks" just to iterate on their tool descriptions to get the best results, a practice that requires a shift in mindset from traditional API design.
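A "mini eval" for tool descriptions can be very small: a set of prompts with the tool each one should trigger, and a score for each candidate set of descriptions. The sketch below is an illustration under heavy assumptions. The `pick_tool` function is a crude word-overlap stand-in for a real model call, and the tool names, descriptions, and prompts are invented for the example; a real harness would send the descriptions to the LLM and record which tool it selects.

```python
import re


def tokens(text):
    """Lowercase alphabetic words in `text`."""
    return set(re.findall(r"[a-z]+", text.lower()))


def pick_tool(prompt, descriptions):
    """Stand-in for the model: pick the tool whose description shares the
    most words with the prompt. Ties go to the first tool listed."""
    prompt_tokens = tokens(prompt)
    return max(descriptions, key=lambda name: len(prompt_tokens & tokens(descriptions[name])))


def accuracy(descriptions, cases):
    """Fraction of (prompt, expected_tool) cases where the expected tool is picked."""
    hits = sum(1 for prompt, expected in cases if pick_tool(prompt, descriptions) == expected)
    return hits / len(cases)


# Hypothetical eval cases and two description variants for the same tools.
cases = [
    ("show me error logs for the checkout worker", "query_logs"),
    ("what was the p99 latency over the last hour", "query_metrics"),
]
vague = {
    "query_logs": "Query data.",
    "query_metrics": "Query data.",
}
specific = {
    "query_logs": "Search log lines and error messages for a worker.",
    "query_metrics": "Read latency, CPU, and request-rate time series "
                     "for a time range such as the last hour.",
}

print("vague descriptions:", accuracy(vague, cases))
print("specific descriptions:", accuracy(specific, cases))
```

The useful part is the loop, not the scorer: change a description, rerun the cases, and compare scores, the same way a team would iterate on a prompt.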

Ultimately, these challenges highlight the need for a more focused and deliberate approach. The initial trend of one-to-one API mapping has given way to a more thoughtful process of curating specific tools for specific use cases. Cloudflare's experience suggests that starting with a narrow, targeted implementation is the most effective path to success, allowing teams to learn and iterate before scaling more broadly.

A blueprint for enterprise MCP adoption

Cloudflare's journey provides a powerful blueprint for how any large organization can successfully adopt emerging AI technologies like MCP. Their success wasn't based on a top-down mandate or a rush to implement every possible feature, but on a pragmatic, engineering-led approach.

It began with a core philosophy of being their own "customer zero," which allowed them to harden the technology in a real-world environment. They focused on a high-value, low-risk initial use case, observability, to build momentum and demonstrate value. Finally, they leveraged their existing architectural strengths, like Durable Objects, not only to solve immediate challenges but also to unlock a more sophisticated, agent-to-agent future.

For engineering leaders navigating the hype cycle, Cloudflare's experience offers a clear and repeatable lesson: start with your own teams, solve a real internal problem, and build from there. This methodical, internally focused approach builds the expertise and confidence needed for a successful enterprise-wide implementation.

For the full story on how Cloudflare made MCP enterprise-ready, listen to Brendan Irvine-Broque discuss these ideas in depth on the Dev Interrupted podcast.