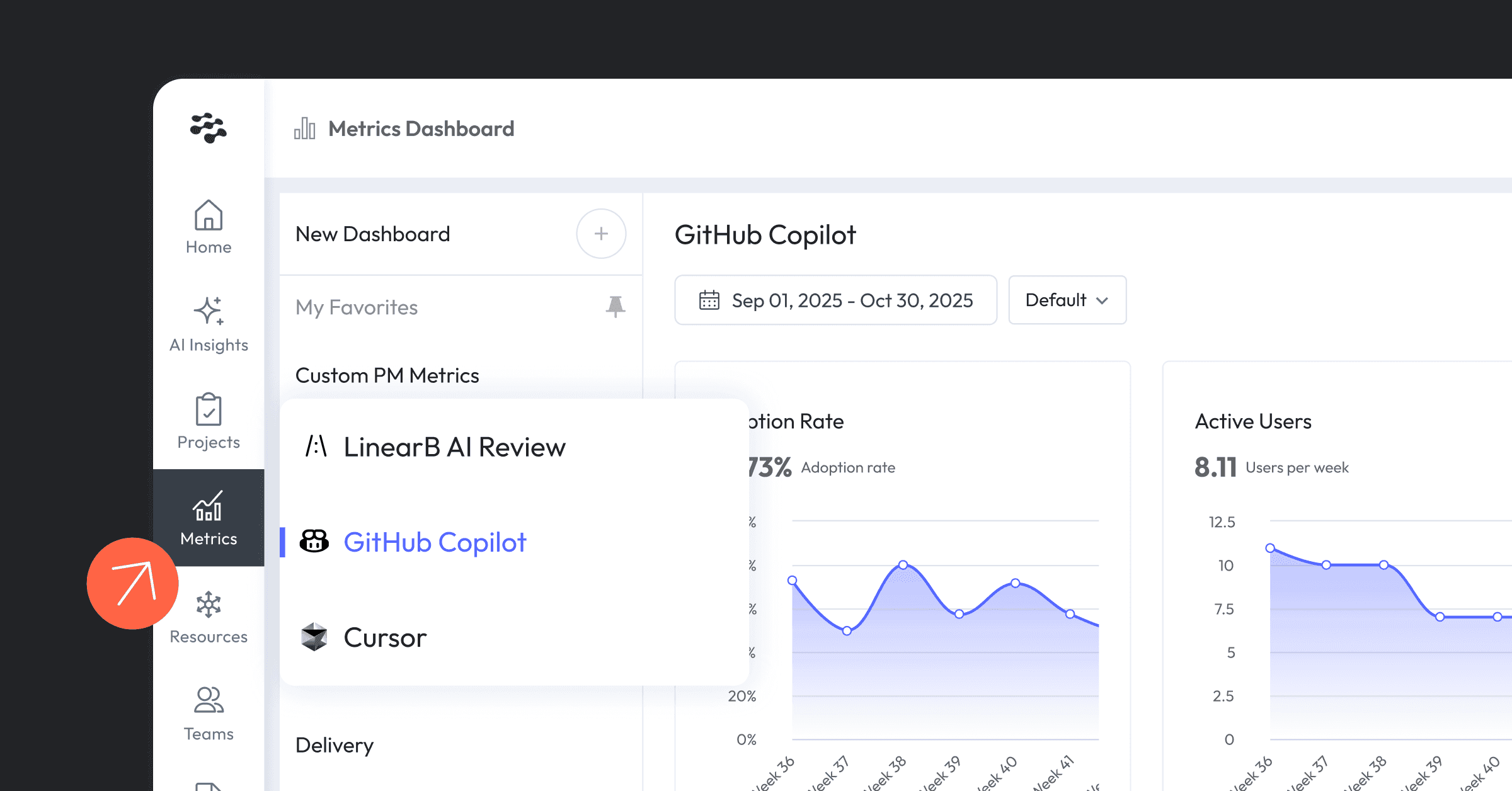

Out-of-the-box metrics dashboards for GitHub Copilot and Cursor are now generally available in LinearB, enabling engineering leaders to measure and understand how AI coding tools contribute to development outcomes.

No more switching between multiple platforms to assess adoption and piece together insights, or sharing API keys with every user who needs access to the data. LinearB consolidates your Copilot and Cursor usage metrics into a single platform, providing additional context to the data.

Measure the impact of GitHub Copilot and Cursor

Track, compare, and correlate the impact of both GitHub Copilot and Cursor on developer productivity across the organization. By integrating your AI coding assistant usage metrics, LinearB helps you:

- Measure adoption rate across your organization

- Track daily active and engaged users of GitHub Copilot and Cursor

- Monitor AI-generated code suggestions

- Review acceptance of AI-generated code to identify trust patterns and productivity gains
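
To make the adoption and acceptance metrics above concrete, here is a minimal sketch of how they could be computed from raw usage records. The record shape (`DailyUsage`), the function names, and the sample values are hypothetical and chosen only for illustration; they do not describe LinearB's data model or how its dashboards calculate these numbers.

```python
from dataclasses import dataclass


@dataclass
class DailyUsage:
    """One developer's AI assistant activity for one day (hypothetical shape)."""
    user: str
    suggestions_shown: int
    suggestions_accepted: int


def adoption_rate(records, licensed_users):
    """Share of licensed users who saw at least one suggestion."""
    licensed = set(licensed_users)
    if not licensed:
        return 0.0
    active = {r.user for r in records if r.suggestions_shown > 0}
    return len(active & licensed) / len(licensed)


def acceptance_rate(records):
    """Accepted suggestions divided by suggestions shown, across all records."""
    shown = sum(r.suggestions_shown for r in records)
    accepted = sum(r.suggestions_accepted for r in records)
    return accepted / shown if shown else 0.0


# Made-up sample data, for illustration only.
records = [
    DailyUsage("ana", 10, 4),
    DailyUsage("ben", 0, 0),
    DailyUsage("ana", 10, 6),
]
licensed_users = ["ana", "ben", "cy", "di"]

print(adoption_rate(records, licensed_users))
print(acceptance_rate(records))
```

Treating a user as "active" once they have seen at least one suggestion is an assumption of this sketch; a stricter definition, such as requiring an accepted suggestion, would lower the adoption figure.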

By connecting AI coding assistant usage metrics to delivery results, LinearB helps you quantify how deeply AI is integrated into your SDLC and track productivity improvements from Copilot and Cursor adoption – all without leaving the platform.

A unified view of your AI adoption

LinearB brings your AI coding assistant usage metrics together in one place, helping you track AI adoption and impact at a glance. Why consolidate Copilot and Cursor analytics in LinearB?

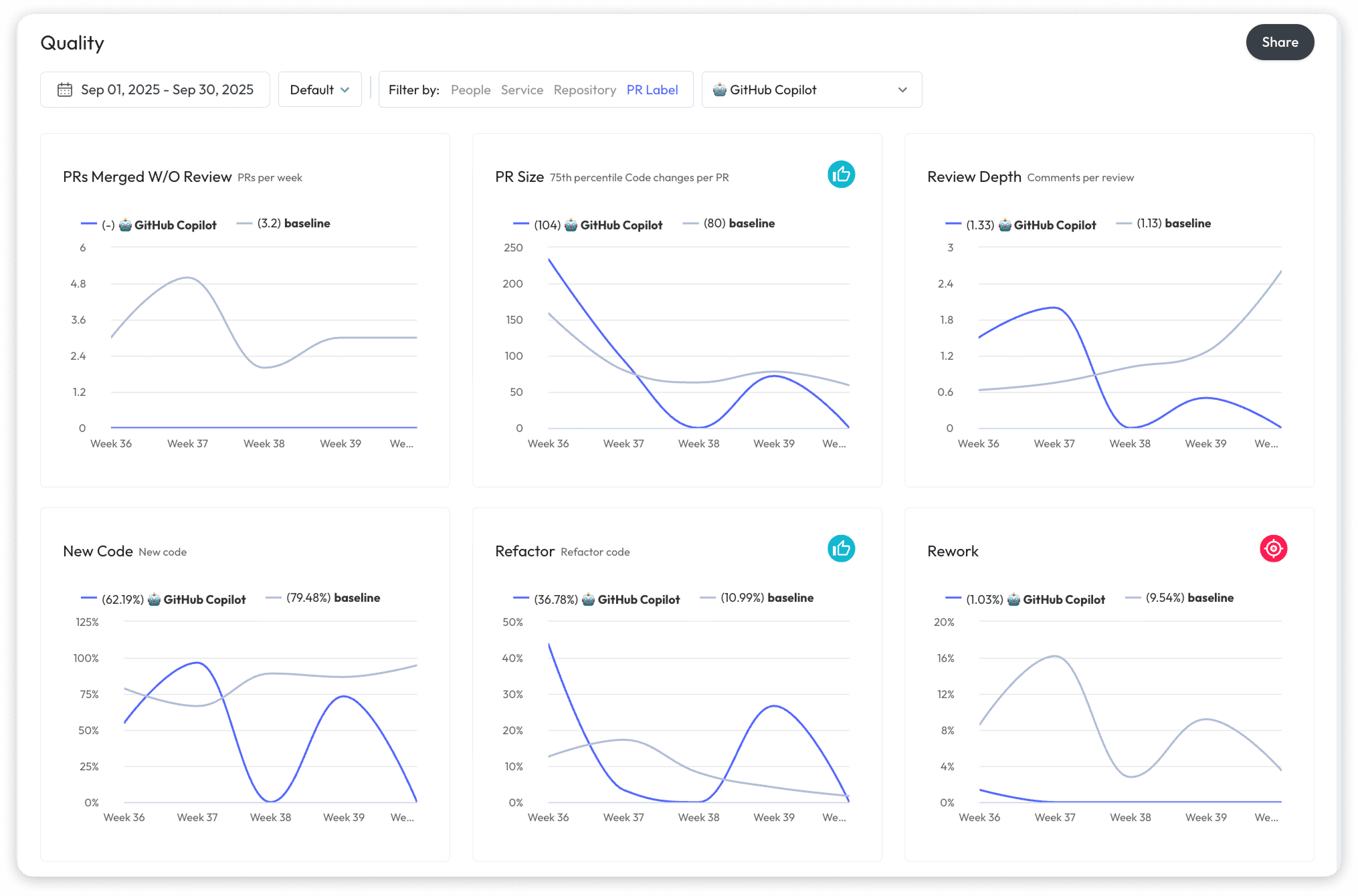

- Correlation with delivery metrics: Don’t let AI data live in isolation. Automatically detect and label PRs that involve AI tools to measure time savings (e.g., cycle time, review time), PR risks (e.g., refactor rate, change failure rate (CFR)), and productivity lift from AI tools.

- Efficiency and focus: View Cursor and Copilot ROI metrics in one platform, eliminating the need to toggle between tools.

- Security-first: Avoid distributing API keys to every user. LinearB centralizes access, providing visibility on a single platform.
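
As a rough illustration of correlating AI usage with delivery metrics, the sketch below compares median cycle time for PRs labeled as AI-assisted against all other PRs. The function name, the tuple layout, and the sample values are assumptions made for this example, not LinearB's implementation or real results.

```python
from statistics import median


def median_cycle_time_by_ai_label(prs):
    """Group PRs by an AI-assisted flag and return the median cycle time of each group.

    `prs` is an iterable of (cycle_time_hours, ai_assisted) tuples, a
    hypothetical shape used only for this sketch.
    """
    groups = {True: [], False: []}
    for hours, ai_assisted in prs:
        groups[bool(ai_assisted)].append(hours)
    return {
        "ai_assisted": median(groups[True]) if groups[True] else None,
        "other": median(groups[False]) if groups[False] else None,
    }


# Made-up sample data, for illustration only.
prs = [(10.0, True), (20.0, True), (30.0, False), (50.0, False), (40.0, False)]
result = median_cycle_time_by_ai_label(prs)
print(result)
```

A gap between the two medians shows a correlation only. AI-assisted PRs may differ from the rest in size, team, or type of work, so the comparison is best read alongside that context.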

Turn AI data into actionable engineering insights

With the right context, AI metrics become a catalyst for action. Identify where adoption is growing, pinpoint the biggest productivity gains across your development lifecycle, and know where to invest next to amplify developer impact. Whether you’re quantifying AI impact on code quality or correlating Copilot ROI metrics with throughput gains, LinearB gives you the complete picture of how AI tools shape your engineering outcomes.

Get started today

If you’re already using LinearB, start tracking AI adoption and impact today by connecting GitHub Copilot or Cursor.

New to LinearB? Try it for free and see how developer workflow analytics and AI coding assistant usage metrics come together to improve delivery outcomes.