Introduction

67% of developers use AI to write code, yet 77% of merge approvals remain entirely human-controlled. This gap reveals a fundamental truth about AI in software development: adoption doesn't equal integration. While teams rush to implement tools like GitHub Copilot and Cursor, they're missing the bottlenecks that actually constrain their delivery pipelines.

We surveyed over 400 engineering teams to understand how AI actually affects software development. The results challenge much of what vendors claim about AI productivity. Yes, teams achieve 19% faster cycle times. Yes, they save 71-75 days over six months. But these gains only materialize when you solve the right problems. In a recent webinar with industry leaders, we explored these findings, what's next for AI in software development, and the trends that separate successful AI implementations from expensive experiments.

Most teams focus on the wrong metrics. They count lines of AI-generated code or track tool adoption rates. Meanwhile, their human review processes create massive bottlenecks that neutralize any upstream gains. It's like installing a Ferrari engine in a car with bicycle wheels.

This guide provides two frameworks you won't find elsewhere: the AI Collaboration Matrix, which maps your team's actual AI maturity across both content creation and workflow automation, and our bottleneck analysis methodology that pinpoints exactly where human processes constrain your AI investments.

You'll discover which tools developers actually use (spoiler: managers lag significantly at just 17% adoption), how enterprises differ from startups in AI integration patterns, and why measuring merge approval rates matters more than code generation metrics.

Let's start with what AI in software development actually means beyond the hype.

What is AI in Software Development? Beyond Code Completion

AI in software development extends far beyond GitHub Copilot autocompleting your for loops. Today's AI systems touch every stage of the software development lifecycle (SDLC), from initial planning through production deployment. Yet most teams barely scratch the surface of what's possible.

The Full SDLC Perspective

Our analysis of 400+ teams reveals AI adoption varies dramatically across the 14 stages of modern software development. Think of AI adoption like a river flowing through your SDLC - deep in some areas, barely a trickle in others.

Where the river runs deepest, we see remarkable adoption rates. Coding leads at 67%, with documentation and testing both hitting 65%. These are the comfortable zones where AI excels at pattern recognition and content generation. But the shallows tell a different story. Planning struggles at 31% adoption, architecture decisions hover at 36%, and deployment automation reaches just 30%. Most concerning? Merge approvals (the critical quality gate) remain 77% human-controlled.

This uneven distribution creates what we call "AI dams" - points where human processes block the flow of AI-accelerated work. As Suzie Prince from Atlassian noted during our recent webinar, "80% of coding time for a developer or the time that a developer spends is not coding. It's planning, it's documentation, it's reviews, it's maintenance." The irony is clear: we're optimizing the 20% while ignoring the bottlenecks in the remaining 80%.

From Tools to Transformation

The evolution of AI in software development follows three distinct phases. In Phase 1 (2021-2023), individual developers adopted copilots and assistants. Productivity gains stayed localized, and processes did not change. We're currently in Phase 2 (2024-2025), where teams connect AI tools across workflows through automated PR reviews, intelligent test generation, and smart deployments, and some processes are beginning to adapt. Phase 3, emerging from 2025 onward, will feature AI agents handling complete workflows, with human oversight shifting from task execution to strategy and exceptions.

Most teams remain stuck in Phase 1, treating AI like a better autocomplete rather than a transformative technology. As Amir Behbehani, VP of Engineering at a Fortune 500 company, explains: "AI isn't making our developers faster typists - it's shifting them from carpenters to architects. But only if we redesign our processes to match."

The teams achieving 19% cycle time improvements have moved beyond tool adoption. They've identified where AI creates value (high-volume, repetitive tasks) and where humans excel (complex decision-making, quality gates). More importantly, they've redesigned their workflows to eliminate the handoff friction between AI and human work.

Consider code reviews: AI can analyze syntax, detect patterns, and suggest improvements in seconds. But if your process still requires two senior developers to manually review every PR, you've created a bottleneck that negates AI's speed advantage. This is why understanding the full SDLC perspective matters - optimization in isolation fails.

The path forward requires viewing AI not as a tool but as a team member with distinct capabilities and limitations. Only then can you design processes that truly accelerate delivery.

The Real Impact of AI on Software Development: 400+ Teams Report

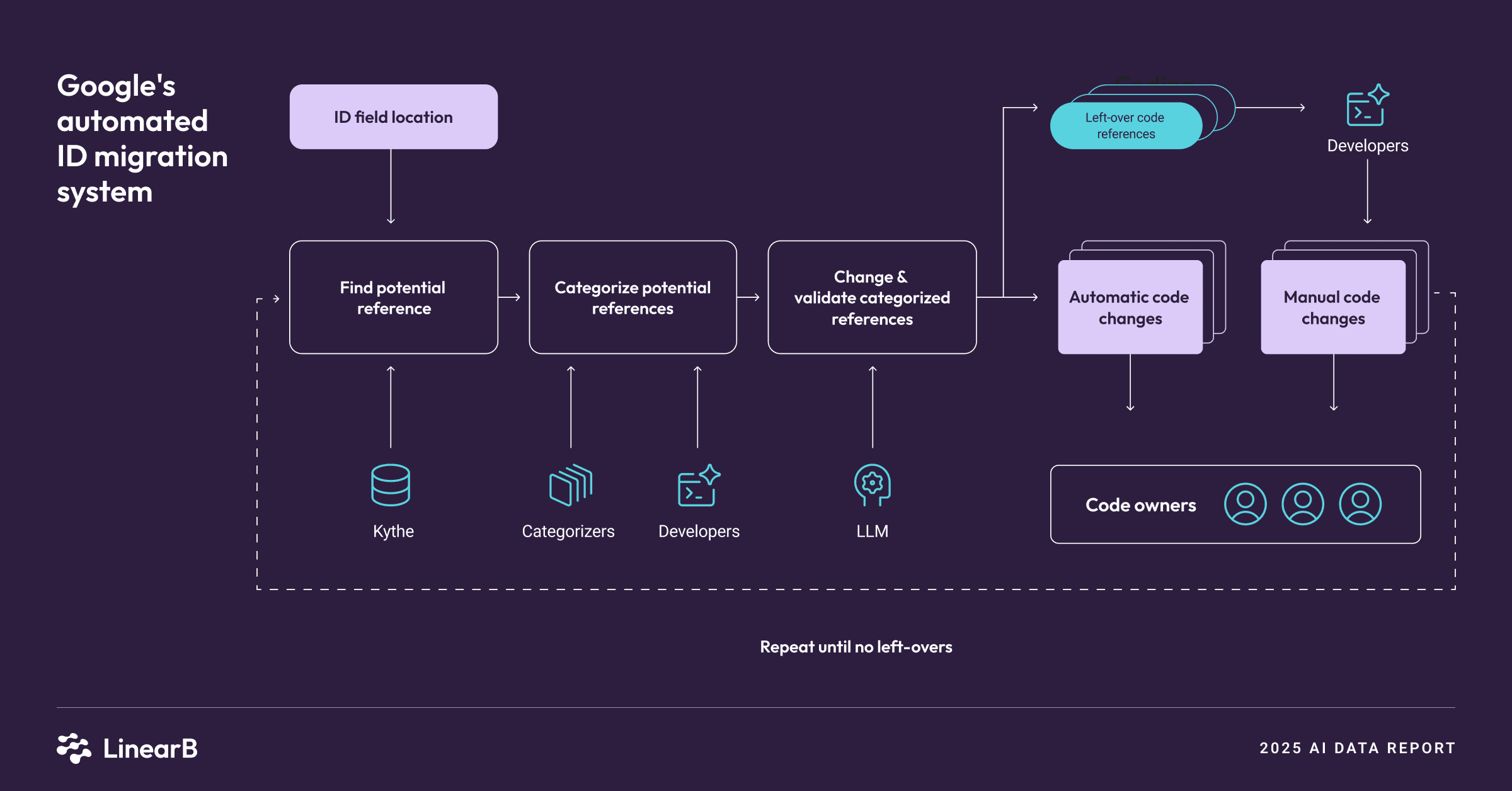

When Google reported that AI writes 75% of their migration code and saves 50% of developer time, the industry took notice. But Google isn't your typical engineering organization. What happens when regular teams adopt AI? Our analysis of 400+ engineering teams reveals a more nuanced reality.

AI Developer Productivity Metrics That Matter

The headline metric everyone chases is cycle time reduction. LinearB's platform data shows teams achieving AI maturity average 19% faster cycle times from commit to production. But this aggregate number hides crucial details.

Teams adopting AI for 5 months save 41 days of engineering time. By 6 months, savings increase to 75 days. That works out to approximately 2.5 hours saved weekly per developer, and over the same period these teams merged 2,500 PRs without human intervention. These aren't theoretical projections. They're measured outcomes from teams using AI across their entire workflow, not just for code generation.

The methodology matters. We calculate days saved by comparing baseline cycle time before AI adoption against reduced cycle time after 6 months, factoring in the number of PRs processed and engineering hours eliminated from the workflow. Google's case study provides additional validation: their AI-powered migration system generated 69.46% of code edits automatically. Meta's mutation-guided test generation system (ACH) generated roughly 9,000 mutants (deliberately injected faults), and engineers accepted 73% of the tests it proposed to catch them.

But here's what vendors won't tell you: these gains only materialize under specific conditions. As Birgitta Böckeler from Thoughtworks cautions: "AI amplifies both good and bad practices indiscriminately. If your code review process is broken, AI will help you ship bad code faster."

Where AI Makes the Biggest Difference (And Where It Doesn't)

Our adoption data reveals clear patterns about where AI delivers value. High-impact areas show over 60% adoption, with code generation leading at 67% because AI excels at boilerplate and patterns. Documentation follows at 65% due to AI's natural language capabilities, while test creation matches that rate as AI generates comprehensive test cases.

The moderate-impact areas, with 30-40% adoption, include architecture decisions at 36%, where AI suggests patterns; planning at 31%, for estimation and task breakdown; and deployment at 30%, through intelligent CI/CD.

Low-impact areas tell the most revealing story. Merge approvals languish at 23% adoption - the critical bottleneck. Security reviews hover at just 15% due to risk concerns, while production debugging remains at 12% given its complexity. The pattern is clear: AI thrives in structured, repeatable tasks but struggles with nuanced decision-making. This explains why 77% of merge approvals remain human-controlled despite 67% AI usage in coding.

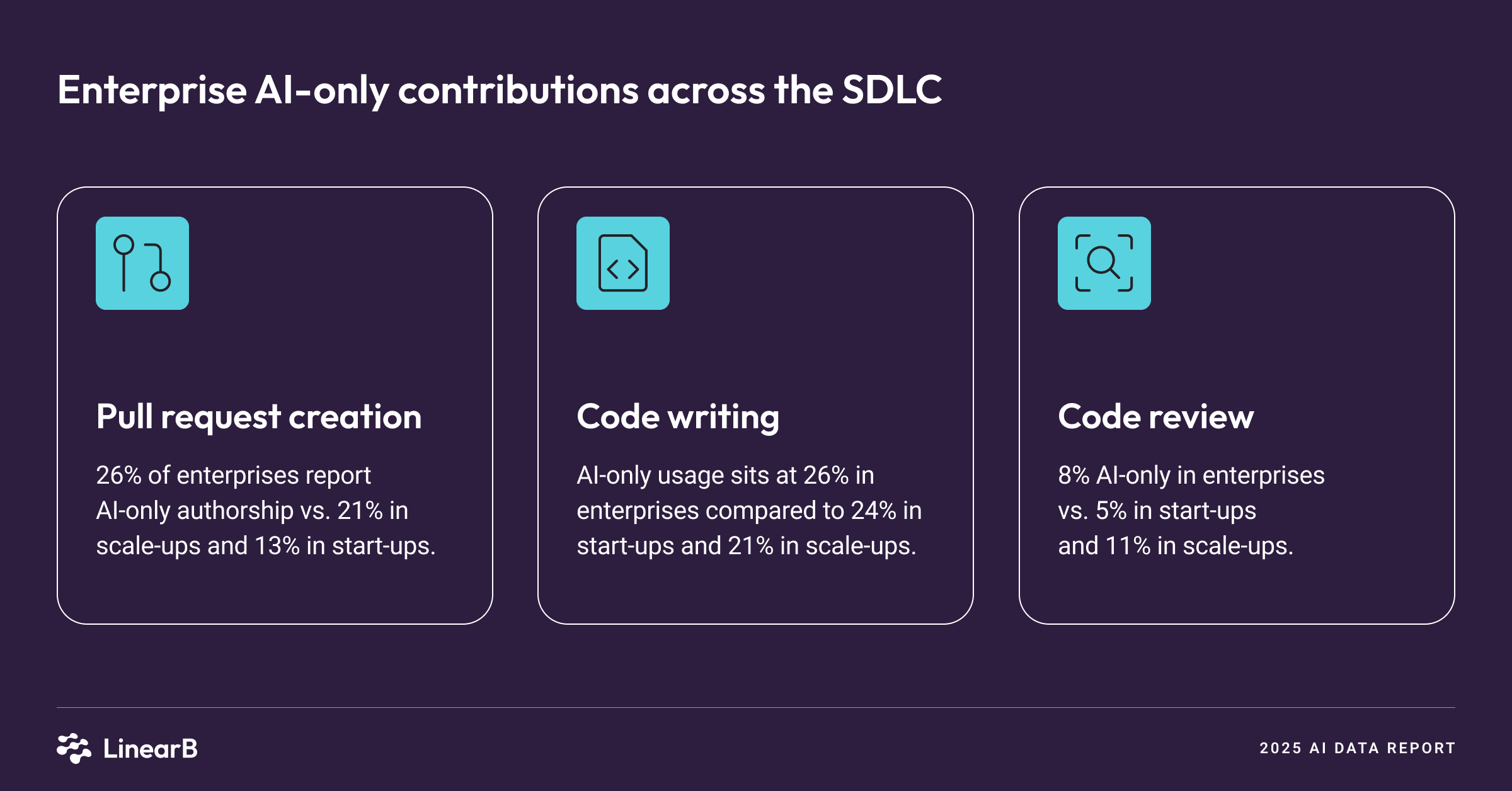

Company size dramatically affects these patterns. In enterprises with 300+ developers, 50% remain "AI Newbies" on our Collaboration Matrix, while 26% use AI-only workflows for specific tasks, and just 8% achieve full AI-driven development. Their conservative adoption stems from governance requirements.

Scale-ups (50-300 developers) show the most balanced approach. While 57% are AI Newbies advancing quickly, 35% blend human-AI collaboration effectively, and 4% reach AI-driven status. Their flexibility allows for more experimental adoption patterns.

Startups present a paradox. Despite aggressive experimentation, 60% remain AI Newbies. Only 20% land in the Pragmatist zone, while 16% become "Vibe Coders" - heavy on generation but maintaining traditional processes. Just one startup in our study achieved AI Orchestrator status.

Role-based adoption adds another crucial dimension. Among directors, 38% use OpenAI tools, largely to explore strategy. Among developers the share is 40%, driven by immediate productivity gains. But managers (who control processes and approvals) lag significantly: just 17% use any AI tools regularly. This fragmentation creates an awkward dynamic where directors set AI strategy and developers implement tools, but the managers who control workflows remain disconnected from the transformation.

The teams achieving exceptional results share three characteristics. First, they measure impact beyond velocity metrics. Second, they redesign processes rather than just adding tools. Third, they treat AI adoption as a team sport, not individual choice. Real impact comes from systemic change, not tool proliferation. The 19% cycle time improvement represents teams that understood this principle and acted on it.

How to Measure AI Impact on Your Development Team

Measuring AI impact requires more than tracking GitHub Copilot acceptance rates. After analyzing hundreds of teams, we've identified the metrics that actually predict AI success and the measurement traps that waste everyone's time.

Building Your AI Measurement Framework

LinearB's data science team developed a four-metric system that captures real AI value. First is cycle time reduction - the gold standard metric. Measure commit-to-deploy time before and after AI adoption, with our benchmark showing 19% reduction within 6 months. Calculate it simply by comparing your baseline average cycle time pre-AI (say 5 days) against current average with AI (4.05 days), revealing your improvement percentage.
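
The following is a minimal sketch of that calculation. It assumes you can export per-PR cycle times (in days) for a pre-AI window and a with-AI window. `cycle_time_improvement` is an illustrative helper, not a LinearB API.

```python
from statistics import mean


def cycle_time_improvement(baseline_days: list[float], current_days: list[float]) -> float:
    """Return the percentage reduction in average commit-to-deploy cycle time.

    baseline_days: per-PR cycle times (days) from before AI adoption.
    current_days:  per-PR cycle times (days) from the period with AI.
    """
    baseline = mean(baseline_days)
    current = mean(current_days)
    return (baseline - current) / baseline * 100


# The example from the text: a 5-day baseline average against a 4.05-day current average.
print(f"{cycle_time_improvement([5.0], [4.05]):.1f}%")  # 19.0%
```

In practice you would pass every PR from each 90-day window rather than a single average. The function averages each window before comparing them.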

Second, track automated PR processing by counting PRs that move through your pipeline without human intervention. High-performing teams automate 2,500+ PRs over 6 months. Monitor PRs auto-approved by AI, those auto-merged after AI review, and time saved per automated PR.
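
One way to tally these three counts is sketched below. It assumes you can export per-PR records with review and merge metadata. The `PullRequest` fields are hypothetical. `hours_saved_per_pr` is an estimate you supply; the code does not measure it.

```python
from dataclasses import dataclass


@dataclass
class PullRequest:
    # Hypothetical fields; map them to whatever your Git provider or analytics tool exports.
    number: int
    human_reviews: int   # review events left by people
    auto_approved: bool  # approved by an AI reviewer or automation rule
    auto_merged: bool    # merged by automation rather than by a person


def automation_summary(prs: list[PullRequest], hours_saved_per_pr: float) -> dict[str, float]:
    """Summarize how many PRs moved through the pipeline without a human touch."""
    untouched = [pr for pr in prs if pr.human_reviews == 0 and pr.auto_merged]
    return {
        "auto_approved": sum(pr.auto_approved for pr in prs),
        "auto_merged": sum(pr.auto_merged for pr in prs),
        "no_human_intervention": len(untouched),
        "share_untouched": len(untouched) / len(prs) if prs else 0.0,
        # Estimated, not measured: count of untouched PRs times your per-PR assumption.
        "est_hours_saved": len(untouched) * hours_saved_per_pr,
    }


sample = [
    PullRequest(101, human_reviews=0, auto_approved=True, auto_merged=True),
    PullRequest(102, human_reviews=2, auto_approved=False, auto_merged=False),
    PullRequest(103, human_reviews=0, auto_approved=True, auto_merged=True),
    PullRequest(104, human_reviews=1, auto_approved=True, auto_merged=True),
]
print(automation_summary(sample, hours_saved_per_pr=0.5))
```

PR 104 counts as auto-approved and auto-merged but not as untouched, because a person still reviewed it.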

Third, examine developer time allocation to see where your developers actually spend time. AI should shift allocation from routine tasks to complex problem-solving. You should see hours on boilerplate code decrease while hours on architecture and design increase, with debugging time also trending downward.

Fourth, maintain quality metrics balance because AI can hurt quality if mismanaged. Track defect density (bugs per 1,000 lines), ensure test coverage maintains or improves, and monitor production incident frequency.
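
Below is a small sketch of a before/after quality check that measures defect density as bugs per 1,000 lines. The `QualitySnapshot` structure and the sample numbers are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class QualitySnapshot:
    defects: int          # bugs attributed to the measurement period
    lines_of_code: int    # size of the code measured
    test_coverage: float  # fraction of lines covered, 0.0-1.0
    incidents: int        # production incidents in the period

    @property
    def defect_density(self) -> float:
        """Bugs per 1,000 lines of code."""
        return self.defects / (self.lines_of_code / 1000)


def quality_regressions(before: QualitySnapshot, after: QualitySnapshot) -> list[str]:
    """List every quality metric that got worse between two periods."""
    problems = []
    if after.defect_density > before.defect_density:
        problems.append("defect density rose")
    if after.test_coverage < before.test_coverage:
        problems.append("test coverage fell")
    if after.incidents > before.incidents:
        problems.append("production incidents rose")
    return problems


pre_ai = QualitySnapshot(defects=40, lines_of_code=200_000, test_coverage=0.72, incidents=6)
with_ai = QualitySnapshot(defects=50, lines_of_code=220_000, test_coverage=0.75, incidents=6)
print(quality_regressions(pre_ai, with_ai))  # ['defect density rose']
```

Normalizing by code size matters here. The raw bug count went from 40 to 50, but the codebase also grew. Density still rose, from 0.20 to about 0.23 bugs per 1,000 lines.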

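Here's the math behind our "71-75 days saved" headline. Take an average team of 10 developers with a baseline cycle time of 5 days. AI brings cycle time down to 4.05 days (a 19% reduction), so each PR ships 0.95 days sooner. At 3 PRs per developer per week, the team ships 30 PRs weekly. If each day of cycle-time reduction counts as roughly one engineering hour recovered, that's 28.5 hours saved weekly. Over 6 months (26 weeks), that's 741 hours, or approximately 75 days if a day is counted as roughly 10 hours.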
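
The same arithmetic appears below as a parameterized sketch you can rerun with your own team's numbers. It relies on two conversion factors: one engineering hour per day of cycle time saved, and about 10 hours per counted day. Both are our reading of how the worked figures fit together, not measured values, so replace them with your own assumptions.

```python
def estimated_savings(
    developers: int = 10,
    prs_per_dev_per_week: float = 3,
    baseline_cycle_days: float = 5.0,
    reduction: float = 0.19,
    hours_per_pr_day_saved: float = 1.0,  # assumption: 1 engineering hour per day of cycle time cut
    weeks: int = 26,                      # roughly 6 months
    hours_per_day: float = 10.0,          # assumption: converts total hours into "days saved"
) -> tuple[float, float, float]:
    """Return (hours saved per week, total hours saved, total days saved)."""
    days_saved_per_pr = baseline_cycle_days * reduction  # 5 x 0.19 = 0.95 days
    weekly_prs = developers * prs_per_dev_per_week       # 10 x 3 = 30 PRs
    weekly_hours = weekly_prs * days_saved_per_pr * hours_per_pr_day_saved
    total_hours = weekly_hours * weeks
    return weekly_hours, total_hours, total_hours / hours_per_day


weekly, total, days = estimated_savings()
print(f"{weekly:.1f} h/week, {total:.0f} h over 26 weeks, about {days:.0f} days")
```

With the defaults, it reports 28.5 hours a week, 741 hours over 26 weeks, and about 74 days, inside the 71-75 day range. The result is sensitive to `hours_per_day`. Counting 8-hour days instead turns the same 741 hours into roughly 93 days.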

But raw time savings miss the point. As Dan Lines, LinearB's COO and Co-founder, emphasizes: "The question isn't how much faster can we code, but how much more value can we deliver. Speed without direction is just expensive chaos."

Common Measurement Pitfalls and How to Avoid Them

The first pitfall is velocity worship. Tracking story points completed or lines of code generated tells you nothing about value delivered. AI can pump out thousands of lines that create technical debt. Instead, measure outcomes like features shipped and customer satisfaction rather than outputs like code volume.

The second trap is ignoring quality decay. Teams celebrating 50% faster delivery often discover they're shipping 50% more bugs. The cleanup cost eliminates any efficiency gains. Implement quality gates that AI cannot bypass, maintaining minimum test coverage and code review standards.
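
Here is a sketch of such a gate, written as a check you might run in CI, where an AI reviewer's approval cannot override it. The 80% coverage floor, the one-human-approval rule, and the `MergeRequest` fields are hypothetical placeholders for your own standards.

```python
from dataclasses import dataclass

# Hypothetical team thresholds; set these to your own standards.
MIN_COVERAGE = 0.80
MIN_HUMAN_APPROVALS_FOR_AI_CODE = 1


@dataclass
class MergeRequest:
    coverage: float       # test coverage of the changed code, 0.0-1.0
    human_approvals: int  # approvals from people, not bots
    ai_generated: bool    # whether the change was (partly) AI-generated


def merge_gate(mr: MergeRequest) -> list[str]:
    """Return blocking reasons; an empty list means the PR may merge."""
    reasons = []
    if mr.coverage < MIN_COVERAGE:
        reasons.append(f"coverage {mr.coverage:.0%} is below {MIN_COVERAGE:.0%}")
    if mr.ai_generated and mr.human_approvals < MIN_HUMAN_APPROVALS_FOR_AI_CODE:
        reasons.append("AI-generated change lacks a human approval")
    return reasons


print(merge_gate(MergeRequest(coverage=0.65, human_approvals=0, ai_generated=True)))
```

Returning every blocking reason at once, rather than stopping at the first failure, lets the author fix all of them in one pass.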

Tool-specific metrics represent another common mistake. Measuring "Copilot acceptance rate" or "ChatGPT queries per day" tracks tool usage, not impact. High usage might indicate dependency rather than productivity. Focus instead on workflow metrics that span tools - cycle time captures the entire development process.

Finally, watch for the missing denominator problem. "We saved 1,000 developer hours!" sounds impressive until you realize it took 2,000 hours of DevEx work to achieve. Calculate true ROI by including implementation costs: training, tool licenses, and process redesign time.
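
The following minimal ROI sketch puts the denominator back in. The hourly cost and the way costs are split into parameters are assumptions for illustration.

```python
def ai_roi(
    hours_saved: float,
    hourly_cost: float,
    devex_hours: float,
    training_hours: float = 0.0,
    redesign_hours: float = 0.0,
    license_cost: float = 0.0,
) -> float:
    """Return ROI as a ratio: (value of time saved - total cost) / total cost."""
    benefit = hours_saved * hourly_cost
    cost = (devex_hours + training_hours + redesign_hours) * hourly_cost + license_cost
    return (benefit - cost) / cost


# The example from the text: 1,000 hours saved after 2,000 hours of DevEx work.
# The $100/hour figure is a placeholder; with no license cost it cancels out.
print(f"{ai_roi(hours_saved=1_000, hourly_cost=100, devex_hours=2_000):.0%}")  # -50%
```

A negative result means the program has not yet paid for itself. Rerun it as cumulative hours saved grow.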

Setting Realistic Benchmarks

Not every team achieves 19% improvement. Success factors include current process maturity (more room for improvement equals bigger gains), team size (10-50 developers see best results), tech stack complexity (modern stacks integrate easier), and leadership buy-in (process changes require support).

Suzie Prince from Atlassian poses the critical question: "Are we using AI to build more software faster, or to build the right software better?" Your measurement framework should answer both questions.

Start simple. Pick two metrics from our framework. Establish baselines. Measure for 90 days. Then expand. Premature optimization of measurements wastes cycles you're trying to save.

AI Tools Across the SDLC: A Comprehensive Comparison

The AI tools landscape shifts faster than a startup's product roadmap. While everyone knows GitHub Copilot, our survey reveals surprising patterns in what engineering teams actually use and more importantly, what they keep using after the honeymoon phase ends.

AI Code Assistants: The Current Landscape

The market has exploded beyond the GitHub Copilot monopoly. Based on our 400+ team survey, here's what different roles actually adopt.

Directors, as strategic explorers, gravitate toward OpenAI tools (38%) for experimentation with ChatGPT and API integrations. They also leverage Microsoft's suite including Copilot and Azure AI (26%), while 15% experiment with JetBrains AI Assistant. The remaining 21% explore emerging tools like Cursor, Claude, and Tabnine.

Developers, focused on productivity, show similar patterns but with different motivations. They rely on OpenAI for problem-solving (40%), use Microsoft Copilot in their IDE (18%), and prefer JetBrains for integrated experiences (11%). Notably, 31% mix multiple tools based on specific tasks.

Managers present the most concerning pattern. Only 17% use any AI tools regularly, and when they do, it's primarily OpenAI (70%) or Microsoft (30%) with minimal experimentation. Their focus remains on reporting rather than hands-on usage.

This role-based fragmentation explains why AI adoption stalls. Directors set strategy with tools managers don't use, while developers implement solutions managers can't evaluate. The result? That persistent 77% human approval bottleneck.

Tool Comparison: Beyond Marketing Claims

Let's cut through vendor hype with actual usage data from our survey.

GitHub Copilot shows strong IDE integration and Microsoft backing, making it enterprise-ready. However, while 55% adopt it initially, only 31% still use it actively after 6 months. It works best for teams already in the Microsoft ecosystem, at $19/user/month on the Business plan.

Cursor, built for AI-first development with powerful commands, sees just 12% adoption but boasts 78% retention after 6 months. Senior developers willing to change workflows find it particularly effective at $20/user/month.

Amazon Q Developer leverages AWS integration and security scanning, achieving 8% adoption mostly in AWS-heavy shops. Teams with significant AWS infrastructure benefit most at $19/user/month.

Tabnine's on-premise option and code privacy features attract 15% adoption in regulated industries. Teams with strict data residency requirements prefer it at $12-15/user/month.

The surprise finding? Teams using multiple tools report 23% higher satisfaction than single-tool shops. The sweet spot appears to be a primary IDE assistant plus a chat-based problem solver.

Beyond Coding: AI Tools for Every Stage

While code generation grabs headlines, the real productivity gains come from AI across the entire SDLC.

In planning and architecture (31% adoption), teams use Jira AI for automated story generation and effort estimation, Linear AI for smart issue grouping and priority suggestions, and Notion AI for requirements documentation and PRD generation. The impact? 30% reduction in planning meeting time.

Testing and quality (65% adoption) sees remarkable transformation. Diffblue Cover automates unit test generation for Java, Meta's ACH system generated roughly 9,000 mutants with engineers accepting 73% of its tests, and Qodo (formerly Codium) suggests tests while you code. Teams report a 2.5x increase in test coverage.

Code review and collaboration is growing rapidly, with LinearB's AI code review saving 71 days through automated workflows, DeepCode providing security-focused automated reviews, and Qodo PR-Agent automating PR descriptions and reviews. The result is 50% reduction in review wait time.

Documentation (65% adoption) transforms from a dreaded task to an automated process. Mintlify generates API documentation automatically, ReadMe AI creates project documentation from code, and Swimm provides code-coupled documentation that updates automatically. Teams report 80% less time spent on documentation tasks.

Deployment and monitoring (30% adoption) remains underutilized but shows promise. Harness AI makes deployment verification and rollback decisions, PagerDuty AIOps correlates incidents for faster resolution, and LaunchDarkly Experimentation drives AI-powered feature flag decisions. Early adopters see 40% fewer deployment failures.

The pattern is clear: specialized tools outperform general-purpose AI in their domains. A dedicated test generation tool beats asking ChatGPT to write tests. An AI-powered review system beats copying code into Claude.

The Integration Challenge

The catch: the average team uses 4.7 AI tools, but only 1.8 integrate with each other. This creates "AI silos" where productivity gains in one area create bottlenecks in another.

Smart teams solve this in four ways: API-first selection (choosing tools with robust APIs), workflow platforms like LinearB that orchestrate tools, standard formats that give tools a common language (OpenAPI, GraphQL), and gradual rollout that integrates one stage at a time.

The teams achieving 19% cycle time improvements don't just adopt tools - they orchestrate them. They've moved from asking "which AI tool is best?" to "how do AI tools work together?" That shift in thinking separates the AI Pragmatists from the AI Newbies.

The AI Collaboration Matrix: Understanding Your Team's AI Maturity

After analyzing 400+ engineering teams, we discovered that AI maturity isn't about tool count or code generation percentage. It's about how AI and humans collaborate across two dimensions: who creates the artifacts and who drives the processes. This insight led to our AI Collaboration Matrix - a framework that finally explains why some teams thrive with AI while others struggle.

Five Stages of AI Development Maturity

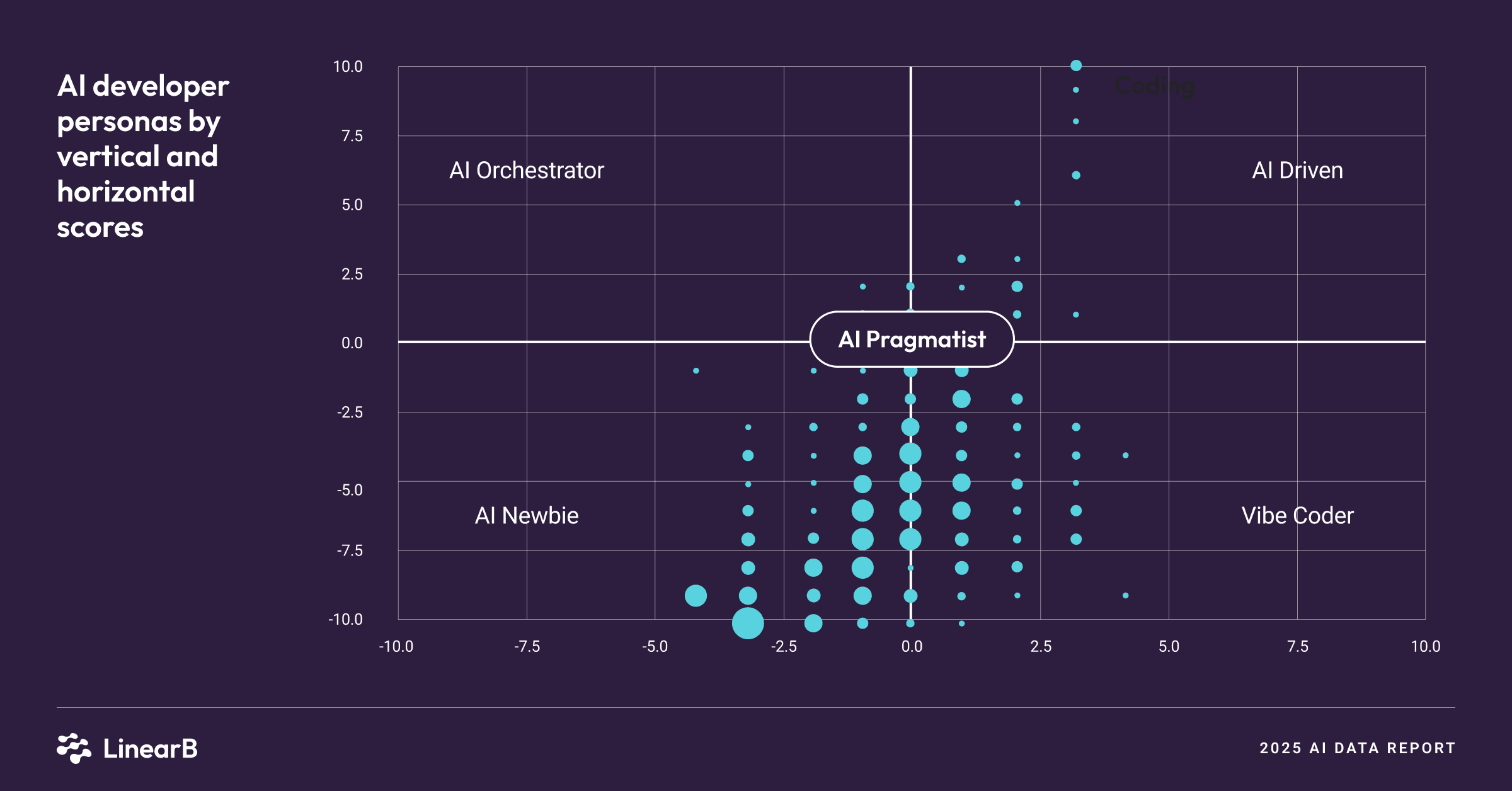

The matrix plots teams across two axes: AI vs. Human artifact creation (horizontal) and AI vs. Human process control (vertical). Here's where teams land:

AI Newbie (Bottom-left: 50% of enterprises, 60% of startups). These teams show minimal AI adoption with traditional workflows dominating. They might have GitHub Copilot licenses gathering dust. Manual processes control everything: reviews, approvals, deployments. They're stuck due to risk aversion, lack of DevEx support, and unclear ROI. The next step? Pick one high-impact area (usually testing) for an AI pilot.

Vibe Coder (Bottom-right: 16% of startups, rare in enterprises). Heavy AI code generation meets traditional processes in this quadrant. Developers use Copilot for everything, but PRs still wait days for review. AI creates; humans slowly evaluate. These teams plateau because process bottlenecks negate generation speed. Warning signs include rising technical debt and reviewer burnout.

AI Orchestrator (Top-left: fewer than 1% of startups). This rare breed maintains human-crafted code with AI-driven workflows. Developers write; AI handles reviews, testing, and deployment. Humans maintain quality; AI ensures velocity. It's rare because it requires significant DevEx investment, but it often represents the optimal balance for complex systems.

AI Pragmatist (Center: 20% of startups, 4% of scale-ups). Balanced AI involvement characterizes these teams. They run 3-5 integrated AI tools across the SDLC, with AI handling routine tasks while humans manage exceptions. These teams achieve the 19% cycle time improvement, and the Pragmatist zone is a natural next step from any other position on the matrix.

AI-Driven (Top-right: 8% of enterprises, paradoxically). Maximum AI automation across all dimensions, more common in enterprises than startups. Humans set strategy; AI executes. This works best in highly standardized, regulated environments but risks over-automation and loss of engineering judgment.

Finding Your Position and Planning Your Evolution

The enterprise paradox deserves attention: 26% of enterprise teams use AI-only workflows for specific tasks, yet 50% remain AI Newbies overall. This bimodal distribution suggests enterprises tend to either fully commit to AI or avoid it completely, with little middle ground.

Scale-ups show the healthiest distribution: 35% human / 35% mixed / 30% AI for code writing, and 38% human / 34% mixed / 27% AI for testing. This balanced adoption across all SDLC stages explains why scale-ups report the highest satisfaction with AI adoption. They're not trying to automate everything; they're finding the right mix.

Maturity Assessment Criteria

Rate your team on a 1-5 scale for each of five criteria. The first is artifact creation: how much code, documentation, and tests does AI generate? The second is process automation: how many workflows run without human intervention? The third is integration depth: do AI tools communicate and coordinate? The fourth is quality maintenance: has your defect rate held steady or fallen since adopting AI? The fifth is team capability: can everyone effectively prompt and guide AI?

Score interpretation reveals your position: 5-10 points indicate AI Newbie status (start with education and pilots), 11-15 suggests transitioning (focus on process bottlenecks), 16-20 shows maturing (integrate and orchestrate tools), while 21-25 indicates advanced status (optimize and scale what works).
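
The rubric is simple enough to script, for example to track scores quarterly. In the sketch below, the criterion keys and the example ratings are invented for illustration. The score bands come from the text.

```python
# The five criteria from the text, each rated 1-5 (higher = more mature).
# For quality_maintenance, a higher score means the defect rate held steady or fell.
CRITERIA = (
    "artifact_creation",
    "process_automation",
    "integration_depth",
    "quality_maintenance",
    "team_capability",
)

# (highest total in band, interpretation), taken from the score bands in the text.
BANDS = (
    (10, "AI Newbie: start with education and pilots"),
    (15, "Transitioning: focus on process bottlenecks"),
    (20, "Maturing: integrate and orchestrate tools"),
    (25, "Advanced: optimize and scale what works"),
)


def assess(ratings: dict[str, int]) -> tuple[int, str]:
    """Sum the five 1-5 ratings (total 5-25) and map the total to its band."""
    missing = set(CRITERIA) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings: {sorted(missing)}")
    if any(not 1 <= ratings[name] <= 5 for name in CRITERIA):
        raise ValueError("each rating must be between 1 and 5")
    total = sum(ratings[name] for name in CRITERIA)
    for upper, interpretation in BANDS:
        if total <= upper:
            return total, interpretation
    raise AssertionError("unreachable: five ratings of at most 5 sum to at most 25")


team = {
    "artifact_creation": 4,
    "process_automation": 2,
    "integration_depth": 2,
    "quality_maintenance": 3,
    "team_capability": 3,
}
print(assess(team))  # (14, 'Transitioning: focus on process bottlenecks')
```

The example team is strong on generation but weak on process automation and integration, which is the profile the bands steer toward fixing process bottlenecks first.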

Common Evolution Blockers

The manager gap presents the first major obstacle. With only 17% manager adoption, teams lack middle-layer buy-in. Tool sprawl compounds the problem - the average team tries 4.7 tools but integrates only 1.8. Legacy approval workflows resist automation, creating process debt. Many teams operate with measurement blindness, not knowing if AI helps or hurts. Finally, all-or-nothing thinking leads teams to attempt full automation before basics work.

The path forward isn't linear. A Vibe Coder might become a Pragmatist by adding process automation. An AI Newbie might jump to Orchestrator by focusing on workflow-first adoption. The key is intentional evolution based on your team's specific constraints and goals.

Our most successful teams share one trait: they measure their position quarterly and adjust accordingly. They treat AI maturity as a journey, not a destination.

Implementing AI in Software Development: A Practical Guide

Most AI implementation guides read like vendor wishlists: buy tools, train everyone, hope for magic. After studying teams that actually achieved 19% cycle time improvements, we've documented what really works and what expensive consultants won't tell you.

Week-by-Week Implementation Roadmap

Weeks 1-2: Bottleneck Analysis (Find Your 77%)

Start by mapping your current workflow bottlenecks. Where does work actually slow down? For most teams, it's that 77% human-controlled merge approval process, but yours might differ.

Export the last 90 days of cycle time data, identify the top 3 delay points in your pipeline, ask developers "What wastes your time?", and document baseline metrics for later comparison. One team discovered their bottleneck wasn't reviews but flaky tests requiring manual reruns. They implemented AI-powered test stability analysis and saved 4 hours per developer weekly, before touching code generation.
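
A rough sketch of the "top 3 delay points" step follows. It assumes your export gives per-PR hours spent in each pipeline stage. The stage names and numbers are invented, so substitute whatever your tooling reports.

```python
from collections import defaultdict
from statistics import mean


def top_delay_points(prs: list[dict[str, float]], n: int = 3) -> list[tuple[str, float]]:
    """Rank pipeline stages by average hours per PR, slowest first."""
    hours_by_stage: dict[str, list[float]] = defaultdict(list)
    for pr in prs:
        for stage, hours in pr.items():
            hours_by_stage[stage].append(hours)
    averages = {stage: mean(hours) for stage, hours in hours_by_stage.items()}
    return sorted(averages.items(), key=lambda item: item[1], reverse=True)[:n]


# Hypothetical export: hours each PR spent in each stage over the last 90 days.
last_90_days = [
    {"coding": 6, "review_wait": 30, "review": 4, "ci_reruns": 6, "deploy": 2},
    {"coding": 5, "review_wait": 22, "review": 3, "ci_reruns": 9, "deploy": 1},
    {"coding": 7, "review_wait": 26, "review": 5, "ci_reruns": 12, "deploy": 3},
]
print(top_delay_points(last_90_days))
```

In this made-up data, waiting for review dominates and CI reruns come second. That is the kind of signal that pointed the team above toward flaky tests rather than code generation.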

Weeks 3-4: Build Knowledge Repositories

Brandon Jung, Director of Developer Experience at a 500-person startup, shared their breakthrough: "We spent two weeks documenting our coding patterns, architectural decisions, and review criteria. That knowledge base became the foundation for every AI tool we adopted. Without it, AI just guesses."

Essential documentation includes coding standards and patterns, common architectural decisions, PR review checklists, security and compliance requirements, and team-specific terminology. Store this in a searchable, versionable format - your AI tools will reference it thousands of times.

Weeks 5-6: Governance Framework Without Bureaucracy

Create lightweight policies that enable experimentation while managing risk. Start with simple guidelines: all AI-generated code requires human review (for now), production-critical paths need extra scrutiny, document which AI tool generated what code, share successful prompts with the team, and report issues weekly in standups. Avoid 50-page governance documents. Start simple, iterate based on actual problems.
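
One option for making "document which AI tool generated what code" checkable is a commit-message trailer in git's `Key: value` trailer format. The `AI-Assisted-By` key and the helper names below are a hypothetical team convention, not an established standard.

```python
import re
from typing import Optional

# Hypothetical team convention: every commit message ends with a git trailer such as
#   AI-Assisted-By: Copilot
# or, for code written without AI help,
#   AI-Assisted-By: none
TRAILER = re.compile(r"^AI-Assisted-By:[ \t]*(?P<tool>\S.*)$", re.MULTILINE)


def ai_provenance(commit_message: str) -> Optional[str]:
    """Return the declared AI tool (or 'none'), or None if the trailer is missing."""
    match = TRAILER.search(commit_message)
    return match.group("tool").strip() if match else None


def undeclared(commits: dict[str, str]) -> list[str]:
    """Return the SHAs of commits that do not declare their AI provenance."""
    return [sha for sha, message in commits.items() if ai_provenance(message) is None]


commits = {
    "a1b2c3d": "Add retry to payment client\n\nAI-Assisted-By: Copilot",
    "d4e5f6a": "Fix typo in README\n\nAI-Assisted-By: none",
    "0b9c8d7": "Refactor auth middleware",
}
print(undeclared(commits))  # ['0b9c8d7']
```

Requiring an explicit `none` keeps "no AI used" distinguishable from "forgot to declare," which matters when you later ask which tool produced a defect.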

Weeks 7-8: Pilot Team Selection and Kickoff

Choose your pilot team carefully. Look for a team of 5-8 developers (small enough to coordinate) that mixes AI enthusiasts and skeptics, works on non-critical features, has a strong technical lead who embraces change, and has clear, measurable project goals.

Provide the pilot team with 2-3 integrated AI tools (not 10), dedicated DevEx support, weekly check-ins, and permission to fail and adjust.

Weeks 9-12: Measure, Iterate, Adjust

Track your four key metrics weekly. Target 5% cycle time improvement by week 12, aim for 20% of simple PRs to be automated, survey developer satisfaction weekly, and ensure code quality metrics don't decrease.
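
A sketch of a week-12 checkpoint against those targets appears below. The `PilotMetrics` fields are hypothetical. The developer satisfaction survey is left out because it doesn't reduce to a single threshold.

```python
from dataclasses import dataclass


@dataclass
class PilotMetrics:
    cycle_time_improvement_pct: float  # reduction vs. the weeks 1-2 baseline
    simple_prs_automated_pct: float    # share of simple PRs merged without a human touch
    defect_density_baseline: float     # bugs per 1,000 lines before the pilot
    defect_density_now: float          # bugs per 1,000 lines at week 12


def week12_review(m: PilotMetrics) -> dict[str, bool]:
    """Compare a pilot against the week-12 targets: 5% faster, 20% automated, no quality drop."""
    return {
        "cycle_time_target_met": m.cycle_time_improvement_pct >= 5.0,
        "automation_target_met": m.simple_prs_automated_pct >= 20.0,
        "quality_held": m.defect_density_now <= m.defect_density_baseline,
    }


print(week12_review(PilotMetrics(6.5, 14.0, 0.21, 0.20)))
```

A mixed result like this one, faster but under-automated, points at the next adjustment rather than a verdict on the whole pilot.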

Adjust based on data, not opinions. One team discovered their AI code reviewer flagged too many false positives, causing developers to ignore all suggestions. They tuned the sensitivity and saw immediate adoption improvement.
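
Suppose your AI reviewer attaches a confidence score to each suggestion, which is an assumption since not every tool exposes one. Then tuning sensitivity can be as simple as picking a cutoff from labeled history. Here is a sketch with invented data.

```python
from typing import Optional


def pick_threshold(history: list[tuple[float, bool]], min_precision: float = 0.8) -> Optional[float]:
    """Return the lowest confidence cutoff whose surfaced suggestions meet min_precision.

    history holds (confidence, developer_found_it_useful) pairs for past suggestions.
    Lower cutoffs surface more suggestions, so the lowest one that still meets the
    precision target keeps as much signal as possible without flooding reviewers.
    """
    for threshold in sorted({confidence for confidence, _ in history}):
        shown = [useful for confidence, useful in history if confidence >= threshold]
        precision = sum(shown) / len(shown)
        if precision >= min_precision:
            return threshold
    return None  # no cutoff meets the target with this history


past = [(0.2, False), (0.3, False), (0.5, True), (0.6, False), (0.7, True), (0.9, True)]
print(pick_threshold(past, min_precision=0.75))  # 0.5
```

The precision target is a team choice. Set it too low and developers start ignoring suggestions again, as in the example above.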

Month 4+: Scale What Works, Kill What Doesn't

By month 4, patterns emerge. You'll identify which tools deliver value (keep and expand), which create friction (eliminate immediately), which processes need redesign (prioritize these), and which teams are ready to adopt (roll out gradually).

Critical Success Factors Most Teams Miss

1. Enthusiast-Skeptic Balance. Birgitta Böckeler advises: "Pair your biggest AI enthusiast with your biggest skeptic. The enthusiast pushes boundaries; the skeptic ensures quality. Together, they find the practical middle ground." This pairing prevents both reckless adoption and stubborn resistance.

2. Trust Through Transparency. Brooke Hartley Moy, VP of Engineering at a fintech startup, transformed their culture: "We made all AI interactions visible. Every AI-generated PR shows the prompts used. Every automated decision logs its reasoning. Transparency built trust faster than any training program."

3. Solving the Merge Bottleneck First. Remember the 77% problem? Teams that tackle merge approvals first see cascading benefits. Faster feedback loops encourage more AI usage, developers trust the system when reviews are quick, and quality improves when reviews focus on real issues.

LinearB's AI code review helped one team automate 60% of routine PR approvals, focusing human reviewers on complex changes. Result: 71 days saved in 6 months.

4. Learning from Meta's 73% Test Acceptance. Meta succeeded because they started with clear objectives (catch privacy bugs) rather than vague goals (improve testing). Define specific, measurable outcomes for each AI implementation.

5. Infrastructure Requirements. Cory O'Daniel, Platform Engineering Director, warns: "AI tools demand 3x more API calls, 2x more CPU for analysis, and new monitoring approaches. Budget for infrastructure scaling or face adoption walls."

Technical requirements often overlooked include API rate limit increases, enhanced logging and monitoring, secure credential management, and backup workflows when AI services fail.
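
The sketch below shows a minimal backup workflow. It assumes your AI review client is a function that raises `TimeoutError` or `ConnectionError` when the service is unreachable. Real clients raise their own exception types, so adjust the `except` clause. The fallback string stands in for whatever your human review queue is.

```python
import time
from typing import Callable


def review_with_fallback(
    diff: str,
    ai_review: Callable[[str], str],
    retries: int = 2,
    backoff_seconds: float = 1.0,
) -> str:
    """Call the AI reviewer with retries; if it stays unavailable, route to humans."""
    for attempt in range(retries + 1):
        try:
            return ai_review(diff)
        except (TimeoutError, ConnectionError):
            if attempt < retries:
                time.sleep(backoff_seconds * 2 ** attempt)  # exponential backoff: 1s, 2s, ...
    return "queued-for-human-review"  # the backup workflow: the normal human review queue


def unavailable_service(diff: str) -> str:
    # Stand-in for an AI review client whose service is down.
    raise ConnectionError("AI review service unreachable")


print(review_with_fallback("diff --git a/app.py b/app.py", unavailable_service, backoff_seconds=0))
```

The key design choice is that an outage degrades to the pre-AI process instead of blocking merges entirely.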

The teams achieving sustained success treat implementation as a product launch, not a tool deployment. They version their approach, gather user feedback, and iterate relentlessly. Most importantly, they remember that AI amplifies existing processes - good or bad. Fix your fundamentals first, then accelerate with AI.

[Visual: Implementation timeline Gantt chart]

The Future of AI-Driven Development

The future of AI in software development isn't about replacing developers; it's about fundamentally reimagining how software gets built. Based on emerging patterns from our research and industry leaders, here's what we expect over the next 18 months.

First, we're moving from tools to autonomous agents. Today's AI assists; tomorrow's AI acts. We're seeing early examples of agents that handle complete workflows - from receiving a feature request to deploying tested code. The key shift: humans define objectives and constraints; agents determine implementation.

Second, the "Humans at the Top" architecture is emerging. Leading teams are restructuring workflows with humans as orchestrators, not implementers. Developers evolve into system architects, quality guardians, and AI trainers. One enterprise CTO describes it: "We're moving from human developers with AI assistants to AI developers with human supervisors."

Third, knowledge graphs are replacing documentation. Traditional documentation can't keep pace with AI-generated code. Teams are building living knowledge graphs that AI agents continuously update. These graphs connect code, decisions, personnel, and business logic in queryable formats.

Fourth, compound AI systems are emerging. Single-purpose tools give way to AI networks. Imagine a code generator that consults a security analyzer, which triggers a performance optimizer, which schedules deployment based on risk assessment - all without human intervention. Early adopters report 40% efficiency gains from AI-to-AI coordination.

Fifth, quality through AI competition becomes standard. Meta's approach - AI creating bugs for AI to catch - shows the way forward. Teams will deploy competing AI systems: creators vs. validators, optimizers vs. security checkers. This adversarial approach improves both sides continuously.
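
Meta's ACH system is far more elaborate, but its core idea is classic mutation testing: inject faults and check whether the tests notice. The toy sketch below illustrates that idea, with hand-written mutants standing in for AI-generated ones. Every name in it is illustrative.

```python
from typing import Callable


def is_adult(age: int) -> bool:
    """Code under test."""
    return age >= 18


# Mutants: deliberately faulty variants, the kind a mutation tool or an LLM might generate.
MUTANTS: dict[str, Callable[[int], bool]] = {
    ">= became >": lambda age: age > 18,
    "18 became 17": lambda age: age >= 17,
    "always True": lambda age: True,
}


def weak_suite(fn: Callable[[int], bool]) -> bool:
    return fn(30) and not fn(5)


def boundary_suite(fn: Callable[[int], bool]) -> bool:
    return fn(30) and not fn(5) and fn(18) and not fn(17)


def surviving(suite: Callable[[Callable[[int], bool]], bool]) -> list[str]:
    """Mutants the suite fails to catch; each survivor points at a missing test."""
    if not suite(is_adult):
        raise ValueError("the suite must pass on the real code first")
    return [name for name, mutant in MUTANTS.items() if suite(mutant)]


print(surviving(weak_suite))      # ['>= became >', '18 became 17']
print(surviving(boundary_suite))  # []
```

The surviving mutants show exactly which boundary the weak suite never checks. Adding tests for ages 18 and 17 kills them, which is the creator-versus-validator loop in miniature.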

Sixth, infrastructure transforms into AI runtime. Cory O'Daniel's prediction is materializing: "Infrastructure stops being about servers and becomes about AI execution environments. We'll manage prompt libraries like we manage containers today."

Developer-Agent Collaboration Patterns

The most successful teams are experimenting with three models. Pair Programming 2.0 sees developer and AI agent sharing context continuously, with the agent suggesting approaches, implementing routine sections, and alerting to potential issues while the developer focuses on architecture and business logic.

Swarm Development involves multiple specialized agents working on different aspects simultaneously - UI agent, backend agent, test agent - coordinated by a human architect. Early experiments show 3x faster feature development.

In Evolutionary Development, AI agents propose multiple implementation approaches, automated tests evaluate each option, and humans select among the survivors based on business criteria. This "guided evolution" produces solutions humans would not have conceived on their own.
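To make the evaluate-then-select loop concrete, here is a minimal Python sketch, assuming the candidate implementations already exist. The three `dedupe_*` functions are hypothetical, hand-written stand-ins for AI-proposed approaches to one small task (order-preserving deduplication), and `evaluate_candidates` and `shortlist` are illustrative helpers, not part of any real agent framework. Automated tests score each candidate; only candidates that pass everything reach the human who makes the business call.

```python
from typing import Callable, Dict, List, Sequence, Tuple

# (input, expected_output) pairs; hypothetical tests for order-preserving dedupe.
Case = Tuple[list, list]


def dedupe_with_set(items: list) -> list:
    # Candidate A: concise, but set() does not preserve the original order.
    return list(set(items))


def dedupe_with_dict(items: list) -> list:
    # Candidate B: dict keys keep insertion order (guaranteed since Python 3.7).
    return list(dict.fromkeys(items))


def dedupe_with_loop(items: list) -> list:
    # Candidate C: explicit loop that tracks already-seen values.
    seen = set()
    result = []
    for item in items:
        if item not in seen:
            seen.add(item)
            result.append(item)
    return result


def evaluate_candidates(
    candidates: Dict[str, Callable[[list], list]], tests: Sequence[Case]
) -> Dict[str, float]:
    """Score each candidate by the fraction of tests it passes."""
    scores = {}
    for name, fn in candidates.items():
        passed = 0
        for given, expected in tests:
            try:
                if fn(list(given)) == expected:
                    passed += 1
            except Exception:
                pass  # a crash counts as a failed test
        scores[name] = passed / len(tests)
    return scores


def shortlist(scores: Dict[str, float]) -> List[str]:
    """Candidates that pass every test; a human makes the final pick."""
    return sorted(name for name, score in scores.items() if score == 1.0)


if __name__ == "__main__":
    tests = [([3, 1, 3, 2, 1], [3, 1, 2]), ([], []), (["a", "a"], ["a"])]
    candidates = {
        "set": dedupe_with_set,
        "dict": dedupe_with_dict,
        "loop": dedupe_with_loop,
    }
    scores = evaluate_candidates(candidates, tests)
    print(shortlist(scores))  # ['dict', 'loop']
```

Here the set-based candidate fails because `set()` discards insertion order, so only two options reach the human reviewer. In a real pipeline the tests would be the project's own suite rather than three inline cases.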

Preparing Teams for Increased Autonomy

Smart organizations are investing in four areas: prompt engineering training that teaches developers to guide AI effectively, AI literacy programs that ensure everyone understands its capabilities and limitations, ethical AI frameworks that set boundaries before they're needed, and continuous learning systems that capture lessons from every AI interaction.

The teams positioning for success share one perspective: AI isn't a better hammer for the same nails. It's an opportunity to reimagine what we're building and how. They're asking not "How can AI make us code faster?" but "What becomes possible when coding isn't the bottleneck?"

As one director told us: "We're three years from AI handling 80% of today's development tasks. The question is: what will developers do with that freed capacity? That's where competitive advantage lies."

Conclusion & Your Next Steps

We began with a paradox: 67% of developers use AI to write code, yet 77% of merge approvals remain entirely human-controlled. This gap reveals the critical insight separating successful AI adoption from expensive experimentation.

The 400+ teams we studied taught us that AI transformation isn't about tool adoption - it's about workflow evolution. Yes, the right teams achieve 19% faster cycle times. Yes, they save 71-75 days over six months. But these gains only materialize when you solve the right problems in the right order.

Your next steps depend on where you fall on the AI Collaboration Matrix. If you're an AI Newbie, start with bottleneck analysis. Find your equivalent of the 77% constraint: the human-controlled step, such as manual merge approval, where work piles up. Pick one high-impact area for a pilot and measure religiously. If you're a Vibe Coder, stop generating more code faster. Focus on downstream processes, and automate reviews and deployments before adding more generation capacity. If you're an AI Orchestrator, you're rare. Document what works, share your patterns, and consider productizing your approach. If you're an AI Pragmatist, you're on the right track. Focus on integration and measurement, and scale what works.
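Bottleneck analysis can start with simple arithmetic over per-PR timing data. The sketch below is a minimal illustration, assuming you can export, for each pull request, the hours spent in each stage and whether the merge was approved manually. The `PullRequest` fields, the four stage names, and the sample numbers are all hypothetical; they are not a real tool's schema or our survey data. The helpers report the stage that consumes the largest share of total cycle time, the share of merges approved by hand, and the percent reduction in cycle time against a pre-pilot baseline.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical stage names; real tools may split the cycle differently.
STAGES = ("coding_hours", "pickup_hours", "review_hours", "deploy_hours")


@dataclass
class PullRequest:
    """Illustrative per-PR timing record (not a real tool's export format)."""
    coding_hours: float
    pickup_hours: float  # time waiting for a reviewer to start
    review_hours: float
    deploy_hours: float
    manually_approved: bool


def stage_shares(prs: List[PullRequest]) -> Dict[str, float]:
    """Fraction of total cycle time spent in each stage, across all PRs."""
    totals = {stage: sum(getattr(pr, stage) for pr in prs) for stage in STAGES}
    grand_total = sum(totals.values())
    return {stage: hours / grand_total for stage, hours in totals.items()}


def find_bottleneck(prs: List[PullRequest]) -> str:
    """Stage with the largest share of total cycle time."""
    shares = stage_shares(prs)
    return max(shares, key=shares.get)


def manual_approval_rate(prs: List[PullRequest]) -> float:
    """Share of merges that a human approved by hand."""
    return sum(pr.manually_approved for pr in prs) / len(prs)


def percent_reduction(baseline_hours: float, current_hours: float) -> float:
    """Cycle time reduction versus a pre-pilot baseline, in percent."""
    return 100.0 * (baseline_hours - current_hours) / baseline_hours


if __name__ == "__main__":
    sample = [  # made-up numbers for illustration only
        PullRequest(4, 20, 6, 2, True),
        PullRequest(3, 30, 4, 1, True),
        PullRequest(5, 10, 8, 2, False),
        PullRequest(2, 16, 2, 1, True),
    ]
    print(find_bottleneck(sample))         # pickup_hours
    print(manual_approval_rate(sample))    # 0.75
    print(percent_reduction(100.0, 81.0))  # 19.0
```

In this made-up sample, waiting for review pickup dominates total cycle time, which is the kind of downstream constraint that faster code generation alone cannot fix.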

To accelerate your journey, take our AI Collaboration Matrix assessment to pinpoint your position, use our ROI calculator to build your business case, download our implementation checklist, and join our quarterly research updates for fresh insights.

The future belongs to teams that view AI as a team member, not a tool. The question isn't whether to adopt AI; it's whether you'll lead the transformation or follow it.

Transform your engineering team from reactive to proactive with AI-powered insights, automated workflows, and industry-leading benchmarks. Request a LinearB demo to see how our Engineering Productivity platform can help you measure, optimize, and accelerate your AI transformation.

FAQ Section

1. What is AI in software development? AI in software development encompasses tools and systems that automate coding, testing, documentation, and deployment tasks. It includes code assistants like GitHub Copilot, AI-powered testing frameworks, and AI-powered project management. According to our survey of 400+ teams, 67% of developers currently use AI for code generation.

2. How does AI improve developer productivity? AI improves developer productivity by reducing cycle time by 19% on average and saving 71-75 days over 6 months. It automates repetitive tasks, generates boilerplate code, assists with debugging, and helps with documentation, allowing developers to focus on complex problem-solving and architecture decisions.

3. What are the best AI tools for developers? Top AI tools for coding include GitHub Copilot (40% developer adoption), OpenAI tools (38% among directors), Cursor, Amazon Q, and Tabnine. For non-coding tasks, LinearB automations for reviews, Diffblue for testing, and Mintlify for documentation lead adoption in our survey data.

4. How do you measure AI impact on development teams? Measure AI impact through cycle time reduction (target 19%), days saved (track over 6 months), PR merge rates, and quality metrics. Use frameworks like LinearB's 4-metric system and establish baselines before implementation to track improvement accurately.

5. Will AI replace software developers? No. AI won't replace developers, but it is transforming their role from "carpenters to architects." While AI handles routine coding, developers focus on system design, problem-solving, and quality assurance. The shift requires new skills but creates more strategic opportunities for developers.

6. What is the ROI of AI development tools? AI development tools typically deliver ROI through 19% faster cycle times and 71-75 days saved per team over 6 months. With tools costing $20-50/developer/month, teams see break-even within 2-3 months through productivity gains alone, not counting quality improvements.

7. How do you implement AI in existing development workflows? Start by identifying bottlenecks (especially the 77% manual merge approval problem), then build knowledge repositories, select pilot teams, and measure impact over 12 weeks. Focus on one SDLC stage at a time, and scale based on concrete metrics such as cycle time reduction.

8. What are the risks of using AI in software development? Key risks include technical debt from unchecked AI code, over-reliance on generation without understanding, security vulnerabilities, and quality issues. Mitigate them through code reviews, governance frameworks, and a deliberate balance between speed and quality. Remember that AI "amplifies indiscriminately."
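The break-even estimate in the ROI answer above comes down to simple arithmetic. The following minimal Python sketch shows one way to compute it, assuming a team pays a per-developer monthly license plus a one-time rollout cost (training, setup) and can put a monthly dollar value on the time saved. `break_even_month` is a hypothetical helper, and every input in the example other than the $20-50 license range is an assumption you would replace with your own numbers.

```python
import math
from typing import Optional


def break_even_month(
    developers: int,
    license_per_dev_month: float,
    one_time_cost: float,
    monthly_savings: float,
) -> Optional[int]:
    """First whole month in which cumulative net savings cover the one-time cost.

    Returns None if monthly savings never exceed the recurring license cost.
    """
    monthly_license = developers * license_per_dev_month
    monthly_net = monthly_savings - monthly_license
    if monthly_net <= 0:
        return None  # the tool never pays for itself under these inputs
    # Smallest m >= 1 such that m * monthly_net >= one_time_cost.
    return max(1, math.ceil(one_time_cost / monthly_net))


if __name__ == "__main__":
    # Hypothetical team: 10 developers at $50/developer/month (top of the
    # $20-50 range), $12,000 of one-time rollout cost, and $6,000/month of
    # productivity value. All inputs except the license range are assumptions.
    print(break_even_month(10, 50.0, 12_000.0, 6_000.0))  # 3
```

With these assumed inputs, break-even lands in month 3, and switching to the $20 license tier does not move it. That suggests the rollout effort, not the license fee, is the number worth estimating carefully.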