Rethinking Developer Productivity in the Age of Agentic AI

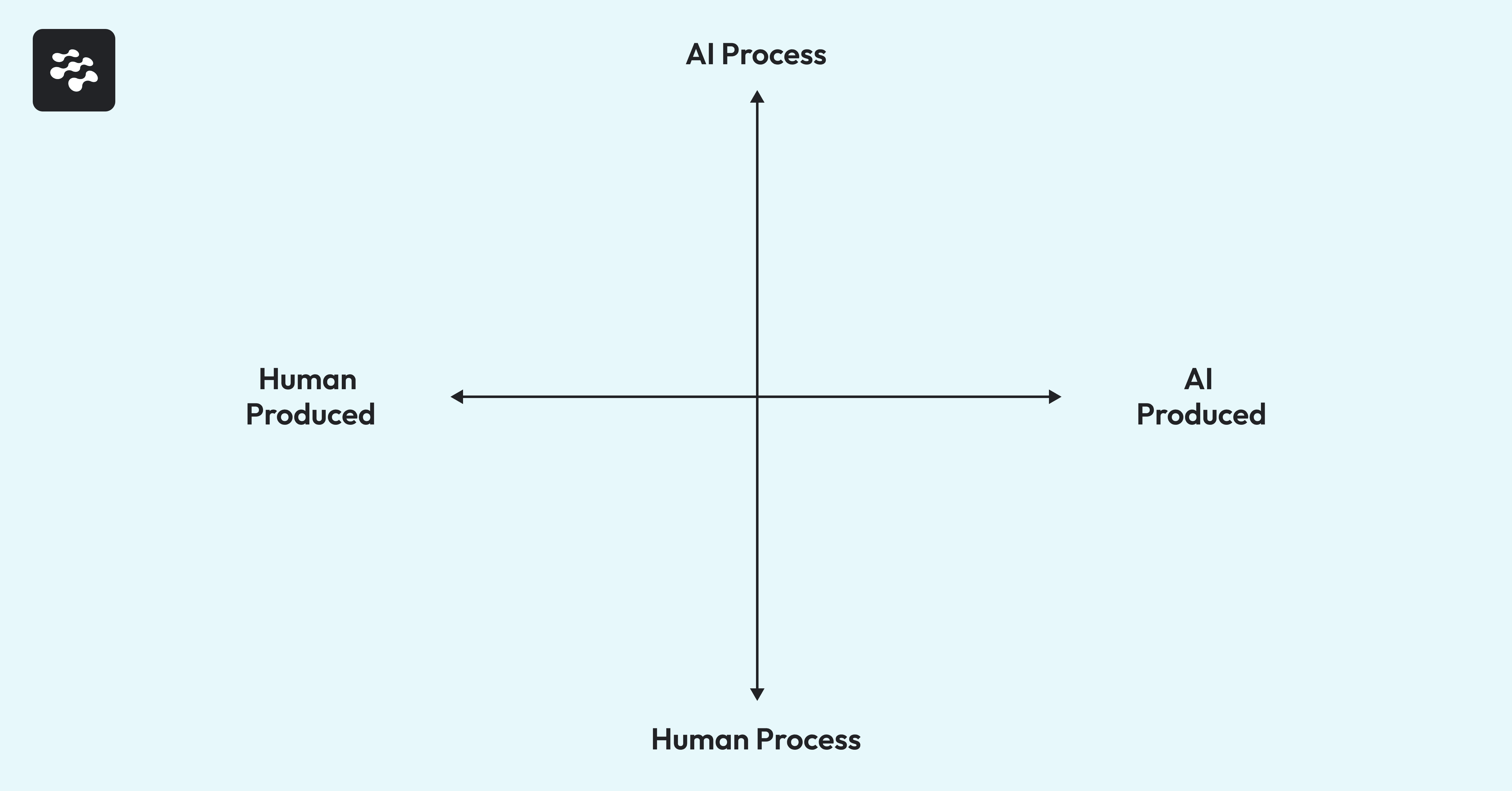

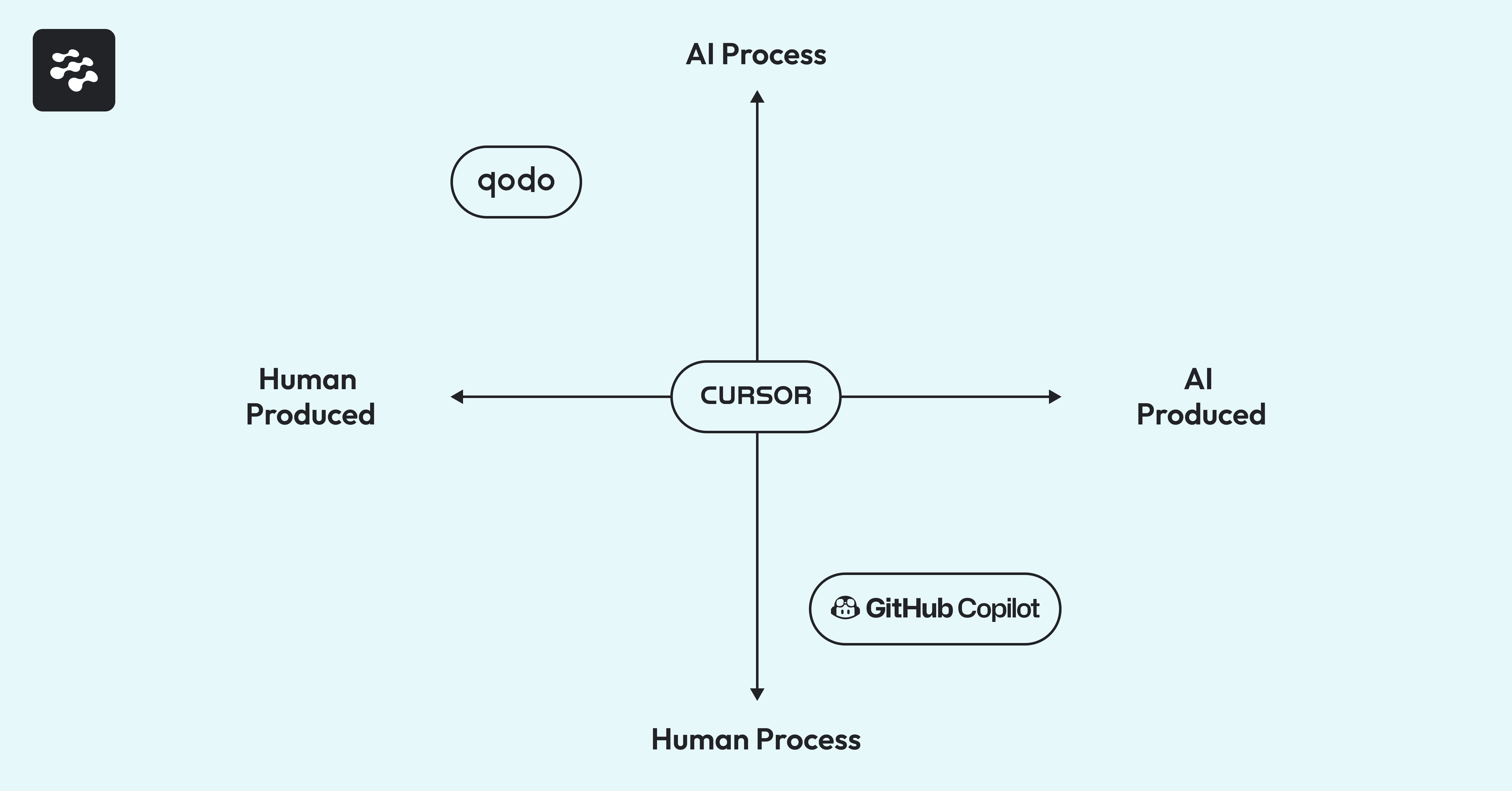

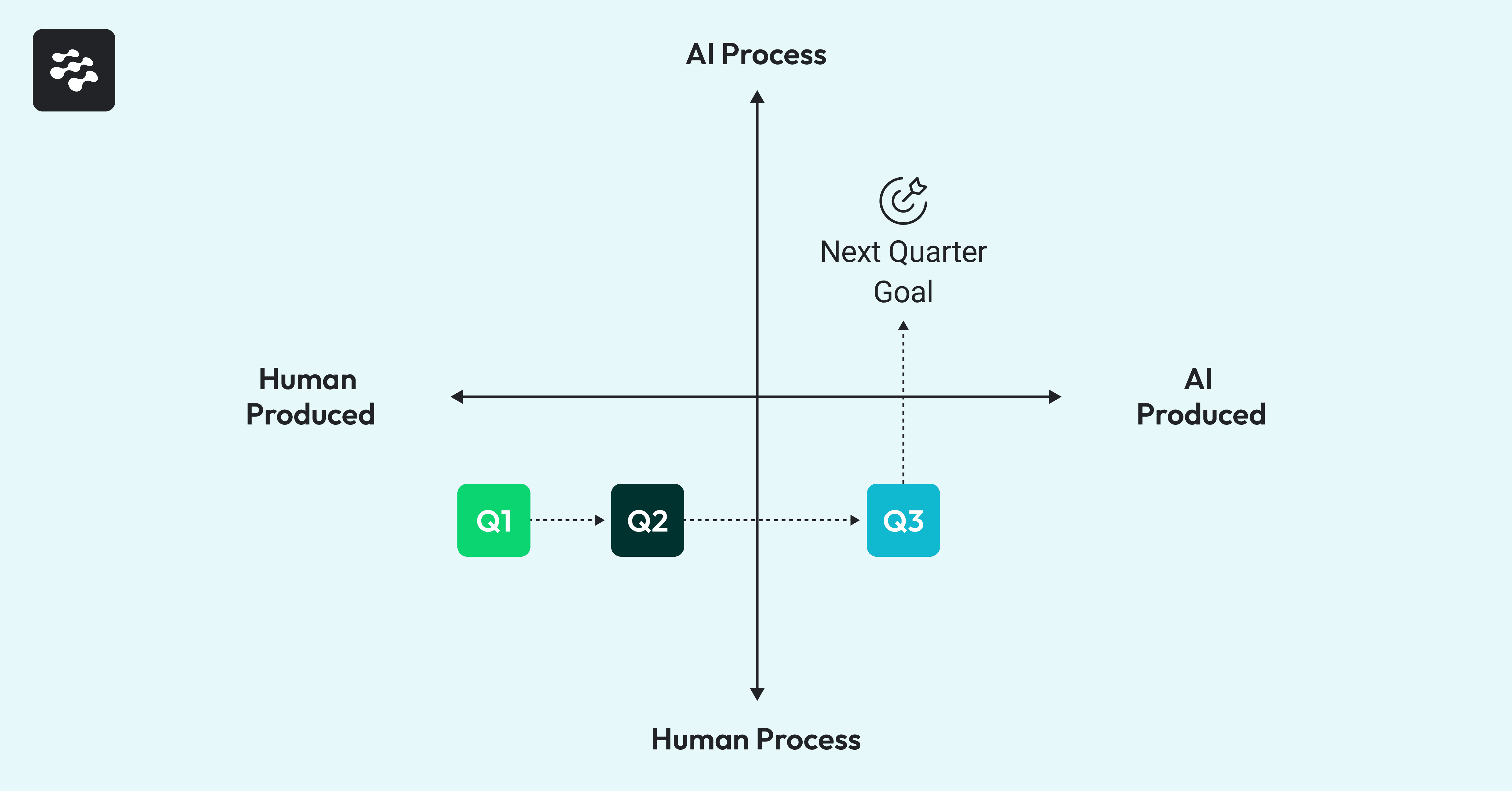

Let's face it: AI is reshaping how we build software, and keeping track of this transformation isn't easy. That's why we created the AI Collaboration Matrix: a straightforward way to visualize and guide your AI journey. This model examines two simple questions: who's making your stuff (humans or AI?) and who's running the show (again, humans or AI?). By mapping where you are today, you can avoid random AI adoption, spot opportunities to level up, and explain your AI strategy to stakeholders without their eyes glazing over. As AI evolves from a helpful assistant to a teammate who gets things done, this framework helps you navigate the transition without getting lost.

We created a simple, 30-second quiz to plot your position on this chart.

Most productivity frameworks we use today were built for a human-centric world. They measure human effort, human decision-making, and human-created output. However, the future of knowledge work doesn't just include humans; it also includes AI agents working alongside us or, in some cases, independently of us.

And here's the problem: our current models aren't equipped to measure this future.

As AI plays an increasingly central role in creating work products and managing workflows across all knowledge domains, we need a new way to understand and track its impact. This matrix provides both a snapshot of current AI usage and a flexible, forward-looking framework that helps organizations measure their progress toward an AI-driven future where human-AI collaboration is the norm, not the exception.

By mapping AI’s role along two dimensions (human- vs. AI-produced resources and human- vs. AI-led processes), you can track your organization’s journey toward fully agentic, AI-powered work and identify where to focus next.

This simple map gives you three significant benefits:

- See exactly where you stand today in your AI adoption journey

- Track how your tools and practices are changing the way work happens

- Explain to your boss (or your boss's boss) what's happening with all that AI investment

The Software Development Frontier: From Theory to Practice

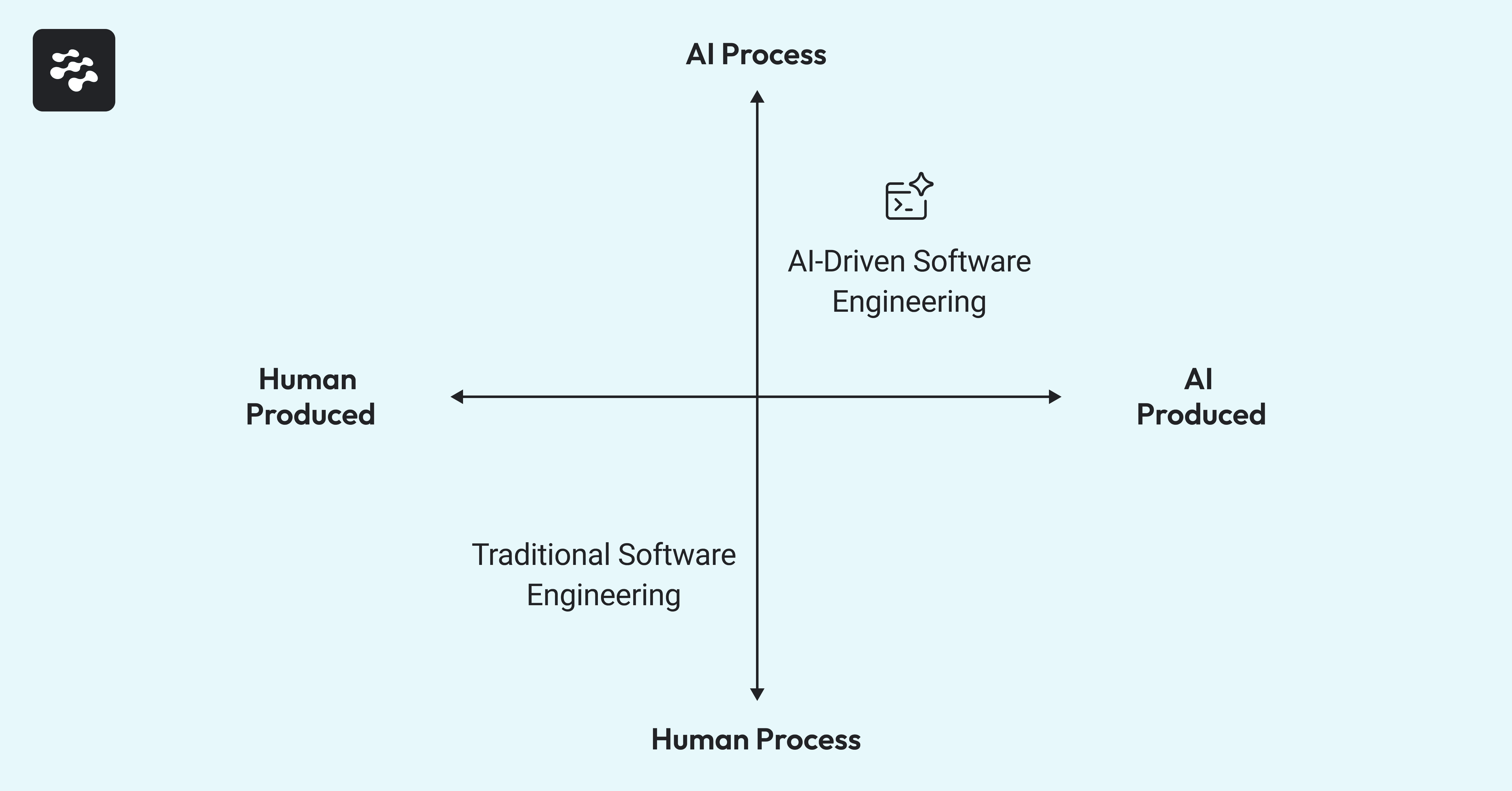

While the AI Collaboration Matrix provides a theoretical framework, its actual value emerges when applied to specific domains. Software engineering represents the perfect testing ground for these concepts, as development teams have historically been early adopters of automation and AI-enhanced tools.

Software engineering teams are among the first knowledge workers experiencing this transformation, and they're serving as the canaries in the coal mine for what most knowledge work will eventually become. By examining how developers integrate AI into their creative processes and workflow management, we can extract valuable lessons applicable to all knowledge workers.

Before 2023, software development lived entirely in the bottom-left corner of this map: human-managed, human-written code. But the trajectory is clear. By 2030, most organizations will find themselves in the upper-right quadrant, where AI takes on far more of the work, from writing code to managing the delivery pipeline.

This framework makes it easy to plot individual activities and tools to see how AI interacts with your workflows. For example, you can use it to anticipate how a given AI tool will affect your team. Predictive typing tools, like GitHub Copilot and Tabnine, push you primarily toward AI-produced code (the bottom-right quadrant), whereas AI-assisted code review and process automation tools, like Qodo and gitStream, move your organization upward toward AI-driven processes that manage more of the software delivery lifecycle. Tools like Cursor, which combine an AI-powered IDE with agentic coding services, offer a more balanced approach to AI adoption that positions you somewhere in the middle.

How to Use This Framework to Compare Team Progress

This matrix lets you plot any knowledge work involved in software delivery: any activity, output, or resource that requires cognitive effort fits within the framework. For clarity, we'll use pull requests (PRs) as our unit of analysis, since they represent the atomic unit of work in modern software development teams. A common unit lets us compare conditions across individuals, teams, and organizations.
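To make the PR-as-unit idea concrete, here is a minimal Python sketch, assuming each sampled PR has already been scored from 0 (fully human) to 1 (fully AI) on both axes. The `PrSample` class, the `quadrant` helper, and the 0.5 split between quadrants are hypothetical illustrations, not part of any LinearB product. The x-axis follows who produced the code (left = human, right = AI) and the y-axis follows who managed the process (bottom = human, top = AI), matching the quadrant placements used in this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PrSample:
    """One pull request scored on the two axes of the matrix (values in 0..1)."""
    pr_id: str
    ai_code_share: float     # x-axis: fraction of the change produced by AI
    ai_process_share: float  # y-axis: degree to which AI managed the process

    def __post_init__(self) -> None:
        # Reject scores outside the 0..1 scale assumed by this sketch.
        for name in ("ai_code_share", "ai_process_share"):
            value = getattr(self, name)
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{name} must be in [0, 1], got {value}")


def quadrant(sample: PrSample, split: float = 0.5) -> str:
    """Map a PR to a quadrant: left/right = who produced, bottom/top = who managed."""
    vertical = "top" if sample.ai_process_share >= split else "bottom"
    horizontal = "right" if sample.ai_code_share >= split else "left"
    return f"{vertical}-{horizontal}"


# Hypothetical PRs loosely modeled on the two teams described in this section.
legacy_pr = PrSample("hypothetical-101", ai_code_share=0.1, ai_process_share=0.7)
frontend_pr = PrSample("hypothetical-202", ai_code_share=0.6, ai_process_share=0.2)
print(quadrant(legacy_pr))    # top-left
print(quadrant(frontend_pr))  # bottom-right
```

Keeping the two scores as separate fields, rather than collapsing them into one "AI adoption" number, is what lets the same PR be placed on both axes independently.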

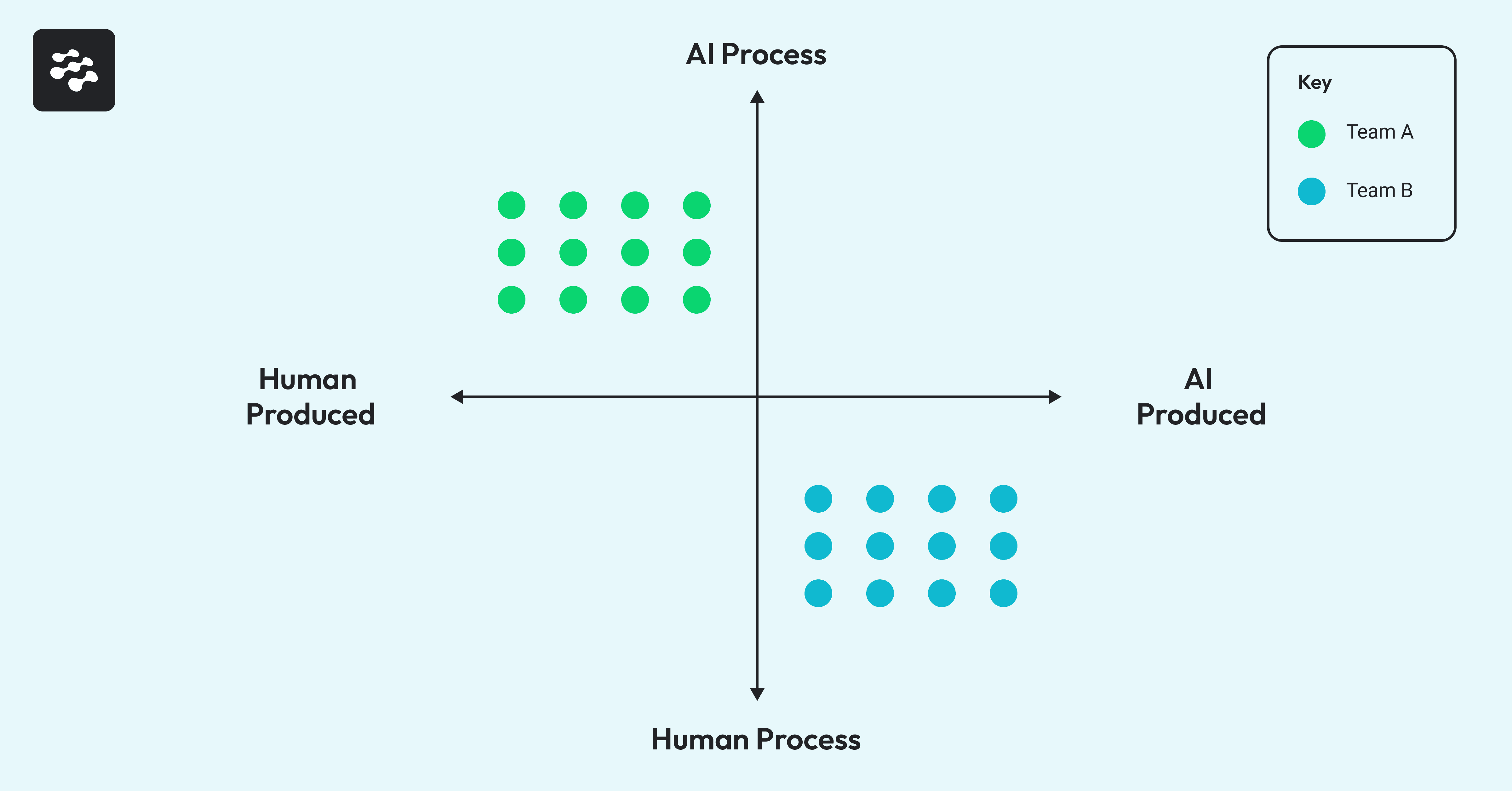

Let’s start by applying this framework to understand how two development teams may feel AI's impact differently.

Team A: AI-Supported Legacy System Maintenance

A team of senior engineers maintains a critical system built on a 15-year-old Java codebase with complex, domain-specific business logic. Their approach to AI integration focuses on knowledge and process management rather than code generation:

- Code Generation: Developers write most code manually, as AI tools struggle with their specialized legacy frameworks and strict compliance requirements

- Knowledge Management: They've implemented an AI agent that indexes their extensive documentation, providing instant answers about system architecture and business rules

- Work Prioritization: An AI system analyzes incoming bug reports and feature requests, categorizing them by urgency, complexity, and business impact

This team has evolved toward the top-left quadrant. While they continue to produce most code manually, they've delegated significant cognitive overhead around process management to AI systems. This approach has shortened their planning cycles and improved release predictability without compromising their strict quality standards. Their next opportunity lies in training specialized models on their codebase to gradually increase AI's role in code generation.

Team B: AI-Driven Front-End Development

This team of developers works on a modern, consumer-facing application built with React and TypeScript. They've fully integrated AI coding assistants into their workflow:

- Code Generation: Developers use GitHub Copilot to generate 60% of their code, significantly reducing time spent writing boilerplate components

- Testing: While they still manually review test results, AI generates most test cases based on requirements

- Review Process: Human code reviews remain mandatory but are lightly supplemented by AI-powered static analysis

This team has moved firmly into the bottom-right quadrant. They're producing AI-generated code assets while maintaining human oversight of the development process. Their next opportunity is to explore how AI can improve their workflow management and decision-making.

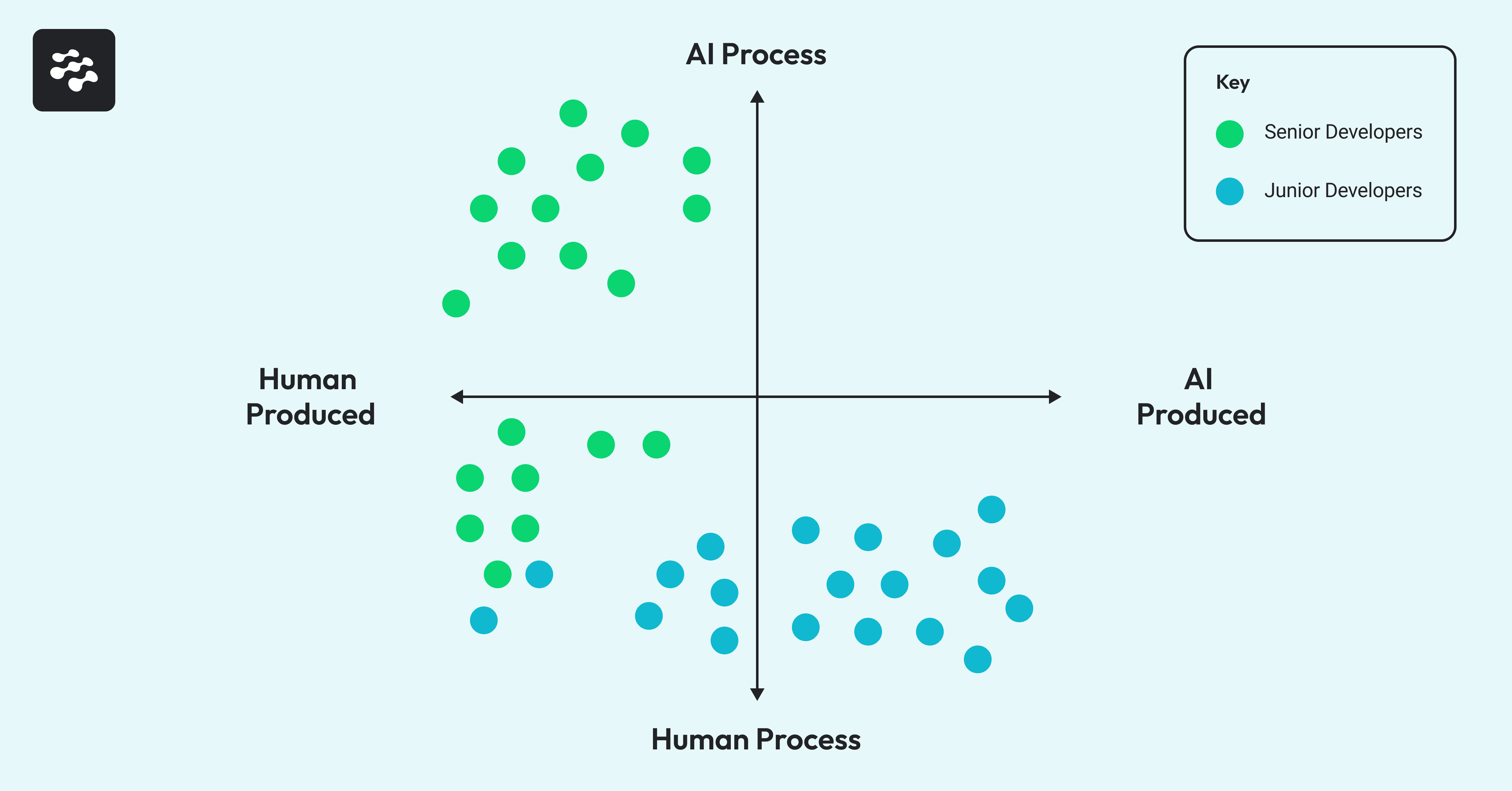

Developer Seniority and AI Adoption

Experience level significantly influences how developers integrate AI into their workflows. Junior developers typically first embrace AI as a coding assistant, relying heavily on predictive typing and natural language-to-code tools that accelerate their output. They accept AI-generated solutions more readily, often using them as learning tools to understand patterns and best practices. This positions junior-heavy teams predominantly in the bottom-right quadrant, where AI produces substantial portions of code while humans maintain process control.

In contrast, senior developers typically leverage AI's strategic capabilities beyond code generation. Their deep domain knowledge makes them more selective about AI-produced code but more willing to delegate process management. Senior engineers often use AI for requirements analysis, architectural validation, and decision support rather than routine coding tasks. This approach creates a natural progression toward the top-left quadrant, where human expertise drives creation while AI increasingly manages workflow optimization, knowledge retrieval, and quality assurance processes.

The Risk of Unstructured AI Adoption

This framework illustrates the risk of unstructured AI adoption. Without a consistent strategy, teams develop wildly divergent approaches to AI integration. Some will over-trust AI in complex situations, delegating critical decisions without appropriate oversight and introducing quality defects and security vulnerabilities. Others will systematically under-utilize AI capabilities due to skepticism or fear, missing significant efficiency gains and competitive advantages. Both extremes lead to suboptimal outcomes that undermine organizational goals.

The result is a destructive cycle of organizational whiplash: initial over-enthusiasm creates unrealistic expectations, inevitable disappointments trigger widespread skepticism, and this pattern generates a corporate immune response against further AI adoption. This pendulum swing between over-adoption and under-adoption wastes resources, creates unpredictable technical debt, and builds cultural resistance that is far more difficult to overcome than any technical challenge.

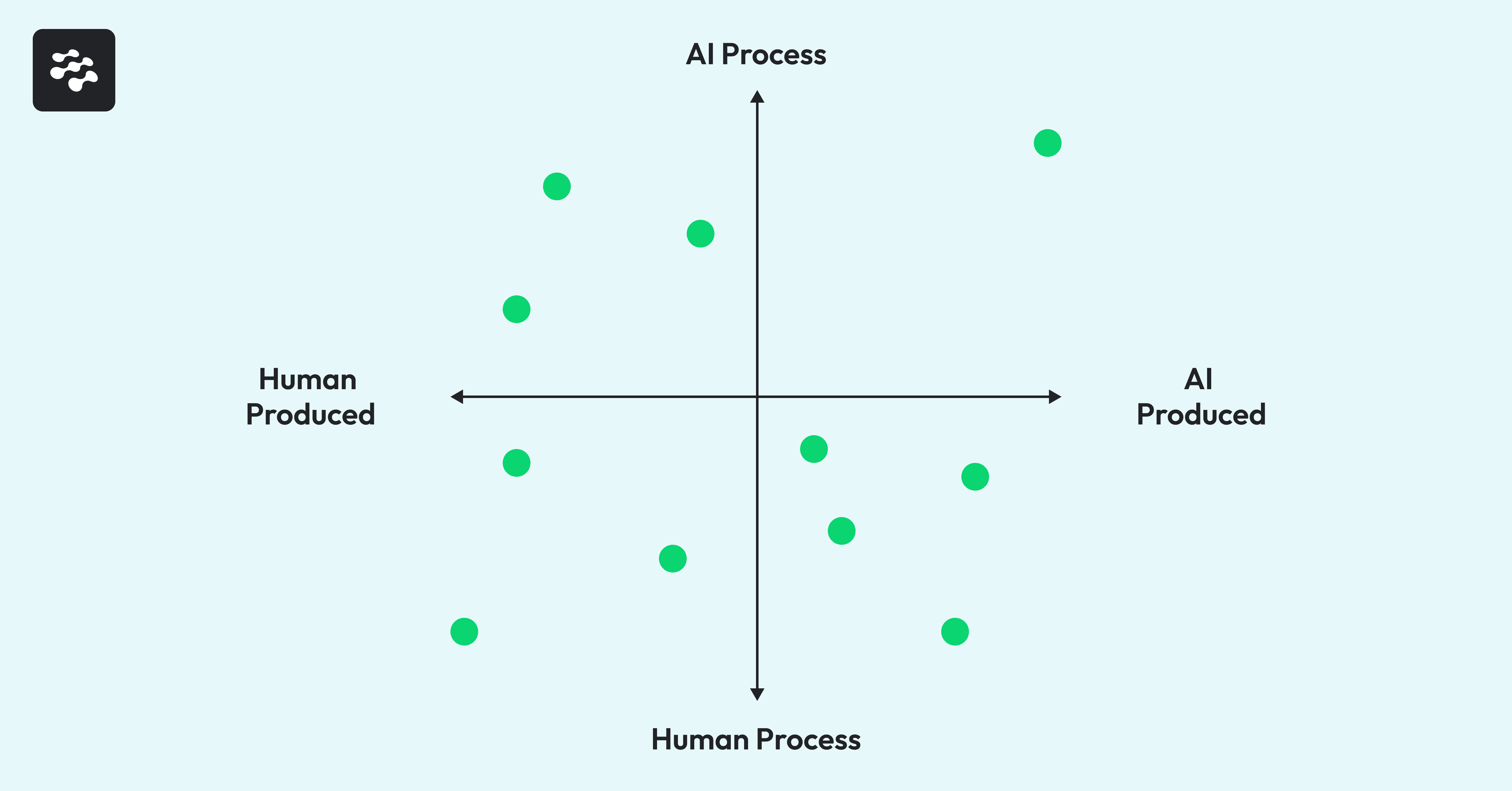

Measuring Your AI Journey by Tracking Progress Over Time

One practical application of this framework is visualizing an organization's AI adoption trajectory over time. Rather than simply tracking which tools have been rolled out, this measures the fundamental shift in how work gets done.

When tracking your progress, measuring both dimensions independently is essential because movement along one axis doesn’t guarantee progress along the other. Look for outliers because they may reveal individuals or teams who lead or resist the transformation. Tracking different work types separately can also be helpful since routine tasks often shift toward AI assistance more quickly than novel or complex challenges. By taking this quantifiable approach, you can turn abstract conversations about “AI adoption” into concrete, actionable insights that reveal how your organization is evolving.
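One way to act on that advice is sketched here in Python, under stated assumptions: average each team's sampled PR scores per axis (0 = human, 1 = AI), then flag teams that sit far from the rest on each axis separately. The `axis_outliers` helper, the z-score method, the 1.5 threshold, and the team data are all hypothetical choices; with only a handful of teams, eyeballing the plotted points may serve just as well.

```python
from statistics import mean, pstdev


def axis_outliers(team_means: dict[str, float], threshold: float = 1.5) -> list[str]:
    """Teams whose mean on a single axis lies more than `threshold` population
    standard deviations from the average of all team means on that axis."""
    values = list(team_means.values())
    center, spread = mean(values), pstdev(values)
    if spread == 0:
        return []  # every team scores the same on this axis
    return sorted(
        team for team, value in team_means.items()
        if abs(value - center) / spread > threshold
    )


# Hypothetical per-team averages from sampled PRs (0 = human, 1 = AI).
code_axis = {"payments": 0.15, "web": 0.62, "mobile": 0.55, "data": 0.50, "infra": 0.48}
process_axis = {"payments": 0.30, "web": 0.20, "mobile": 0.25, "data": 0.22, "infra": 0.75}

# Each axis is checked on its own, so a team can stand out on one but not the other.
print(axis_outliers(code_axis))     # ['payments']
print(axis_outliers(process_axis))  # ['infra']
```

In this made-up data, "payments" stands out for writing mostly human code while "infra" stands out for heavy process automation, which is exactly the kind of divergence a single combined score would hide.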

- Establish a baseline: Sample 20-30 pull requests from your repositories each quarter. Evaluate each PR across two dimensions:

  - What percentage of the code was AI-generated vs. human-written?

  - To what degree did AI vs. humans manage the process (planning, testing, review, deployment)?

- Plot your data: Map these samples on the chart to create a heat map of your current state. This visualization reveals patterns that might otherwise remain hidden.

- Track velocity: Measure where you are and how quickly you're moving through the quadrants. Different teams will naturally progress at different rates based on their context.
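The three steps above could be wired together roughly as follows. This is a minimal Python sketch, assuming a reviewer has scored each sampled PR's AI share of code (x) and AI involvement in each process stage (y) on a 0 to 1 scale. Averaging the four stages equally, the 4×4 heat-map grid, and using the centroid as a team's position are illustrative assumptions, and every function name here is hypothetical.

```python
import random
from collections import Counter
from statistics import mean
from typing import Optional

Point = tuple[float, float]  # (ai_code_share, ai_process_share), both in [0, 1]

# The four process stages named in the baseline step.
STAGES = ("planning", "testing", "review", "deployment")


def process_score(stage_scores: dict[str, float]) -> float:
    """Y-axis value: unweighted mean of AI involvement per stage (0 = human, 1 = AI)."""
    return mean(stage_scores[stage] for stage in STAGES)


def sample_prs(pr_ids: list[str], k: int = 25, seed: Optional[int] = None) -> list[str]:
    """Draw this quarter's random sample of PRs (default k sits in the 20-30 range)."""
    rng = random.Random(seed)
    return rng.sample(pr_ids, min(k, len(pr_ids)))


def heat_map(points: list[Point], bins: int = 4) -> Counter:
    """Count samples per grid cell (col, row); col 0 = left, row 0 = bottom."""
    def cell(value: float) -> int:
        return min(int(value * bins), bins - 1)  # a score of exactly 1.0 lands in the last cell
    return Counter((cell(x), cell(y)) for x, y in points)


def centroid(points: list[Point]) -> Point:
    """Average position of a set of samples on the matrix."""
    return (mean(x for x, _ in points), mean(y for _, y in points))


def velocity(previous: list[Point], current: list[Point]) -> Point:
    """Quarter-over-quarter centroid movement, reported separately per axis."""
    (px, py), (cx, cy) = centroid(previous), centroid(current)
    return (cx - px, cy - py)


# Hypothetical scored samples for two consecutive quarters.
q1 = [(0.1, 0.1), (0.3, 0.2), (0.6, 0.1)]
q2 = [(0.5, 0.2), (0.7, 0.3), (0.8, 0.25)]
print(heat_map(q2))
dx, dy = velocity(q1, q2)
print(f"code axis: {dx:+.2f}, process axis: {dy:+.2f}")  # code axis: +0.33, process axis: +0.12
```

Reporting the two deltas separately, rather than a single distance, follows the point that movement along one axis doesn't guarantee progress along the other.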

For example, many software engineering teams begin their AI journey with code completion tools, which naturally drive progress toward the bottom-right quadrant. After these tools are in place, it can be valuable to set goals around implementing AI-powered code review and automated testing workflows to create a more balanced distribution toward the upper-right quadrant.

This kind of evolution often reveals important insights: while developers tend to embrace AI for code generation quickly, adopting AI for process automation usually requires more intentional change management and training. Without this tracking, teams may mistakenly assume their AI transformation is complete after adopting coding assistants alone.

The Rapid Shift to AI-Orchestrated Workflows

While the matrix suggests gradual progression, we increasingly see teams across knowledge domains, including marketing, technical documentation, and research, bypass intermediate stages entirely. Once the activation energy required to implement AI-driven workflows drops below a certain threshold, organizations are incentivized to replace entire human-managed processes rather than incrementally augment them. The economics become compelling: why spend months gradually integrating AI assistance when reimagining the workflow with AI at the center delivers immediate competitive advantages?

This "rip and replace" approach is particularly evident in domains where outcomes are easily measurable and processes are well defined. Marketing teams overhaul campaign management by replacing traditional A/B testing with AI-driven content optimization engines. Research teams replace traditional literature review processes with AI systems that continuously monitor, analyze, and synthesize new publications. Technical writers discard conventional documentation workflows in favor of AI-powered systems that automatically generate, update, and localize documentation based on code changes. In each case, organizations find that once the implementation barrier falls below a critical point, the incentive structures push toward rapid, comprehensive transformation rather than the gradual evolution our progression models might suggest.

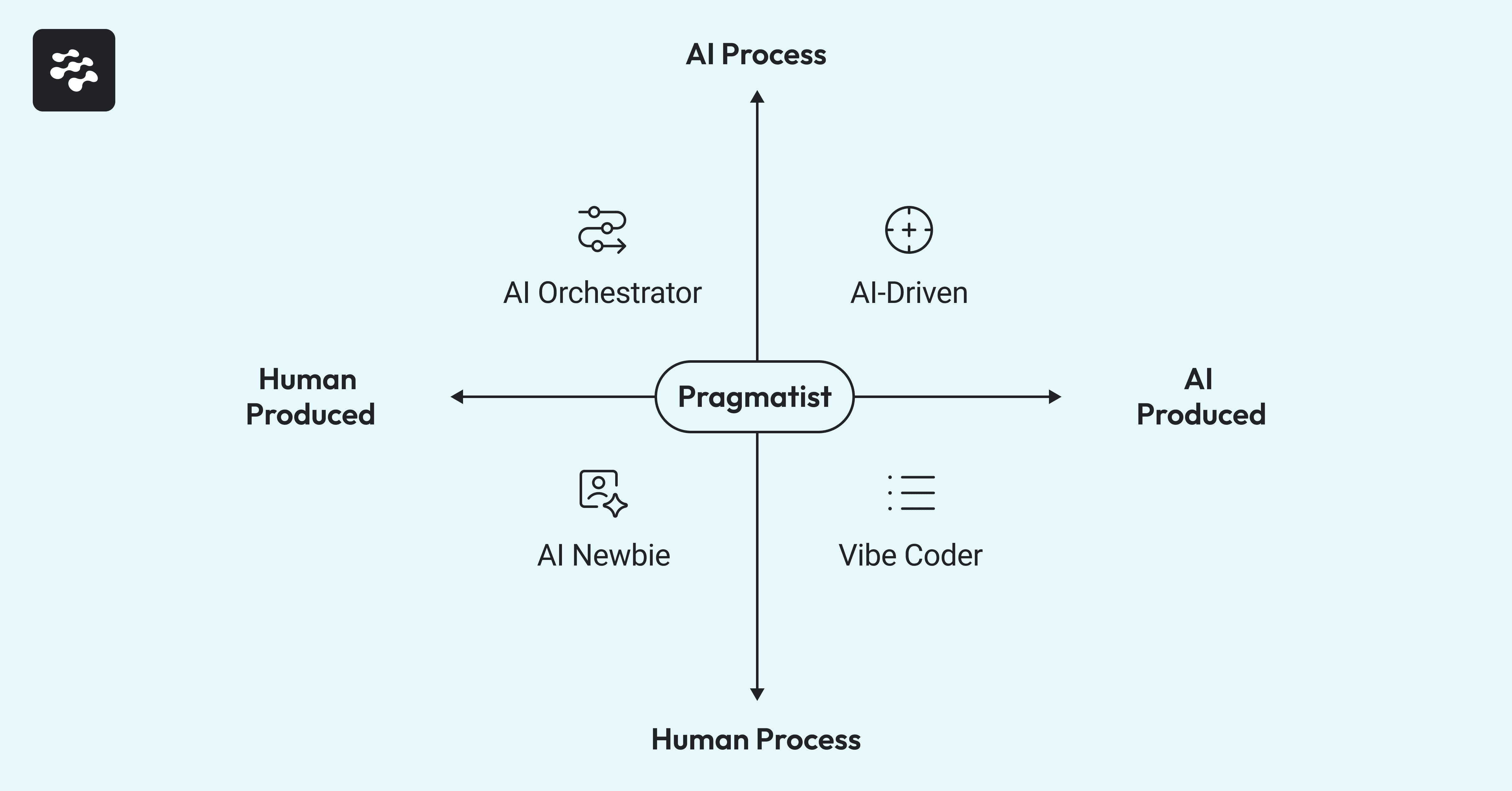

Where Do You Fit? Understanding the Five Categories

Understanding where your team or organization sits within the AI Collaboration Matrix provides crucial insights for planning your next steps. Each category presents distinct characteristics, opportunities, and challenges that require tailored strategies. When moving between categories, balance technical implementation with cultural adaptation. Different teams may occupy different positions on the matrix at the same time; leverage this diversity to accelerate learning across your organization.

AI Newbie (Bottom-Left Quadrant)

Characteristics: This quadrant features minimal AI integration in content creation and workflow management, with high reliance on manual quality control and traditional knowledge transfer methods.

Recommended Focus Areas: Start with low-risk, high-impact AI content generation opportunities, identify repetitive tasks for initial automation, invest in AI literacy training, and implement clear metrics to measure the impact of initial AI implementations.

Pitfalls to Avoid: Beware of analysis paralysis from overly comprehensive AI strategies, underinvesting in change management, setting unrealistic expectations about immediate productivity gains, and failing to establish baseline measurements.

Vibe Coder (Bottom-Right Quadrant)

Characteristics: This approach leverages significant AI contribution to content/code generation while maintaining traditional human-led workflows for review and monitoring, resulting in increased output velocity but potential process bottlenecks.

Recommended Focus Areas: Develop robust quality assurance for AI-generated content, explore process automation for review steps, create feedback loops to improve AI generation quality, and optimize human-AI collaboration patterns.

Pitfalls to Avoid: Guard against over-reliance on AI-generated content without sufficient quality controls, neglecting to update evaluation criteria, creating process bottlenecks as generation outpaces review capacity, and failing to document effective prompting techniques.

AI Orchestrator (Top-Left Quadrant)

Characteristics: This quadrant combines strong human craftsmanship in content creation with sophisticated automation of workflow orchestration, enhancing consistency in delivery timelines while reducing coordination overhead.

Recommended Focus Areas: Define clear handoff protocols between human creators and AI process managers, establish appropriate override mechanisms, develop metrics evaluating process efficiency beyond output volume, and extend AI process management to increasingly complex decision scenarios.

Pitfalls to Avoid: Avoid creating overly rigid automated processes, undervaluing essential human creativity, failing to maintain human understanding of AI-managed processes, and neglecting opportunities to introduce AI into content creation incrementally.

AI Pragmatist (Center)

Characteristics: Balanced AI involvement across creation and workflows, with humans focused on strategy and exceptions, enabling continuous optimization that yields measured improvements in both quality and efficiency.

Recommended Focus Areas: Develop sophisticated oversight mechanisms with clear autonomy boundaries, focus on strategic differentiation beyond automation capabilities, and build adaptive governance frameworks that evolve with changing AI capabilities.

Pitfalls to Avoid: Guard against losing institutional knowledge, creating inscrutable "black box" workflows, and overlooking opportunities for human creativity to add unique value beyond what AI can provide.

AI-Driven (Top-Right Quadrant)

Characteristics: AI orchestrates content creation and workflow management while humans focus on strategic direction, enabling data-driven optimization that yields significant productivity and consistency gains.

Recommended Focus Areas: Develop sophisticated AI oversight mechanisms with clear autonomy boundaries, focus on strategic differentiation beyond automation capabilities, build adaptive governance frameworks, and share AI knowledge to expand institutional capabilities.

Pitfalls to Avoid: Guard against losing institutional knowledge, creating inscrutable "black box" workflows, overlooking opportunities for human creativity to add unique value, and failing to maintain critical human capabilities.
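If you want to assign a category programmatically, a simple rule is to check the central band first and fall back to the four quadrants. The Python sketch below is one hypothetical mapping: the 0.5 split and the ±0.15 width of the AI Pragmatist band are assumptions for illustration, not thresholds defined by the matrix.

```python
def category(ai_code_share: float, ai_process_share: float, center_radius: float = 0.15) -> str:
    """Map a position on the matrix (both axes 0 = human, 1 = AI) to one of the
    five categories. The split and band width are illustrative assumptions."""
    # Check the balanced center first so it takes priority over the quadrants.
    if abs(ai_code_share - 0.5) <= center_radius and abs(ai_process_share - 0.5) <= center_radius:
        return "AI Pragmatist"
    ai_produced = ai_code_share >= 0.5    # right half: AI-produced work
    ai_managed = ai_process_share >= 0.5  # top half: AI-managed process
    if ai_produced and ai_managed:
        return "AI-Driven"
    if ai_produced:
        return "Vibe Coder"
    if ai_managed:
        return "AI Orchestrator"
    return "AI Newbie"


print(category(0.6, 0.2))    # Vibe Coder
print(category(0.55, 0.45))  # AI Pragmatist
```

Feeding it a team's centroid rather than individual PRs gives a team-level label; feeding it individual PRs shows how mixed a team's work really is.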

Want to know where you fall on this chart? Take our 30-second quiz to find out.

Prepare for the Agentic AI Future

We’re standing at the threshold of a massive shift in how software is built. AI is no longer just a tool for boosting productivity on the margins; it’s rapidly becoming an autonomous contributor in the software delivery process. The organizations that embrace this shift early, with structure and intention, will be the ones that lead the way.

The AI Collaboration Matrix gives you a map for this journey. It helps you visualize where your teams are today and where they’re headed. More importantly, it enables you to make smarter decisions about how and where to deploy AI across your workflows, whether generating code, automating reviews, or orchestrating complex delivery pipelines.

But this isn’t just about tracking progress. The framework helps ensure your AI adoption is purposeful, consistent, and scalable. Without one, AI adoption can become fragmented, leading to inefficiencies, frustration, and missed opportunities. With it, you can guide your teams, set clear expectations, and unlock the full potential of AI-powered software delivery.

The future of software engineering is agentic. Will your organization be ready to thrive in it?

Take the Next Step

Join LinearB for Beyond Copilot: Gaining the AI Advantage. This is a 35-minute virtual workshop designed for engineering leaders looking to take the next step in AI adoption. We’ll explore how top-performing enterprise teams are orchestrating AI beyond the IDE to transform how work gets done. You’ll discover where your team stands, identify high-impact areas to introduce agentic AI, and hear real stories from teams already reaping the benefits. Don’t miss this opportunity to learn how to unlock strategic value from your AI investment.