Agentic AI is beginning to reshape how software teams build, deploy, and maintain code. These AI-driven systems assist developers, take action, analyze data, and make decisions with minimal human input. As AI agents become more sophisticated, they will fundamentally alter software development processes, from writing and reviewing code to automating deployments and responding to incidents.

While this shift promises massive efficiency gains, it also introduces new risks that engineering leaders must navigate. In this article, we’ll explore the five most significant ways Agentic AI will disrupt the software delivery lifecycle and what you can do to stay ahead.

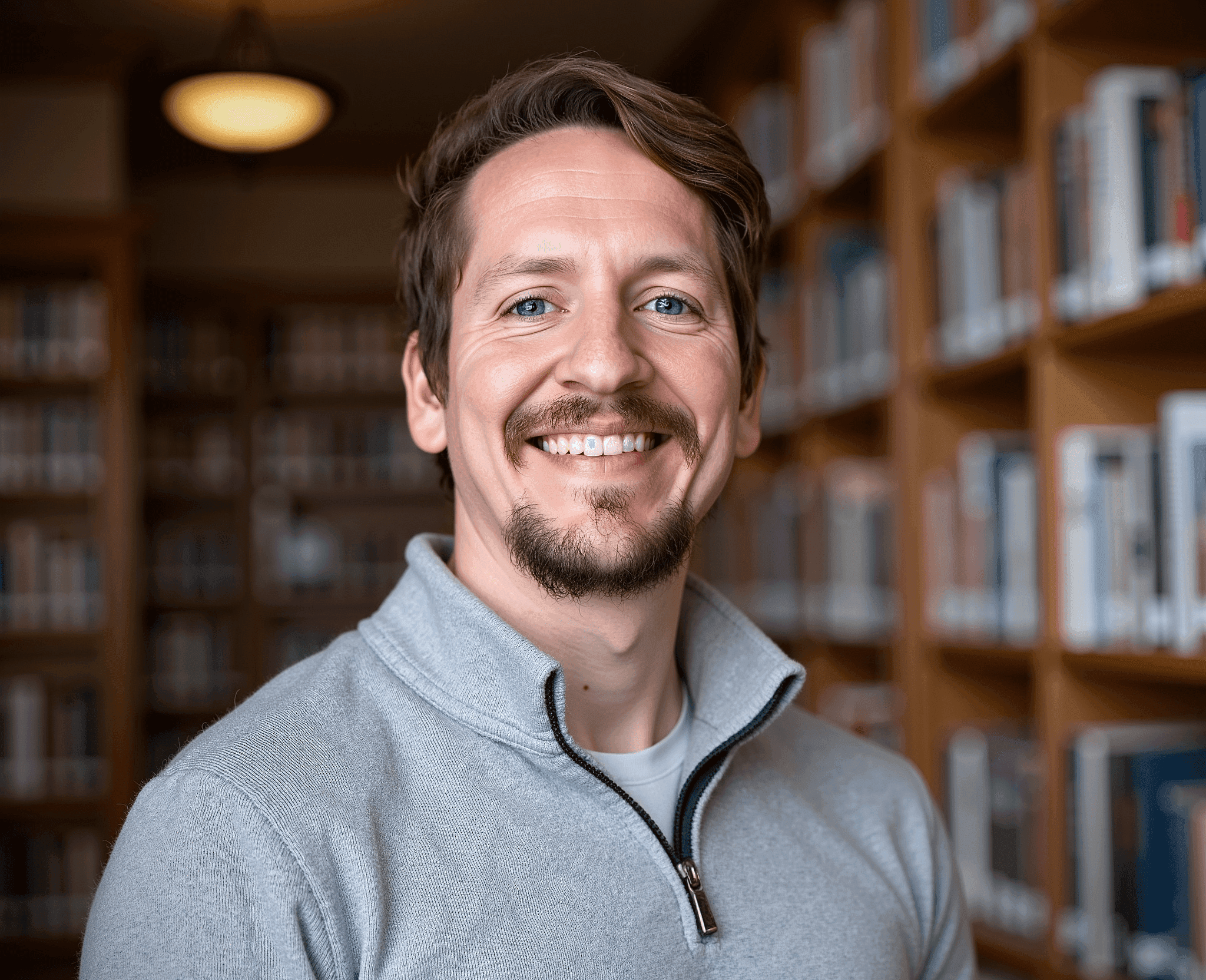

What is Agentic AI?

Agentic AI refers to AI systems capable of using external tools to accomplish tasks with some autonomy in decision-making and task execution. AI agents can proactively analyze situations, make decisions, and take action based on objectives, constraints, and feedback loops. These systems operate with a degree of self-governance, adapting to dynamic environments and optimizing workflows with minimal human intervention.

Here are the key characteristics of an AI Agent:

- Generative AI - They can create text, images, or other assets related to software development.

- External interactions - They can communicate with other systems, APIs, or users to execute complex workflows.

- Learning and Adaptation - AI agents often include feedback mechanisms, such as reinforcement learning, that help them improve over time.

- Goal-oriented behavior - They can operate based on designed objectives and refine their actions to achieve the desired outcome.

- Autonomy - AI agents can perform tasks with minimal human intervention, anticipate needs, and respond to external stimuli.
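
To make these characteristics concrete, here is a minimal sketch of an agent loop. It is an illustration under stated assumptions, not how any particular product works: the tools `run_tests` and `apply_fix` are hypothetical stand-ins for real APIs, and `choose_action` is a hard-coded placeholder for the decision step that a real agent would delegate to a model.

```python
from typing import Callable

# Hypothetical tools: plain functions standing in for real APIs or CLIs.
def run_tests(state: dict) -> dict:
    # Pretend the test suite fails until a fix has been applied.
    return {**state, "tests_pass": state.get("fix_applied", False)}

def apply_fix(state: dict) -> dict:
    return {**state, "fix_applied": True}

TOOLS: dict[str, Callable[[dict], dict]] = {
    "run_tests": run_tests,
    "apply_fix": apply_fix,
}

def choose_action(state: dict) -> str:
    """Placeholder for the decision step (a real agent would call a model here)."""
    if "tests_pass" not in state:
        return "run_tests"
    if not state["tests_pass"] and not state.get("fix_applied"):
        return "apply_fix"
    return "run_tests"

def run_agent(max_steps: int = 5) -> tuple[dict, list[str]]:
    """Loop until the goal (passing tests) is met or the step budget runs out."""
    state: dict = {}
    history: list[str] = []
    for _ in range(max_steps):
        if state.get("tests_pass"):
            break  # goal reached
        action = choose_action(state)
        state = TOOLS[action](state)  # external interaction through a tool
        history.append(action)        # record of actions taken so far
    return state, history

state, history = run_agent()
print(history)
```

The `max_steps` budget is the constraint here: even a simple loop like this should have a hard stop so that an agent that never reaches its goal does not run indefinitely.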

The Impact of Agentic AI on Software Development: Top 5 Things AI Agents Will Disrupt

As Agentic AI continues to evolve, it will reshape workflows, automate complex tasks, and challenge traditional engineering practices. Let’s explore the five most significant ways these AI agents will disrupt the software delivery lifecycle.

Automated Code Generation and Refactoring

Refactoring large codebases is tedious and error-prone. Developers spend significant time manually reviewing code for inefficiencies, which leads to inconsistent results and accumulating technical debt. Keeping up with evolving best practices adds further complexity, making it difficult to maintain code quality at scale.

Agentic AI will streamline this process by autonomously analyzing code, identifying inefficiencies, and refactoring based on best practices. These AI agents ensure consistency across teams, but they also introduce risks. Developers may distrust AI-generated changes, modifications could disrupt workflows, and over-reliance may erode deep technical expertise.

To mitigate these risks, organizations should implement AI-assisted code review policies that maintain human oversight for high-impact changes. They should also ensure developers upskill alongside AI adoption, so that AI enhances productivity without diminishing engineering expertise.
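
One way such a policy could be expressed is as a small rule that decides when an AI-generated change needs a human reviewer. The sketch below is an assumption-laden example: the path patterns, the 50-line limit, and the `Change` type are all hypothetical values chosen for illustration, not a recommended standard.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase

# Assumed policy values; tune these to your own repository.
HIGH_IMPACT_PATTERNS = ["migrations/*", "infra/*", "*/auth/*"]
MAX_AUTO_MERGE_LINES = 50

@dataclass
class Change:
    author_is_agent: bool
    files: list[str]
    lines_changed: int

def requires_human_review(change: Change) -> bool:
    """Return True when the change must be approved by a person."""
    if not change.author_is_agent:
        return True  # human-authored changes follow the normal review process
    touches_high_impact = any(
        fnmatchcase(path, pattern)
        for path in change.files
        for pattern in HIGH_IMPACT_PATTERNS
    )
    return touches_high_impact or change.lines_changed > MAX_AUTO_MERGE_LINES

small_docs_change = Change(author_is_agent=True, files=["docs/readme.md"], lines_changed=3)
auth_change = Change(author_is_agent=True, files=["src/auth/login.py"], lines_changed=3)
print(requires_human_review(small_docs_change), requires_human_review(auth_change))
```

Keeping the rule explicit and version-controlled makes it easier for a team to see, and debate, exactly where automation stops and human judgment begins.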

![Automated PR review suggestion from LinearB AI via gitStream, highlighting a bug in a Python file. The issue involves unsafe type coercion with astype(str), applied inconsistently across different DataFrames. The suggested fix ensures proper column reference and consistent string conversion. The review includes a code snippet with a corrected assignment for df_issues_summaries["issue_provider_id"]. Enhancing code quality and reducing errors in data processing workflows.](https://assets.linearb.io/image/upload/v1740677729/git_Stream_AI_code_review_84a71b56e5.png)

How Agentic AI Enhances CI/CD Pipelines

Managing CI/CD pipelines requires constant tuning, yet diagnosing test failures, adjusting configurations, and scaling across teams often slow deployment cycles. As organizations grow, ensuring efficiency while minimizing human errors becomes increasingly complex.

Agentic AI will optimize CI/CD by dynamically adjusting build configurations, detecting flaky tests, and managing rollback decisions. These autonomous systems improve deployment efficiency, but reduced human oversight can complicate failure diagnosis, allow approvals to be bypassed, and increase regression risks.

To address these challenges, organizations should adopt AI observability tools for transparency, establish rollback safeguards that keep humans able to intervene, and implement hybrid approval models that balance automation with oversight. Together, these measures build confidence in AI-driven CI/CD.
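
Flaky test detection is one of the more tractable pieces of this picture. A common heuristic, shown in the sketch below, is to flag any test that has both passed and failed on the same commit, since the code did not change between runs. The record format (`test_name`, `commit_sha`, `passed`) is an assumption made for this example; real CI systems expose results in their own formats.

```python
from collections import defaultdict

def find_flaky_tests(runs: list[tuple[str, str, bool]]) -> set[str]:
    """Flag tests that produced both outcomes on the same commit.

    Each run is a (test_name, commit_sha, passed) tuple.
    """
    outcomes: dict[tuple[str, str], set[bool]] = defaultdict(set)
    for test_name, commit_sha, passed in runs:
        outcomes[(test_name, commit_sha)].add(passed)
    # Two distinct outcomes for one (test, commit) pair means pass AND fail.
    return {test for (test, _sha), seen in outcomes.items() if len(seen) == 2}

runs = [
    ("test_login", "abc123", True),
    ("test_login", "abc123", False),  # same commit, different result
    ("test_cart", "abc123", False),
    ("test_cart", "def456", True),    # different commit, so not flagged
]
print(find_flaky_tests(runs))
```

A test that fails on one commit and passes on the next is not flagged, because the change in outcome may reflect a genuine fix rather than nondeterminism.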

Incident Response and Debugging

Debugging production incidents is time-consuming and stressful, requiring engineers to sift through logs and coordinate across teams. As systems scale, the need for faster, more efficient solutions grows.

Agentic AI will accelerate incident response by detecting anomalies, correlating logs across distributed systems, and even applying fixes autonomously. While this reduces downtime, AI-generated patches could introduce new issues, requiring robust validation. Security policies must also evolve to audit AI-driven resolutions.

To ensure reliability, organizations should implement AI-human collaboration frameworks in which engineers retain approval authority. Audit logs of AI actions provide transparency, while testing in controlled environments refines automation strategies before full deployment.
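
As a rough illustration of how detection and approval can fit together, the sketch below flags an anomalous metric reading with a simple z-score check and then refuses to act until an engineer has approved. The z-score approach, the threshold of 3 standard deviations, and the function names are assumptions for this example; production systems typically use more sophisticated detection.

```python
from statistics import mean, stdev

def is_anomalous(history: list[float], current: float, threshold: float = 3.0) -> bool:
    """Flag `current` when it sits more than `threshold` standard deviations above the baseline."""
    if len(history) < 2:
        return False  # not enough data to form a baseline
    baseline, spread = mean(history), stdev(history)
    if spread == 0:
        return current > baseline
    return (current - baseline) / spread > threshold

def handle_reading(history: list[float], current: float, approved_by_engineer: bool) -> str:
    """Decide the next step; a fix is only applied after explicit human approval."""
    if not is_anomalous(history, current):
        return "ok"
    return "apply_fix" if approved_by_engineer else "await_approval"

error_rates = [1.0, 1.2, 0.9, 1.1, 1.0]  # hypothetical errors per minute
print(handle_reading(error_rates, 5.0, approved_by_engineer=False))
```

The important design choice is that detection and remediation are separate steps, so the agent can surface a proposed fix quickly while the decision to apply it stays with a person.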

Code Review and Governance

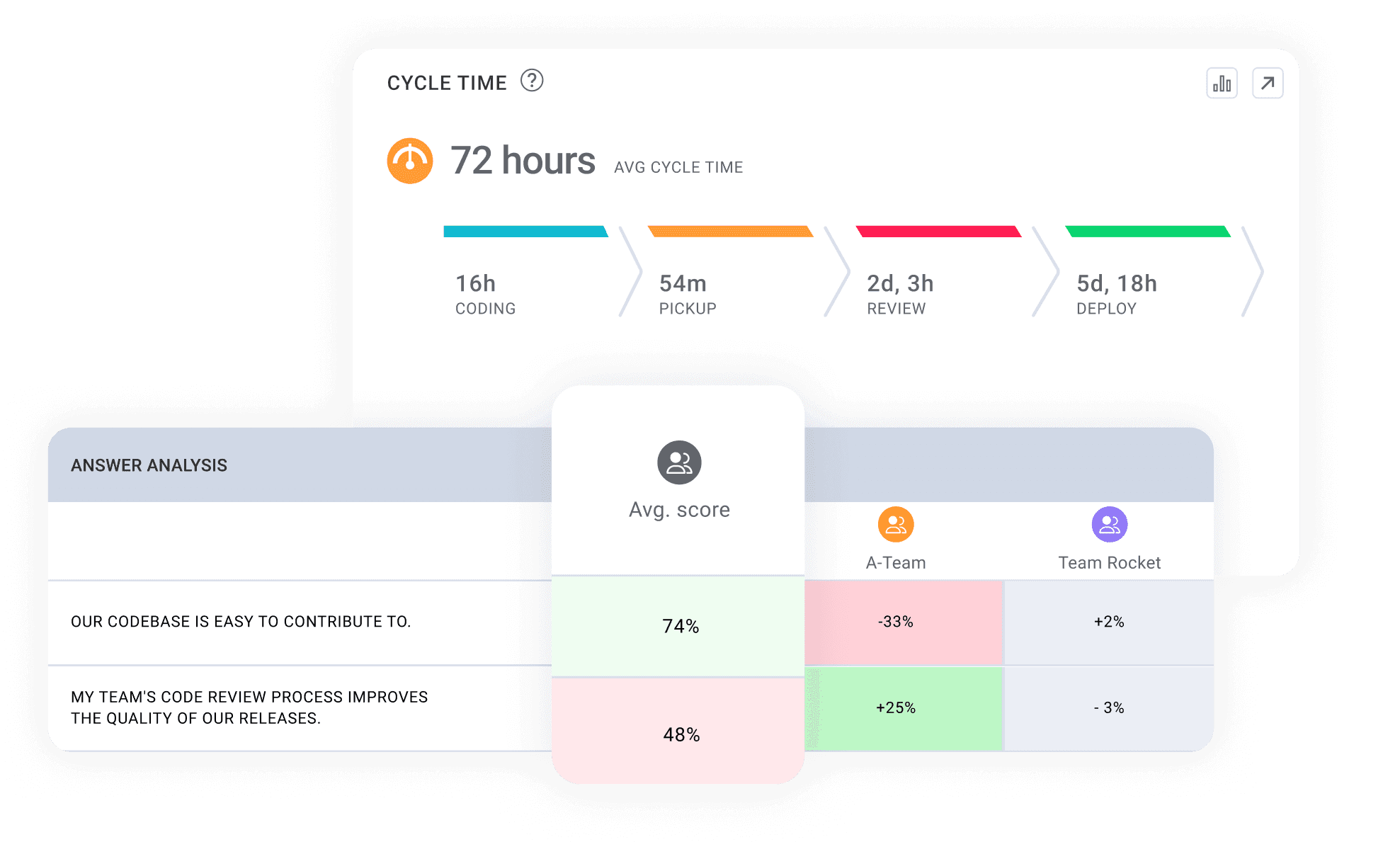

Traditional code reviews are inconsistent and inefficient, leading to missed vulnerabilities, slower cycles, and bottlenecks. Scaling high-quality reviews without adding manual overhead remains a challenge.

Agentic AI will automate key aspects of code review, dynamically assigning reviewers, assessing risk, enforcing standards, and detecting security vulnerabilities. However, rigid AI feedback may cause developer pushback, excessive alerts can lead to fatigue, and overly strict governance may stifle creativity.

To mitigate these risks, organizations should fine-tune AI models to align with team workflows, introduce review thresholds for high-risk changes, and filter out false positives to maintain a productive development environment.
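
A simple version of this filtering can be sketched as a triage step that drops low-confidence findings and escalates only the high-severity ones. Everything specific here is an assumption for illustration: the `Finding` fields, the per-severity confidence cutoffs, and the idea that the review tool reports a confidence score at all.

```python
from dataclasses import dataclass

@dataclass
class Finding:
    rule: str
    severity: str      # "low", "medium", or "high"
    confidence: float  # 0.0 to 1.0, assumed to be reported by the review tool

# Assumed cutoffs: keep high-severity findings even at modest confidence,
# but require strong confidence before surfacing low-severity ones.
MIN_CONFIDENCE = {"high": 0.3, "medium": 0.6, "low": 0.8}

def triage(findings: list[Finding], suppressed_rules: frozenset = frozenset()):
    """Return the findings worth showing and whether a human reviewer is required."""
    kept = [
        f for f in findings
        if f.rule not in suppressed_rules
        and f.confidence >= MIN_CONFIDENCE[f.severity]
    ]
    needs_human = any(f.severity == "high" for f in kept)
    return kept, needs_human

findings = [
    Finding("sql-injection", "high", 0.4),
    Finding("naming-style", "low", 0.5),       # dropped: below the low-severity cutoff
    Finding("unused-import", "medium", 0.9),   # dropped: rule suppressed by the team
]
kept, needs_human = triage(findings, frozenset({"unused-import"}))
print([f.rule for f in kept], needs_human)
```

The `suppressed_rules` set is where developer feedback can enter the loop: rules that a team repeatedly dismisses as noise can be turned off without touching the rest of the policy.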

Developer Workflows

Developers lose valuable time on administrative tasks, context switching, and manual backlog grooming, slowing productivity and increasing friction.

AI-driven assistants will reduce this burden by automating documentation, managing tickets, and prioritizing work based on impact. However, AI-generated priorities may misalign with business goals, and over-reliance could reduce team adaptability.

Organizations should establish AI-human collaboration frameworks to balance efficiency and control, regularly review AI recommendations against strategic objectives, and incorporate developer feedback to refine AI-driven optimizations over time.

Now Is the Time to Prepare for the Agentic Future

Agentic AI is poised to transform the software delivery lifecycle, driving unprecedented efficiency and automation. However, its adoption comes with challenges. Without proper oversight, AI-driven decisions can introduce risk, disrupt workflows, and create blind spots in engineering processes. To harness the full potential of AI agents while maintaining control, organizations need a structured approach to governance, risk management, and policy enforcement.

LinearB helps engineering leaders establish consistent policies, monitor AI-driven workflows, and ensure that automation enhances productivity without sacrificing quality or security. With built-in oversight and intelligent automation, LinearB provides the visibility and control needed to roll out Agentic AI safely and effectively. Ready to take the next step? Learn how LinearB can help you manage AI adoption with confidence.