The software industry is constantly evolving, and developers frequently need to update legacy code to maintain compatibility with new technologies. One such challenge is migrating code from one format to another, a process that has traditionally been tedious, time-consuming, and error-prone. However, recent advancements in AI, particularly Large Language Models (LLMs), are changing how these migrations are handled.

A recent whitepaper from Google offers fascinating insights into how the company is leveraging LLMs to automate code migrations at scale.

To summarize: the LLM generated nearly 75% of the code changes, and the developers involved estimated that it cut the time spent on migrations by about 50%. Let's look at the key findings and implications of this work.

Leveraging AI for Routine System Migrations

Google chose a relatively routine task for its experiment: transitioning identifiers from 32-bit to 64-bit integers. The change is functionally simple, but it can require modifications across hundreds of files in multiple programming languages.
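
To make the size difference concrete, here is a minimal Python sketch of what happens when an identifier outgrows a signed 32-bit field. The `fits` helper and the `next_id` value are hypothetical and only illustrate the range limits; the whitepaper's actual identifiers and languages are not shown here.

```python
import struct

INT32_MAX = 2**31 - 1  # largest signed 32-bit value: 2,147,483,647
INT64_MAX = 2**63 - 1  # largest signed 64-bit value


def fits(value: int, fmt: str) -> bool:
    """Return True if value can be packed with the given struct format."""
    try:
        struct.pack(fmt, value)
        return True
    except struct.error:
        return False


# Hypothetical identifier that is one past the 32-bit range.
next_id = INT32_MAX + 1

fits_32 = fits(next_id, "<i")  # "<i" = little-endian signed 32-bit
fits_64 = fits(next_id, "<q")  # "<q" = little-endian signed 64-bit
print(fits_32, fits_64)
```

Every place that stores, passes, or serializes such an identifier has to agree on the wider type, which is why a one-line conceptual change fans out across so many files.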

Traditional approaches to such migrations typically involve:

- Using regular expressions to find potential code references

- Manually inspecting and modifying each reference

- Manually testing changes to ensure correctness

- Managing the frustrating, time-consuming process of finding all instances requiring changes

When done manually, these migrations could take years to complete - as evidenced by a previous similar migration at Google that took approximately two years.
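
As a rough illustration of the regular-expression step in the list above, the following Python sketch scans source text for 32-bit declarations whose names end in "id". The pattern, the `find_candidates` helper, and the sample code are all hypothetical; a real migration would need patterns tuned to each language and codebase.

```python
import re

# Hypothetical pattern: a 32-bit integer type followed by a name ending in "id".
CANDIDATE = re.compile(r"\b(?:int|int32_t|Integer)\s+(\w*[iI]d)\b")


def find_candidates(source: str) -> list[tuple[int, str]]:
    """Return (line_number, identifier) pairs that match the pattern."""
    hits = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for match in CANDIDATE.finditer(line):
            hits.append((lineno, match.group(1)))
    return hits


sample = """\
int userId = lookup(name);
long orderId = next();
int grid = 3;  // unrelated variable, but the pattern still matches
auto copy = userId;  // a real reference that the pattern misses
"""

hits = find_candidates(sample)
print(hits)
```

The sketch reports `userId` and the unrelated `grid`, and it misses the copy on the last line. False positives and missed references like these are what make the manual inspection step so slow.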

Google's Innovative Approach to Leveraging AI

To address these challenges, Google developed an automated system that integrates LLMs into the migration workflow. The system follows a structured process:

- Find Potential References: Using Google's code indexing system (Kythe), the tool identifies direct references to the identifiers being migrated, as well as indirect references up to a distance of five.

- Categorize References: The system classifies identified references into groups based on confidence levels:

  - Not-migrated: Locations identified with 100% confidence as not yet migrated

  - Irrelevant: Locations determined to be irrelevant with 100% confidence

  - Relevant: Locations that need further investigation

  - Left-over: Locations intentionally set aside for manual developer review to determine if migration is required or to mark explicitly as irrelevant.

- Change & Validate: This is where the LLM comes into play. The system uses Google's Gemini model (fine-tuned on internal Google code) to suggest appropriate code changes, which are then validated through multiple steps to ensure correctness.
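
The first step in the list above, collecting references up to a distance of five, can be pictured as a bounded breadth-first search. The sketch below is an assumption-laden illustration: it uses a plain dictionary as the reference graph rather than Kythe's actual API, treats a direct reference as distance 1, and the symbol names are hypothetical.

```python
from collections import deque


def references_within(
    graph: dict[str, list[str]], start: str, max_distance: int = 5
) -> dict[str, int]:
    """Breadth-first search returning each reachable symbol and its distance."""
    distances = {start: 0}
    queue = deque([start])
    while queue:
        symbol = queue.popleft()
        if distances[symbol] == max_distance:
            continue  # do not expand past the distance limit
        for neighbor in graph.get(symbol, []):
            if neighbor not in distances:
                distances[neighbor] = distances[symbol] + 1
                queue.append(neighbor)
    return distances


# Hypothetical reference graph: each key is referenced by the symbols in its list.
graph = {
    "user_id": ["get_user", "UserRecord.id"],
    "get_user": ["handler"],
    "handler": ["router"],
    "router": ["app"],
    "app": ["main"],
    "main": ["launcher"],  # six hops from user_id, so it is excluded
}

found = references_within(graph, "user_id")
print(found)
```

Bounding the search keeps the candidate set manageable: symbols more than five hops away, such as `launcher` in this sketch, are never considered.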

The validation process is particularly thorough, including checks for successful completion, whitespace-only changes, AST parsing, a "punt" check where the LLM itself determines if the change was necessary, and build/test validations.
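
A chain of checks like this can be sketched as a function that returns the first failure it finds. This is a minimal Python illustration, not Google's implementation: the check order follows the sentence above as an assumption, the whitespace comparison is deliberately crude, the AST check only handles Python source, and the `punt` and `build_and_test` callables are hypothetical stand-ins for the LLM call and the build system.

```python
import ast
from typing import Callable, Optional


def is_whitespace_only(before: str, after: str) -> bool:
    """Crude check: True when the versions match once all whitespace is removed."""
    return "".join(before.split()) == "".join(after.split())


def parses(source: str) -> bool:
    """True when the source is syntactically valid Python."""
    try:
        ast.parse(source)
        return True
    except SyntaxError:
        return False


def validate(
    before: str,
    after: Optional[str],
    punt: Callable[[str, str], bool],
    build_and_test: Callable[[str], bool],
) -> str:
    """Run the checks in order; return the first failure name, or 'ok'."""
    if after is None:
        return "no_completion"
    if is_whitespace_only(before, after):
        return "whitespace_only"
    if not parses(after):
        return "parse_error"
    if punt(before, after):
        return "punted"
    if not build_and_test(after):
        return "build_or_test_failure"
    return "ok"


# Hypothetical stubs: the model never punts and the build always passes.
stubs = dict(punt=lambda b, a: False, build_and_test=lambda a: True)
before = "MAX_ID = 2**31 - 1\n"

results = {
    "missing": validate(before, None, **stubs),
    "spacing": validate(before, "MAX_ID  =  2**31 - 1\n", **stubs),
    "broken": validate(before, "MAX_ID = (2**63 - 1\n", **stubs),
    "good": validate(before, "MAX_ID = 2**63 - 1\n", **stubs),
}
print(results)
```

Ordering the cheap textual checks before the build and test run means most bad suggestions are rejected before any expensive work starts.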

How AI Handled Most of the Migration Workload

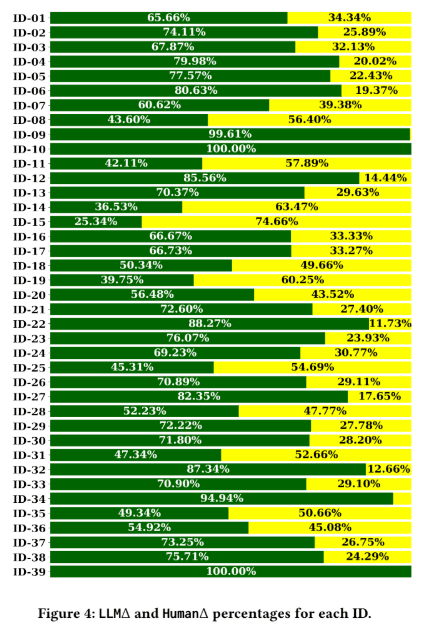

The results of this approach are remarkable. Over twelve months, three developers used the system to perform 39 distinct migrations, resulting in:

- 595 total code changes submitted

- 93,574 total edits across all changes

- 74.45% of code changes were generated by the LLM

- 69.46% of edits performed by the LLM

- An estimated 50% reduction in developer time compared to manual approaches
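
To put the percentages in absolute terms, the short calculation below applies them to the totals in the list above. The resulting counts are approximations derived here, assuming each percentage applies directly to the corresponding total; they are not figures quoted from the whitepaper.

```python
total_changes = 595
total_edits = 93_574

llm_change_share = 0.7445  # 74.45% of code changes
llm_edit_share = 0.6946    # 69.46% of edits

# Approximate counts implied by the reported shares.
llm_changes = round(total_changes * llm_change_share)
llm_edits = round(total_edits * llm_edit_share)
human_edits = total_edits - llm_edits

print(llm_changes, llm_edits, human_edits)
```

Under that assumption, roughly 443 of the 595 changes and about 65,000 of the edits came from the LLM, leaving close to 28,600 edits for the developers.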

The developers reported high satisfaction with the automated system, particularly appreciating how AI helped with continuous progress, system accuracy, and reducing tedious work.

Google’s Strategic AI Rollout is Key to Their Success

Despite its success, the system wasn't without challenges. The authors reported the following issues:

- LLM Context Window Limitations: Large files sometimes couldn't fit entirely within the LLM's context window, requiring manual intervention. Newer versions of Gemini have a much larger context window, which may mitigate this issue.

- LLM Hallucinations: The model occasionally produced irrelevant changes, unnecessary comments, or simply reformatted code without making meaningful changes.

- Language Support Variances: The LLM performed well with languages like Java, C++, and Python, but struggled with less common languages like Dart.

- Pre-existing Issues: Test failures unrelated to the migration sometimes hindered the automated process.

You should always expect your AI initiatives to encounter uncertainties like these and ensure developers are looped in at the right steps to mitigate risk and catch errors.

Google's approach demonstrates a strategic deployment of AI that accelerates software maintenance while maintaining high quality standards. By focusing on routine but essential tasks like code migrations - the "low-hanging fruit" of software engineering - Google has created a structured initiative that delivers immediate value while minimizing risks.

This research shows how AI can transform engineering practices when deployed with careful planning and rigorous validation. The success metrics are compelling: with nearly 75% of code changes generated by the LLM and an estimated 50% reduction in developer time, Google's results suggest that targeted AI applications can substantially improve developer productivity on routine tasks.

How to Apply Google’s Research

This research demonstrates the potential of LLMs to transform software engineering practices. By automating substantial portions of repetitive migration tasks, LLMs can free developers to focus on the more creative and complex aspects of software development. The approach's success suggests similar applications in other code transformation tasks, from library migrations to framework updates, which could save thousands of developer hours across the industry.

For engineering leaders across the industry, this research offers a practical roadmap for AI implementation - start with well-defined, repetitive tasks that consume significant developer time but don't require deep creative thinking. As Google's experience demonstrates, this approach allows teams to build confidence in AI tools while simultaneously freeing skilled developers to focus on innovation and complex problem-solving.

As LLM capabilities continue to advance, particularly with larger context windows and reduced hallucinations, we can expect these benefits to become even more pronounced. Google's methodical approach to AI deployment for system migrations serves as a model for how organizations can effectively integrate these powerful tools into their engineering workflows.
