The engineering productivity measurement landscape has become increasingly crowded with frameworks promising to solve the age-old challenge of understanding and improving developer performance. The latest addition to this space is DX Core 4, a framework that aims to provide a unified approach to measuring developer productivity across four key dimensions: Speed, Effectiveness, Quality, and Impact.

But does DX Core 4 meaningfully advance the conversation around engineering productivity, or does it simply repackage existing concepts with new terminology? In this comprehensive guide, we'll explore what DX Core 4 is, how it compares to established frameworks, and whether it provides the actionable insights engineering leaders need to drive meaningful improvement.

What is DX Core 4?

DX Core 4 is a developer productivity framework developed by DX that organizes engineering metrics into four key categories. The framework positions itself as a unified approach that builds on the DORA, SPACE, and DevEx methodologies while offering a streamlined path to implementation.

Key components and measures of DX Core 4

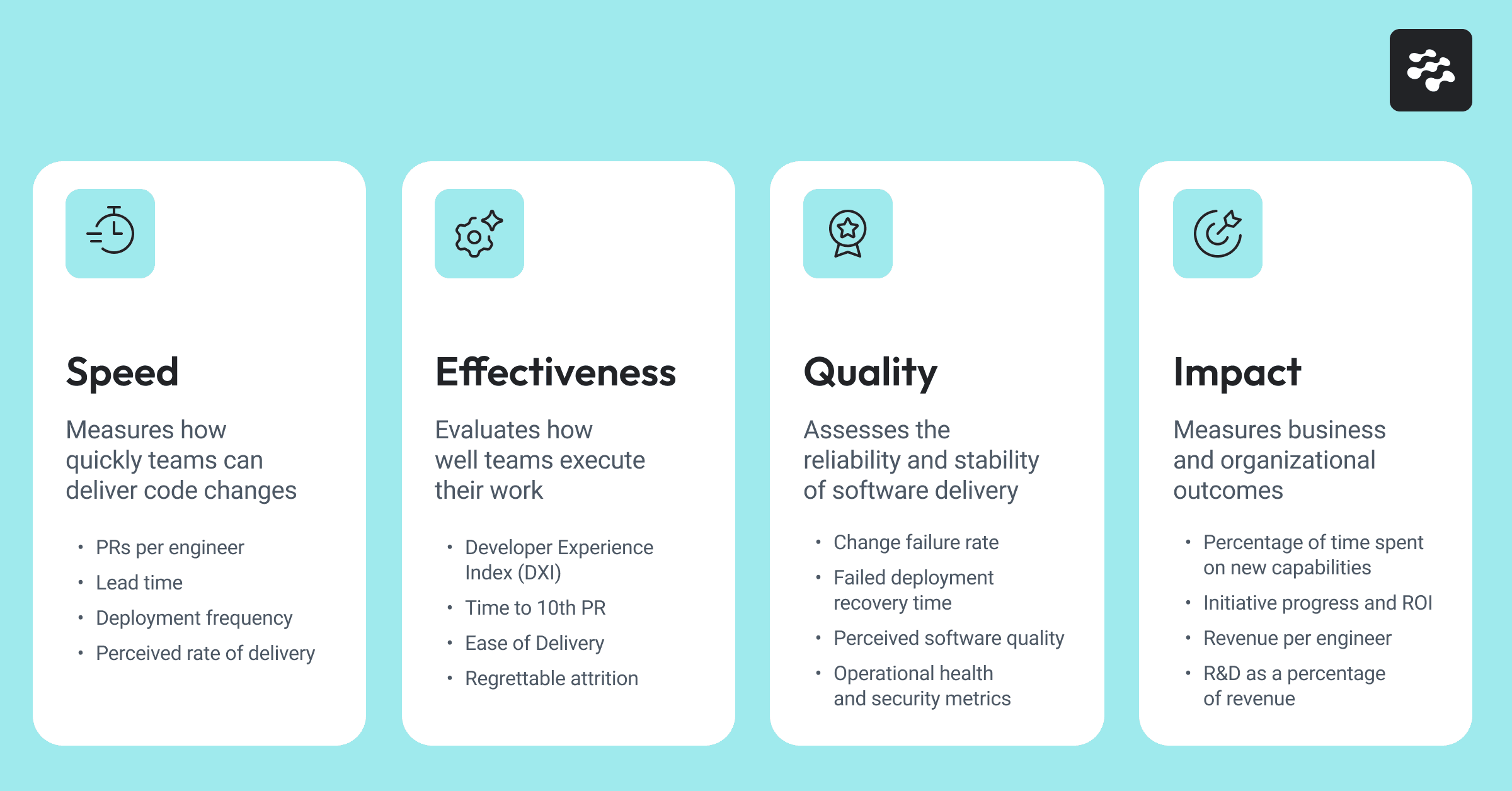

The DX Core 4 framework has four primary dimensions, each with its own set of metrics:

Speed: Measures how quickly teams can deliver code changes

- PRs per engineer – The number of pull requests the average engineer submits over a specified time.

- Lead time – The average time from the start of work on a code change until it is deployed to production.

- Deployment frequency – The number of times code is deployed to production within a given period.

- Perceived rate of delivery – The rate at which team members subjectively feel they are shipping valuable work to users.

Effectiveness: Evaluates how well teams execute their work

- Developer Experience Index (DXI) – A proprietary measurement based on 40+ survey questions.

- Time to 10th PR – The number of days it takes a new engineer to submit their tenth pull request.

- Ease of delivery – The subjective assessment of how easily engineers can deliver code changes from development to production.

- Regrettable attrition – The percentage of high-performing employees who leave the organization voluntarily.

Quality: Assesses the reliability and stability of software delivery

- Change failure rate – The percentage of deployments to production that result in a failure or require hotfixes.

- Failed deployment recovery time – The average time it takes to restore service after a failed deployment.

- Perceived software quality – The subjective assessment by team members of the quality and reliability of the software.

- Operational health and security metrics – Measurements tracking system uptime, incidents, vulnerabilities, and security events.

Impact: Measures business and organizational outcomes

- Percentage of time spent on new capabilities – The proportion of engineering time allocated to building new features versus maintenance or other work.

- Initiative progress and ROI – The measurement of how much progress has been made on strategic initiatives and their return on investment.

- Revenue per engineer – The total company revenue divided by the number of engineers.

- R&D as a percentage of revenue – The proportion of total revenue invested in research and development activities.

At a high level, these four categories holistically cover the primary components that contribute to engineering productivity. This article will dive into individual metrics later.

Technical requirements for implementing DX Core 4

Implementing DX Core 4 requires data from several sources, along with the infrastructure to collect and track these metrics effectively:

Git repository (GitHub, GitLab, Bitbucket): Required for speed metrics like PRs per engineer and lead time, quality metrics like change failure rate, and the capability allocation analysis behind some impact metrics.

Project management systems (Jira, Shortcut, Azure Boards): Essential for lead time calculation, initiative progress tracking, and providing context for what constitutes "work started" and "work completed."

Survey platform (LinearB, SurveyMonkey, Typeform): Core 4 depends heavily on perception metrics across multiple categories, which are gathered through recurring surveys.

CI/CD pipeline integration (GitHub Actions, GitLab CI, CircleCI): Necessary for deployment frequency, change failure rate, and failed deployment recovery time calculations.

Incident management tools (PagerDuty, Datadog): Required for accurate failed deployment recovery time and change failure rate measurements.

HR and financial systems: Needed for impact metrics like revenue per engineer, R&D percentage calculations, and regrettable attrition tracking.

Monitoring and observability tools: Essential for operational health metrics and defining what constitutes a "failure" in change failure rate calculations.
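To see which integrations a given subset of metrics pulls in, the source list above can be encoded as a lookup table. The sketch below is a minimal Python illustration that assumes only the metric-to-source links stated in that list; the metric and source keys are hypothetical labels, not identifiers from DX or any vendor API, and metrics the list does not map (such as time to 10th PR) are left out.

```python
# Hypothetical lookup table built from the data-source list above.
# Keys are illustrative labels, not identifiers from DX or any vendor API.
METRIC_SOURCES: dict[str, frozenset[str]] = {
    "prs_per_engineer": frozenset({"git"}),
    "lead_time": frozenset({"git", "project_management"}),
    "deployment_frequency": frozenset({"ci_cd"}),
    "change_failure_rate": frozenset({"git", "ci_cd", "incident_management", "monitoring"}),
    "failed_deployment_recovery_time": frozenset({"ci_cd", "incident_management"}),
    "time_on_new_capabilities": frozenset({"git"}),
    "initiative_progress_roi": frozenset({"project_management"}),
    "revenue_per_engineer": frozenset({"hr_finance"}),
    "rd_percent_of_revenue": frozenset({"hr_finance"}),
    "regrettable_attrition": frozenset({"hr_finance"}),
    "operational_health_security": frozenset({"monitoring"}),
    "perceived_rate_of_delivery": frozenset({"survey"}),
    "ease_of_delivery": frozenset({"survey"}),
    "perceived_software_quality": frozenset({"survey"}),
    "dxi": frozenset({"survey"}),
}


def required_sources(metrics: list[str]) -> set[str]:
    """Return the union of data sources needed to compute the given metrics."""
    unknown = [m for m in metrics if m not in METRIC_SOURCES]
    if unknown:
        raise KeyError(f"no source mapping for: {unknown}")
    needed: set[str] = set()
    for metric in metrics:
        needed |= METRIC_SOURCES[metric]
    return needed


# A rollout limited to the four DORA metrics still touches five data sources.
dora_sources = required_sources([
    "lead_time",
    "deployment_frequency",
    "change_failure_rate",
    "failed_deployment_recovery_time",
])
```

Keeping the mapping as data makes it easy to check integration scope before committing to a metric set.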

Comparing DX Core 4 with established productivity frameworks

To understand whether DX Core 4 advances the engineering productivity conversation, it's important to examine how it relates to established frameworks:

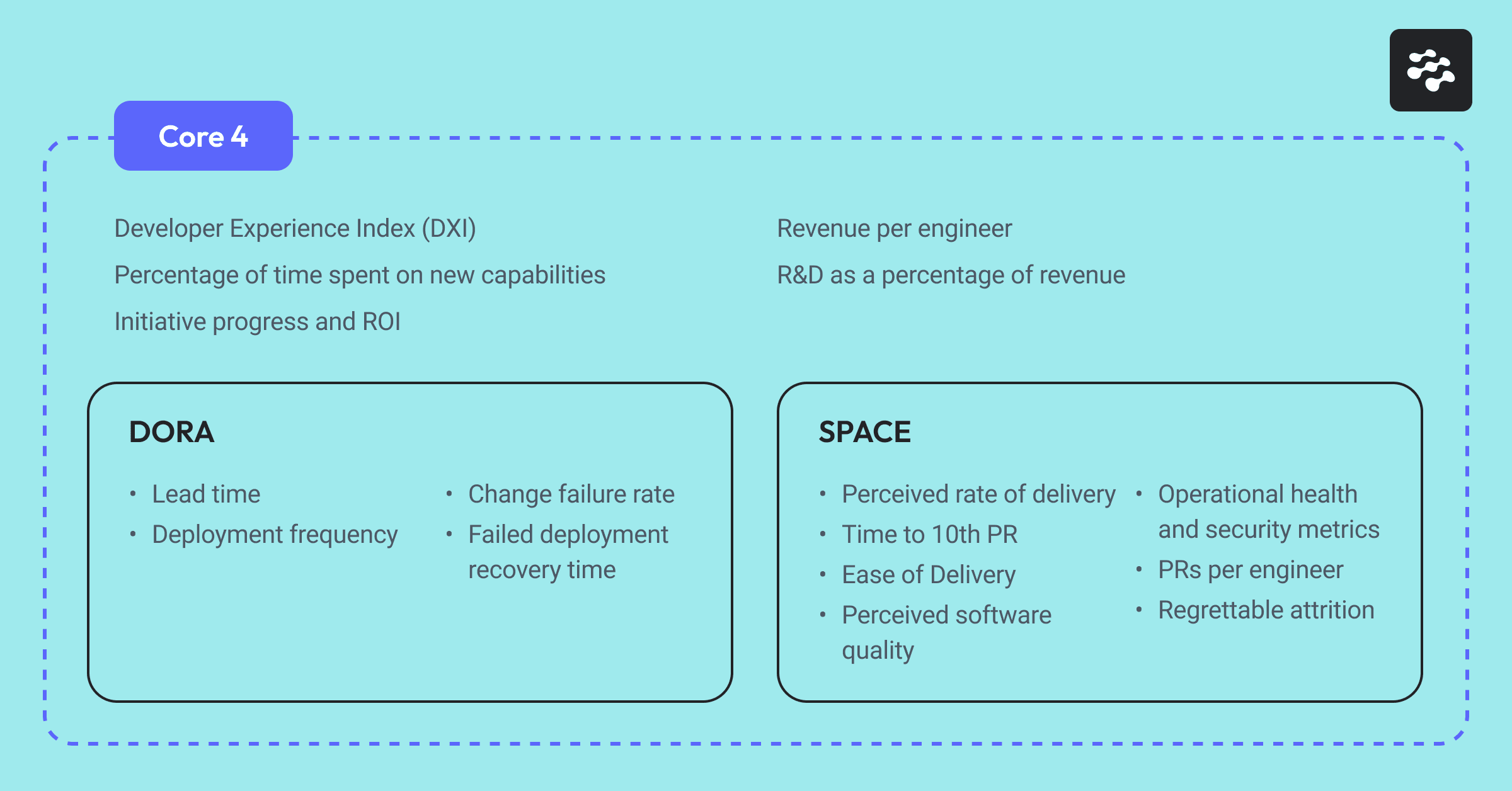

Relationship to DORA metrics

DX Core 4 incorporates several DORA metrics directly:

- Lead time

- Deployment frequency

- Change failure rate

- Failed deployment recovery time

These metrics represent the most valuable and proven elements of the Core 4 framework, having been validated and benchmarked through years of research across thousands of organizations.

Relationship to SPACE framework

The SPACE framework (Satisfaction and well-being, Performance, Activity, Communication and collaboration, Efficiency and flow) provides a more comprehensive view of developer productivity:

- Satisfaction and well-being elements appear in Core 4's Effectiveness dimension

- Performance metrics overlap with Core 4's Speed and Quality dimensions

- Activity metrics like PRs per engineer appear in Core 4's Speed dimension

- Communication and collaboration aspects are largely absent from Core 4

- Efficiency and flow concepts appear across multiple Core 4 dimensions

Critical evaluation of DX Core 4 metrics

To evaluate whether DX Core 4 provides meaningful measurement, we can apply a vanity metric analysis to each metric and determine whether it can genuinely help your engineering organization.

This analysis evaluates productivity metrics across eight criteria:

- Actionable: Teams can directly influence the metric through their work.

- Timely/responsive: Changes in team behavior or performance show up in the metric quickly.

- Contextual/normalized: The metric accounts for relevant variables without oversimplifying.

- Aligned with goals: The metric reflects the strategic objectives of your team or organization.

- Unambiguous: The metric is easy to interpret without debate or qualifiers.

- Resistant to gaming: The metric is hard to manipulate without making real improvements.

- Drives good behavior: The metric encourages long-term improvements.

- Comparability: You can compare the metric in a meaningful way across teams or time periods.
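These criteria are applied qualitatively, without a numeric score. As a minimal Python sketch, assuming you record one verdict per criterion using the same ✅/⚠️/🛑 symbols as the evaluations below, a scorecard can simply count verdicts and refuse to summarize an incomplete assessment. The names `Verdict`, `CRITERIA`, and `tally` are hypothetical.

```python
from collections import Counter
from enum import Enum


class Verdict(Enum):
    PASS = "✅"
    WARN = "⚠️"
    FAIL = "🛑"


# The eight criteria listed above, in order.
CRITERIA = (
    "actionable",
    "timely_responsive",
    "contextual_normalized",
    "aligned_with_goals",
    "unambiguous",
    "resistant_to_gaming",
    "drives_good_behavior",
    "comparability",
)


def tally(scores: dict[str, Verdict]) -> Counter:
    """Count pass/warn/fail verdicts, refusing incomplete scorecards."""
    missing = [c for c in CRITERIA if c not in scores]
    if missing:
        raise ValueError(f"unscored criteria: {missing}")
    return Counter(scores[c] for c in CRITERIA)


# Lead time, scored criterion by criterion as in its evaluation below.
lead_time = dict(zip(CRITERIA, [
    Verdict.PASS, Verdict.PASS, Verdict.WARN, Verdict.PASS,
    Verdict.WARN, Verdict.PASS, Verdict.PASS, Verdict.WARN,
]))
counts = tally(lead_time)
```

A count like this is only a summary; the per-criterion reasoning is what makes the assessment useful.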

DX Core 4 metrics that pass the vanity test

Lead time

Lead time stands out as one of the most valuable metrics in the Core 4 framework, passing the majority of the vanity metric tests. Teams can directly influence this metric by improving processes, removing bottlenecks, and streamlining workflows, making it highly actionable for driving real productivity gains. The metric responds quickly to process improvements and is naturally resistant to gaming: you can't fake faster delivery without improving your development processes.

However, it does have limitations: it lacks context about work complexity and doesn't provide granular visibility into specific workflow stages, which makes meaningful comparisons across different teams difficult without additional normalization.

- ✅ Actionable: Teams can improve processes, remove bottlenecks, and streamline workflows to improve the rate at which they deliver ideas to production.

- ✅ Timely/responsive: Reflects process improvements relatively quickly.

- ⚠️ Contextual/normalized: Lacks context about work complexity and type. It also doesn’t provide a granular view of all stages of the software delivery workflow.

- ✅ Aligned with goals: Faster delivery aligns with business objectives.

- ⚠️ Unambiguous: Requires a clear definition of start and end points, which vary between teams and projects.

- ✅ Resistant to gaming: Difficult to game without making process improvements.

- ✅ Drives good behavior: Encourages process optimization and efficient collaboration.

- ⚠️ Comparability: Comparable within teams over time, but challenging across different teams/projects.
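Because lead time depends on where you place the start and end points, it helps to make those choices explicit parameters. The following Python sketch assumes each change record carries hypothetical `ticket_started_at`, `first_commit_at`, and `deployed_at` timestamps. It computes the average lead time in hours and shows how moving the start point changes the result for the same work.

```python
from datetime import datetime
from statistics import mean


def lead_times_hours(changes, start_field="first_commit_at", end_field="deployed_at"):
    """Lead time in hours for every change that has both timestamps.

    Field names are hypothetical; undeployed changes (end is None) are skipped.
    """
    hours = []
    for change in changes:
        start, end = change.get(start_field), change.get(end_field)
        if start is None or end is None:
            continue
        hours.append((end - start).total_seconds() / 3600)
    return hours


def average_lead_time_hours(changes, **fields):
    """Average lead time, or None when no change has reached production."""
    hours = lead_times_hours(changes, **fields)
    return mean(hours) if hours else None


changes = [
    {"ticket_started_at": datetime(2024, 5, 1, 9), "first_commit_at": datetime(2024, 5, 2, 9),
     "deployed_at": datetime(2024, 5, 3, 9)},
    {"ticket_started_at": datetime(2024, 5, 1, 9), "first_commit_at": datetime(2024, 5, 1, 21),
     "deployed_at": datetime(2024, 5, 2, 9)},
    {"ticket_started_at": datetime(2024, 5, 2, 9), "first_commit_at": datetime(2024, 5, 2, 10),
     "deployed_at": None},  # still in progress, so excluded
]

from_commit = average_lead_time_hours(changes)  # clock starts at the first commit
from_ticket = average_lead_time_hours(changes, start_field="ticket_started_at")
```

Here the same two deployed changes average 18 hours when the clock starts at the first commit and 36 hours when it starts when work on the ticket begins, which is why teams need an agreed definition before comparing numbers.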

Deployment frequency

Deployment frequency is another strong metric from the Core 4 framework that passes most vanity metric tests. Teams can directly improve this metric by enhancing CI/CD pipelines, reducing batch sizes, and automating processes, making it highly actionable and responsive to changes. It drives positive behaviors by encouraging smaller batches and better automation, aligning with continuous delivery goals.

However, the metric has vulnerabilities: it could be gamed through trivial deployments, requires context about deployment complexity, and faces definitional challenges that make cross-organizational comparisons difficult without proper standardization.

- ✅ Actionable: Teams can improve CI/CD, reduce batch sizes, and automate processes.

- ✅ Timely/responsive: Changes to deployment practices will reflect in this metric quickly.

- ⚠️ Contextual/normalized: Requires additional context about deployment complexity and risk.

- ✅ Aligned with goals: Aligns with continuous delivery and faster feedback.

- ⚠️ Unambiguous: Teams may have different responsibilities and definitions for when software is deployed.

- ⚠️ Resistant to gaming: Could be gamed with trivial deployments.

- ✅ Drives good behavior: Encourages smaller batches and better automation.

- ⚠️ Comparability: Comparable within similar contexts, but not across the organization.
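Deployment frequency is usually reported as a count per period. The Python sketch below counts production deployments per ISO week and, as one hedged way to expose the trivial-deployment problem, lets you exclude deployments below a line-change threshold. The `deployed_on` and `lines_changed` fields are hypothetical, and lines changed is only a crude proxy for how significant a deployment is.

```python
from collections import Counter
from datetime import date


def deploys_per_week(deploys, min_lines_changed=0):
    """Count deployments per (ISO year, ISO week).

    Deployments with fewer than `min_lines_changed` changed lines are ignored.
    Weeks with no qualifying deployment do not appear in the result.
    """
    weeks = Counter()
    for deploy in deploys:
        if deploy["lines_changed"] < min_lines_changed:
            continue
        iso_year, iso_week, _ = deploy["deployed_on"].isocalendar()
        weeks[(iso_year, iso_week)] += 1
    return weeks


deploys = [
    {"deployed_on": date(2024, 5, 6), "lines_changed": 120},
    {"deployed_on": date(2024, 5, 7), "lines_changed": 1},   # trivial change
    {"deployed_on": date(2024, 5, 8), "lines_changed": 1},   # trivial change
    {"deployed_on": date(2024, 5, 13), "lines_changed": 300},
]

raw = deploys_per_week(deploys)
filtered = deploys_per_week(deploys, min_lines_changed=10)
```

In this example, the week of May 6, 2024 shows three deployments in the raw count but only one once one-line changes are excluded. Any such threshold is itself a definitional choice that must be applied consistently.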

Time to 10th PR

Time to 10th PR is a solid metric that passes most vanity metric tests by providing a clear, unambiguous milestone that teams can directly influence through improved onboarding, documentation, and mentoring. The metric is naturally resistant to gaming and drives positive behaviors by encouraging better tooling, documentation, and support systems, which aligns well with productivity goals.

However, it has limited applicability for organizations with a static headcount since it only reflects onboarding improvements for new hires. The metric also requires context about role complexity and prior experience to be meaningful, making comparisons challenging across different teams or experience levels.

- ✅ Actionable: Teams can improve onboarding, documentation, and mentoring.

- ⚠️ Timely/responsive: Reflects onboarding improvements for each new hire, but not valuable for organizations with a static headcount.

- ⚠️ Contextual/normalized: Requires context about role complexity and prior experience.

- ✅ Aligned with goals: Faster onboarding aligns with productivity goals and represents easier software development workflows.

- ✅ Unambiguous: Clear milestone and measurement.

- ✅ Resistant to gaming: Hard to game without improving developer experience.

- ✅ Drives good behavior: Encourages better tooling, documentation, onboarding, and support systems.

- ⚠️ Comparability: Comparable within similar roles and experience levels, but not across teams.
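Computing the metric is straightforward once you decide what counts as a submitted PR (opened versus merged, for example). This Python sketch assumes you already have an engineer's start date and the dates of their PRs; the function name and inputs are hypothetical. Engineers who have not yet reached ten PRs return `None` rather than a partial value, so they don't skew team averages.

```python
from datetime import date, timedelta


def time_to_nth_pr(start_date, pr_dates, n=10):
    """Days from an engineer's start date to their nth pull request.

    Returns None until the engineer has submitted at least n PRs.
    """
    if n < 1:
        raise ValueError("n must be at least 1")
    ordered = sorted(pr_dates)
    if len(ordered) < n:
        return None
    return (ordered[n - 1] - start_date).days


start = date(2024, 3, 1)
# Hypothetical history: one PR every three days, starting March 4.
prs = [date(2024, 3, 4) + timedelta(days=3 * i) for i in range(12)]

days = time_to_nth_pr(start, prs)          # 10th PR lands on March 31
pending = time_to_nth_pr(start, prs[:5])   # only five PRs so far
```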

Change failure rate

Change failure rate is a strong metric that passes most vanity metric tests. It is highly actionable: teams can directly improve it through better testing, code review, and deployment practices. The metric is naturally resistant to gaming and drives positive behaviors by encouraging investment in software quality, which aligns well with stability goals.

However, it faces some challenges: as a lagging indicator, it reflects quality improvements after the fact rather than predicting them. The metric also requires clear context about failure complexity and risk tolerance, and teams need consistent definitions of what constitutes a failure to enable meaningful comparisons across different projects or contexts.

- ✅ Actionable: Teams can improve testing, code review, and deployment practices.

- ⚠️ Timely/responsive: Reflects quality improvements but is a lagging indicator.

- ⚠️ Contextual/normalized: Needs context about failure complexity and risk tolerance.

- ✅ Aligned with goals: Lower failure rates align with software stability goals.

- ⚠️ Unambiguous: Requires a clear definition of failure, which can vary by team or project.

- ✅ Resistant to gaming: Hard to game without improving software quality.

- ✅ Drives good behavior: Encourages better testing and review practices.

- ⚠️ Comparability: Comparable within similar contexts, but not across teams or projects due to its ambiguous definition.
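The definitional problem is easy to see in code: the failure definition is an input, not a constant. The Python sketch below takes the definition as a predicate. The `incident`, `rolled_back`, and `hotfix` fields are hypothetical stand-ins for data you would pull from CI/CD and incident management tools.

```python
from typing import Callable


def change_failure_rate(deployments: list[dict], is_failure: Callable[[dict], bool]) -> float:
    """Share of deployments that count as failures under the given definition."""
    if not deployments:
        raise ValueError("no deployments in the period")
    failures = sum(1 for d in deployments if is_failure(d))
    return failures / len(deployments)


# Ten hypothetical deployments: one caused an incident, one was rolled back,
# and one needed a hotfix.
deployments = [{"incident": False, "rolled_back": False, "hotfix": False} for _ in range(10)]
deployments[0]["incident"] = True
deployments[1]["rolled_back"] = True
deployments[2]["hotfix"] = True


def incident_only(d):
    return d["incident"]


def any_remediation(d):
    return d["incident"] or d["rolled_back"] or d["hotfix"]


narrow = change_failure_rate(deployments, incident_only)    # 0.1
broad = change_failure_rate(deployments, any_remediation)   # 0.3
```

The same ten deployments yield a 10% change failure rate if only incidents count, and 30% under a definition closer to "result in a failure or require hotfixes." Teams comparing this metric need to share the predicate, not just the formula.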

Failed deployment recovery time

Failed deployment recovery time is a strong metric that passes most vanity metric tests. It is highly actionable: teams can directly improve it through better monitoring, rollback procedures, and incident response. The metric responds immediately to changes and is naturally resistant to gaming, and it drives positive behaviors by encouraging investment in incident response and monitoring capabilities, which aligns well with reliability goals.

However, it requires context about failure severity and complexity to be meaningful, and teams need consistent definitions of what constitutes a failure to enable fair comparisons across different systems or projects.

- ✅ Actionable: Teams can improve monitoring, rollback procedures, and incident response.

- ✅ Timely/responsive: Immediately reflects failure recovery efficiency.

- ⚠️ Contextual/normalized: Needs context about failure severity and complexity.

- ✅ Aligned with goals: Faster recovery aligns with reliability goals.

- ⚠️ Unambiguous: The definition of failure varies between teams and projects.

- ✅ Resistant to gaming: Hard to game without improving incident recovery processes.

- ✅ Drives good behavior: Encourages better incident response and monitoring.

- ⚠️ Comparability: Comparable within similar systems, but not across teams that have varying definitions for failure.
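The metric is defined as an average, which a single long outage can dominate. As a minimal Python sketch, assuming hypothetical `failed_at`, `restored_at`, and `severity` fields on each failed deployment, the code below reports the mean alongside the median and supports filtering by severity, one way of supplying the failure-severity context noted above.

```python
from datetime import datetime, timedelta
from statistics import mean, median


def recovery_minutes(failures, severities=None):
    """Minutes from failure to restored service, optionally for selected severities."""
    return [
        (f["restored_at"] - f["failed_at"]).total_seconds() / 60
        for f in failures
        if severities is None or f["severity"] in severities
    ]


base = datetime(2024, 6, 1, 12, 0)
failures = [
    {"failed_at": base, "restored_at": base + timedelta(minutes=20), "severity": "sev2"},
    {"failed_at": base, "restored_at": base + timedelta(minutes=30), "severity": "sev2"},
    {"failed_at": base, "restored_at": base + timedelta(minutes=40), "severity": "sev3"},
    {"failed_at": base, "restored_at": base + timedelta(hours=6), "severity": "sev1"},
]

all_minutes = recovery_minutes(failures)
avg_all = mean(all_minutes)        # pulled up by the single six-hour outage
median_all = median(all_minutes)
avg_sev2 = mean(recovery_minutes(failures, severities={"sev2"}))
```

Here the mean is 112.5 minutes while the median is 35 minutes; reporting both, per severity level, makes the number harder to misread.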

DX Core 4 metrics that require additional context

Percentage of time spent on new capabilities

Verdict: ⚠️ Mixed

Percentage of time spent on new capabilities receives a mixed verdict as it's timely and responsive to allocation changes, but faces significant contextual challenges. While teams can adjust their allocation, the optimal balance depends heavily on broader business context, product stage, and whether innovation or maintenance is the current priority.

The metric is vulnerable to gaming through work reclassification and requires clear definitions to distinguish new capabilities from maintenance work. Most problematically, it may encourage innovation at the expense of necessary maintenance, and optimal levels vary so dramatically by context that meaningful comparisons across teams or organizations are challenging.

- ⚠️ Actionable: Teams can adjust allocation, but optimal balance depends on the broader business context.

- ✅ Timely/responsive: Reflects allocation changes quickly.

- ⚠️ Contextual/normalized: Optimal percentage varies by product stage and business needs.

- ⚠️ Aligned with goals: Depends on whether innovation or maintenance is the priority.

- ⚠️ Unambiguous: Requires clear definition of new capabilities vs. maintenance.

- ⚠️ Resistant to gaming: Can be gamed by reclassifying work types.

- ⚠️ Drives good behavior: May encourage innovation but could discourage necessary maintenance.

- 🛑 Comparability: Optimal levels vary dramatically by context.
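The reclassification risk follows directly from how the percentage is computed: the numerator depends entirely on how work is labeled. This Python sketch assumes hypothetical work items tagged with a `category` and logged `hours`; it is an illustration, not DX's calculation.

```python
def new_capability_share(work_items):
    """Fraction of logged engineering hours categorized as new capabilities."""
    total = sum(item["hours"] for item in work_items)
    if total == 0:
        raise ValueError("no hours logged")
    new = sum(item["hours"] for item in work_items if item["category"] == "new_capability")
    return new / total


work = [
    {"category": "new_capability", "hours": 60},
    {"category": "maintenance", "hours": 30},
    {"category": "support", "hours": 10},
]

# Same work, with maintenance relabeled as new capability.
relabeled = [
    dict(item, category="new_capability") if item["category"] == "maintenance" else item
    for item in work
]

before = new_capability_share(work)
after = new_capability_share(relabeled)
```

Relabeling 30 hours of maintenance moves the share from 60% to 90% without any change in what the team actually built.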

Perceived software quality

Perceived software quality receives a mixed verdict. On the positive side, it provides actionable feedback on what would help developers and aligns with the strategic goal of producing high-quality software.

However, it faces significant challenges as a subjective measure: quality is interpreted differently by individuals based on their expectations and experience, making it heavily context-dependent and ambiguous. The metric is vulnerable to gaming through expectation management rather than actual improvement, and it is a lagging indicator that is subject to bias. While directionally useful, it may encourage perception management over measurable productivity gains and requires extensive context for meaningful cross-team comparisons.

- ✅ Actionable: Individual subjective perceptions provide direct advice on what actions would help developers.

- ⚠️ Timely/responsive: Perception is a lagging indicator that is subject to bias and may not reflect quality improvements.

- 🛑 Contextual/normalized: Heavily dependent on individual expectations and experience.

- ✅ Aligned with goals: Producing high-quality software is a strategic business objective.

- 🛑 Unambiguous: Quality is subjective and interpreted differently.

- 🛑 Resistant to gaming: Can be influenced by managing expectations.

- ⚠️ Drives good behavior: Directionally accurate, but may encourage perception management over measurable productivity improvements.

- ⚠️ Comparability: Subjective measures require a lot of additional context to compare in an actionable way across teams and organizations.

Ease of delivery

Ease of delivery receives a mixed verdict. On the positive side, it can identify specific friction points that developers experience daily in the delivery process, and it aligns well with developer experience goals.

However, as a subjective measure, it faces significant challenges: perception may lag behind actual process changes and can be influenced by recent frustrating experiences rather than overall trends. The metric is ambiguous since ease is interpreted differently across individuals and teams, and it's vulnerable to gaming through expectation management or surface-level fixes rather than fundamental improvements. While it can encourage investment in developer experience and tooling, it may also lead to perception management over substantive change, and subjective measures are difficult to compare meaningfully across teams or individuals with different experience levels.

- ⚠️ Actionable: While subjective, can identify specific friction points in the delivery process that developers experience daily. However, requires structured follow-up to translate perceived difficulties into concrete process improvements.

- 🛑 Timely/responsive: Perception may lag behind or not reflect actual process changes, and can be influenced by recent frustrating experiences rather than overall trends.

- 🛑 Contextual/normalized: Subjective and varies by individual tolerance, experience level, and familiarity with tools and processes.

- ✅ Aligned with goals: Aligns well with developer experience goals, since developers should feel empowered to deliver code without unnecessary friction or barriers.

- 🛑 Unambiguous: "Ease" is subjective and interpreted differently across individuals and teams.

- 🛑 Resistant to gaming: Can be influenced by managing expectations, improving communication, or addressing surface-level issues rather than fundamental process improvements.

- ⚠️ Drives good behavior: Can encourage investment in developer experience and tooling improvements, but may also lead to perception management over substantive change.

- 🛑 Comparability: Subjective measures are difficult to compare meaningfully across teams, tools, or individuals with different experience levels.

DX Core 4 metrics that fail the vanity metric test

PRs per engineer

PRs per engineer fails most vanity metric tests. The metric is simple to game by submitting smaller, more frequent PRs without improving actual productivity, and it completely ignores crucial factors like code quality, PR complexity, review time, and business value delivered. It actively encourages harmful behaviors by incentivizing quantity over quality, leading to rushed, fragmented work, technical debt, and poor code practices rather than thoughtful development that creates real value.

- 🛑 Actionable: Engineers can easily game this by submitting smaller, more frequent PRs without improving actual productivity.

- ✅ Timely/responsive: Changes reflect quickly in the metric.

- 🛑 Contextual/normalized: Ignores PR complexity, code quality, review time, or business value delivered.

- 🛑 Aligned with goals: May incentivize quantity over quality, leading to technical debt and poor code practices.

- ✅ Unambiguous: Easy to count and understand.

- 🛑 Resistant to gaming: Extremely easy to game by generating purposeless PRs.

- 🛑 Drives good behavior: Encourages rushed, fragmented work rather than thoughtful development.

- 🛑 Comparability: Meaningless across teams with different codebases, complexity levels, or development practices.

Key challenges with PRs per engineer:

- Incentivizes fragmentation: Developers may artificially split work into smaller PRs to boost their numbers, creating unnecessary overhead in review processes and making it harder to understand the complete context of changes.

- Ignores work complexity: A single PR that refactors a critical system component provides more value than ten trivial documentation updates, but this metric treats them equally.

- Encourages rushed work: Pressure to maintain high PR counts may lead developers to submit incomplete or poorly tested code, shifting quality problems downstream to reviewers and production.

- Creates perverse competition: Teams may compete on PR volume rather than collaborating effectively, damaging team dynamics and knowledge sharing.

- Misaligns with business value: High PR counts don't correlate with feature delivery, customer satisfaction, or business impact—the outcomes that actually matter.

- Penalizes thoughtful development: Developers who take time to design elegant solutions, write comprehensive tests, or engage in thorough code review may appear less productive under this metric.
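The fragmentation problem is plain arithmetic. In the Python sketch below, which uses hypothetical PR sizes (in changed lines) for a two-person team, splitting one large change into five smaller PRs doubles PRs per engineer while the total amount of changed code stays the same.

```python
def prs_per_engineer(pr_sizes, engineers):
    """Pull requests submitted per engineer over a period."""
    if engineers <= 0:
        raise ValueError("engineers must be positive")
    return len(pr_sizes) / engineers


# Hypothetical two-person team; each number is one PR's changed lines.
original = [500, 200, 200, 100]
# The same 500-line change split into five 100-line PRs.
fragmented = [100] * 5 + [200, 200, 100]

before = prs_per_engineer(original, engineers=2)    # 2.0
after = prs_per_engineer(fragmented, engineers=2)   # 4.0
```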

Rather than tracking PR volume, organizations should focus on metrics that measure actual delivery effectiveness, such as cycle time (which captures the full journey from code start to production), change failure rate (which reflects code quality), and developer satisfaction surveys that identify real friction points in the development process. These alternatives provide actionable insights for improving both developer experience and business outcomes without encouraging counterproductive gaming behaviors.

Developer Experience Index (DXI)

Developer Experience Index (DXI) fails every single vanity metric test, making it perhaps the most problematic metric in the Core 4 framework. The proprietary black box formula makes it impossible to know what actions will improve the score, creating a dependency on vendor consulting services rather than empowering teams to drive meaningful change.

As a composite metric based on survey data, it obscures contextual factors and lags behind actual improvements, while the unknown methodology makes it impossible to interpret results or drive specific behaviors that would genuinely improve developer experience.

- 🛑 Actionable: Black box proprietary formula makes it impossible to know what actions will improve it.

- 🛑 Timely/responsive: Survey-based, so changes lag behind actual improvements.

- 🛑 Contextual/normalized: Composite metric obscures important contextual factors.

- 🛑 Aligned with goals: Unclear what specific goals it measures due to proprietary nature.

- 🛑 Unambiguous: Proprietary formula makes interpretation impossible.

- 🛑 Resistant to gaming: Unknown methodology makes gaming unpredictable but possible.

- 🛑 Drives good behavior: Can't drive behavior when the formula is unknown.

- 🛑 Comparability: Proprietary benchmarking against unspecified peer groups.

Key challenges with Developer Experience Index (DXI):

- Proprietary black box methodology: The formula is completely opaque, making it impossible to understand which specific actions will improve the score or how different factors are weighted, leaving teams to guess at improvement strategies.

- Vendor lock-in by design: The proprietary nature creates dependency on a specific vendor for calculation, interpretation, and benchmarking, preventing organizations from adapting the measurement to their unique context or migrating to alternative tools.

- Composite metric confusion: By combining multiple survey dimensions into a single score, DXI obscures which specific aspects of developer experience need attention. Is the problem with tooling, process, culture, or something else entirely?

- Unverifiable benchmarking: Comparisons against peer groups are meaningless when you can't verify the methodology, peer selection criteria, or whether the comparison companies face similar challenges and constraints.

- Survey fatigue without insight: Regular surveys are required to maintain the metric, but the black box calculation means teams can't learn from the data or iterate on their measurement approach based on what they discover.

- Executive communication nightmare: When leadership asks how improving DXI will impact business outcomes, there's no clear answer because the relationship between the proprietary score and actual productivity improvements is unknown.

Rather than relying on proprietary composite metrics, organizations should implement transparent developer experience measurement using established frameworks like SPACE or targeted surveys that ask specific, actionable questions about tooling friction, process bottlenecks, and workflow interruptions. These approaches provide clear visibility into what's measured, enable teams to adapt questions based on their context, and create direct pathways from survey insights to concrete improvements.
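DXI's actual formula is not public, so it cannot be reproduced here. The Python sketch below uses a hypothetical equal-weight average as a stand-in to illustrate the composite-metric problem in general: two teams with opposite pain points receive the same score, while per-dimension reporting immediately shows where each team should act. The dimension names and scores are invented for illustration.

```python
from statistics import mean


def composite_score(scores: dict[str, float]) -> float:
    """Equal-weight average of per-dimension survey scores (hypothetical stand-in)."""
    return mean(list(scores.values()))


def weakest_dimension(scores: dict[str, float]) -> str:
    """The dimension with the lowest average score."""
    return min(scores, key=lambda name: scores[name])


# Hypothetical 1-5 survey averages for two teams.
team_a = {"tooling": 2.0, "process": 4.0, "focus_time": 3.0}
team_b = {"tooling": 4.0, "process": 2.0, "focus_time": 3.0}

same_score = composite_score(team_a) == composite_score(team_b)
different_problems = (weakest_dimension(team_a), weakest_dimension(team_b))
```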

Operational health and security metrics

Operational health and security metrics fail the vanity metric test due to their overly broad and ambiguous definitions, which make standardized measurement extremely challenging. The category is so expansive that different teams interpret operational health in completely different ways. Without clear boundaries and proper normalization, teams may focus on easily measurable metrics rather than meaningful improvements, potentially creating misaligned priorities across the organization.

- ⚠️ Actionable: While teams can improve specific aspects like monitoring and incident response, the broad definition makes it unclear which actions will have the most impact. Different interpretations of operational health and security can lead to scattered improvement efforts.

- ⚠️ Timely/responsive: The broad nature of this category makes it hard to match signals to outcomes. Some metrics can be effective, but they typically require additional context.

- 🛑 Contextual/normalized: The overly broad definition lacks proper normalization. Operational health means different things to different teams: uptime for infrastructure teams, error rates for application teams, performance metrics for platform teams. Without clear boundaries, context becomes meaningless.

- ⚠️ Aligned with goals: Generally aligns with business objectives around reliability and security, but the broad scope makes it difficult to connect specific improvements to strategic outcomes.

- 🛑 Unambiguous: The definition is so broad it becomes ambiguous. Teams may focus on completely different metrics while claiming to measure the same thing. Does it include performance metrics? Infrastructure costs? Security vulnerabilities? Compliance scores?

- ⚠️ Resistant to gaming: While individual security and health metrics can be gamed (for example, marking incidents as resolved without fixing root causes), the broad nature makes systematic gaming more difficult but also makes meaningful measurement harder.

- ⚠️ Drives good behavior: The broad definition may encourage teams to focus on easily measurable metrics rather than meaningful improvements. Teams might optimize for uptime while ignoring security debt, or vice versa.

- 🛑 Comparability: The lack of standardization makes comparison across teams or time periods nearly impossible. One team's operational health dashboard will be completely different from another's.

Key challenges with operational health and security measurements:

- Scope creep: The category is so broad it could include dozens of different metrics, from system uptime to vulnerability counts to compliance scores.

- Lack of prioritization: Without clear boundaries, teams may spread efforts too thin across multiple operational areas rather than focusing on high-impact improvements.

- Inconsistent implementation: Different teams will interpret and implement this metric differently, making organizational insights impossible.

- Analysis paralysis: The breadth of possible metrics can lead to endless debates about what to measure rather than actual improvement.

Rather than using this overly broad category, organizations should select specific, well-defined operational and security metrics that align with their context and goals, such as failed deployment recovery time and change failure rate. This focused approach provides the clarity and actionability that the broad operational health and security category lacks. You can augment these metrics with surveys to identify sources of friction relating to operational health and security.

Initiative progress and ROI

Initiative progress and ROI fail most vanity metric tests due to fundamental issues with measurement and actionability. While teams can adjust execution approaches, many work on foundational capabilities like platform, infrastructure, and security, where ROI is challenging to measure and may not be within their control to demonstrate. The metric is problematic because both progress and ROI require extensive definition work that varies dramatically across teams and organizations, making it easily gamed through scope adjustments and cherry-picking favorable timeframes while potentially discouraging necessary but hard-to-quantify work like technical debt reduction.

- 🛑 Actionable: While teams can adjust execution approaches, many teams work on foundational capabilities (platform, infrastructure, security) where ROI is difficult to measure and may not be within their control to demonstrate.

- 🛑 Timely/responsive: ROI is inherently a lagging indicator that may take months or years to materialize. Progress tracking varies widely in definition and quality across different types of work.

- 🛑 Contextual/normalized: "ROI" means different things to different teams - revenue impact for product teams, cost savings for platform teams, risk reduction for security teams. The metric lacks a standardized approach to normalization.

- ⚠️ Aligned with goals: May align with strategic objectives, but many engineering teams contribute to goals that aren't easily quantifiable in ROI terms (technical debt reduction, developer experience improvements, compliance).

- 🛑 Unambiguous: Both "progress" and "ROI" require extensive definition work. Teams measure progress differently (story points, milestones, user feedback), and ROI calculation methods vary dramatically across organizations.

- 🛑 Resistant to gaming: Progress metrics are notoriously easy to game through scope adjustment, milestone redefinition, or cherry-picking favorable ROI timeframes and calculation methods.

- 🛑 Drives good behavior: Pressures teams to work only on initiatives with measurable, short-term ROI, potentially discouraging necessary but hard-to-quantify work like technical debt, infrastructure improvements, or foundational capabilities.

- 🛑 Comparability: Meaningless across different team types, industries, and organizational contexts due to varying definitions of both progress and ROI.

Key challenges with initiative progress and ROI:

- ROI calculation impossibility: Platform teams can't easily quantify the ROI of improving CI/CD pipelines, security teams struggle to measure the ROI of preventing incidents that didn't happen, and infrastructure teams can't demonstrate the business value of technical debt reduction using traditional financial metrics.

- Definitional chaos: Organizations use wildly different approaches to measure both progress (story points vs. milestones vs. user feedback) and ROI (revenue impact vs. cost savings vs. risk reduction), making any attempt at standardization or comparison meaningless.

- Short-term optimization bias: Teams may abandon critical long-term investments like platform modernization, developer tooling improvements, or architectural refactoring in favor of quick wins that show immediate, measurable returns.

- Scope manipulation incentives: Teams can easily game progress metrics by reducing scope, redefining milestones, or cherry-picking favorable measurement periods, while ROI calculations can be manipulated through creative accounting and timeline selection.

- Foundational work penalty: Essential but hard-to-quantify work like compliance improvements, security hardening, and developer experience enhancements may be deprioritized because their value is difficult to express in ROI terms.

- Attribution complexity: In complex engineering organizations, it's often impossible to attribute specific business outcomes to individual team initiatives, making ROI calculations speculative at best.

Rather than forcing all engineering work into ROI frameworks, organizations should use context-appropriate metrics that align with each team's contribution to business value. Product teams can focus on feature adoption and user satisfaction metrics, platform teams on developer productivity improvements and system reliability, and infrastructure teams on performance and availability gains. These should be supplemented with planning accuracy metrics and stakeholder satisfaction surveys that capture value delivery without the artificial constraints of ROI calculations.
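Planning accuracy is simple to compute once a team agrees on a definition. The following minimal sketch assumes one common definition: the share of issues planned at sprint start that were completed by sprint end. It also computes capacity accuracy, defined here as total completed work relative to planned work. The issue IDs and both formulas are illustrative assumptions. Tools differ in the details, for example in whether they weight issues by story points.

```python
# Hypothetical sprint data: issue IDs planned at sprint start vs. completed by sprint end.
planned = {"ENG-101", "ENG-102", "ENG-103", "ENG-104", "ENG-105"}
completed = {"ENG-101", "ENG-102", "ENG-104", "ENG-201", "ENG-202"}  # ENG-2xx were unplanned

def planning_accuracy(planned: set[str], completed: set[str]) -> float:
    """Share of the planned issues that were actually completed."""
    return len(planned & completed) / len(planned)

def capacity_accuracy(planned: set[str], completed: set[str]) -> float:
    """All completed work (planned + unplanned) relative to what was planned."""
    return len(completed) / len(planned)

print(f"planning accuracy: {planning_accuracy(planned, completed):.0%}")
print(f"capacity accuracy: {capacity_accuracy(planned, completed):.0%}")
```

Here planning accuracy is 60% while capacity accuracy is 100%. The team delivered as much work as it planned, but unplanned work displaced two planned issues, and the team can act on that in its next planning session.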

Revenue per engineer

Revenue per engineer fails every single vanity metric test, making it one of the most problematic metrics in the Core 4 framework. Engineers have no control over the primary drivers of this metric, and it can encourage negative behaviors like reducing headcount or reclassifying roles without creating any additional value.

- 🛑 Actionable: Engineers don't control pricing, customer mix, or sales strategy.

- 🛑 Timely/responsive: Revenue is a lagging indicator influenced by many external factors.

- 🛑 Contextual/normalized: Ignores differences in product type, company stage, and monetization strategy.

- 🛑 Aligned with goals: Incentivizes headcount reduction over value creation.

- 🛑 Unambiguous: Multiple definitions of revenue (ARR, GAAP, gross vs. net) and of which roles count as engineers create ambiguity.

- 🛑 Resistant to gaming: Easily gamed by reducing headcount or reclassifying roles.

- 🛑 Drives good behavior: Promotes fear and internal competition rather than collaboration.

- 🛑 Comparability: Meaningless across different business models and contexts.

Key challenges with revenue per engineer:

- External factor dominance: Revenue fluctuates based on market conditions, pricing strategies, customer churn, sales effectiveness, and economic cycles—none of which engineering teams can directly influence, making this metric a poor reflection of engineering productivity or effectiveness.

- Headcount reduction incentives: The easiest way to improve this metric is to lay off engineers, creating perverse incentives that prioritize cost-cutting over capability building and can lead to organizational damage in pursuit of better numbers.

- Business model incompatibility: A SaaS company with recurring revenue operates completely differently from a consulting firm with project-based billing or a product company with one-time sales, making cross-industry or even cross-company comparisons meaningless.

- Role definition ambiguity: Organizations classify engineers differently—some include QA and DevOps, others don't; some count contractors, others only full-time employees; some include engineering managers, others exclude them—creating inconsistent calculations that undermine any benchmarking attempts.

- Timing mismatch: Engineering work done today may not generate revenue for months or years, while current revenue may reflect engineering decisions made years ago, creating a fundamental disconnect between effort and measurement.

- Innovation punishment: Teams working on foundational capabilities, developer tooling, or exploratory projects that don't directly generate revenue may be viewed as less valuable, discouraging the long-term investments that drive sustainable growth.
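Simple arithmetic shows both the role-definition problem and the headcount problem. This sketch uses entirely hypothetical revenue and headcount figures. It shows how one company reports very different revenue per engineer depending on which roles it counts, and how a layoff "improves" the metric with no change in value delivered.

```python
# Hypothetical figures for one company; none of these come from real data.
annual_revenue = 60_000_000  # USD

headcount = {
    "software_engineers": 80,
    "qa_engineers": 10,
    "devops_engineers": 10,
    "engineering_managers": 12,
    "contractors": 18,
}

def revenue_per_engineer(revenue: float, counted_roles: list[str]) -> float:
    """Revenue divided by whichever roles the organization decides to count."""
    return revenue / sum(headcount[role] for role in counted_roles)

narrow = ["software_engineers"]
broad = list(headcount)  # every role, including managers and contractors

print(f"narrow definition: ${revenue_per_engineer(annual_revenue, narrow):,.0f}")
print(f"broad definition:  ${revenue_per_engineer(annual_revenue, broad):,.0f}")

# Laying off 20 engineers with revenue unchanged "improves" the metric by a third.
after_layoff = annual_revenue / (headcount["software_engineers"] - 20)
print(f"after layoff:      ${after_layoff:,.0f}")
```

With revenue held constant, the narrow definition yields $750,000 per engineer and the broad one about $461,538. Cutting 20 engineers pushes the narrow figure to $1,000,000.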

Rather than using revenue per engineer, organizations should focus on metrics that engineering teams can actually influence and that reflect their contribution to business value. These include delivery velocity metrics like cycle time and deployment frequency, quality indicators like change failure rate and system reliability, and developer experience measures that capture the team's ability to deliver value efficiently. For business impact measurement, track planning accuracy and stakeholder satisfaction to understand how effectively engineering delivers on commitments without the distortions of revenue-based calculations.
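The delivery and quality metrics recommended above can be computed from a plain list of production deployments. This sketch assumes each deployment record carries a timestamp, a flag for whether it caused a failure, and a restoration time. The data is hypothetical. Recovery time is simplified to start at the deployment itself rather than at failure detection.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional

@dataclass
class Deployment:
    deployed_at: datetime
    caused_failure: bool
    restored_at: Optional[datetime] = None  # when service was restored after a failure

# Hypothetical two-week window of production deployments
deploys = [
    Deployment(datetime(2024, 5, 6, 10), False),
    Deployment(datetime(2024, 5, 7, 15), True, datetime(2024, 5, 7, 16, 30)),
    Deployment(datetime(2024, 5, 9, 11), False),
    Deployment(datetime(2024, 5, 13, 9), False),
    Deployment(datetime(2024, 5, 15, 14), True, datetime(2024, 5, 15, 14, 45)),
    Deployment(datetime(2024, 5, 17, 16), False),
]

def deployment_frequency(deploys, window_days: int) -> float:
    """Average deployments per week over the window."""
    return len(deploys) / (window_days / 7)

def change_failure_rate(deploys) -> float:
    """Share of deployments that caused a failure in production."""
    return sum(d.caused_failure for d in deploys) / len(deploys)

def median_recovery_time(deploys) -> timedelta:
    """Median time from a failed deployment to restored service."""
    durations = [d.restored_at - d.deployed_at
                 for d in deploys if d.caused_failure and d.restored_at is not None]
    return median(durations)

print(f"deployments/week:    {deployment_frequency(deploys, window_days=14):.1f}")
print(f"change failure rate: {change_failure_rate(deploys):.0%}")
print(f"median recovery:     {median_recovery_time(deploys)}")
```

Over the two-week window this gives 3.0 deployments per week, a 33% change failure rate, and a median recovery time of 67.5 minutes.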

R&D as a percentage of revenue

R&D as a percentage of revenue fails most vanity metric tests. Only executive leadership can meaningfully adjust R&D budgets, so the metric is irrelevant for engineering teams who may be measured against it but can't influence the outcome. It is also highly context-dependent: optimal percentages vary dramatically by industry and company stage.

- 🛑 Actionable: Only the executive leadership team can adjust R&D investment, and optimal levels depend entirely on the broader business context.

- 🛑 Timely/responsive: Revenue is lagging; R&D investments may take years to show results.

- 🛑 Contextual/normalized: Optimal percentage varies dramatically by industry and company stage.

- ⚠️ Aligned with goals: Depends on whether growth or profitability is the priority.

- ⚠️ Unambiguous: Requires clear definition of what constitutes R&D.

- ⚠️ Resistant to gaming: Can be gamed by reclassifying expenses or timing revenue recognition.

- ⚠️ Drives good behavior: May encourage innovation investment but could discourage necessary operational spending.

- 🛑 Comparability: Optimal levels vary dramatically by industry and business model.

Key challenges with R&D as a percentage of revenue:

- Executive-only actionability: Only C-suite executives can meaningfully adjust R&D budgets, making this metric irrelevant for engineering teams and managers who are measured against it but can't influence the outcome.

- Industry context dependency: A pharmaceutical company may invest 15-20% in R&D while a retail company invests 1-2%, making any universal benchmarking meaningless without deep industry and competitive context.

- Stage-dependent optimization: Early-stage companies may need to invest 30-50% in R&D to build their product, while mature companies may optimize for 5-10% to maintain competitiveness, making temporal comparisons misleading.

- Revenue volatility distortion: A company with consistent R&D spending can see this percentage spike during revenue downturns or plummet during revenue growth, creating metric movements that don't reflect actual investment decisions.
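A short calculation shows the revenue volatility distortion. The sketch below holds a hypothetical R&D budget constant at $20M and varies only revenue across three years. Every figure is invented for illustration.

```python
def rd_percentage(rd_spend: float, revenue: float) -> float:
    """R&D spend as a percentage of revenue."""
    return 100 * rd_spend / revenue

rd_spend = 20_000_000  # held constant across all three years (hypothetical)
revenue_by_year = {"year 1": 100_000_000, "year 2": 80_000_000, "year 3": 125_000_000}

for year, revenue in revenue_by_year.items():
    print(f"{year}: {rd_percentage(rd_spend, revenue):.1f}%")
```

The ratio moves from 20.0% to 25.0% to 16.0% even though R&D investment never changed. Someone reading the metric alone would wrongly infer a spending increase followed by a cut.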

Rather than using R&D as a percentage of revenue, organizations should focus on metrics that engineering teams can influence and that reflect actual innovation effectiveness. These include investment allocation tracking (percentage of engineering time spent on new capabilities versus maintenance), innovation pipeline metrics like time-to-market for new features, and developer productivity measures that show how efficiently R&D dollars are converted into valuable capabilities. For executive-level investment decisions, track competitive positioning, market share growth, and product differentiation metrics that demonstrate the business impact of R&D investments without the distortions of percentage-based calculations.

A more practical approach to engineering productivity

The analysis reveals that Core 4's most valuable metrics are borrowed from established frameworks like DORA, while its novel additions frequently fail to meet the criteria for meaningful measurement. Rather than focusing on complex frameworks with problematic metrics, engineering leaders should prioritize measurements that directly connect to actionable improvements. Here's a more practical approach:

Practical steps to implement effective productivity measurement

For engineering leaders considering their approach to productivity measurement, here's a practical implementation guide:

Step 1: Start with actionable metrics

Begin with well-established metrics that have demonstrated value:

- Efficiency metrics like cycle time and deployment frequency help teams diagnose bottlenecks.

- Workflow metrics like PR size, review time, and pickup time help teams stay on top of work that needs their attention.

- Quality metrics like change failure percentage and failed deployment recovery time help identify sources of risk.

- Team health metrics like planning accuracy, capacity accuracy, and developer satisfaction ensure teams aren’t being overworked or underutilized.
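Most of these metrics come from timestamps your Git provider already records. The sketch below assumes one common way of splitting cycle time into phases:

- Coding: first commit to PR opened.
- Pickup: PR opened to first review.
- Review: first review to merge.
- Deploy: merge to production.

Tools draw these boundaries slightly differently, so treat the phase boundaries, the `PullRequest` fields, and the sample timestamps as assumptions for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

@dataclass
class PullRequest:
    first_commit_at: datetime
    opened_at: datetime
    first_review_at: datetime
    merged_at: datetime
    deployed_at: datetime

def phase_breakdown(pr: PullRequest) -> dict[str, timedelta]:
    """Split one PR's cycle time into coding, pickup, review, and deploy phases."""
    return {
        "coding": pr.opened_at - pr.first_commit_at,
        "pickup": pr.first_review_at - pr.opened_at,
        "review": pr.merged_at - pr.first_review_at,
        "deploy": pr.deployed_at - pr.merged_at,
    }

def cycle_time(pr: PullRequest) -> timedelta:
    """First commit to production; equals the sum of the four phases."""
    return pr.deployed_at - pr.first_commit_at

# Hypothetical PR timeline
pr = PullRequest(
    first_commit_at=datetime(2024, 5, 6, 9, 0),
    opened_at=datetime(2024, 5, 6, 17, 0),
    first_review_at=datetime(2024, 5, 8, 10, 0),
    merged_at=datetime(2024, 5, 8, 15, 0),
    deployed_at=datetime(2024, 5, 9, 11, 0),
)

for phase, duration in phase_breakdown(pr).items():
    print(f"{phase:>7}: {duration}")
print(f"  total: {cycle_time(pr)}")
```

In this example, pickup time (1 day, 17 hours) accounts for more than half of the 74-hour cycle time. That points at the review queue rather than at coding speed.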

Step 2: Choose an integrated platform

Select a platform that provides both quantitative operational data and qualitative survey capabilities. The ideal platform should:

- Integrate with your existing development tools (Git, CI/CD, project management)

- Provide real-time operational insights rather than relying on periodic polling

- Offer workflow automation capabilities to act on insights

- Support both individual team and organizational-level reporting

- Enable custom metrics and dashboards for your specific context

Step 3: Connect data sources

Ensure comprehensive data collection across your development lifecycle:

- Source Control: All Git repositories and branching strategies

- Project Management: Jira, Azure DevOps, or similar tools

- CI/CD Pipelines: Build, test, and deployment systems

- Incident Management: PagerDuty, Datadog, or similar tools

- Communication Tools: Slack, Microsoft Teams, or similar tools for collaboration insights
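Connecting sources matters because several metrics only exist where two systems meet. Lead time for changes, for instance, needs commit timestamps from source control and deployment records from CI/CD. The sketch below assumes both systems can export data keyed by commit SHA. The record shapes and values are hypothetical.

```python
from datetime import datetime, timedelta
from statistics import median

# Hypothetical export from source control: commit SHA -> commit timestamp
commits = {
    "a1b2c3": datetime(2024, 5, 6, 9, 0),
    "d4e5f6": datetime(2024, 5, 6, 14, 0),
    "0718ab": datetime(2024, 5, 7, 11, 0),
    "9cdeff": datetime(2024, 5, 8, 16, 0),  # not deployed yet
}

# Hypothetical export from CI/CD: each deployment lists the commit SHAs it shipped
deployments = [
    {"deployed_at": datetime(2024, 5, 7, 10, 0), "shas": ["a1b2c3", "d4e5f6"]},
    {"deployed_at": datetime(2024, 5, 8, 12, 0), "shas": ["0718ab"]},
]

def lead_times(commits, deployments) -> list[timedelta]:
    """Join each commit to the first deployment that shipped it."""
    first_deployed = {}
    for deploy in sorted(deployments, key=lambda d: d["deployed_at"]):
        for sha in deploy["shas"]:
            first_deployed.setdefault(sha, deploy["deployed_at"])
    # Commits that have not shipped yet are skipped rather than counted as zero
    return [first_deployed[sha] - committed_at
            for sha, committed_at in commits.items()
            if sha in first_deployed]

print(median(lead_times(commits, deployments)))
```

Joining on the SHA gives lead times of 25, 20, and 25 hours, with a median of 25 hours. The commit that has not shipped yet is left out rather than skewing the result.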

Step 4: Implement workflow automation

Use insights to drive automated improvements:

- Intelligent PR routing: Automatically assign pull requests to appropriate reviewers to cut pickup time and keep downstream work flowing

- Quality gates: Automate testing, compliance, and security scanning

- Deployment automation: Streamline release processes

- Notification systems: Use proactive alerts to address bottlenecks
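The sketch below gives a rough illustration of routing and quality gates. It suggests reviewers by counting who recently committed to the files a PR touches, and it flags PRs above a size threshold. Both the expertise heuristic and the 400-line limit are assumptions chosen for the example. They are not how any particular product implements these features.

```python
from collections import Counter

# Hypothetical history: file path -> authors of recent commits touching it
file_history = {
    "billing/invoice.py": ["maya", "maya", "li", "sam"],
    "billing/tax.py": ["li", "li", "maya"],
    "auth/session.py": ["sam", "sam", "omar"],
}

MAX_LINES_CHANGED = 400  # assumed team threshold for an oversized PR

def suggest_reviewers(changed_files, author, k=2):
    """Rank candidates by how often they recently touched the changed files."""
    counts = Counter()
    for path in changed_files:
        counts.update(file_history.get(path, []))
    counts.pop(author, None)  # authors should not review their own PR
    return [name for name, _ in counts.most_common(k)]

def size_gate(lines_changed):
    """Return a warning message for oversized PRs, or None if within the limit."""
    if lines_changed > MAX_LINES_CHANGED:
        return (f"PR changes {lines_changed} lines; "
                f"consider splitting it (limit {MAX_LINES_CHANGED}).")
    return None

print(suggest_reviewers(["billing/invoice.py", "billing/tax.py"], author="sam"))
print(size_gate(620))
```

For a PR by `sam` that touches the two billing files, the sketch suggests `maya` and `li` (three recent commits each). A 620-line change triggers the size warning.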

Step 5: Establish data-driven improvement habits

Create sustainable processes for ongoing optimization by embedding data into your existing ceremonies and establishing new data-driven habits:

- Weekly flow optimization: Use operational data to identify bottlenecks and implement targeted automation that addresses specific friction points like excessive PR pickup times or large batch sizes.

- Monthly team metrics check-ins: Have both leaders and team members review relevant metrics beforehand to enable productive discussions that combine quantitative data with qualitative developer feedback.

- Quarterly business alignment reviews: Connect engineering metrics to business outcomes by demonstrating how productivity improvements translate to faster delivery and optimized resource allocation across projects.

- Individual developer coaching: Use productivity metrics in 1:1 sessions to identify skill gaps, workload imbalances, and growth opportunities through transparent, data-informed conversations.

LinearB's approach to engineering productivity

While frameworks like Core 4 focus primarily on measurement, the most successful engineering organizations go beyond metrics to implement automated improvements. This is where LinearB's approach differentiates itself from traditional measurement-focused solutions.

Integrate quantitative and qualitative data

LinearB combines real-time operational data from your development tools with qualitative survey insights, providing a complete picture of engineering productivity. Unlike Core 4, which relies on proprietary composite metrics, LinearB offers transparent, actionable insights that connect directly to improvement opportunities.

Improve developer experience

Rather than just measuring bottlenecks, LinearB uses AI to automatically address them:

- AI code reviews: Get immediate, actionable feedback on code changes without breaking developers out of their flow.

- Intelligent PR routing: Match pull requests to the most qualified reviewers based on code expertise and review requirements.

- Automated quality gates: Automatically enforce quality checks without adding to cognitive burden.

- Proactive metrics response: Alert developers to help them stay on track without overwhelming them with noise.

- Workflow orchestration: Automated processes that reduce manual work and human error.

Enterprise-grade governance

For organizations adopting AI tools and processes, LinearB provides governance capabilities that ensure security, compliance, and quality:

- AI code governance: Track and manage AI-generated code contributions

- Policy enforcement: Automated compliance checks and approvals

- Risk management: Identify and mitigate potential issues before they impact production

- Audit trails: Comprehensive logging and reporting for regulatory compliance

By focusing on actionable improvements rather than just measurement, LinearB helps engineering teams achieve meaningful productivity gains while maintaining high standards for quality and developer experience.

The future of engineering productivity beyond DX Core 4

DX Core 4 represents an attempt to create a unified framework for engineering productivity measurement, but it doesn't meaningfully advance the conversation beyond existing frameworks like DORA and SPACE. While it incorporates some proven metrics from these established frameworks, its novel additions often fail to meet the criteria for meaningful measurement.

The framework's reliance on proprietary composite metrics, over-emphasis on perception-based measurements, and inclusion of problematic metrics like revenue per engineer suggest it's more focused on creating marketable metrics dashboards than solving real engineering productivity challenges.

Your engineering team will be better served by:

- Starting with proven metrics from established frameworks

- Focusing on leading indicators that predict outcomes

- Implementing workflow automation to address identified bottlenecks

- Creating continuous improvement processes that connect insights to actions

- Choosing platforms that provide both measurement and automation capabilities

The future of engineering productivity isn't about finding the perfect measurement framework; it's about creating systems that automatically identify and address inefficiencies while empowering developers to do their best work. By focusing on actionable improvements rather than vanity metrics, engineering organizations can achieve meaningful productivity gains that benefit both their teams and their business outcomes.

The key is to remember that metrics are a means to an end, not the end itself. The goal isn't to measure developer productivity perfectly; it's to improve it continuously. That improvement comes through action, automation, and a relentless focus on reducing friction in the development process, not through increasingly complex measurement frameworks that promise easy answers to complex challenges.

FAQ

What is DX Core 4 and how does it work?

DX Core 4 is a developer productivity framework that measures four dimensions: Speed, Effectiveness, Quality, and Impact. It incorporates DORA metrics alongside proprietary elements like the Developer Experience Index (DXI) and includes both system-based and self-reported metrics.

Does DX Core 4 include DORA metrics?

Yes, DX Core 4 incorporates several DORA metrics including deployment frequency and lead time within its Speed dimension, while adding additional metrics for effectiveness, quality, and business impact measurement.

What are the main problems with DX Core 4?

Key concerns include reliance on proprietary metrics like DXI that create vendor lock-in, heavy dependence on perception-based survey data, and inclusion of controversial metrics like "diffs per engineer" (PRs per engineer) that can encourage gaming behaviors.

How does DX Core 4 compare to other frameworks?

Core 4 combines elements from DORA, SPACE, and DevEx frameworks but adds proprietary components. While it offers a unified approach, it lacks the proven track record and vendor independence of established frameworks.

Can you implement DX Core 4 without vendor tools?

Some Core 4 metrics can be tracked independently, but key components like the Developer Experience Index (DXI) are proprietary to GetDX, creating dependency on vendor-specific tooling for complete implementation.

What results have teams achieved with DX Core 4?

DX Core 4 is relatively new with limited independent case studies. Most reported results come from GetDX customers, whereas established frameworks like DORA have extensive independent validation showing improvements like 81% cycle time reductions.

Is DX Core 4 worth implementing?

The value depends on your specific needs. If you're already using DORA metrics effectively, Core 4 may add unnecessary complexity. Consider whether the additional metrics justify the implementation costs and vendor dependencies.

What's the best alternative to DX Core 4?

Rather than seeking alternatives, focus on proven frameworks like DORA for delivery metrics and SPACE for broader productivity measurement. Platform-agnostic tools can support multiple frameworks without vendor lock-in.