2025 is the year AI shifted from novelty to necessity in software development.

Tools like GitHub Copilot, Cursor, and Claude Code are no longer experimental. They’re embedded in the workflows of thousands of teams, accelerating delivery and rewriting the rules of collaboration. But with adoption soaring, a deeper challenge has emerged:

How do you know if AI is actually helping your team?

Executives are asking for outcomes. Boards are tracking AI productivity as a KPI. And engineering leaders are under pressure to demonstrate the ROI of generative AI. Yet most of the available metrics, like time saved or suggestions generated, fail to connect AI usage with actual business value.

That’s why AI measurement is now table stakes for engineering leadership. Not just to prove ROI, but to build trust, align strategy, and scale AI responsibly.

At LinearB, we’ve developed an AI Measurement Framework grounded in delivery outcomes, not guesses. This guide breaks it down.

The LinearB philosophy: throughput and quality are the key metrics

Most teams start their AI journey with enthusiasm and surface metrics. But that’s not enough.

In working with some of the world’s best engineering teams, LinearB has identified two key metrics that matter the most:

- Throughput: are you delivering more value, faster? (notice: not more code, more value)

- Quality: are you avoiding bugs, tech debt, and unnecessary rework along the way?

Lines of code and hours saved miss the bigger picture. AI should increase your team’s impact and the quality of its work, not just its output.

We also believe that adoption isn’t binary; it’s a journey. Teams don’t flip a switch and become AI-powered overnight. Measuring AI effectiveness requires both quantitative telemetry and qualitative insights to understand how deeply it’s integrated across people, workflows, and tools.

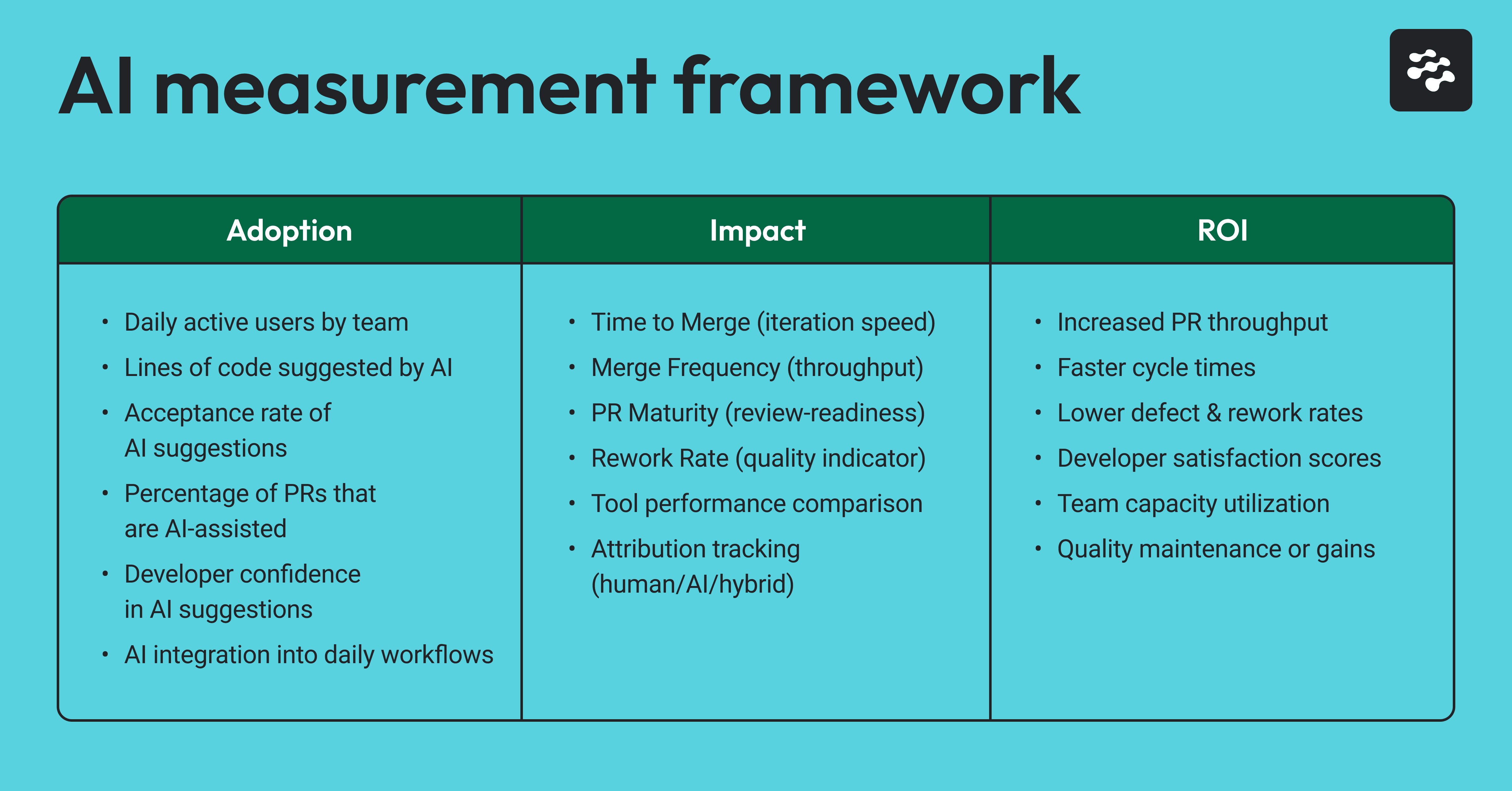

To make this philosophy actionable, we’ve developed a two-part framework:

Adoption: are teams actively using AI tools in their day-to-day workflows?

Impact: is AI making a measurable difference in engineering performance and quality?

This framework combines telemetry, delivery metrics, and developer feedback to give engineering leaders visibility they can trust.

How to measure adoption beyond seat counts

Buying 100 Copilot licenses doesn’t guarantee adoption.

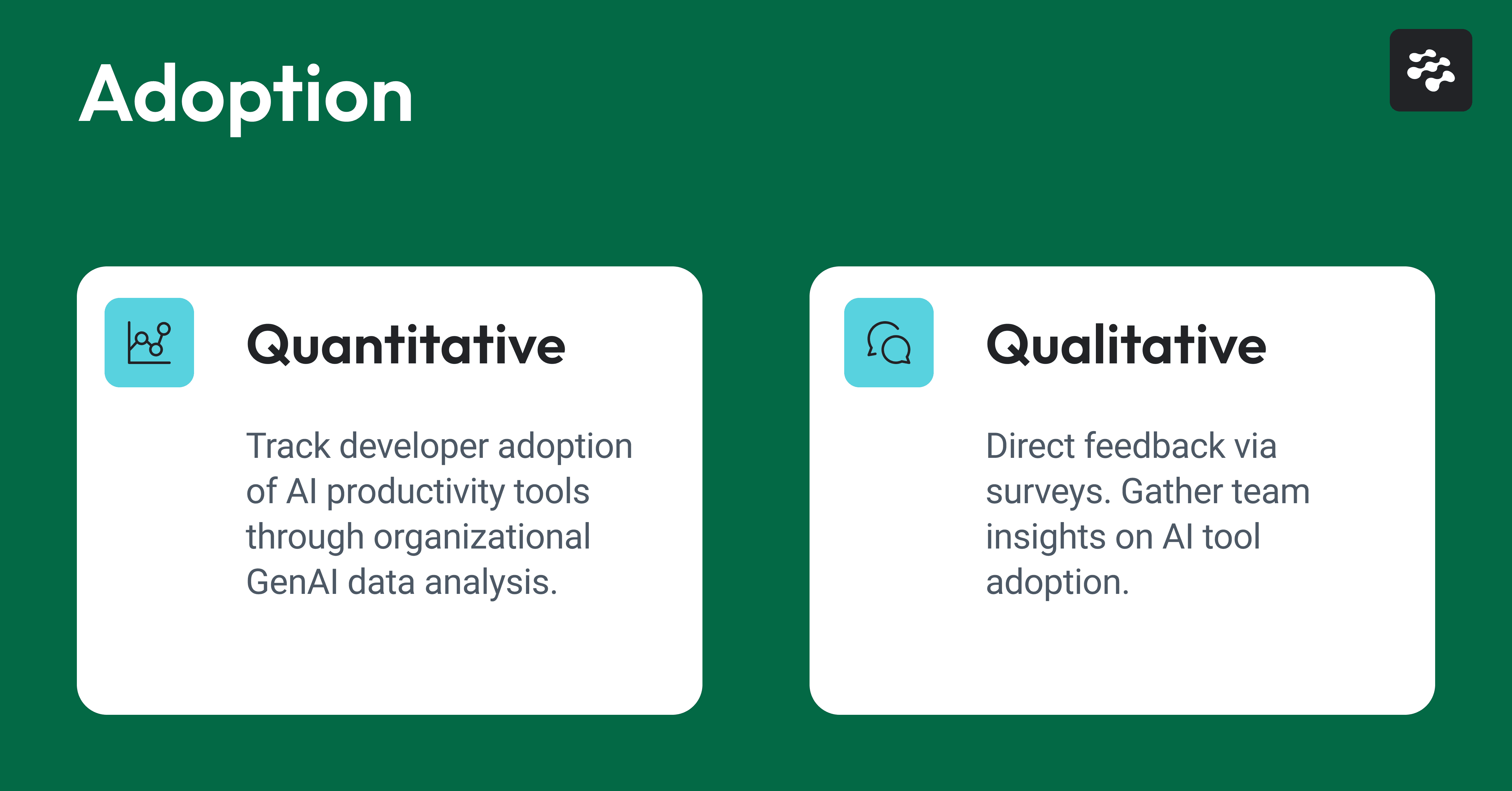

At LinearB, we separate true adoption from procurement by measuring how often and how meaningfully AI is used. We break this down into two dimensions: quantitative and qualitative.

Quantitative adoption signals:

- Daily active users by org, group, or team

- Lines of code suggested by GenAI tools

- Acceptance rate of AI-suggested code

These metrics tell you: Are developers engaging with the tool? Are they finding its suggestions helpful? Is usage growing, stable, or dropping off?
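As a rough illustration, the first and third signals can be derived from raw usage telemetry. The sketch below assumes a hypothetical export with one row per developer per day; the field layout and sample values are invented for the example and do not reflect any specific vendor’s API.

```python
from collections import defaultdict
from datetime import date

# Hypothetical telemetry rows (assumed format):
# (day, developer, suggestions_shown, suggestions_accepted)
events = [
    (date(2025, 3, 3), "ana", 40, 12),
    (date(2025, 3, 3), "ben", 25, 5),
    (date(2025, 3, 4), "ana", 30, 9),
    (date(2025, 3, 4), "cy", 0, 0),  # has a seat, but saw no suggestions that day
]


def daily_active_users(rows):
    """Count distinct developers who saw at least one suggestion, per day."""
    active = defaultdict(set)
    for day, dev, shown, _accepted in rows:
        if shown > 0:
            active[day].add(dev)
    return {day: len(devs) for day, devs in active.items()}


def acceptance_rate(rows):
    """Accepted suggestions divided by shown suggestions (None if none were shown)."""
    shown = sum(row[2] for row in rows)
    accepted = sum(row[3] for row in rows)
    return accepted / shown if shown else None


print(daily_active_users(events))
print(acceptance_rate(events))
```

Counting only developers who actually saw suggestions is one possible definition of "active"; whichever definition you choose, keep it stable so the trend over time stays comparable.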

Qualitative adoption signals:

- How confident do developers feel in AI suggestions?

- Are they integrating AI into daily tasks or ignoring it?

- What’s holding them back: hallucinations, trust, noise?

These surveys surface resistance, champions, and usage patterns that telemetry alone can’t uncover.

How to measure impact beyond time saved

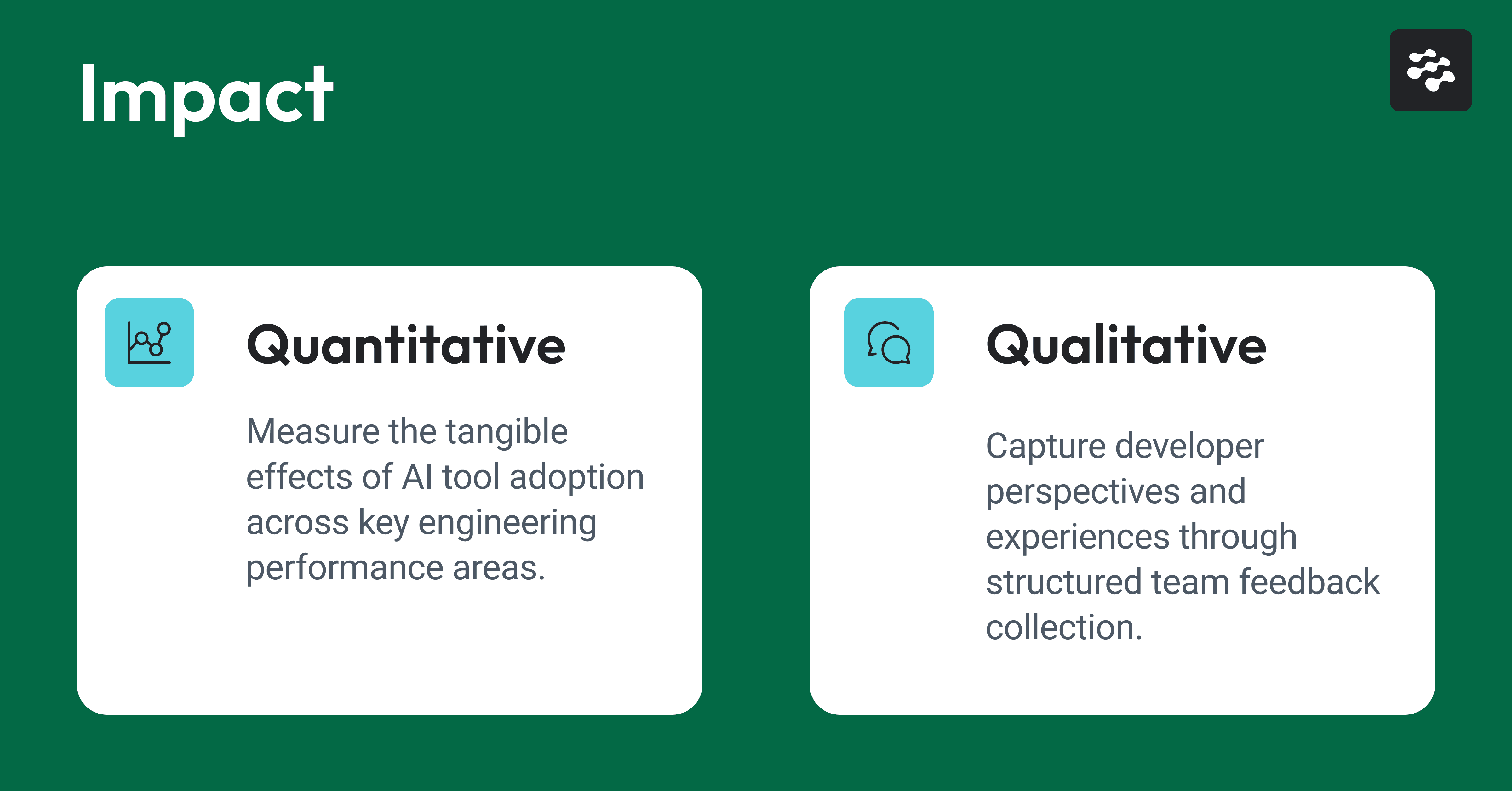

Adoption is only half the picture. The ultimate question is whether AI is actually helping you ship better software, faster.

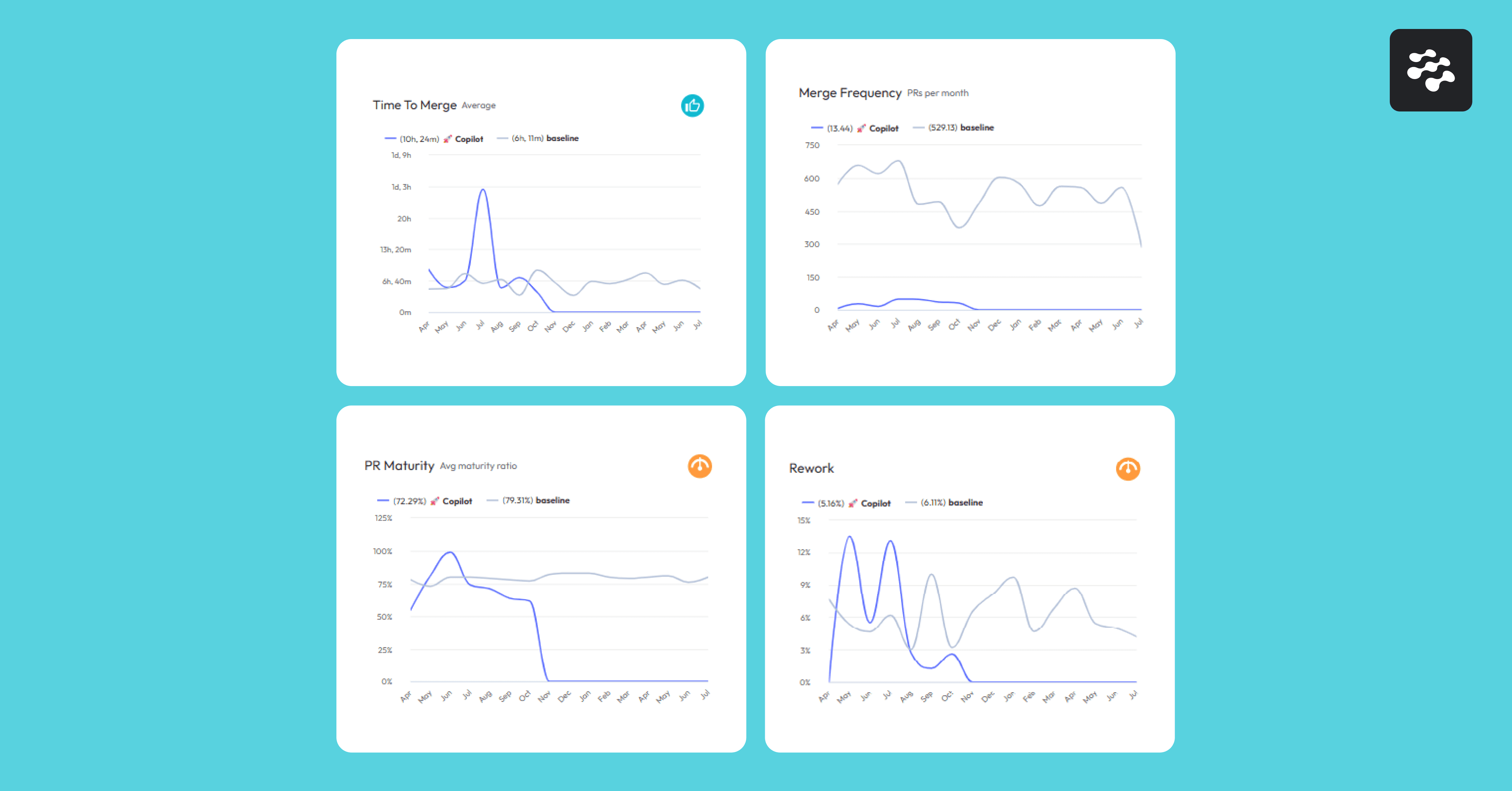

We track four core delivery metrics on the quantitative side to assess impact:

- Time to Merge: How long does it take for an approved pull request to be merged into main? A drop in this number signals faster iteration cycles.

- Merge Frequency: Are teams shipping more PRs each sprint? This is a proxy for throughput gains.

- PR Maturity: How review-ready is a PR on its first submission? High maturity means fewer cycles and better prep.

- Rework Rate: How much recently merged code is being rewritten within 21 days? High rework indicates low quality or rushed delivery.

The combination of these four gives you a balanced view of speed and sustainability.
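To make three of these definitions concrete, here is a minimal sketch that computes time to merge, merge frequency, and rework rate from a list of PR records. The `PullRequest` shape, the sample data, and the precomputed `reworked_lines` field are assumptions made for illustration; PR Maturity is left out because it depends on review-cycle data this sketch does not model.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median


@dataclass
class PullRequest:
    approved_at: datetime
    merged_at: datetime
    lines_changed: int
    # Lines that rewrite code merged in the previous 21 days.
    # Assumed to be precomputed elsewhere (for example, from blame data).
    reworked_lines: int


def median_time_to_merge_hours(prs):
    """Median hours between approval and merge."""
    return median((pr.merged_at - pr.approved_at).total_seconds() / 3600 for pr in prs)


def merges_per_week(prs, start, end):
    """Merged PRs per week inside the half-open window [start, end)."""
    merged = sum(1 for pr in prs if start <= pr.merged_at < end)
    weeks = (end - start).days / 7
    return merged / weeks


def rework_rate(prs):
    """Share of changed lines that rewrite recently merged code."""
    changed = sum(pr.lines_changed for pr in prs)
    reworked = sum(pr.reworked_lines for pr in prs)
    return reworked / changed if changed else 0.0


sample = [
    PullRequest(datetime(2025, 3, 3, 9), datetime(2025, 3, 3, 11), 120, 12),
    PullRequest(datetime(2025, 3, 4, 10), datetime(2025, 3, 4, 16), 80, 0),
    PullRequest(datetime(2025, 3, 10, 9), datetime(2025, 3, 10, 13), 200, 28),
]

print(median_time_to_merge_hours(sample))
print(merges_per_week(sample, datetime(2025, 3, 3), datetime(2025, 3, 17)))
print(rework_rate(sample))
```

The median is used instead of the mean so that one long-stalled PR does not dominate the time-to-merge figure.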

We also correlate these with attribution labels (AI-generated vs. human-written) so you can track how AI is influencing each of these metrics directly.

Connecting AI output to engineering impact

Without attribution, AI measurement is meaningless. You need to distinguish between:

- Human-authored code

- AI-suggested code, human-edited

- Fully AI-generated code

LinearB’s platform supports commit-level tagging and downstream tracking of AI-authored changes across the entire delivery pipeline, from merge to deployment.

This lets you:

- Benchmark one AI tool vs. another (e.g., Copilot vs. Cursor)

- Track code quality on AI vs. non-AI PRs

- Spot over-reliance or under-utilization by team

With attribution in place, you can start to build a defensible model of GenAI ROI.
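For example, once each PR carries an attribution label, a quality comparison is a simple group-by. The sketch below is a hypothetical illustration: the label names and sample numbers are invented, and it is not a description of how the LinearB platform computes these figures.

```python
from collections import defaultdict

# Hypothetical PR records (assumed format):
# (attribution label, lines changed, lines reworked within 21 days)
prs = [
    ("human", 200, 10),
    ("ai_assisted", 150, 9),
    ("ai_generated", 100, 12),
    ("human", 100, 5),
]


def rework_rate_by_label(records):
    """Rework rate (reworked lines / changed lines) for each attribution label."""
    totals = defaultdict(lambda: [0, 0])  # label -> [changed, reworked]
    for label, changed, reworked in records:
        totals[label][0] += changed
        totals[label][1] += reworked
    return {
        label: reworked / changed
        for label, (changed, reworked) in totals.items()
        if changed
    }


for label, rate in sorted(rework_rate_by_label(prs).items()):
    print(f"{label}: {rate:.1%}")
```

The same grouping works with a tool name (Copilot, Cursor) or a team name as the key, which covers the benchmarking and over-reliance checks listed above.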

Calculating ROI to find the value

AI vendors love to talk about "hours saved," but that’s a soft metric.

We prefer to model ROI around hard, delivery-focused signals:

- Increased PR throughput

- Faster cycle times

- Lower rework and defect rates

- Higher engagement and satisfaction scores

Together, these indicate not just how much faster you’re working, but how effectively.

Best practices for using an AI measurement framework

Measuring AI effectiveness across engineering workflows requires more than dashboards; it demands operational discipline and cultural alignment. Here’s how high-performing teams implement AI measurement:

1. They benchmark before rollout, not just after

Start by capturing baseline data before deploying GenAI tools. Track metrics like PR merge frequency, time to merge, and rework rate. Without a pre-AI benchmark, you can’t isolate impact. You need every inch of your adoption runway to nail this, so don’t squander any of it.
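A baseline comparison can be as simple as the percent change of each metric between the pre-rollout and post-rollout windows. The sketch below uses made-up numbers and hypothetical metric names; a raw before/after delta is only a starting point and does not by itself separate AI impact from other changes made in the same period.

```python
def percent_change(baseline, current):
    """Relative change from baseline to current, in percent."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (current - baseline) / baseline * 100


# Hypothetical metric snapshots; names and values are illustrative only.
baseline = {"merge_frequency": 10.0, "time_to_merge_hours": 8.0, "rework_rate": 0.10}
post_rollout = {"merge_frequency": 12.0, "time_to_merge_hours": 6.0, "rework_rate": 0.12}

deltas = {name: percent_change(baseline[name], post_rollout[name]) for name in baseline}

for name, delta in deltas.items():
    print(f"{name}: {delta:+.1f}%")
```

In this invented example, throughput and speed improve while rework rises, which is exactly the kind of trade-off a baseline makes visible.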

2. They tag AI-influenced code at the source

Use commit-level attribution to distinguish between human-authored, AI-assisted, and fully AI-generated code. This data unlocks time-series analysis and quality comparisons between teams or tools.
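One lightweight way to tag at the source is a commit message trailer. The sketch below assumes a hypothetical `AI-Attribution:` trailer with the values `assisted` or `generated`; this convention is invented for illustration and is not a Git standard or a LinearB feature.

```python
import re

# Hypothetical trailer convention (an assumption for this example):
#   AI-Attribution: assisted | generated
TRAILER = re.compile(r"^AI-Attribution:\s*(\S+)\s*$", re.IGNORECASE | re.MULTILINE)
LABELS = {"assisted": "ai_assisted", "generated": "ai_generated"}


def classify_commit(message: str) -> str:
    """Map a commit message to an attribution label based on its trailer."""
    match = TRAILER.search(message)
    if not match:
        return "human"  # no trailer present: treated as human-authored
    return LABELS.get(match.group(1).lower(), "unknown")


print(classify_commit("Fix pagination bug\n\nAI-Attribution: assisted"))
print(classify_commit("Refactor billing module"))
```

Treating a missing trailer as human-authored is a simplifying choice; it will undercount AI usage wherever developers forget to add the tag, so tagging is more reliable when the tool or a commit hook writes the trailer automatically.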

3. They enable real-time feedback loops

Deploy micro-surveys through Slack or Teams to gather qualitative feedback from developers. Ask about trust in suggestions, perceived productivity shifts, and workflow fit.

4. They integrate KPIs into pre-existing engineering rituals

Or they replace those rituals altogether. Don’t treat AI measurement as a separate initiative. Review GenAI metrics during sprint retros, QBRs, and roadmap planning. Include them in executive reporting.

5. They monitor dashboards that combine both usage and delivery outcomes

Avoid siloed metrics. A strong AI measurement dashboard should correlate AI adoption rates, code suggestion acceptance, and PR-level delivery performance in one view.

6. They actually scale their AI measurement strategy

You can’t set this up and then walk away from it. Every new AI initiative, and every new project that uses AI, needs a path back to your measurement strategy. Start with basic usage telemetry and sentiment tracking. As you mature, add tool-specific comparisons, ROI attribution modeling, and cross-team benchmarking.

Together, these practices turn your AI measurement strategy from reactive to repeatable, and from tactical to strategic.

Final thoughts on AI measurement

AI is changing how we build software, but change without measurement is risky.

You don’t need to measure everything. You need to measure the right things:

- Are we using AI in meaningful ways?

- Is it actually helping us move faster and better?

- Can we prove that value to the business?

With LinearB’s AI Measurement Framework, engineering leaders get the clarity they need to make smarter decisions, adopt the right tools, and scale AI with confidence.

Adoption is easy. Accountability is harder. But that’s where the leadership happens.

Want to see how LinearB measures AI adoption, impact, and ROI in real time? Schedule a demo today to explore our engineering productivity platform.

FAQs about AI measurement

How do I measure AI in software development?

Track usage telemetry (suggestions, acceptance), delivery metrics (merge time, rework), and developer sentiment.

What’s the best AI measurement framework?

LinearB’s Adoption → Impact → ROI model, which combines both qualitative and quantitative signals.

What tools should I use?

LinearB for delivery and attribution data. It can also pull IDE usage metrics from Copilot and Cursor (quantitative) and distribute Slack surveys for developer feedback (qualitative).

How do I prove AI is worth it?

Connect AI-attributed PRs to throughput and quality improvements. If delivery is faster, quality is stable, and developers are engaged, that’s ROI: you’re getting more done in less time and creating less technical debt.