Are your engineering teams still being evaluated by how many lines of code they write? Do sprint reviews focus on volume rather than impact? Or perhaps you're struggling to articulate why your most productive developers aren't necessarily the ones generating the most code.

You're not alone. Despite decades of evidence against it, Lines of Code (LOC) remains a surprisingly persistent metric in engineering management discussions. This outdated approach creates misaligned incentives, rewards inefficiency, and ultimately undermines the very outcomes you're trying to achieve.

Modern engineering leadership focuses on outcomes, not output. The most effective engineering organizations understand that true productivity is defined by delivering customer and business value, not simply writing more code.

In this post, you'll learn:

- Why LOC fails as a developer productivity metric and creates harmful incentives

- Which metrics actually correlate with high-performing engineering teams

- How to implement a more effective measurement framework

- Practical strategies to improve both developer productivity and experience

What are Lines of Code (LOC) in software engineering?

Lines of Code (LOC) is a size-oriented software metric that counts the lines in a program's source code. It comes in two primary variants:

Physical LOC counts the raw lines in a source file, including comments, blank lines, and formatting. This basic measurement treats every line equally, regardless of content or significance.

Logical LOC (also called logical SLOC, or LLOC) attempts to improve accuracy by counting only executable statements or declarations. While more nuanced than physical LOC, it still measures quantity, not quality, complexity, or business impact.
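To make the distinction concrete, here is a minimal Python sketch that counts both variants for a small snippet of Python source. It assumes one simple convention for logical LOC, counting statement nodes in the syntax tree produced by the standard `ast` module; dedicated counting tools apply their own, more detailed rules.

```python
import ast


def physical_loc(source: str) -> int:
    """Count every line, including blank lines and comments."""
    return len(source.splitlines())


def logical_loc(source: str) -> int:
    """Approximate logical LOC by counting statement nodes in the syntax tree.

    Comments and blank lines never reach the AST, so they are ignored.
    """
    tree = ast.parse(source)
    return sum(isinstance(node, ast.stmt) for node in ast.walk(tree))


sample = """# Count words in a file.

def count_words(filename):
    with open(filename) as f:
        return len(f.read().split())
"""

print(physical_loc(sample))  # 5
print(logical_loc(sample))   # 3
```

Splitting one statement across several lines raises the physical count without changing the logical one, which makes logical LOC harder (though not impossible) to inflate.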

Both metrics share a fundamental limitation: they measure size, not complexity, quality, or value. A 10-line algorithm that solves a critical business problem delivers far more value than 100 lines of boilerplate code—yet LOC metrics would suggest the opposite.

LOC is also trivially easy to manipulate. Developers can artificially inflate their "productivity" by:

- Using verbose coding styles with unnecessary line breaks

- Avoiding code reuse in favor of copy-pasting

- Writing inefficient implementations that require more code

- Generating excessive comments or documentation
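The first tactic is easy to demonstrate. This Python sketch stores two functionally identical versions of a hypothetical `total` function as source text, runs both, and compares their physical line counts: same behavior, five times the "output."

```python
# Two functionally identical implementations, stored as source text so
# their line counts can be compared directly.
COMPACT = '''
def total(prices):
    return sum(prices)
'''

PADDED = '''
def total(prices):
    # Start the running total at zero.
    result = 0

    # Add each price one at a time.
    for price in prices:
        result = result + price

    # Return the accumulated value.
    return result
'''


def physical_loc(source: str) -> int:
    """Count every line after trimming leading and trailing blank lines."""
    return len(source.strip().splitlines())


def load_total(source: str):
    """Execute the source in a fresh namespace and return its `total` function."""
    namespace: dict = {}
    exec(source, namespace)
    return namespace["total"]


for label, source in (("compact", COMPACT), ("padded", PADDED)):
    total = load_total(source)
    print(label, physical_loc(source), total([3, 4, 5]))
# compact 2 12
# padded 10 12
```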

This susceptibility to gaming makes LOC not just an ineffective metric, but an actively harmful one.

Why Lines of Code is a misleading productivity metric

Language differences skew LOC counts

Different programming languages express the same functionality with dramatically different line counts. A function that requires 50 lines in Java might need only 5 in Python. Even within the same language, different libraries or frameworks can affect verbosity.

Consider this example of a simple function that reads a file and counts the words it contains:

In Python:

```python
def count_words(filename):
    # Read the whole file and count whitespace-separated words.
    with open(filename) as f:
        return len(f.read().split())
```

The equivalent Java implementation might require 15-20 lines with imports, exception handling, and more verbose syntax. This disparity makes cross-language productivity comparisons meaningless when using LOC.

More code doesn't mean more value

High-performing developers often write less code, not more, by relying on better architecture, modularization, and reuse. They understand that every line of code is a liability that must be maintained, tested, and debugged.

The most valuable engineering contributions frequently involve:

- Finding elegant solutions that reduce complexity

- Creating reusable components that prevent duplication

- Implementing algorithms that solve problems with minimal code

- Making architectural decisions that simplify the codebase

By measuring LOC, you inadvertently discourage these high-value activities and instead reward verbosity and inefficiency.

LOC incentivizes the wrong behaviors

When developers know they're being evaluated on code volume, they naturally optimize for that metric, often at the expense of more important outcomes like code quality, maintainability, and business impact.

This misalignment creates several problematic behaviors:

- Avoiding refactoring (it often reduces line count)

- Writing verbose, inefficient implementations

- Adding unnecessary features to inflate code production

- Focusing on easy, code-heavy tasks rather than challenging problems

Refactoring and code complexity are invisible

Some of the most valuable engineering work reduces LOC rather than increasing it. Refactoring, simplification, and technical debt reduction often result in smaller, more maintainable codebases, yet LOC metrics would register this critical work as "negative productivity."
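The arithmetic is easy to see with `git diff --numstat`, which prints one line per file with the added and deleted line counts separated by tabs (and `-` in place of the counts for binary files). This Python sketch parses that format; the sample output is made up to represent a refactor that consolidates three copy-pasted validators into one shared module.

```python
def net_loc(numstat: str) -> int:
    """Return lines added minus lines deleted from `git diff --numstat` output.

    Each line has the form "<added><TAB><deleted><TAB><path>"; binary files
    report "-" for both counts and are skipped here.
    """
    net = 0
    for line in numstat.splitlines():
        if not line.strip():
            continue
        added, deleted, _path = line.split("\t", 2)
        if added == "-" or deleted == "-":
            continue  # binary file: no line counts available
        net += int(added) - int(deleted)
    return net


# Hypothetical numstat output for the consolidation refactor.
refactor = "\n".join([
    "42\t0\tsrc/validation/common.py",
    "3\t118\tsrc/orders/validate.py",
    "3\t121\tsrc/invoices/validate.py",
    "3\t109\tsrc/refunds/validate.py",
    "-\t-\tdocs/diagram.png",
])

print(net_loc(refactor))  # -297: a LOC metric scores this cleanup as negative output
```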

Similarly, LOC fails to capture cognitive complexity—how difficult code is to understand and maintain. A dense, complex 50-line function might be far more challenging to work with than a clear, well-structured 200-line implementation with proper abstraction.

Optimizing for LOC vs. Better Code

LOC-Focused Teams:

- Prioritize quantity over quality

- Avoid refactoring and simplification

- Create verbose implementations

- Incur higher maintenance costs

Quality-Focused Teams:

- Prioritize maintainability and efficiency

- Regularly refactor and improve code

- Focus on elegant, minimal solutions

- Reduce long-term technical debt

What metrics actually reflect developer productivity?

Instead of focusing on Lines of Code, effective engineering leaders measure outcomes and flow efficiency. These metrics provide more meaningful insights into both individual and team productivity while aligning technical work with business value.

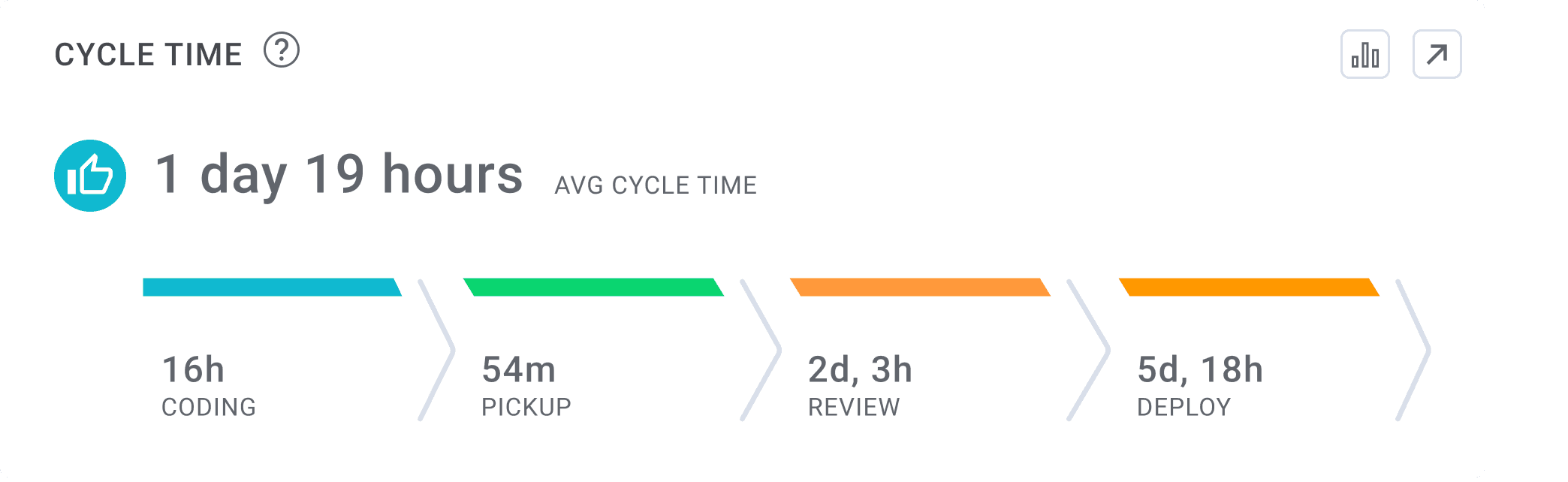

Lead Time for Changes (often called Cycle Time) measures how quickly your team can deliver code improvements from first commit to production deployment. This comprehensive metric tracks the efficiency of your entire delivery pipeline and directly reflects your ability to deliver customer value. Unlike LOC, where a bigger number is mistaken for better performance, a shorter Lead Time indicates a healthier engineering organization with streamlined processes. Breaking this metric down into components (coding time, pickup time, review time, and deploy time) reveals where bottlenecks form in your workflow.
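A minimal Python sketch of that breakdown, assuming you can export five timestamps per pull request: first commit, PR opened, first review, merge, and deployment. The `PullRequest` fields and sample timestamps are hypothetical, and tools differ on the exact phase boundaries.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class PullRequest:
    # Hypothetical fields; map them to whatever your Git host and CD system export.
    first_commit: datetime
    opened: datetime
    first_review: datetime
    merged: datetime
    deployed: datetime


def cycle_time_breakdown(pr: PullRequest) -> dict[str, timedelta]:
    """Split first-commit-to-production time into four phases."""
    return {
        "coding": pr.opened - pr.first_commit,
        "pickup": pr.first_review - pr.opened,
        "review": pr.merged - pr.first_review,
        "deploy": pr.deployed - pr.merged,
    }


pr = PullRequest(
    first_commit=datetime(2024, 3, 4, 9, 0),
    opened=datetime(2024, 3, 5, 11, 0),
    first_review=datetime(2024, 3, 6, 15, 0),
    merged=datetime(2024, 3, 6, 17, 30),
    deployed=datetime(2024, 3, 7, 10, 0),
)

phases = cycle_time_breakdown(pr)
total = sum(phases.values(), timedelta())
bottleneck = max(phases, key=phases.get)  # phase with the longest duration
print(f"total: {total}, bottleneck: {bottleneck}")
# total: 3 days, 1:00:00, bottleneck: pickup
```

In this sample the PR waited longer for its first review (28 hours) than it spent being coded (26 hours), which points at reviewer availability rather than developer speed.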

PR Size and Merge Frequency offer actionable insights into your development efficiency. Smaller PRs (under 200 lines changed) typically receive faster reviews, contain fewer defects, and merge more quickly. Teams that optimize for manageable PR sizes and higher merge frequency see compounding benefits in delivery speed and team morale.

Merging developers are happy developers.
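Both PR signals are simple to compute from a list of merged PRs. This Python sketch assumes each record carries lines added, lines deleted, and a merge date (the sample data is hypothetical), treats "lines changed" as additions plus deletions, and reports merges per ISO week.

```python
from collections import Counter
from datetime import date

SMALL_PR_THRESHOLD = 200  # lines changed (additions + deletions)

# Hypothetical merged PRs: (lines added, lines deleted, merge date).
merged_prs = [
    (120, 30, date(2024, 3, 4)),
    (45, 10, date(2024, 3, 5)),
    (610, 220, date(2024, 3, 7)),
    (80, 95, date(2024, 3, 12)),
    (15, 2, date(2024, 3, 13)),
]

# Share of PRs under the size threshold.
small_count = sum(
    1 for added, deleted, _ in merged_prs if added + deleted < SMALL_PR_THRESHOLD
)
share_small = small_count / len(merged_prs)

# Merge frequency: number of merged PRs per ISO (year, week).
merges_per_week = Counter(merged.isocalendar()[:2] for _, _, merged in merged_prs)

print(f"PRs under {SMALL_PR_THRESHOLD} lines: {share_small:.0%}")
for (year, week), count in sorted(merges_per_week.items()):
    print(f"{year}-W{week:02d}: {count} merges")
```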

PM Hygiene Metrics like Issues Linked to Parents and Branches Linked to Issues help ensure alignment between code changes and planned work items. Paradoxically, elite teams sometimes score lower on PM hygiene because they prioritize speed over documentation, but most organizations benefit from strong traceability, which improves forecasting and predictability.
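One common way to approximate Branches Linked to Issues is to check whether each branch name contains an issue key. This Python sketch assumes Jira-style keys such as `PAY-142` (an uppercase project prefix, a hyphen, and a number); the branch names are hypothetical, and the pattern should be adapted to your tracker's convention.

```python
import re

# Assumed issue-key convention: PROJECT-123 (Jira-style).
ISSUE_KEY = re.compile(r"[A-Z][A-Z0-9]+-\d+")


def linked_ratio(branch_names: list[str]) -> float:
    """Fraction of branches whose name contains an issue key."""
    if not branch_names:
        return 0.0
    linked = sum(1 for name in branch_names if ISSUE_KEY.search(name))
    return linked / len(branch_names)


branches = [
    "feature/PAY-142-retry-webhooks",
    "fix/PAY-150-null-currency",
    "hotfix-login-timeout",
    "chore/bump-deps",
]
print(f"{linked_ratio(branches):.0%}")  # 50%
```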

Accuracy Scores measure planning effectiveness and execution. Planning Accuracy tracks how much planned work is actually delivered, while Capacity Accuracy measures completed work against planned capacity. Together, these metrics reveal whether teams can reliably meet delivery commitments—a critical aspect of engineering productivity.
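Both ratios reduce to simple arithmetic. This Python sketch assumes one common formulation: Planning Accuracy is planned work completed divided by planned work, and Capacity Accuracy is all completed work (planned plus unplanned) divided by planned work. The story-point figures are hypothetical.

```python
def planning_accuracy(planned: float, planned_completed: float) -> float:
    """Share of the work planned for the iteration that was actually delivered."""
    return planned_completed / planned


def capacity_accuracy(planned: float, planned_completed: float,
                      unplanned_completed: float) -> float:
    """All delivered work (planned + unplanned) relative to planned work."""
    return (planned_completed + unplanned_completed) / planned


# Hypothetical sprint, in story points.
planned, planned_done, unplanned_done = 40, 30, 12

print(f"planning accuracy: {planning_accuracy(planned, planned_done):.0%}")
print(f"capacity accuracy: {capacity_accuracy(planned, planned_done, unplanned_done):.0%}")
```

Here the team delivered more than its planned capacity (105%) but only three quarters of what it committed to (75%), a pattern that suggests unplanned work is displacing the plan.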

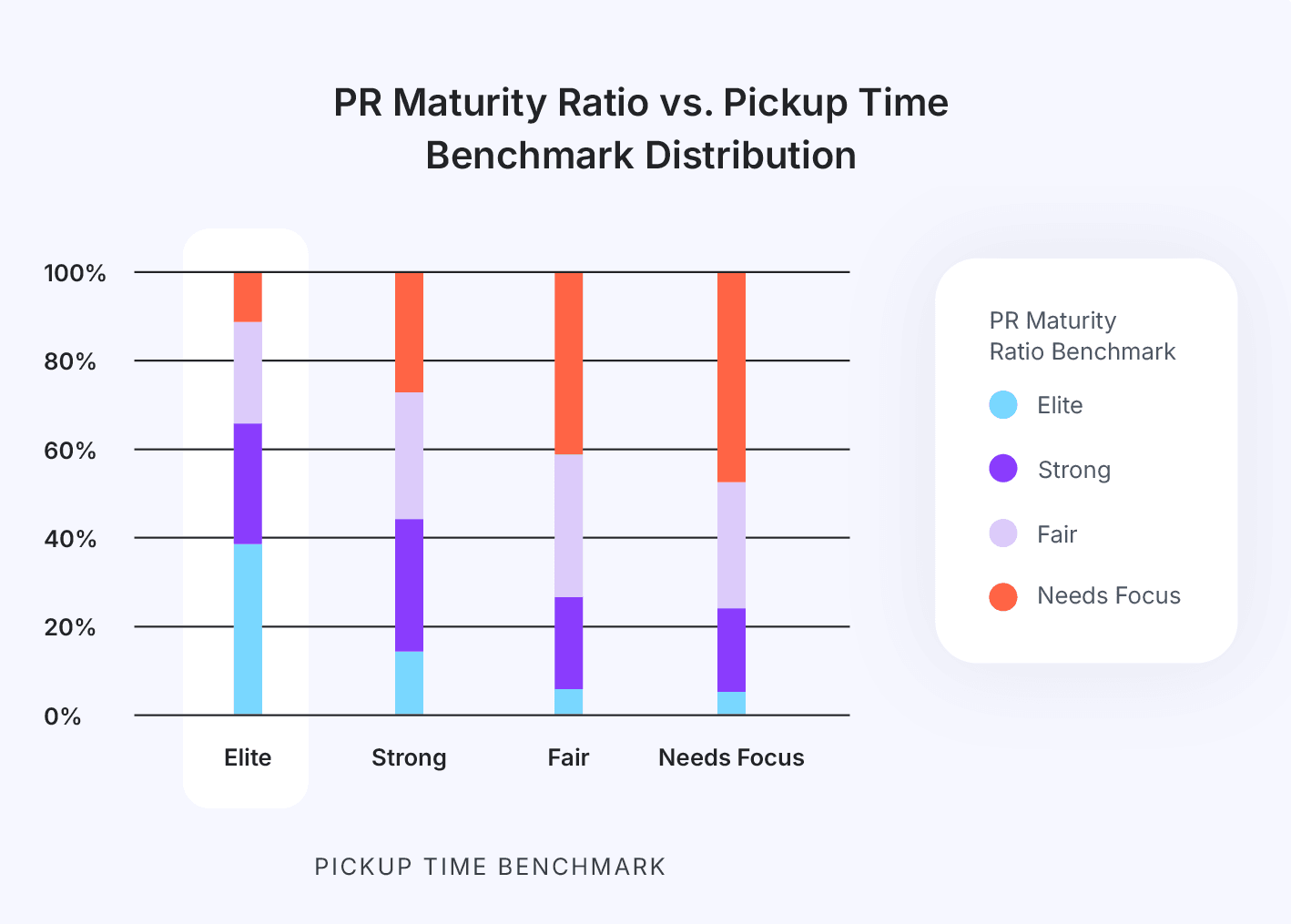

PR Maturity indicates how effective your development process is before code review. High PR maturity (where PRs need minimal changes after submission) correlates with higher velocity, as developers create more complete and polished work before requesting reviews.
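There is no single standard formula for PR maturity. As a hedged sketch, this Python example assumes maturity is the share of a PR's total line changes that already existed when the PR was opened, so rework pushed during review lowers the score. The function name and inputs are hypothetical.

```python
def pr_maturity(changes_at_open: int, changes_after_open: int) -> float:
    """Fraction of the PR's total changes that existed when it was opened.

    1.0 means nothing changed after the PR was published; lower values mean
    more rework happened during review.
    """
    total = changes_at_open + changes_after_open
    if total == 0:
        return 1.0
    return changes_at_open / total


print(f"{pr_maturity(180, 20):.0%}")  # 90%
print(f"{pr_maturity(60, 140):.0%}")  # 30%
```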

By focusing on these outcome-oriented metrics instead of code volume, you align engineering incentives with true business value: delivering working, high-quality features to customers quickly and reliably.

Learn more about the Developer Productivity Metrics that matter in our Engineering Leader’s Guide to Accelerating Developer Productivity →

Why Developer Experience (DevEx) is the key to sustainable productivity

Beyond metrics, the most forward-thinking engineering organizations recognize that Developer Experience (DevEx) directly impacts productivity, quality, and retention. DevEx encompasses all factors that affect how effectively developers can deliver value, from tools and workflows to culture and cognitive load.

DevEx isn't just a feel-good initiative; it's a strategic investment with measurable returns. Removing friction from the development process unlocks capacity and creativity. When developers spend less time wrestling with tooling, waiting for builds, or navigating confusing requirements, they dedicate more energy to solving core business problems.

Effective DevEx improvements include:

- Optimizing CI/CD pipelines to provide faster feedback (sub-10-minute builds)

- Implementing AI Code Review tools that catch issues early

- Creating self-service internal platforms for common development tasks

- Reducing cognitive load through better documentation and knowledge sharing

- Establishing clear requirements and communication channels with stakeholders
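Build speed is one of the easier DevEx signals to track. This minimal Python sketch assumes you can export recent CI build durations in minutes (the values are hypothetical) and compares them against the 10-minute target mentioned above.

```python
from statistics import mean, median

TARGET_MINUTES = 10

# Hypothetical durations of recent CI runs, in minutes.
build_minutes = [6.5, 7.2, 8.1, 9.4, 9.9, 12.3, 8.8, 25.0]

fast_share = sum(1 for m in build_minutes if m < TARGET_MINUTES) / len(build_minutes)

print(f"mean build: {mean(build_minutes):.1f} min")
print(f"median build: {median(build_minutes):.1f} min")
print(f"builds under {TARGET_MINUTES} min: {fast_share:.0%}")
```

In this sample the mean (10.9 minutes) misses the target while the median (9.1 minutes) meets it, because a single 25-minute run skews the average; medians and percentiles usually describe the build experience better than averages do.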

One critical DevEx element often overlooked is cognitive overload. Productivity plummets when developers context-switch between too many tasks or navigate overly complex systems. By measuring and reducing these mental burdens, you help your team maintain flow state and peak performance.

The payoff is concrete: when your team spends less time fighting tooling and more time creating value, productivity increases without artificial metrics or pressure tactics.

How engineering leaders should rethink engineering metrics

Transforming your approach to engineering metrics requires intentional leadership and a clear focus on business outcomes. The most successful engineering leaders follow these principles when evolving their measurement approach:

First, stop incentivizing raw code volume in any form. Remove LOC from performance reviews, sprint reports, and team dashboards. This sends a clear signal that quality and impact, not volume, drive recognition and advancement.

Instead, track and report on:

- Feature delivery speed and predictability

- User-facing reliability and performance metrics

- Incident response effectiveness

- Customer satisfaction and adoption rates

Want to align engineering metrics with business outcomes that executives and stakeholders value? Download our CTO Board Deck Template →

This alignment helps demonstrate how engineering work directly contributes to company goals, elevating technical discussions beyond just "how much code did we write?"

Implement a balanced measurement framework that combines engineering efficiency with developer experience signals. The most effective approach integrates:

- DORA metrics (Deployment Frequency, Lead Time, Change Failure Rate, Mean Time to Restore)

- Flow metrics (efficiency, load, distribution)

- Developer Experience indicators (satisfaction, friction points, tool effectiveness)

- Business impact measures (feature adoption, customer retention)

This holistic view prevents optimization of one dimension at the expense of others, creating sustainable high performance rather than short-term productivity spikes.
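Of the flow metrics listed above, flow efficiency is the most formula-like: it is commonly defined as active work time divided by total elapsed time for a work item. This Python sketch assumes each interval of an item's history can be labeled "active" or "waiting"; the sample intervals are hypothetical.

```python
from datetime import timedelta

# Hypothetical history of one work item: (state, duration).
intervals = [
    ("active", timedelta(hours=6)),    # initial implementation
    ("waiting", timedelta(hours=20)),  # queued for review
    ("active", timedelta(hours=2)),    # addressing review comments
    ("waiting", timedelta(hours=12)),  # waiting for a deploy window
]


def flow_efficiency(history: list[tuple[str, timedelta]]) -> float:
    """Active time as a fraction of total elapsed time (history must be non-empty)."""
    total = sum((d for _, d in history), timedelta())
    active = sum((d for state, d in history if state == "active"), timedelta())
    return active / total


print(f"flow efficiency: {flow_efficiency(intervals):.0%}")  # 20%
```

An item can have a short coding phase and still take days to ship; flow efficiency makes that waiting time visible in a way LOC never can.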

5 Steps to Modernize Your Engineering Metrics:

- Establish baseline measurements for Lead Time, Cycle Time, and Change Failure Rate, and compare them against industry benchmarks

- Identify your biggest bottlenecks using Cycle Time breakdown analysis

- Implement workflow automation to address those specific bottlenecks

- Create team-level dashboards that highlight outcomes over output

- Build a feedback loop connecting engineering metrics to business results

These steps create a virtuous cycle where measurement drives improvement, not just assessment. Teams gain visibility into how their work impacts the broader organization while leaders gain insights that inform smarter resource allocation and strategic planning.

Delivering value, not just writing code

The shift from measuring code volume to measuring outcomes represents a fundamental evolution in how you view engineering productivity.

Developer productivity isn't about counting lines; it's about impact. The most valuable engineers often write less code, not more, because they understand that every line introduces complexity and maintenance costs. They deliver outsized impact through smart architecture decisions, elegant abstractions, and focused problem-solving.

By modernizing your measurement approach, you unlock significant gains across key dimensions:

- Higher team performance through better-aligned incentives

- Improved retention by focusing on meaningful work

- Enhanced business results from faster, more reliable delivery

- Greater organizational resilience through sustainable engineering practices

Are you ready to move beyond Lines of Code and build a more effective, outcome-oriented engineering organization?

Learn how LinearB’s Engineering Productivity Platform helps engineering leaders track real productivity and improve DevEx with actionable metrics that connect development work to business outcomes.

FAQ

What is a source line of code?

A source line of code (SLOC) is a software metric that counts the number of lines in a program's source code. There are two main types: physical SLOC, which counts all lines including comments and blank lines, and logical SLOC, which counts only executable statements or declarations. While easy to measure, SLOC varies significantly between programming languages and doesn't reflect code quality or functionality.

Why is LOC a bad metric for productivity?

LOC is a poor productivity metric because it encourages writing verbose, inefficient code rather than elegant solutions. LOC ignores that skilled developers often create more value with less code, varies dramatically between programming languages, and fails to capture refactoring efforts that improve code but reduce line count.

As a quote widely attributed to Bill Gates puts it:

"Measuring programming progress by lines of code is like measuring aircraft building progress by weight."

What are alternatives to Lines of Code metrics?

More effective alternatives to LOC include:

- DORA metrics (Deployment Frequency, Lead Time, Change Failure Rate, Recovery Time)

- Function Point Analysis for measuring software size based on functionality

- Cyclomatic complexity for assessing code's logical complexity

- Pull request metrics like PR Size, Review Time, and Merge Frequency

- Business outcome measures like feature adoption rates and customer satisfaction

- Developer experience indicators gathered through team surveys and feedback
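To give a feel for cyclomatic complexity, here is a rough Python approximation built on the standard `ast` module: start at 1 and add one for each decision point. The set of node types counted is a simplifying assumption; dedicated tools such as radon or the mccabe plugin for flake8 apply more complete rules and report per function.

```python
import ast

# Node types treated as decision points in this simplified count.
BRANCH_NODES = (ast.If, ast.For, ast.AsyncFor, ast.While, ast.IfExp,
                ast.ExceptHandler, ast.comprehension)


def cyclomatic_complexity(source: str) -> int:
    """Approximate McCabe complexity for all code in `source`: 1 + decision points."""
    complexity = 1
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, BRANCH_NODES):
            complexity += 1
        elif isinstance(node, ast.BoolOp):
            # `a and b and c` adds one extra path per additional operand.
            complexity += len(node.values) - 1
    return complexity


example = """
def shipping_cost(weight, express, member):
    if weight <= 0:
        raise ValueError("weight must be positive")
    cost = 5 if weight < 1 else 5 + 2 * weight
    if express and not member:
        cost *= 2
    return cost
"""

print(cyclomatic_complexity(example))  # 5
```

Unlike a line count, this score does not change if the function is reformatted across more lines, and it rises only when the logic gains new paths.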

What is Function Point Analysis and how does it compare to LOC?

Function Point Analysis (FPA) measures software size by counting its functional components from a user perspective rather than counting lines of code. Developed by Allan Albrecht at IBM in 1979, FPA categorizes and weights software functions like inputs, outputs, queries, and data files, providing a language-independent measure of software functionality. Unlike LOC, FPA correlates better with development effort across different languages and technologies, though it requires more expertise to implement correctly.
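A worked Python sketch of the basic calculation, assuming the standard IFPUG weights for the five component types at low, average, and high complexity, and the classic value adjustment factor (VAF = 0.65 + 0.01 × the sum of 14 general system characteristics, each rated 0 to 5). The component counts and ratings are hypothetical.

```python
# IFPUG weights by component type and complexity.
WEIGHTS = {
    "EI": {"low": 3, "average": 4, "high": 6},     # external inputs
    "EO": {"low": 4, "average": 5, "high": 7},     # external outputs
    "EQ": {"low": 3, "average": 4, "high": 6},     # external inquiries
    "ILF": {"low": 7, "average": 10, "high": 15},  # internal logical files
    "EIF": {"low": 5, "average": 7, "high": 10},   # external interface files
}


def unadjusted_fp(counts: dict[str, dict[str, int]]) -> int:
    """Sum each component count multiplied by its weight."""
    return sum(
        n * WEIGHTS[kind][level]
        for kind, by_level in counts.items()
        for level, n in by_level.items()
    )


def adjusted_fp(ufp: int, gsc_ratings: list[int]) -> float:
    """Apply the value adjustment factor from 14 ratings, each 0-5."""
    if len(gsc_ratings) != 14 or not all(0 <= r <= 5 for r in gsc_ratings):
        raise ValueError("expected 14 ratings between 0 and 5")
    vaf = 0.65 + 0.01 * sum(gsc_ratings)
    return ufp * vaf


# Hypothetical counts for a small order-management feature.
counts = {
    "EI": {"average": 4},
    "EO": {"low": 2, "high": 1},
    "EQ": {"average": 3},
    "ILF": {"average": 2},
    "EIF": {"low": 1},
}
ufp = unadjusted_fp(counts)
afp = adjusted_fp(ufp, [3] * 14)
print(ufp, round(afp, 1))  # 68 72.8
```

Because the count is derived from user-visible functionality, the same 68 unadjusted function points apply whether the feature is written in Java or Python.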

How do DORA metrics improve on traditional code metrics?

DORA (DevOps Research and Assessment) metrics focus on delivery outcomes rather than code quantity, providing a more meaningful measure of engineering effectiveness. Unlike LOC, they track metrics that directly impact business success: how frequently you deploy (Deployment Frequency), how quickly you deliver changes (Lead Time), how often changes cause problems (Change Failure Rate), and how quickly you recover from incidents (Time to Restore). These metrics align technical performance with business goals and customer satisfaction rather than rewarding code volume.
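A minimal Python sketch of how the four metrics might be computed from a deployment log. It assumes each record holds the deploy time, one commit time for the change it shipped, whether it caused a failure, and when service was restored; the `Deployment` record and sample data are hypothetical, and DORA's own definitions are more precise (for example, lead time is tracked per change rather than per deployment).

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    # Hypothetical record shape; adapt to your pipeline's data.
    deployed_at: datetime
    committed_at: datetime                  # commit time of the change shipped
    failed: bool = False                    # did this deploy degrade service?
    restored_at: Optional[datetime] = None  # when service recovered, if it failed


def hours(delta: timedelta) -> float:
    return delta / timedelta(hours=1)


def dora_metrics(deploys: list[Deployment], period_days: int) -> dict[str, float]:
    lead_times = [hours(d.deployed_at - d.committed_at) for d in deploys]
    failures = [d for d in deploys if d.failed]
    restore_times = [hours(d.restored_at - d.deployed_at)
                     for d in failures if d.restored_at is not None]
    return {
        "deployment_frequency_per_day": len(deploys) / period_days,
        "median_lead_time_hours": median(lead_times),
        "change_failure_rate": len(failures) / len(deploys),
        "median_time_to_restore_hours": median(restore_times) if restore_times else 0.0,
    }


deploys = [
    Deployment(datetime(2024, 3, 4, 15), datetime(2024, 3, 4, 9)),
    Deployment(datetime(2024, 3, 5, 14), datetime(2024, 3, 5, 10),
               failed=True, restored_at=datetime(2024, 3, 5, 15, 30)),
    Deployment(datetime(2024, 3, 6, 11), datetime(2024, 3, 5, 16)),
    Deployment(datetime(2024, 3, 7, 9), datetime(2024, 3, 6, 13)),
]

for name, value in dora_metrics(deploys, period_days=4).items():
    print(f"{name}: {value:g}")
```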

How should engineering leaders measure team productivity?

Effective engineering leaders measure productivity through outcome-based metrics rather than output metrics like LOC. Focus on delivery metrics (like DORA), business impact measures (feature adoption rates, revenue generated), and developer experience indicators (team satisfaction, friction points). Combine quantitative data with qualitative feedback to understand both what teams produce and how efficiently they work. The most meaningful productivity metrics connect engineering activities directly to business goals and customer value.