Are you running a healthy engineering organization? Most engineering leaders can't answer this question with confidence because they lack reliable software development metrics to measure team performance.

Based on analysis of 6.1 million pull requests from over 3,000 development teams, we've identified the software development metrics that separate elite performers from struggling teams. This data represents the largest study of software development performance metrics ever conducted, spanning 32 countries and 167,437 active contributors.

Why this guide is different: While most metrics advice relies on theoretical frameworks and best practices, every benchmark and recommendation in this guide comes from real production data. You'll get actionable insights tested across thousands of engineering teams, not generic advice that may not work in your specific context.

Engineering teams generate massive amounts of data through every code commit, pull request review, and deployment. The challenge is knowing which software development metrics actually predict success and how to use them for continuous improvement. Most organizations either track too many vanity metrics or rely on gut feelings instead of measurable data.

Elite engineering teams use software development performance metrics to identify bottlenecks before they impact delivery, optimize developer workflows, and demonstrate clear business value. They measure both delivery outcomes and developer experience, creating sustainable high performance instead of unsustainable sprints toward arbitrary deadlines.

The business impact is significant. Organizations with mature software development metrics programs achieve 2.2x faster delivery times and report 60-percentage-point improvements in customer satisfaction ratings. They make data-driven decisions about tool investments, team structure, and process improvements instead of relying on assumptions.

This guide provides the complete framework for implementing software development metrics that drive real results. You'll learn industry-standard benchmarks from our analysis of millions of pull requests, discover which metrics predict problems before they occur, and get practical implementation strategies tested across thousands of engineering teams.

What are software development metrics?

Software development metrics are quantitative measurements that provide visibility into how engineering teams create, review, and deploy code. These metrics capture the unique characteristics of software delivery: collaboration patterns, code quality trends, deployment frequency, and developer productivity levels.

The evolution of software development performance metrics reflects our industry's growing maturity. Traditional approaches focused on output-based measurements like lines of code and function points. These software development metrics failed because they ignored the collaborative and creative nature of engineering work. Writing more code doesn't necessarily create more value, and individual productivity metrics miss the team dynamics that determine delivery success.

Modern software development metrics balance two critical dimensions: Developer Experience (DevEx) and Developer Productivity (DevProd). Developer Experience encompasses developer morale and engagement when interacting with tools, processes, and environments. Developer Productivity measures how effectively teams complete meaningful tasks with minimal waste.

This balance between experience and productivity distinguishes effective software development performance metrics from vanity metrics. Teams that optimize purely for output often create unsustainable conditions leading to burnout and attrition. Teams focusing only on developer happiness without measuring delivery outcomes struggle to demonstrate business value. The best engineering teams achieve both high developer satisfaction and exceptional delivery performance through balanced software development metrics.

Many leaders believe engineering work can't be measured, but this thinking is outdated. Software development generates rich data at every step: commits, pull requests, code reviews, deployments, and incident responses. Every interaction creates measurable signals about team health and delivery capability. The key is identifying which software development metrics matter and interpreting them correctly.

Effective software development performance metrics serve multiple stakeholders. Engineering managers use them to identify process bottlenecks and resource allocation opportunities. Individual contributors benefit from clear feedback loops that accelerate professional growth. Executive stakeholders gain confidence in engineering investments and delivery commitments. Product teams make better tradeoffs between feature scope and delivery timelines.

The challenge with software development metrics isn't data collection; it's correlating data across different tools and timeframes. Git repositories capture code changes but miss context about why changes were necessary. Project management tools track story completion but don't reveal technical complexity. CI/CD systems measure deployment frequency but can't explain failure patterns.

Modern software development metrics programs solve this correlation problem by combining data from multiple sources into actionable insights. They track both lagging indicators showing historical performance and leading indicators predicting future outcomes. This dual approach enables proactive optimization instead of reactive problem-solving.

Engineering metrics impact the entire organization, not just development teams. DORA's 2019 Accelerate State of DevOps research found that elite performers deploy 208 times more frequently than low performers and have 106 times faster lead time from commit to deploy. Companies with mature software development metrics programs attract top engineering talent, respond faster to market opportunities, and make better technology investment decisions.

However, software development metrics can create negative incentives if implemented incorrectly. Teams measured solely on deployment frequency might sacrifice code quality for speed. Developers knowing their commit frequency is tracked might fragment meaningful changes into smaller, less coherent updates. The solution is measuring complete systems and workflows, not isolated behaviors.

Successful software development metrics programs start with clear objectives and work backward to identify relevant measurements. Improving delivery predictability requires tracking cycle time and planning accuracy. Reducing production incidents means focusing on change failure rate and recovery time. Addressing developer retention involves measuring task distribution and satisfaction levels.

Software development performance metrics also evolve with organizational maturity. Startup teams typically focus on basic deployment frequency and code quality metrics. Scale-up organizations need sophisticated measurements around collaboration effectiveness and technical debt management. Enterprise teams require comprehensive dashboards providing insights across multiple products and technology stacks.

The foundation of any software development metrics program is trust between leadership and engineering teams. Developers need confidence that metrics improve systems rather than punish individuals. Managers need assurance that measurements reflect genuine performance rather than gaming behaviors. Executives need evidence that engineering investments create measurable business outcomes.

Implementation requires balancing measurement sophistication with practical utility. The most effective software development metrics are those that teams actually use for decision-making, not those that look impressive on executive dashboards but provide no actionable insights for day-to-day engineering work.

DORA metrics: The foundation of engineering measurement

The DevOps Research and Assessment (DORA) team established the first industry-standard framework for measuring software development performance. After analyzing thousands of organizations, DORA identified four key metrics that consistently differentiate high-performing engineering teams from average performers. These software development metrics have become the foundation for engineering measurement programs across the industry.

DORA metrics focus on delivery speed and stability, providing a balanced view of engineering effectiveness. Speed without stability leads to production fires and customer dissatisfaction. Stability without speed results in missed market opportunities and competitive disadvantage. Elite engineering organizations excel at both dimensions simultaneously.

The four DORA metrics explained

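Cycle Time measures the duration from when development work begins until code reaches production. DORA's formal name for this metric is lead time for changes, which is measured from commit to production; this guide uses cycle time, which starts when development work begins. This software development metric captures the entire delivery pipeline, including coding, review, testing, and deployment phases. Cycle time reflects process efficiency and identifies bottlenecks that slow feature delivery.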

Deployment Frequency tracks how often teams release code to production. Higher deployment frequency indicates mature automation, effective testing, and confident release processes. Teams that deploy frequently can respond quickly to customer feedback and market changes.

Change Failure Rate calculates the percentage of deployments that cause production failures requiring immediate fixes or rollbacks. This software development performance metric measures code quality and release process reliability. Lower failure rates indicate better testing practices and deployment automation.

Mean Time to Recovery (MTTR) measures how quickly teams restore service after production incidents. Fast recovery requires effective monitoring, clear incident response procedures, and automated rollback capabilities. MTTR reflects organizational preparedness for handling inevitable failures.
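The four definitions above reduce to simple arithmetic over deployment records. The Python sketch below assumes a hypothetical `Deployment` record exported from your CI/CD and incident tooling. It also makes two simplifying assumptions that are not DORA's official calculation: it takes the median for cycle time, and it treats each failed deploy's restore timestamp as the end of the incident.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    # Hypothetical record: when work on the change began, when it reached
    # production, whether it caused a failure, and when service was restored.
    work_started: datetime
    deployed: datetime
    failed: bool = False
    restored: Optional[datetime] = None


def to_hours(delta: timedelta) -> float:
    return delta / timedelta(hours=1)


def dora_metrics(deploys: list[Deployment], days: int, services: int = 1) -> dict:
    """Compute the four metrics for deployments in a window of `days` days."""
    if not deploys:
        return {}
    cycle = [to_hours(d.deployed - d.work_started) for d in deploys]
    failures = [d for d in deploys if d.failed]
    # Assumption: an incident starts at the failing deploy and ends at `restored`.
    recovery = [to_hours(d.restored - d.deployed) for d in failures if d.restored is not None]
    return {
        "cycle_time_h": median(cycle),
        "deploys_per_service_per_day": len(deploys) / (days * services),
        "change_failure_rate_pct": 100 * len(failures) / len(deploys),
        "mttr_h": sum(recovery) / len(recovery) if recovery else None,
    }
```

For example, three deployments over a two-day window for one service, one of which failed and was restored four hours later, yield a median cycle time of 24 hours, 1.5 deployments per day, a 33% change failure rate, and a 4-hour MTTR.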

Industry benchmark standards

Our analysis of 6.1 million pull requests reveals specific performance tiers that define elite, good, fair, and needs-focus categories for each DORA metric. These benchmarks provide objective standards for evaluating engineering team performance.


| Metric | Elite | Good | Fair | Needs Focus |
| --- | --- | --- | --- | --- |
| Cycle Time | Under 26 hours | 26-80 hours | 81-167 hours | Over 167 hours |
| Deployment Frequency | More than 1 deployment per service daily | 1-0.5 deployments per service daily | 0.4-0.15 deployments per service daily | Less than 0.15 deployments per service daily |
| Change Failure Rate | Under 1% | 1-4% | 5-23% | Over 23% |
| Mean Time to Recovery | Under 6 hours | 6-11 hours | 12-30 hours | Over 30 hours |

Cycle time benchmarks:

- Elite: Under 26 hours

- Good: 26-80 hours

- Fair: 81-167 hours

- Needs Focus: Over 167 hours

Elite teams move work from start to production in about a day, while teams in the needs-focus tier take more than a week for comparable work. The difference often stems from manual processes, large batch sizes, and unclear requirements rather than coding speed.

Deployment frequency benchmarks:

- Elite: More than 1 deployment per service daily

- Good: 1-0.5 deployments per service daily

- Fair: 0.4-0.15 deployments per service daily

- Needs Focus: Less than 0.15 deployments per service daily

Elite teams deploy multiple times daily with confidence, while low-performing teams deploy weekly or monthly due to fear of breaking production systems.

Change failure rate benchmarks:

- Elite: Under 1%

- Good: 1-4%

- Fair: 5-23%

- Needs Focus: Over 23%

Elite teams maintain exceptional quality while deploying frequently. Teams with failure rates above 23% typically lack adequate testing automation or clear quality standards.

Mean time to recovery benchmarks:

- Elite: Under 6 hours

- Good: 6-11 hours

- Fair: 12-30 hours

- Needs Focus: Over 30 hours

Elite teams restore service within a single work shift, while struggling teams may need more than a full day to resolve production issues.

These software development metrics benchmarks come from analyzing engineering teams across organizational sizes, from 10-person startups to 10,000-person enterprises. The data shows that elite performance is achievable regardless of company size, though the implementation approaches differ.
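To place your own numbers in these benchmark bands, a small lookup like the following can help. It is a sketch that hard-codes the thresholds from the table above. The published bands leave small gaps (for example, 80.5 hours of cycle time or a 4.5% failure rate), and this example assigns values in those gaps to the lower tier. That choice is an assumption of the example. The metric keys are hypothetical names.

```python
# "Lower is better" metrics: (Elite if below, Good up to, Fair up to); above that is Needs Focus.
LOWER_IS_BETTER = {
    "cycle_time_h": (26, 80, 167),
    "change_failure_rate_pct": (1, 4, 23),
    "mttr_h": (6, 11, 30),
}


def tier(metric: str, value: float) -> str:
    """Place a metric value in the Elite/Good/Fair/Needs Focus bands from the table."""
    if metric == "deploys_per_service_per_day":
        # Higher is better: Elite above 1, Good 0.5-1, Fair 0.15-0.4, Needs Focus below 0.15.
        if value > 1:
            return "Elite"
        if value >= 0.5:
            return "Good"
        return "Fair" if value >= 0.15 else "Needs Focus"
    elite_below, good_max, fair_max = LOWER_IS_BETTER[metric]
    if value < elite_below:
        return "Elite"
    if value <= good_max:
        return "Good"
    return "Fair" if value <= fair_max else "Needs Focus"
```

Because the thresholds live in one dictionary, you can swap in your own baselines later without touching the classification logic.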

Performance correlation patterns

Our research reveals strong correlations between DORA metrics and business outcomes. Teams achieving elite DORA performance report 2.6x higher revenue growth and 2.2x higher profitability compared to low performers. Elite teams also demonstrate 2.1x higher employee retention rates and 1.8x higher customer satisfaction scores.

The correlation between DORA metrics and organizational success stems from their focus on flow efficiency. Teams that deliver features quickly and reliably can experiment faster, respond to customer feedback sooner, and adapt to market changes more effectively. This competitive advantage compounds over time.

Implementation considerations

DORA metrics require careful instrumentation across your development toolchain. Cycle time measurement needs integration between project management systems, version control, and deployment platforms. Many teams start with manual tracking before investing in automated data collection.

Organizational context significantly impacts DORA metric interpretation. Regulated industries like healthcare and finance may prioritize stability over speed, accepting longer cycle times in exchange for additional compliance checks. Startups might optimize for deployment frequency to enable rapid market feedback, temporarily accepting higher change failure rates.

The key is establishing baselines for your current performance and tracking improvement trends rather than comparing absolute numbers to other organizations. A team improving from 200-hour to 100-hour cycle times has made significant progress, even if they haven't reached elite benchmarks yet.

Beyond basic DORA implementation

While DORA metrics provide essential visibility into delivery performance, they represent lagging indicators that show what already happened. Elite engineering organizations supplement DORA metrics with leading indicators that predict future performance and identify improvement opportunities before problems impact delivery.

DORA metrics also focus primarily on delivery pipeline efficiency rather than developer experience or code quality trends. Comprehensive software development metrics programs use DORA as the foundation while adding measurements that capture the full spectrum of engineering effectiveness.

Leading indicators that predict DORA performance

Leading metrics complement these lagging indicators by identifying bottlenecks before they affect cycle time or deployment frequency. This gives teams time to fix problems before delivery suffers.

Leading indicators focus on the individual steps within your delivery pipeline that aggregate into DORA performance. By measuring pull request patterns, code review effectiveness, and work distribution, teams can spot emerging problems weeks before they appear in cycle time trends.

Pull request and code review metrics

PR size analysis

Pull request size directly correlates with review quality and merge velocity. Our analysis shows that elite teams keep PRs under 194 changed lines of code, while struggling teams regularly open PRs exceeding 661 changed lines. Large pull requests create multiple problems. Reviewers struggle to provide thorough feedback, authors resist making significant changes after extensive work, and merge conflicts become more likely.

The relationship between PR size and cycle time is exponential, not linear. A 200-line PR might receive approval within hours, while a 2,000-line PR could languish for days waiting for adequate review time. Teams that enforce PR size limits through automation achieve 40% faster cycle times compared to teams without size constraints.
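Enforcing a PR size limit in CI can be as simple as summing the line counts that `git diff --numstat` reports for the branch. This sketch makes three assumptions: the base branch is `origin/main`, PR size is added plus deleted lines, and the limit is 194 lines. That limit borrows the elite benchmark above and is not a universal threshold. The helper names are hypothetical.

```python
import subprocess

MAX_PR_LINES = 194  # hypothetical limit borrowed from the elite PR-size benchmark


def pr_size(numstat: str) -> int:
    """Sum added + deleted lines from `git diff --numstat` output."""
    total = 0
    for line in numstat.splitlines():
        parts = line.split("\t")
        # Binary files are reported as "-\t-\tpath" and carry no line counts.
        if len(parts) < 3 or parts[0] == "-":
            continue
        total += int(parts[0]) + int(parts[1])
    return total


def check_pr_size(base: str = "origin/main", limit: int = MAX_PR_LINES) -> int:
    """Return a CI-style exit code: 0 if the branch diff is within `limit`, else 1."""
    result = subprocess.run(
        ["git", "diff", "--numstat", f"{base}...HEAD"],  # changes since the merge base
        capture_output=True, text=True, check=True,
    )
    size = pr_size(result.stdout)
    print(f"PR size: {size} changed lines (limit {limit})")
    return 0 if size <= limit else 1
```

A CI step could run `sys.exit(check_pr_size())` so that oversized PRs fail the check. Teams that prefer a softer nudge can print the size without failing the build.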

PR maturity ratio

PR Maturity measures the percentage of final code that existed when the pull request was first published. Elite teams achieve PR Maturity ratios above 91%, indicating that developers thoroughly prepare their code before requesting review. Teams with low PR Maturity (under 77%) create additional work for reviewers and extend cycle times through multiple revision rounds.

Our research reveals a strong correlation between PR Maturity and merge frequency. Teams with high PR Maturity ratios achieve 2.25 merges per developer per week, while teams with low maturity struggle to exceed 0.75 merges per developer per week. This suggests that preparing code thoroughly before review speeds up the entire process.
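The sketch below shows one way to approximate PR Maturity. It assumes your code review platform lets you separate the total lines changed in a PR from the lines changed by commits pushed after the PR was published. The input names are hypothetical, and the band labels follow the 91% and 77% figures in this section.

```python
def pr_maturity(total_changes: int, changes_after_publish: int) -> float:
    """Percentage of the PR's changes that already existed when it was published.

    total_changes: lines changed across the whole PR by merge time (hypothetical input)
    changes_after_publish: lines changed by commits pushed after the PR was opened
    """
    if total_changes <= 0:
        return 100.0  # nothing changed, so nothing was revised after publishing
    after = min(changes_after_publish, total_changes)
    return 100.0 * (total_changes - after) / total_changes


def maturity_band(ratio: float) -> str:
    # Thresholds from the text: elite above 91%, low maturity under 77%.
    if ratio > 91:
        return "elite"
    if ratio < 77:
        return "low"
    return "middle"
```

For instance, a 200-line PR that picked up 10 more changed lines during review scores 95%, which falls in the elite band.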

Review time breakdown

Traditional cycle time measurements obscure where delays actually occur. Elite teams break review time into granular components: Pickup Time (how long until someone starts reviewing), Approve Time (time from first comment to approval), and Merge Time (approval to merge completion).

Pickup time benchmarks:

- Elite: Under 8 hours

- Good: 8-14 hours

- Fair: 15-23 hours

- Needs Focus: Over 23 hours

Long pickup times often indicate team capacity issues or unclear review assignment processes. Teams achieving elite pickup times typically use automated reviewer assignment and maintain explicit service level agreements for review response times.
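Splitting review time into its components only requires four timestamps per pull request. The `PullRequestTimeline` record below is hypothetical; you would populate it from your code review platform's API or webhook events. The tier function hard-codes the pickup time benchmarks listed above.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class PullRequestTimeline:
    # Hypothetical event timestamps for a single pull request
    opened: datetime        # PR published for review
    first_review: datetime  # first review comment or action
    approved: datetime
    merged: datetime


def hours(delta: timedelta) -> float:
    return delta / timedelta(hours=1)


def review_breakdown(pr: PullRequestTimeline) -> dict[str, float]:
    """Split review time into pickup, approve, and merge phases (in hours)."""
    return {
        "pickup_h": hours(pr.first_review - pr.opened),
        "approve_h": hours(pr.approved - pr.first_review),
        "merge_h": hours(pr.merged - pr.approved),
    }


def pickup_tier(pickup_h: float) -> str:
    # Pickup benchmarks from the text: <8 Elite, 8-14 Good, 15-23 Fair, >23 Needs Focus
    if pickup_h < 8:
        return "Elite"
    if pickup_h <= 14:
        return "Good"
    return "Fair" if pickup_h <= 23 else "Needs Focus"
```

Aggregating these per-PR values (for example, as a weekly median) shows which phase is actually driving longer review times.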

Approve time analysis

Elite teams complete the approval process within 15 hours of first review comment, while struggling teams require over 45 hours. The difference stems from review quality and communication effectiveness. Elite teams provide actionable feedback that authors can address quickly, while ineffective reviews create back-and-forth discussions that extend approval cycles.

Code quality and rework metrics

Refactor rate vs rework rate

Understanding the difference between intentional code improvement and unplanned rework provides insights into technical debt management and development efficiency. Refactor Rate measures changes to code older than 21 days, typically representing planned technical debt reduction or feature enhancement. Rework Rate tracks changes to recent code, often indicating unclear requirements or inadequate initial implementation.

Elite teams maintain Refactor Rates below 11% and Rework Rates under 3%. This balance suggests they invest appropriately in technical debt reduction while minimizing thrash from poor planning or hasty implementation decisions.
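Refactor and rework rates follow from the 21-day cutoff described above, given the age of each line a change touches. One possible source for those ages is `git blame` run against the parent commit. This sketch assumes both rates are expressed as a percentage of all changed lines, including brand-new lines. Your tooling may use a different denominator.

```python
def refactor_rework_rates(modified_line_ages: list[int], new_lines: int,
                          threshold_days: int = 21) -> tuple[float, float]:
    """Return (refactor_rate, rework_rate) as percentages of all changed lines.

    modified_line_ages: age in days of each pre-existing line the change touched
    new_lines: lines that did not exist before the change
    """
    total = len(modified_line_ages) + new_lines
    if total == 0:
        return 0.0, 0.0
    # Lines older than the cutoff count as refactoring; younger ones as rework.
    refactor = sum(1 for age in modified_line_ages if age > threshold_days)
    rework = len(modified_line_ages) - refactor
    return 100 * refactor / total, 100 * rework / total
```

A change of 100 lines that rewrites two month-old lines and one five-day-old line comes out at a 2% refactor rate and a 1% rework rate. Both values sit inside the elite ranges above.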

Work distribution analysis

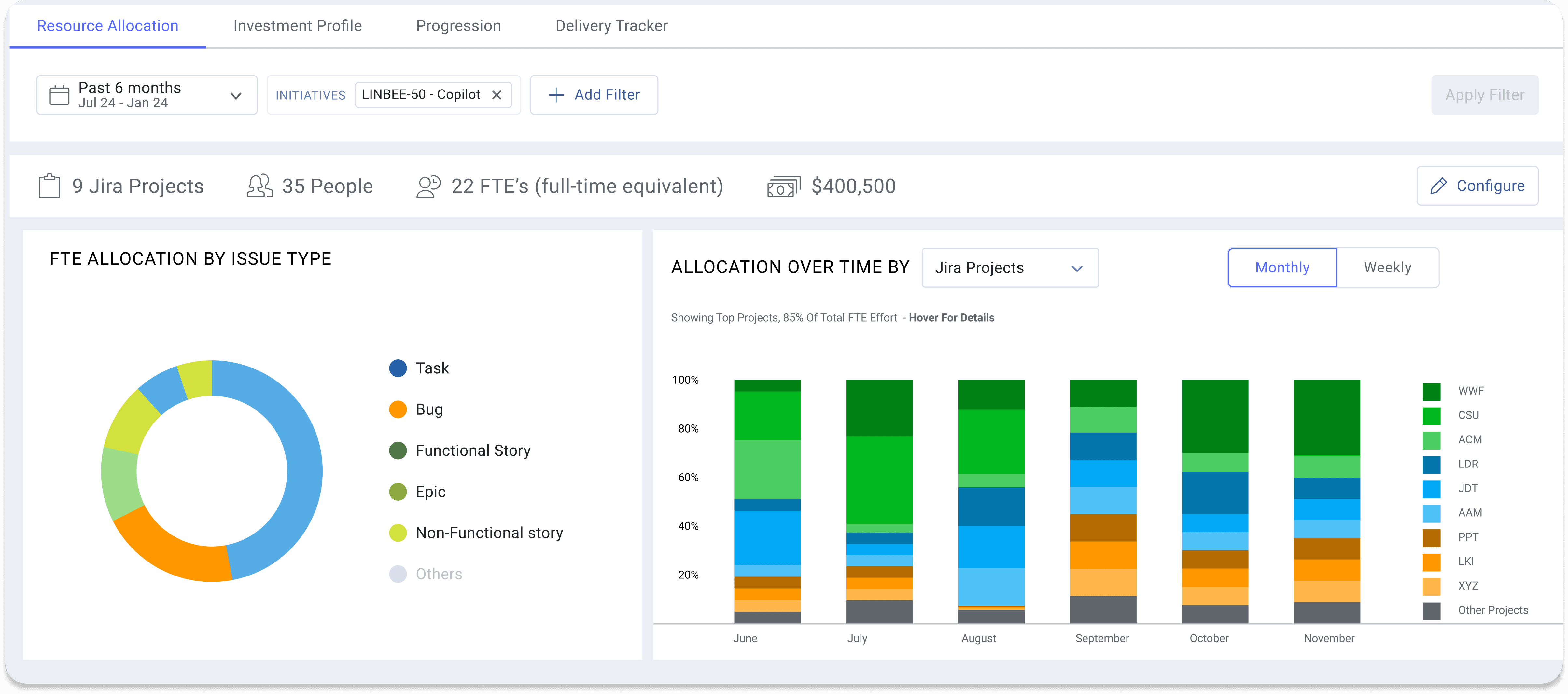

Our engineering investment research reveals that elite teams allocate approximately 55% of development time to new value creation, 20% to feature enhancement, 15% to developer experience improvements, and 10% to operational maintenance. Teams with healthy work distribution achieve better long-term velocity because they balance immediate feature delivery with foundational improvements.

Teams spending over 25% of their time on operational maintenance often struggle with technical debt or infrastructure reliability issues. Conversely, teams spending under 40% on new value creation may face competitive pressure or product-market fit challenges.

Predictive power of leading indicators

Leading indicators provide 2-4 week advance warning of DORA metric degradation. For example, increasing PR sizes and pickup times typically precede cycle time increases by 3-4 weeks. Teams monitoring these patterns can implement corrective actions before delivery performance suffers.

The predictive relationship works because leading indicators measure process health while DORA metrics measure process outcomes. Process degradation usually precedes outcome degradation, giving teams intervention opportunities if they track the right signals.
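A simple way to start watching leading indicators for drift is to compare recent weekly values against an earlier baseline. The three-week window and 20% threshold below are illustrative assumptions, not values from the research. The same check works for weekly median PR size or pickup time.

```python
from statistics import median


def is_rising(weekly: list[float], recent_weeks: int = 3, threshold: float = 0.2) -> bool:
    """True if the median of the last `recent_weeks` values exceeds the median of
    all earlier weeks by more than `threshold` (0.2 means 20%)."""
    if len(weekly) <= recent_weeks:
        return False  # not enough history to form a baseline
    baseline = median(weekly[:-recent_weeks])
    recent = median(weekly[-recent_weeks:])
    return baseline > 0 and (recent - baseline) / baseline > threshold
```

Comparing medians rather than single weeks keeps one unusually large PR from triggering an alert.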

Implementation strategy

Most teams should start with PR size and pickup time measurements because they require minimal tooling investment and provide immediate actionable insights. These metrics can be calculated from git and code review platform APIs without complex data pipeline development.

Teams achieving consistent performance on basic leading indicators can add more sophisticated measurements like PR Maturity ratios and work distribution analysis. The key is establishing measurement discipline before adding complexity.

Developer experience metrics: The human side of productivity

While delivery metrics reveal process efficiency, developer experience metrics capture the human elements that drive sustainable engineering performance. Poor developer experience leads to higher turnover, delayed feature delivery, and lower code quality. Teams with optimized developer workflows deliver faster, retain more engineers, and ship higher-quality code.

Research consistently shows strong correlations between developer experience and business outcomes. These software development metrics provide early warning signals for retention risks and productivity degradation that impact long-term engineering effectiveness.

Key developer experience metrics

PR size and code review flow

PR Size measures the number of lines of code changed in pull requests. Our research shows that smaller PRs (under 200 lines) correlate with faster review cycles, lower defect rates, and improved developer satisfaction. Developers experience higher job satisfaction when their code moves smoothly through the review process rather than getting stuck in lengthy approval cycles.

Teams maintaining PR sizes under 194 lines merge 5x more frequently than teams with oversized pull requests. This acceleration reduces the friction developers experience during code reviews and maintains development momentum.

Review time and pickup time impact

Review Time measures how long it takes to complete code reviews, while Pickup Time tracks how long PRs wait before someone begins reviewing them. Elite teams complete reviews in under 12 hours and achieve pickup times under 8 hours, enabling faster feedback loops and improved learning opportunities.

Extended review times disrupt developer flow and create frustration as developers wait for feedback on their work. When reviews drag on, developers often start new tasks, creating costly context switches when they need to revisit the original PR to address feedback.

Merge time and deploy time

Merge Time measures the duration from PR approval to actual merge, while Deploy Time tracks the journey from merge to production. Elite teams maintain merge times under 7 hours and deploy times under 21 hours, enabling faster feature delivery and stronger connections between development work and user impact.

Long merge and deploy times create developer frustration as completed and approved work sits idle. Developers lose the satisfaction of seeing their contributions reach users quickly, which impacts motivation and engagement over time.

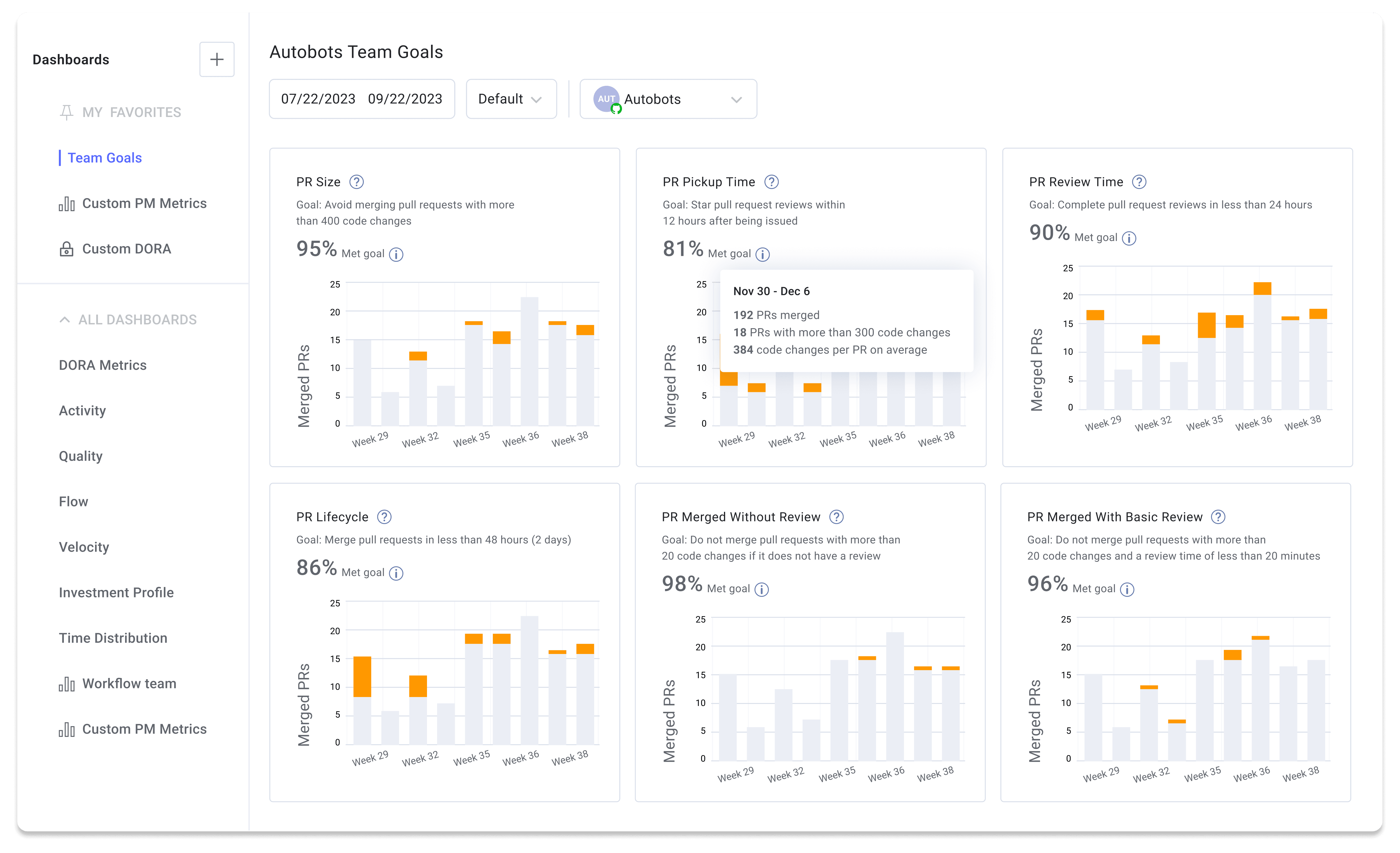

Focus time and meeting team goals

Focus Time measures periods of uninterrupted work developers achieve without context switching or waiting on external factors. Optimizing for focus time contributes to increased productivity through deeper problem-solving, enhanced code quality through concentrated attention, and improved developer satisfaction.
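Focus time can be estimated from calendar data by summing the gaps between meetings that are long enough for deep work. The sketch below assumes a two-hour minimum block, which is a hypothetical threshold. Adjust it to whatever your team considers uninterrupted time.

```python
from datetime import datetime, timedelta

MIN_FOCUS = timedelta(hours=2)  # hypothetical minimum for an uninterrupted block


def focus_time(day_start: datetime, day_end: datetime,
               busy: list[tuple[datetime, datetime]]) -> timedelta:
    """Sum the gaps between busy intervals that last at least MIN_FOCUS."""
    total = timedelta()
    cursor = day_start  # end of the latest busy period seen so far
    for start, end in sorted(busy):
        start, end = max(start, day_start), min(end, day_end)
        if start >= end or end <= cursor:
            continue  # outside the workday or already covered by an earlier meeting
        if start - cursor >= MIN_FOCUS:
            total += start - cursor
        cursor = end
    if day_end - cursor >= MIN_FOCUS:
        total += day_end - cursor
    return total
```

Consider a 9:00 to 17:00 day with meetings at 10:00-11:00 and 13:00-13:30. It yields 5.5 hours of focus time: the 11:00-13:00 gap and the afternoon both count, but the single hour before the first meeting does not.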

Meeting Team Goals tracks how well teams achieve their organizational objectives. Regularly achieving goals builds team cohesion and demonstrates visible progress, which is essential for developer satisfaction. Teams that consistently meet goals experience enhanced motivation, clearer direction, and data-backed celebration of wins.

Task balance and work distribution

Task Balance measures the distribution of different work types across your team: new features, enhancements, developer experience improvements such as refactoring, and maintenance. A healthy mix includes approximately 55% new value creation, 20% feature enhancement, 15% developer experience improvements, and 10% operational maintenance.

When developers focus exclusively on one task type, particularly maintenance or bug fixes, satisfaction and engagement decline. Balanced workloads provide developers with both challenges and wins, keeping them engaged while avoiding burnout from repetitive work.

Measuring developer toil and workflow efficiency

Quantifying developer toil

Developer toil encompasses repetitive, manual tasks that could be automated but currently require human intervention. Examples include manual deployment processes, flaky test debugging, environment setup, and routine maintenance tasks. Elite teams minimize toil through automation and process improvement.

Teams achieving elite performance typically report under 15% of development time spent on toil-related activities. Teams exceeding 30% toil time struggle with delivery velocity because developers spend more energy on process overhead than feature creation.

Unplanned work and context switching

Unplanned work refers to unexpected tasks like production issues or urgent bug fixes that arise during development cycles. High levels of unplanned work disrupt developer flow, create context switching, and undermine development process predictability.

Work in Progress (WIP) measurement tracks concurrent tasks per developer. High WIP creates the sensation of working hard while accomplishing little. Developers may stay busy all day but end without completing substantial work, which becomes demoralizing over time. Maintaining appropriate WIP limits (typically 1-2 items per developer) leads to improved focus, faster task completion, and higher quality work.
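A WIP check needs only the assignee of each in-progress item. This sketch assumes you can export those assignees from your project management tool. It flags anyone above the upper end of the 1-2 item guideline.

```python
from collections import Counter

WIP_LIMIT = 2  # upper end of the 1-2 items per developer guideline


def over_wip_limit(in_progress_assignees: list[str], limit: int = WIP_LIMIT) -> dict[str, int]:
    """Map each developer holding more than `limit` in-progress items to their count."""
    counts = Counter(in_progress_assignees)
    return {dev: n for dev, n in counts.items() if n > limit}
```

Running it at stand-up time turns an abstract WIP policy into a short, concrete list of people who may need help finishing work before starting more.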

While developer experience metrics reveal team satisfaction and workflow efficiency, engineering investment metrics show whether resource allocation decisions support both developer happiness and business objectives.


Engineering investment metrics: Resource allocation analysis

Understanding where engineering teams actually spend their development time provides crucial insights for optimizing both productivity and business outcomes. Engineering investment metrics reveal whether teams allocate resources effectively across competing priorities: new feature development, technical debt reduction, operational maintenance, and developer experience improvements.

Our analysis of engineering investment patterns across 3,000+ development teams reveals significant variations in resource allocation, with direct correlations to delivery performance and developer satisfaction. Teams with optimized investment profiles achieve 40% faster feature delivery while maintaining higher code quality and developer engagement.

The four categories of engineering work

Engineering work falls into four distinct investment categories, each serving different strategic purposes. Elite teams maintain specific allocation patterns that balance immediate business needs with long-term sustainability and developer productivity.

New Value Creation represents work that directly increases revenue and growth through new customer acquisition or expansion. This includes implementing new features, building roadmap functionality, and supporting new platforms or partner integrations. Elite teams allocate approximately 55% of their development time to new value creation, ensuring consistent progress toward business objectives.

Teams spending less than 40% of time on new value often struggle with competitive positioning or technical debt burden. Teams exceeding 70% new value allocation risk accumulating maintenance problems that eventually slow delivery velocity.

Feature Enhancements involve improving existing functionality to ensure customer satisfaction and retention. This category includes customer-requested improvements, performance optimizations, security enhancements, and iterations to improve adoption or retention metrics. Healthy teams invest around 20% of development time in feature enhancements.

Developer Experience Improvements encompass activities that enhance team productivity and satisfaction: code restructuring, testing automation, better developer tooling, and reducing operational overhead. Elite teams dedicate 15% of development time to developer experience, recognizing that productivity investments compound over time.

Teams underinvesting in developer experience often see declining velocity as technical debt accumulates and developer frustration increases. Teams overinvesting may sacrifice short-term delivery for long-term optimization.

Keeping the Lights On (KTLO) includes minimum operational tasks required for daily business continuity: security maintenance, service monitoring, troubleshooting, and maintaining current service levels. Well-functioning teams limit KTLO work to approximately 10% of development time.

Teams spending over 25% of time on KTLO work typically face infrastructure reliability issues or technical debt problems that require immediate attention before focusing on new feature development.
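Tracking the investment profile amounts to summing effort per category and comparing the shares against the guardrails above. The sketch assumes each work item is already tagged with one of four hypothetical category keys and an effort value, such as story points or engineer-days. Items with other tags count toward the total but get no share of their own.

```python
from collections import defaultdict

CATEGORIES = ("new_value", "enhancement", "dev_experience", "ktlo")


def investment_profile(items: list[tuple[str, float]]) -> tuple[dict[str, float], list[str]]:
    """Return each category's share of total effort (%) and any guardrail warnings.

    items: (category, effort) pairs; effort could be story points or engineer-days.
    """
    effort = defaultdict(float)
    for category, amount in items:
        effort[category] += amount
    total = sum(effort.values())
    shares = {c: 100 * effort[c] / total if total else 0.0 for c in CATEGORIES}
    warnings = []
    # Guardrails quoted in the text; the 55/20/15/10 split is the reference profile.
    if shares["new_value"] < 40:
        warnings.append("new value below 40%")
    elif shares["new_value"] > 70:
        warnings.append("new value above 70%")
    if shares["ktlo"] > 25:
        warnings.append("KTLO above 25%")
    return shares, warnings
```

Running this quarterly, as elite teams do, makes drift toward KTLO-heavy or feature-only allocation visible before it shows up in velocity.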

Investment profile optimization strategies

Our research reveals that investment allocation patterns vary significantly by organizational context and growth stage. Startups often skew toward higher new value percentages (60-70%) to achieve product-market fit and competitive differentiation. Enterprise organizations may invest more heavily in feature enhancements and operational stability.

The key insight is intentionality: elite teams make conscious decisions about resource allocation rather than allowing work distribution to emerge organically. They track investment patterns quarterly and adjust based on business priorities and technical health indicators.

Teams achieving optimal investment profiles report 25% higher developer satisfaction scores and 30% more predictable delivery timelines compared to teams with unbalanced allocation patterns. This correlation suggests that thoughtful resource management benefits both business outcomes and team morale.

Predictability and planning metrics

Predictability in software development enables better business planning, resource allocation, and stakeholder communication. While perfect prediction remains impossible due to inherent complexity and discovery-driven work, elite teams achieve remarkable consistency in delivery timelines through effective planning and execution metrics.

Planning accuracy and capacity management

Planning Accuracy measures how well teams estimate the scope of work they can complete within specific timeframes, typically sprints or quarters. This software development metric reflects both estimation skills and scope management discipline.

Planning accuracy interpretation:

- High Planning Accuracy (75-96%): Excellent scope estimation and execution balance

- Moderate Planning Accuracy (60-74%): Room for improvement in estimation or scope control

- Low Planning Accuracy (below 60%): Significant planning or execution challenges

Teams with high planning accuracy demonstrate better stakeholder communication, reduced scope creep, and more effective resource utilization. They typically achieve 35% more predictable delivery timelines and report higher confidence in meeting business commitments.
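The planning accuracy bands above can be applied with a couple of functions. This sketch assumes planning accuracy is the share of work committed at sprint start that was completed by sprint end; tools differ in exactly how they define it. The text does not describe a band above 96%, so the sketch reports those values separately.

```python
def planning_accuracy(planned: float, planned_completed: float) -> float:
    """Percentage of the work committed at sprint start that was finished by sprint end."""
    if planned <= 0:
        raise ValueError("planned work must be positive")
    return 100 * planned_completed / planned


def planning_band(accuracy: float) -> str:
    # Bands from the text: 75-96% high, 60-74% moderate, below 60% low.
    if accuracy > 96:
        return "above published range"
    if accuracy >= 75:
        return "high"
    return "moderate" if accuracy >= 60 else "low"
```

A team that commits to 40 points and finishes 32 of them lands at 80%, inside the high band.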

Capacity accuracy analysis

Capacity Accuracy measures how well teams estimate their available development bandwidth, accounting for meetings, code reviews, operational tasks, and other non-coding activities. Elite teams achieve capacity accuracy above 80%, indicating realistic workload planning and effective time management.

The correlation between Planning Accuracy and Capacity Accuracy reveals team health patterns:

- High Planning + High Capacity: Excellent scope and execution balance

- Low Planning + High Capacity: Taking on wrong work or facing unplanned interruptions

- High Planning + Low Capacity: Good planning discipline but team overload

- Low Planning + Low Capacity: High risk of delivery delays and scope creep
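The four patterns above can be expressed as a simple lookup. This sketch assumes capacity accuracy is all completed work (planned plus unplanned) divided by planned work. The cut-offs are also assumptions: 75% is the bottom of the "High" planning band, and 80% is the elite capacity threshold mentioned earlier. The function and constant names are hypothetical.

```python
def capacity_accuracy(planned_completed: int, unplanned_completed: int, planned_total: int) -> float:
    """All completed work relative to planned work, as a percentage (assumed definition)."""
    if planned_total <= 0:
        raise ValueError("planned_total must be positive")
    return 100.0 * (planned_completed + unplanned_completed) / planned_total


# Assumed cut-offs for "high" on each axis, taken from this guide's benchmarks.
HIGH_PLANNING = 75.0
HIGH_CAPACITY = 80.0

QUADRANTS = {
    (True, True): "Excellent scope and execution balance",
    (False, True): "Taking on wrong work or facing unplanned interruptions",
    (True, False): "Good planning discipline but team overload",
    (False, False): "High risk of delivery delays and scope creep",
}


def health_pattern(planning_pct: float, capacity_pct: float) -> str:
    """Look up the planning/capacity quadrant for one team and period."""
    return QUADRANTS[(planning_pct >= HIGH_PLANNING, capacity_pct >= HIGH_CAPACITY)]


# Example: 20 of 40 planned issues done, plus 16 unplanned issues.
# Planning = 50%, capacity = (20 + 16) / 40 = 90%.
print(health_pattern(50.0, capacity_accuracy(20, 16, 40)))
```

The example lands in the "wrong work or interruptions" quadrant. The team had enough bandwidth, but much of it went to work that was never planned.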

Teams achieving both high planning and capacity accuracy report 50% fewer delivery surprises and maintain a more sustainable development pace over time.

Project management hygiene metrics

Project management hygiene metrics measure the discipline and consistency of development workflow management. These software development performance metrics indicate whether teams maintain clear traceability between planned work and actual code changes.

Issues Linked to Parents tracks the percentage of development tasks connected to higher-level objectives like epics or stories. Elite teams maintain linkage rates above 91%, ensuring clear traceability from strategic initiatives to specific implementation work.

Branches Linked to Issues measures the percentage of code branches that reference specific project management tasks. Elite teams achieve linkage rates above 81%, providing visibility into the alignment between code changes and planned work items.
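Branch linkage is straightforward to estimate from branch names alone. This sketch assumes Jira-style issue keys such as `PAY-142` and a convention of putting the key in the branch name. The regex and the `branch_linkage_rate` helper are illustrative and should be adapted to your tracker.

```python
import re

# Assumption: issue keys look like "PAY-142" (uppercase project key, dash, number)
# and appear somewhere in the branch name.
ISSUE_KEY = re.compile(r"\b[A-Z][A-Z0-9]+-\d+\b")


def branch_linkage_rate(branch_names: list[str]) -> float:
    """Percentage of branches whose name references an issue key."""
    if not branch_names:
        return 0.0
    linked = sum(1 for name in branch_names if ISSUE_KEY.search(name))
    return 100.0 * linked / len(branch_names)


branches = [
    "feature/PAY-142-refund-flow",
    "PAY-150-fix-timeout",
    "hotfix/login-redirect",
    "chore/bump-deps",
    "bugfix/CORE-7-null-check",
]
print(branch_linkage_rate(branches))  # 3 of 5 branches linked
```

At 60%, this sample sits well below the 81% elite mark. The two unlinked branches (a hotfix and a dependency bump) are typical of the work that slips through.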

High traceability scores enable better progress monitoring, clearer stakeholder communication, and more effective resource allocation decisions. Teams with poor traceability often struggle with scope creep and unclear progress reporting.

Estimation and assignment discipline

In Progress Issues with Estimation measures the proportion of active development tasks that have time or effort estimates assigned. Elite teams maintain estimation rates above 59%, enabling better capacity planning and progress tracking.

In Progress Issues with Assignees tracks the percentage of active tasks with designated owners. Elite teams achieve assignment rates above 97%, ensuring clear accountability and workload visibility.
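Both hygiene rates are simple ratios over the issues currently in progress. The sketch below uses a hypothetical `Issue` record. Map its fields from whatever your project management tool exports; the status name "In Progress" is also an assumption.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Issue:
    # Hypothetical minimal issue record.
    key: str
    status: str
    estimate: Optional[float] = None  # story points or hours; None means unestimated
    assignee: Optional[str] = None


def hygiene_rates(issues: list[Issue], in_progress: str = "In Progress") -> dict[str, float]:
    """Share of in-progress issues that carry an estimate and an assignee, in percent."""
    active = [i for i in issues if i.status == in_progress]
    if not active:
        return {"with_estimation": 0.0, "with_assignee": 0.0}
    n = len(active)
    return {
        "with_estimation": 100.0 * sum(i.estimate is not None for i in active) / n,
        "with_assignee": 100.0 * sum(i.assignee is not None for i in active) / n,
    }


issues = [
    Issue("WEB-1", "In Progress", estimate=3, assignee="dana"),
    Issue("WEB-2", "In Progress", assignee="li"),
    Issue("WEB-3", "In Progress", estimate=5, assignee="sam"),
    Issue("WEB-4", "In Progress"),
    Issue("WEB-5", "Done", estimate=2, assignee="dana"),  # not active, so ignored
]
print(hygiene_rates(issues))
```

Here 50% estimation falls short of the 59% elite threshold. The 75% assignment rate is further behind the 97% elite mark.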

Teams maintaining high estimation and assignment discipline report 40% more accurate delivery predictions and 25% fewer coordination problems compared to teams with poor project management hygiene.

Predictability as competitive advantage

Organizations with predictable engineering delivery gain significant competitive advantages: better customer communication, more effective sales pipeline management, reduced business risk, and improved investor confidence. McKinsey research shows that companies with predictable delivery achieve 30% higher customer satisfaction and 25% faster revenue growth.

The business value of predictability extends beyond engineering teams. Marketing can plan campaigns around feature releases, sales can make confident commitments to prospects, and customer success can proactively communicate roadmap progress to key accounts.

Building your engineering metrics program

Implementing an effective engineering metrics program requires more than collecting data from development tools. Elite organizations follow a systematic approach that balances measurement sophistication with practical utility, ensuring that software development metrics drive real improvements rather than creating reporting overhead.

The most successful engineering metrics implementations focus on solving specific problems rather than tracking metrics for their own sake. Teams that start with clear objectives and work backward to identify relevant measurements achieve 60% faster time-to-value and maintain higher adoption rates across engineering organizations.

The three-building-block framework

Get visibility: Correlating data sources

The foundation of any engineering metrics program is establishing visibility across your complete development toolchain. Modern software development generates data in multiple systems: version control, project management tools, CI/CD platforms, and deployment systems. The challenge is correlating these data sources into coherent insights about team performance and delivery health.

DORA metrics exemplify this correlation challenge. Cycle time measurement requires integration between project management systems (when work starts), version control (code changes), code review platforms (approval processes), and deployment systems (production delivery). Teams often start with manual tracking before investing in automated data collection pipelines.
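Once timestamps from each system are joined for a single change, cycle time and its phases reduce to date arithmetic. The sketch below uses hypothetical event names and values. It assumes work begins at whichever comes first: the issue moving to "in progress" or the first commit. Other start definitions are equally valid.

```python
from datetime import datetime, timedelta

# Hypothetical timestamps for one change, each pulled from a different system.
events = {
    "issue_started": datetime(2025, 3, 3, 9, 0),   # project management tool
    "first_commit": datetime(2025, 3, 3, 10, 30),  # version control
    "pr_opened": datetime(2025, 3, 3, 16, 0),      # code review platform
    "pr_approved": datetime(2025, 3, 4, 11, 0),    # code review platform
    "pr_merged": datetime(2025, 3, 4, 12, 0),      # version control
    "deployed": datetime(2025, 3, 4, 15, 0),       # deployment system
}


def hours(delta: timedelta) -> float:
    """Convert a timedelta to fractional hours."""
    return delta.total_seconds() / 3600


def cycle_time_breakdown(ev: dict[str, datetime]) -> dict[str, float]:
    """Split end-to-end cycle time into phases, in hours."""
    start = min(ev["issue_started"], ev["first_commit"])  # assumed start of work
    return {
        "coding": hours(ev["pr_opened"] - start),
        "review": hours(ev["pr_approved"] - ev["pr_opened"]),
        "merge": hours(ev["pr_merged"] - ev["pr_approved"]),
        "deploy": hours(ev["deployed"] - ev["pr_merged"]),
        "total": hours(ev["deployed"] - start),
    }


print(cycle_time_breakdown(events))
```

The phases sum to the total (7 + 19 + 1 + 3 = 30 hours). This makes it easy to see which system's handoff contributes most to the delay.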

Elite teams establish data correlation gradually, beginning with high-impact metrics that require minimal tooling investment. PR size and review time can be calculated from git and code review platform APIs without complex infrastructure. These foundational measurements provide immediate value while teams build more sophisticated data pipelines.
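As an illustration of how little infrastructure these metrics need, this sketch works on records shaped like GitHub REST pull-request and review payloads (`additions`, `deletions`, `created_at`, `merged_at`, `submitted_at`). Field names on other platforms will differ. The metric definitions are assumptions: size is lines added plus deleted, pickup time runs from opening to first review, and review time runs from first review to merge. The sample data is invented.

```python
from datetime import datetime
from statistics import median


def parse(ts: str) -> datetime:
    # GitHub-style ISO 8601 timestamps end in "Z"; normalise for fromisoformat.
    return datetime.fromisoformat(ts.replace("Z", "+00:00"))


def pr_metrics(pr: dict, reviews: list[dict]) -> dict[str, float]:
    """PR size plus pickup and review time in hours for one merged PR."""
    opened = parse(pr["created_at"])
    merged = parse(pr["merged_at"])
    first_review = min(parse(r["submitted_at"]) for r in reviews)
    return {
        "size": pr["additions"] + pr["deletions"],
        "pickup_h": (first_review - opened).total_seconds() / 3600,
        "review_h": (merged - first_review).total_seconds() / 3600,
    }


prs = [
    ({"additions": 120, "deletions": 30, "created_at": "2025-03-03T09:00:00Z",
      "merged_at": "2025-03-03T15:00:00Z"},
     [{"submitted_at": "2025-03-03T11:00:00Z"}]),
    ({"additions": 400, "deletions": 90, "created_at": "2025-03-04T08:00:00Z",
      "merged_at": "2025-03-05T08:00:00Z"},
     [{"submitted_at": "2025-03-04T20:00:00Z"}, {"submitted_at": "2025-03-05T07:00:00Z"}]),
]
results = [pr_metrics(pr, reviews) for pr, reviews in prs]
print("median size:", median(r["size"] for r in results))
print("median pickup (h):", median(r["pickup_h"] for r in results))
```

Medians are used rather than means because a single very large or long-idle PR can otherwise dominate a small sample.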

Diagnose and report: Using benchmarks to identify bottlenecks

Raw metrics become actionable when compared against meaningful benchmarks. Our analysis of 6.1 million pull requests provides industry-standard performance tiers that help teams understand whether their current performance requires attention or represents competitive strength.

For example, knowing that your team has a 7-day (168-hour) cycle time only becomes meaningful when compared to elite performance (under 26 hours) and industry averages. By this guide's benchmarks, 168 hours sits just past the 167-hour threshold for Needs Focus. This context enables prioritized improvement efforts focused on the most impactful bottlenecks.
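A small helper can apply the cycle-time benchmarks quoted in this guide: Elite under 26 hours, Good from 26 to 80 hours, and Needs Focus above 167 hours. The Fair band (80 to 167 hours) is inferred here as the gap between Good and Needs Focus. The function name is hypothetical.

```python
def cycle_time_tier(hours: float) -> str:
    """Classify a cycle time in hours using this guide's benchmark bands."""
    if hours < 26:
        return "Elite"
    if hours <= 80:
        return "Good"
    if hours <= 167:  # Fair band inferred from the Good and Needs Focus cut-offs
        return "Fair"
    return "Needs Focus"


seven_days = 7 * 24  # 168 hours
print(seven_days, cycle_time_tier(seven_days))
```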

Effective diagnosis requires segmenting metrics across different dimensions: teams, repositories, services, and time periods. A team-level cycle time problem might stem from specific repository complexity, individual skill gaps, or process inconsistencies that become visible through data segmentation.

The ability to segment software development metrics within your platform provides crucial diagnostic capabilities. We recommend analyzing four key segments: team-based bottlenecks, repository or codebase-specific issues, service-level performance variations, and custom metrics based on labels and tags.
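Segmentation is a group-by over the same underlying records. In this sketch, with invented data, the team-level median hides the fact that one repository drives most of the delay. `median_by` is a hypothetical helper.

```python
from collections import defaultdict
from statistics import median

# Hypothetical per-PR records: (team, repository, cycle time in hours).
records = [
    ("payments", "billing-api", 20.0),
    ("payments", "billing-api", 30.0),
    ("payments", "legacy-ledger", 150.0),
    ("payments", "legacy-ledger", 190.0),
    ("growth", "web-app", 18.0),
    ("growth", "web-app", 24.0),
]


def median_by(rows, key_index: int) -> dict[str, float]:
    """Median cycle time grouped by the column at key_index (0 = team, 1 = repo)."""
    groups: dict[str, list[float]] = defaultdict(list)
    for row in rows:
        groups[row[key_index]].append(row[2])
    return {k: median(v) for k, v in groups.items()}


print(median_by(records, 0))  # by team
print(median_by(records, 1))  # by repository
```

By team, payments looks uniformly slow at 90 hours. By repository, `billing-api` is healthy at 25 hours, while `legacy-ledger` sits at 170 hours.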

Build improvement strategy: Setting data-backed goals

Translating diagnostic insights into improvement requires setting specific, measurable goals that teams can achieve within reasonable timeframes. Elite teams focus on moving up one performance tier (from Fair to Good, or Good to Elite) rather than attempting dramatic improvements that often fail due to unrealistic expectations.

Successful goal-setting follows several proven patterns. Teams narrow their scope to 1-2 metrics per quarter, avoiding the temptation to optimize everything simultaneously. They tie improvement goals to specific team outcomes and get specific about target metrics, such as "Keep PRs merged without reviews to under 1 per week across all teams."
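A goal like "Keep PRs merged without reviews to under 1 per week across all teams" can be checked mechanically. This sketch assumes a hypothetical merged-PR log that records the team, merge date, and number of approving reviews. It groups misses by ISO calendar week.

```python
from collections import Counter
from datetime import date

# Hypothetical merged-PR log: (team, merge date, number of approving reviews).
merged_prs = [
    ("payments", date(2025, 3, 3), 1),
    ("payments", date(2025, 3, 4), 0),
    ("payments", date(2025, 3, 6), 0),
    ("growth", date(2025, 3, 5), 2),
    ("growth", date(2025, 3, 12), 0),
]

GOAL_LIMIT = 1  # goal: fewer than 1 unreviewed merge per team per week


def unreviewed_per_week(prs) -> Counter:
    """Count PRs merged without review, keyed by (team, ISO year, ISO week)."""
    counts: Counter = Counter()
    for team, merged_on, approvals in prs:
        if approvals == 0:
            year, week, _ = merged_on.isocalendar()
            counts[(team, year, week)] += 1
    return counts


misses = {k: n for k, n in unreviewed_per_week(merged_prs).items() if n >= GOAL_LIMIT}
print(misses)
```

Any entry in `misses` is a team-week that broke the goal. That is concrete enough to review in a weekly check-in.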

Teams that build custom dashboards focused on their improvement metrics achieve 40% better goal attainment compared to teams relying on generic reporting tools. The key is keeping selected metrics visible and checking progress frequently rather than waiting for quarterly reviews.

Implementation roadmap

Starting with DORA + leading indicators

Most teams should begin their engineering metrics program with DORA metrics supplemented by key leading indicators. This combination provides both outcome measurement (DORA) and predictive insights (leading indicators) without overwhelming teams with excessive data collection requirements.

A foundational dashboard should include cycle time, deployment frequency, change failure rate, and mean time to recovery from the DORA framework. Add PR size, pickup time, and review time as leading indicators that predict DORA performance trends 2-4 weeks in advance.
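Two of the DORA outcome metrics are simple ratios once deployments are joined with incident data. This sketch uses a hypothetical `Deployment` record. It simplifies recovery time by measuring from the deployment itself. If you have incident-start timestamps, measuring from those is more accurate.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class Deployment:
    # Hypothetical deployment record joined with incident data.
    deployed_at: datetime
    caused_failure: bool
    restored_at: Optional[datetime] = None  # when service recovered, if it failed


def change_failure_rate(deploys: list[Deployment]) -> float:
    """Percentage of deployments that caused a production failure."""
    if not deploys:
        return 0.0
    return 100.0 * sum(d.caused_failure for d in deploys) / len(deploys)


def mean_time_to_recovery_hours(deploys: list[Deployment]) -> float:
    """Average hours from a failing deployment to restored service (simplified start)."""
    durations = [
        (d.restored_at - d.deployed_at).total_seconds() / 3600
        for d in deploys
        if d.caused_failure and d.restored_at is not None
    ]
    return sum(durations) / len(durations) if durations else 0.0


deploys = [
    Deployment(datetime(2025, 3, 3, 10), False),
    Deployment(datetime(2025, 3, 3, 15), True, datetime(2025, 3, 3, 17, 30)),
    Deployment(datetime(2025, 3, 4, 11), False),
    Deployment(datetime(2025, 3, 5, 9), False),
]
print(change_failure_rate(deploys), mean_time_to_recovery_hours(deploys))
```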

Teams achieving consistent measurement and improvement on these core software development metrics can gradually add more sophisticated measurements like developer experience indicators and engineering investment analysis. The principle is establishing measurement discipline before adding complexity.

Goal setting and progress tracking

Effective goal setting requires balancing ambition with achievability. Teams moving from "Needs Focus" to "Fair" performance have made significant progress even if they haven't reached elite benchmarks. The key is consistent improvement direction rather than absolute performance levels.

Regular progress review cycles (monthly or every two weeks) maintain momentum and enable course corrections when improvement strategies aren't working. Teams that review progress frequently achieve goals 70% more often than teams with quarterly-only check-ins.

Building team buy-in

Engineering metrics programs succeed when developers understand how measurements improve their daily work experience rather than simply providing management reporting. Transparent communication about metric purposes and improvement outcomes builds trust and engagement across engineering teams.

Share software development metrics dashboards with entire teams to create collective ownership of improvement efforts. When developers can see how their process changes impact team-level performance, they become active participants in optimization rather than passive subjects of measurement.

Organizational context: Adapting metrics by team size

Software development metrics interpretation requires understanding organizational context. The same metric values indicate different performance levels depending on team size, industry constraints, and business priorities. Elite performance looks different at a 10-person startup than at a 1,000-person enterprise engineering organization.

Startup performance characteristics

Startup engineering teams often achieve elite DORA performance through advantages unavailable to larger organizations. Small teams operate with minimal bureaucracy, enabling faster decision-making and reduced coordination overhead. Startups typically prioritize speed and market responsiveness over formal processes, allowing engineers to test, iterate, and release code rapidly.

Our research shows that startup teams consistently outperform larger organizations on deployment frequency and cycle time metrics. They face fewer compliance constraints, legacy system dependencies, and cross-team coordination requirements that slow delivery in enterprise environments.

However, startup speed often comes with tradeoffs in process maturity and quality assurance. Startup teams may achieve fast cycle times while experiencing higher change failure rates due to abbreviated testing processes or accumulated technical debt. The key is understanding these tradeoffs and optimizing for business-appropriate performance profiles.

Scale-up and enterprise considerations

Scale-up organizations (100-1,000 employees) face unique challenges as they transition from startup agility to enterprise reliability. They must implement more rigorous processes while maintaining delivery velocity, often resulting in temporary performance degradation during transition periods.

Enterprise teams operate within different constraint systems that impact software development performance metrics. They manage legacy systems requiring extensive regression testing, ensure compliance with industry regulations, and coordinate across multiple technology stacks and business units. These constraints naturally extend cycle times and reduce deployment frequency compared to startup environments.

The solution is establishing context-appropriate benchmarks rather than applying universal standards. Enterprise teams achieving 3-day cycle times may represent elite performance given their operational constraints, while startup teams with similar metrics might indicate process problems.

Industry-specific adaptations

Regulated industries like healthcare, finance, and aerospace require additional compliance steps that impact software development metrics. These teams might prioritize stability metrics (change failure rate, MTTR) over speed metrics (deployment frequency, cycle time) due to regulatory requirements and customer expectations.

Teams in regulated environments should focus on optimization within their constraint systems rather than attempting to match performance standards from unregulated industries. A financial services team that reduces cycle time from 2 weeks to 1 week while maintaining compliance requirements has achieved significant improvement.

Metrics evolution with organizational growth

Software development metrics programs should evolve as organizations mature. Early-stage teams might focus on basic DORA metrics and code quality indicators. Growing teams need more sophisticated collaboration and planning metrics. Enterprise organizations require comprehensive measurement across multiple dimensions, including developer experience, investment allocation, and predictability.

The key principle is matching measurement complexity to organizational capability. Teams that implement overly sophisticated metrics programs before establishing basic measurement discipline often fail to achieve value from their efforts.

Conclusion

Software development metrics provide the foundation for data-driven engineering leadership in an increasingly competitive technology landscape. The comprehensive framework outlined in this guide, based on analysis of 6.1 million pull requests from over 3,000 development teams, offers proven approaches for measuring and improving engineering effectiveness.

The best engineering teams achieve remarkable performance through systematic measurement and continuous improvement. They balance delivery speed with code quality, optimize developer experience alongside business outcomes, and make resource allocation decisions based on data rather than assumptions. These teams consistently outperform competitors in customer satisfaction, revenue growth, and talent retention.

The key to successful implementation is starting with clear objectives and building measurement discipline gradually. Begin with DORA metrics and essential leading indicators, establish team buy-in through transparent communication, and focus improvement efforts on moving up one performance tier at a time. Avoid the temptation to measure everything immediately or optimize metrics in isolation from business context.

Remember that software development metrics serve as tools for optimization, not absolute measures of team value. The most successful engineering leaders use data to identify improvement opportunities, celebrate progress, and create environments where developers can do their best work. When metrics programs focus on removing friction and enabling flow, they create virtuous cycles that benefit both engineering teams and business outcomes.

As your organization grows and matures, evolve your measurement approach to match increasing complexity and changing priorities. Maintain focus on metrics that drive actionable insights and demonstrate clear connections between engineering improvements and business results. This balanced approach ensures that your engineering metrics program creates lasting value for both developers and stakeholders.

Ready to implement these metrics in your organization? LinearB automatically collects and analyzes all the software development metrics covered in this guide, from DORA benchmarks to developer experience indicators, without requiring manual data entry or complex integrations. See how LinearB can help you build a data-driven engineering culture that drives both developer satisfaction and business results.

Frequently asked questions

What are the most important software development metrics to track?

Start with the four DORA metrics: how long features take to ship (cycle time), how often you deploy (deployment frequency), how often deployments break (change failure rate), and how quickly you fix problems (mean time to recovery). Based on analysis of 6.1 million pull requests, elite teams achieve cycle times under 26 hours and deploy more than once per service per day. Supplement these software development metrics with leading indicators like PR size, pickup time, and review time, which predict DORA performance trends.

What is a good cycle time for software development?

Elite teams maintain cycle times under 26 hours, while good performance ranges from 26 to 80 hours. Teams exceeding 167 hours need immediate attention to remove bottlenecks and improve delivery speed. Cycle time covers the complete journey from when development work begins until code reaches production, making it one of the most comprehensive software development performance metrics.

How do you measure developer productivity effectively?

Developer productivity combines delivery speed, code quality, and developer experience. Track DORA metrics for delivery outcomes, monitor PR size and review time for process efficiency, and measure developer experience through focus time and task balance. Avoid measuring individual developer output in isolation, as software development is inherently collaborative work that requires team-level measurement approaches.

What are DORA metrics and why do they matter for engineering teams?

DORA metrics are four key indicators measuring software delivery performance: cycle time, deployment frequency, change failure rate, and mean time to recovery. They provide standardized benchmarks for comparing team performance across organizations and correlate strongly with business outcomes. Teams achieving elite DORA performance report 2.6x higher revenue growth and 2.2x higher profitability compared to low performers.

How often should software development teams deploy code?

Elite teams deploy more than once per service per day, while good teams deploy between 0.5 and 1 times per service per day. Teams deploying fewer than 0.15 times per day typically have process bottlenecks that reduce delivery speed and increase deployment risk. Higher deployment frequency enables faster customer feedback, smaller batch sizes, and more confident release processes.
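A deployment log is enough to compute per-service daily frequency and place it in these bands. In this sketch, the Fair band (0.15 to 0.5 per day) is inferred as the gap between Good and Needs Focus. The log, service names, and helper functions are hypothetical.

```python
from collections import Counter
from datetime import date


def deploys_per_service_per_day(deploys: list[tuple[str, date]], start: date, end: date) -> dict[str, float]:
    """Average daily deployments per service over an inclusive date window."""
    days = (end - start).days + 1
    counts = Counter(service for service, day in deploys if start <= day <= end)
    return {service: n / days for service, n in counts.items()}


def deploy_tier(per_day: float) -> str:
    """Bands from this guide; Fair (0.15-0.5) is inferred from the neighbouring bands."""
    if per_day > 1:
        return "Elite"
    if per_day >= 0.5:
        return "Good"
    if per_day >= 0.15:
        return "Fair"
    return "Needs Focus"


window_start, window_end = date(2025, 3, 3), date(2025, 3, 12)  # 10 days
log = (
    [("checkout", date(2025, 3, 3 + i % 10)) for i in range(14)]  # 14 deploys
    + [("search", date(2025, 3, 4 + i)) for i in range(6)]        # 6 deploys
    + [("reports", date(2025, 3, 10))]                             # 1 deploy
)
for service, rate in deploys_per_service_per_day(log, window_start, window_end).items():
    print(service, rate, deploy_tier(rate))
```

Measuring per service matters. An organization-wide average across these three services would look healthy even though `reports` ships only once every ten days.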

How do you benchmark software development team performance?

Compare your software development metrics against industry benchmarks using Elite/Good/Fair/Needs Focus performance tiers. For example, elite cycle time is under 26 hours while elite change failure rate is under 1%. Use data from thousands of engineering teams for accurate comparisons rather than relying on theoretical targets or isolated case studies.

What metrics predict software development success?

Leading indicators like PR size (elite: under 194 changes), pickup time (elite: under 8 hours), and planning accuracy (75-96%) predict DORA performance 2-4 weeks in advance. Teams with smaller PRs and faster review cycles consistently achieve better delivery outcomes. These predictive metrics help teams fix problems before they impact customer-facing delivery timelines.

How do you implement an engineering metrics program successfully?

Start with DORA metrics plus key leading indicators (PR size, pickup time, review time) before adding complexity. Focus on 1-2 improvement goals per quarter rather than trying to optimize everything simultaneously. Build team buy-in by sharing dashboards transparently and demonstrating how metrics improve daily developer experience rather than serving only management reporting needs.
