Developer Experience metrics directly impact your business outcomes, but communicating this value to executives and investors requires strategic translation. This blog shows how to measure DevEx ROI, create compelling executive reports, and demonstrate the business impact of your engineering initiatives.

Are you struggling to justify DevEx investments to business stakeholders? Finding it challenging to demonstrate how engineering productivity metrics connect to business outcomes? You're not alone - this is one of the most common challenges facing Developer Experience leaders today.

In this blog, you'll discover:

- Which DevEx metrics truly matter to executive teams and boards

- How to translate technical metrics into business value

- Practical frameworks for measuring the ROI of your DevEx initiatives

- Strategies for creating compelling executive reports that resonate with business leaders

- How to integrate AI metrics into your DevEx reporting

Why Engineering Leaders Must Translate DevEx to Business Value

Executive teams increasingly recognize that engineering is the engine driving competitive advantage. But despite this growing awareness, many organizations still struggle to connect engineering metrics to business outcomes.

When presenting to executives or board members, you may only get one slide to communicate the health of your engineering organization. This high-stakes situation requires carefully selecting metrics that demonstrate both technical excellence and business impact.

"In many cases, engineering health is going to be the only slide that I get to show," explains Yishai Beeri, CTO at LinearB. "There's a pretty common bias to look at the business metrics, not so much where the leaders are also looking at how the engine is working."

Your engineering team is building your company's future through code. Key engineering health metrics like Cycle Time reflect not only internal efficiency but also how quickly you can get changes to market, which is a critical competitive advantage. Just as sales leaders report on pipeline velocity, engineering leaders must communicate delivery velocity.

But raw numbers aren't enough. Effective reporting requires:

- Benchmarking: Contextualizing your metrics against industry standards

- Trend indicators: Showing progress over time rather than static snapshots

- Business relevance: Connecting technical metrics to outcomes executives care about

Guillermo Manzo, Director of Developer Experience at Expedia Group, emphasizes this translation aspect: "For non-technical executives, you may use language like 'time to market' rather than 'Cycle Time.' Making sure that depending on who you're talking to, your metrics may look a bit different, based on what is most important to the person receiving this information."

Core DevEx Metrics That Demonstrate Business Value

Selecting the right metrics is crucial when communicating with executives. Here are some core DevEx metrics that effectively demonstrate business value, organized into three categories that resonate with business stakeholders.

Automation ROI: From Hours Saved to Business Impact

Automation - including AI-driven workflows - represents a significant opportunity to demonstrate tangible ROI from DevEx initiatives. However, the key is focusing not just on hours "saved" but on how that time is reinvested.

"A lot of times people say, 'Hey, we saved 16 hours or 16,000 hours,' but in reality, you actually reinvested those hours," Manzo explains. "So it's probably worth making sure that how you're communicating the verbiage of what you're using is relevant for executive leaders."

This subtle but critical distinction transforms DevEx from a cost-saving initiative to a value-generating one. Rather than reporting that automation saved 16,000 hours, frame it as "We reinvested 16,000 hours due to leveraging AI and automation within our development processes."

This reinvestment focus aligns with executive priorities around creating new value, not just reducing costs. Supporting metrics to track include:

- Percentage of pull requests automatically merged

- Number of automated workflows executed

- Hours reinvested in value-creating activities

- Impact on developer satisfaction and retention

Efficiency Metrics: Connecting Cycle Time to Market Velocity

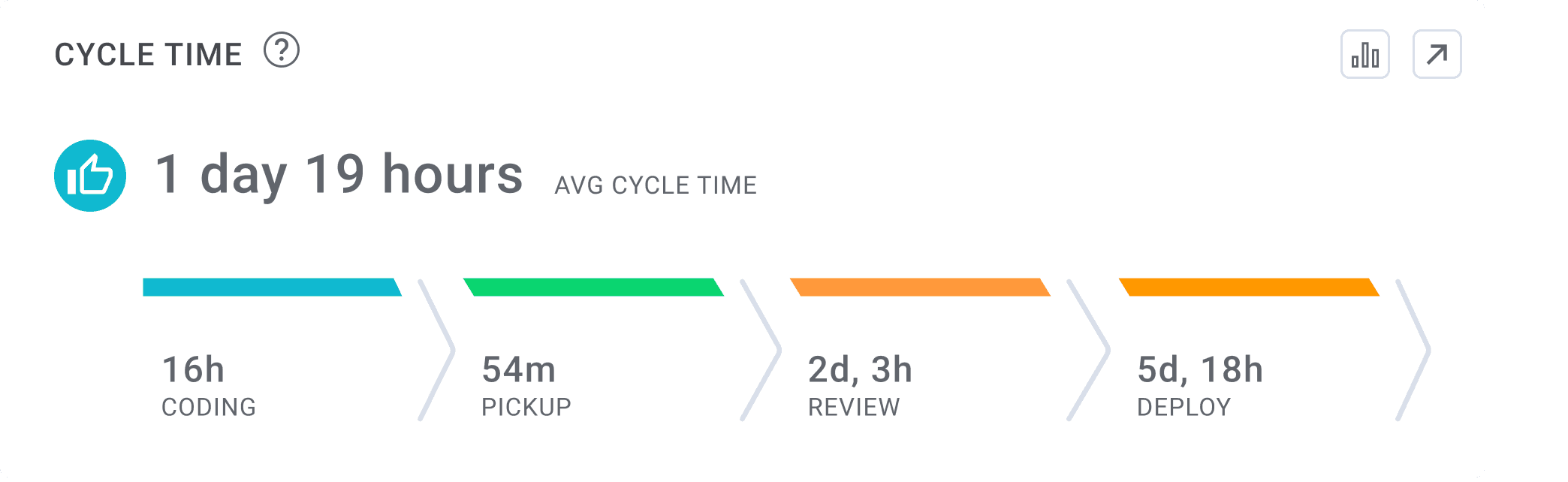

Cycle Time stands as perhaps the most powerful DevEx metric for executives because it directly correlates with business agility and time to market. However, reporting Cycle Time effectively requires breaking it down into its components and identifying where improvements can drive business value.

"I see Cycle Time as a strong outcome metric, but there are various other things that would work through and have inputs into, like whether it be quality, whether it be the review process or some other areas," says Manzo.

When communicating Cycle Time to executives, consider these approaches:

- Break down Cycle Time into its components (coding, pickup, review, deploy) to pinpoint bottlenecks

- Connect improvements to specific business outcomes (faster feature delivery, quicker bug fixes)

- Benchmark against industry standards to show competitive positioning

- Highlight trends over time to demonstrate sustainable improvement
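To make the breakdown concrete, here is a minimal Python sketch that splits one pull request's Cycle Time into the four components listed above. It assumes a common set of phase boundaries (first commit, PR opened, first review, merged, deployed). Tools differ on these boundaries, and the `PullRequest` fields are hypothetical stand-ins for whatever timestamps your Git provider or metrics platform exposes.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class PullRequest:
    # Hypothetical timestamp fields; real names depend on your tooling.
    first_commit: datetime
    opened: datetime
    first_review: datetime
    merged: datetime
    deployed: datetime


def cycle_time_breakdown(pr: PullRequest) -> dict[str, timedelta]:
    """Split Cycle Time into coding, pickup, review, and deploy phases (assumed boundaries)."""
    phases = {
        "coding": pr.opened - pr.first_commit,   # writing code before the PR is opened
        "pickup": pr.first_review - pr.opened,   # waiting for a reviewer to start
        "review": pr.merged - pr.first_review,   # review and rework until merge
        "deploy": pr.deployed - pr.merged,       # merge until release to production
    }
    phases["total"] = pr.deployed - pr.first_commit
    return phases


# Illustrative timestamps, not real data.
pr = PullRequest(
    first_commit=datetime(2024, 5, 1, 9, 0),
    opened=datetime(2024, 5, 2, 9, 0),
    first_review=datetime(2024, 5, 3, 15, 0),
    merged=datetime(2024, 5, 4, 9, 0),
    deployed=datetime(2024, 5, 4, 17, 0),
)
breakdown = cycle_time_breakdown(pr)
# The phase with the longest duration is the first candidate bottleneck.
bottleneck = max(("coding", "pickup", "review", "deploy"), key=breakdown.get)
for phase, duration in breakdown.items():
    print(f"{phase:>7}: {duration.total_seconds() / 3600:.1f} h")
print("largest phase:", bottleneck)
```

In this example the four phases add up to the total by construction, and pickup (30 of 80 hours) stands out. In practice you would aggregate each phase across many PRs, for example with a median, before deciding where to invest.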

Most importantly, connect Cycle Time directly to business language. For engineering-savvy executives, use technical terminology. For business-focused executives, translate Cycle Time into "time to market" or "speed of innovation" - concepts that immediately connect to business strategy.

Developer Experience: The Key to Sustainable Productivity

DevEx is sometimes perceived as a "nice to have" rather than a business-critical investment. Effective reporting bridges this gap by connecting developer experience directly to productivity and business outcomes.

"DevEx is a key strategic business lever," emphasizes Manzo. "It's not just something where we want to punt it down to the engineering community. They have to figure out how to have a good experience while also saving time to market and getting features delivered for companies."

Key metrics that connect DevEx to business value include:

- Merge Frequency: Shows how often developers successfully deliver code, indicating reduced blockers

- Developer satisfaction (measured through NPS or similar surveys)

- Rework Rates: Shows efficiency and quality of the development process

- Context switching: Quantifies productivity losses from fragmented work
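If you measure developer satisfaction with NPS, the standard Net Promoter calculation applies. Take the percentage of promoters (scores of 9 or 10 on a 0-10 "would you recommend" question) and subtract the percentage of detractors (scores of 0 to 6). Here is a minimal sketch, assuming you already have the raw survey scores. The sample responses are illustrative.

```python
def net_promoter_score(scores: list[int]) -> float:
    """Return NPS (from -100 to 100) for a list of 0-10 survey responses."""
    if not scores:
        raise ValueError("need at least one response")
    if any(not 0 <= s <= 10 for s in scores):
        raise ValueError("scores must be between 0 and 10")
    promoters = sum(1 for s in scores if s >= 9)   # 9-10
    detractors = sum(1 for s in scores if s <= 6)  # 0-6
    # Passives (7-8) count toward the total but neither add nor subtract.
    return 100 * (promoters - detractors) / len(scores)


survey = [10, 9, 9, 8, 7, 7, 6, 5, 10, 3]
print(net_promoter_score(survey))  # 4 promoters, 3 detractors, 10 responses -> 10.0
```

Passives dilute both groups, so NPS can swing noticeably with small survey samples. It is worth reporting the response count alongside the score.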

When presenting these metrics to executives, frame the discussion around retention, productivity, and output quality - all factors that directly impact the bottom line.

Measuring the ROI of Developer Experience Initiatives

Moving beyond tracking individual metrics, effective ROI measurement requires a comprehensive approach that connects investment to outcomes. Here's how to build a framework that resonates with business leaders.

From Hours Saved to Hours Reinvested

The distinction between saving and reinvesting developer hours represents a fundamental shift in how DevEx ROI should be calculated and communicated. Rather than positioning DevEx initiatives as pure cost-saving measures, frame them as investments that generate returns through increased value creation.

For example, when reporting on automation implementations:

- Track the total hours freed through automation

- Document where those hours were reinvested (new features, tech debt reduction, innovation)

- Calculate the business value of those reinvested hours

- Present the net gain rather than just the raw time savings
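The four steps above can be sketched in a few lines of Python. This is an illustration under explicit assumptions, not a standard formula. It values every reinvested hour at a single blended, fully loaded hourly cost. That is a conservative proxy, because it ignores any revenue the reinvested work may eventually produce. The category names, hourly rate, and initiative cost are hypothetical inputs.

```python
def reinvestment_report(
    hours_freed: float,
    reinvested: dict[str, float],
    loaded_hourly_cost: float,
    initiative_cost: float,
) -> dict[str, float]:
    """Summarize where freed hours went and the net gain over the initiative's cost.

    Assumption: each reinvested hour is valued at the blended loaded hourly cost.
    """
    total_reinvested = sum(reinvested.values())
    if total_reinvested > hours_freed:
        raise ValueError("cannot reinvest more hours than were freed")
    value = total_reinvested * loaded_hourly_cost
    return {
        "hours_freed": hours_freed,
        "hours_reinvested": total_reinvested,
        "unaccounted_hours": hours_freed - total_reinvested,
        "reinvested_value": value,
        "net_gain": value - initiative_cost,
    }


# Illustrative numbers only.
report = reinvestment_report(
    hours_freed=16_000,
    reinvested={"new features": 9_000, "tech debt reduction": 4_000, "innovation": 2_000},
    loaded_hourly_cost=100.0,
    initiative_cost=400_000.0,
)
for key, amount in report.items():
    print(f"{key}: {amount:,.0f}")
```

Reporting `unaccounted_hours` separately keeps the claim honest. Freed time that you cannot trace to a destination should not be presented as reinvested.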

This approach aligns DevEx with business priorities around growth and innovation rather than mere efficiency. It transforms the conversation from cost-cutting to value generation - a critical distinction when seeking continued investment.

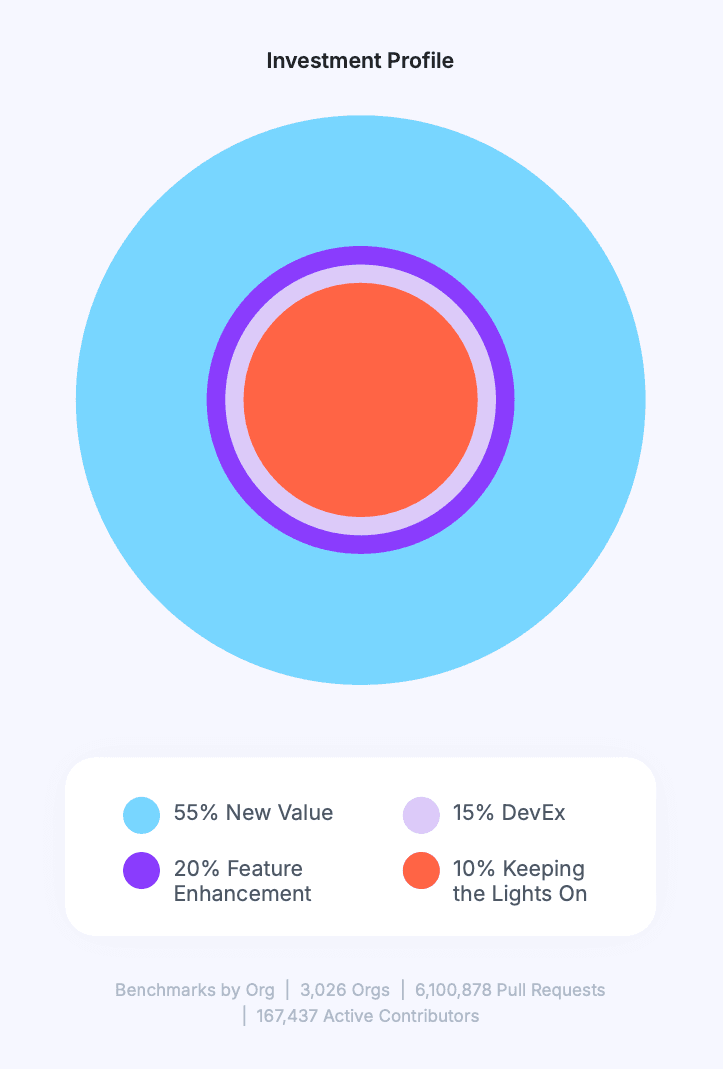

The Investment Profile: Balancing New Value with Operational Needs

One of the most effective tools for communicating DevEx ROI is the investment profile, which visualizes how engineering resources are allocated across different categories of work. This provides executives with a clear picture of where engineering time is spent and how DevEx initiatives can optimize this allocation.

"An investment profile is a good bridge area between product and engineering because it represents where we are actually investing," explains Beeri. "Not the plan, the actual work - where is it actually happening?"

A typical investment profile includes categories like:

New Value: Work that builds new features to drive revenue and growth through new customer acquisition or expansion.

Feature Enhancements: Work that improves existing features or the product to keep customers satisfied.

Developer Experience: Work that improves the productivity and overall experience of development teams.

KTLO (Keeping the Lights On): The minimum work required to keep the company operational day to day while maintaining a stable level of service.

By tracking how this profile changes over time, you can demonstrate how DevEx investments shift resources toward value-creating activities. This directly addresses the common executive question: "If we're only delivering 24.5% new value, why can't we make that 50%?"
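At its core, an investment profile is each category's share of total effort. Here is a minimal sketch that compares two periods. It assumes each completed work item is already tagged with one of the four categories above and carries an effort figure. The sketch uses hours, but issue counts or story points work the same way. The work-item data is invented for illustration.

```python
from collections import defaultdict

CATEGORIES = ("New Value", "Feature Enhancements", "Developer Experience", "KTLO")


def investment_profile(items: list[tuple[str, float]]) -> dict[str, float]:
    """Return each category's percentage share of total effort."""
    totals: dict[str, float] = defaultdict(float)
    for category, effort in items:
        if category not in CATEGORIES:
            raise ValueError(f"unknown category: {category}")
        totals[category] += effort
    grand_total = sum(totals.values())
    if grand_total == 0:
        raise ValueError("no effort recorded")
    return {c: 100 * totals[c] / grand_total for c in CATEGORIES}


# Hypothetical effort (hours) per category for two quarters.
q1 = [("New Value", 250), ("Feature Enhancements", 300), ("Developer Experience", 100), ("KTLO", 350)]
q2 = [("New Value", 330), ("Feature Enhancements", 290), ("Developer Experience", 120), ("KTLO", 260)]
before, after = investment_profile(q1), investment_profile(q2)
for category in CATEGORIES:
    delta = after[category] - before[category]
    print(f"{category:<22} {before[category]:5.1f}% -> {after[category]:5.1f}% ({delta:+.1f} pts)")
```

Showing the change in percentage points lets executives see a shift, such as KTLO shrinking while New Value grows, without having to compare raw hours.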

Manzo suggests addressing this by tying the investment profile to business outcomes: "Ensuring that the executives have the context, understand how we're tying our investments to their requested efforts is probably one of the key value drivers that we can focus on."

Multi-Dimensional ROI Measurement for AI Initiatives

AI initiatives in DevEx require special consideration when measuring ROI, as they impact multiple dimensions of engineering performance simultaneously. Traditional single-metric approaches often fail to capture the full picture.

"A lot of people come in asking what is the ROI of AI? Is this helping me become more productive?" says Beeri. "They typically come in with a single number in mind, like how much is it saving me. In reality, it's typically a multidimensional answer."

These dimensions might include:

- Coding Time reduction

- Code quality impacts

- Review Efficiency

- Developer satisfaction

- Learning curve and adoption rates

"It may be helping you code faster, but then quality goes down or review cycles are longer," cautions Beeri. "There's a bottleneck. It's almost never a single metric that's going to capture it."

This multidimensional approach helps manage executive expectations about AI initiatives while providing a more realistic picture of true ROI. It also helps identify areas where additional investment might be needed to realize the full potential of AI tools.
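One way to keep the answer multidimensional is to compare each dimension before and after AI adoption and flag any that moved in the wrong direction. The sketch below is illustrative. The metric names and the "lower is better" flags are assumptions, and the numbers are not real results.

```python
import math

# For each dimension: (baseline, after AI rollout, lower_is_better). Illustrative values.
dimensions = {
    "coding_time_hours": (20.0, 14.0, True),
    "review_time_hours": (10.0, 13.0, True),
    "change_failure_rate_pct": (8.0, 8.0, True),
    "developer_satisfaction": (6.5, 7.4, False),
}


def ai_impact(dims: dict[str, tuple[float, float, bool]]) -> dict[str, str]:
    """Label each dimension as improved, regressed, or unchanged."""
    verdicts = {}
    for name, (before, after, lower_is_better) in dims.items():
        if math.isclose(before, after):
            verdicts[name] = "unchanged"
        elif (after < before) == lower_is_better:
            verdicts[name] = "improved"
        else:
            verdicts[name] = "regressed"
    return verdicts


for name, verdict in ai_impact(dimensions).items():
    before, after, _ = dimensions[name]
    print(f"{name:<26} {before:>5} -> {after:>5}  {verdict}")
```

In this example coding got faster but reviews got slower. That is the bottleneck pattern Beeri describes, and a single "hours saved" number would have hidden it.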

Effective Communication Strategies for Non-Technical Executives

Even the most impressive DevEx metrics won't drive investment if they aren't communicated effectively to business stakeholders. Effective DevEx reporting relies on adapting your language to match your audience's business context. Engineering metrics must be reframed in terms that resonate with business priorities. Create a translation guide for your most important metrics:

| Engineering Metric | Business Translation |
|---|---|
| Cycle Time | Time to Market |
| PR Review Efficiency | Quality assurance speed |
| Automation hours | Reinvested engineering capacity |
| Change Failure Rate | Product stability |
| Development costs | Engineering investment efficiency |

"Reading the room" is essential, as Beeri notes. "Tailor your presentation to the specific executive audience, focusing on metrics that align with their priorities and using language that resonates with their domain expertise."

Structuring Your DevEx Reporting for Maximum Impact

The structure of your DevEx reporting is as important as the content itself. A well-structured presentation leads executives through a logical progression from technical metrics to business outcomes.

Start with a high-level summary slide that connects directly to business priorities. This might include:

- A handful of key metrics showing engineering health

- Benchmarks that provide industry context

- Trend indicators showing improvement direction

- Clear business outcomes these metrics influence

From there, prepare detailed supporting slides that can be pulled in as needed to address specific questions. "Always think about the next level. Have those under the fold," suggests Beeri. "I've seen a lot of board decks where 85-90% is below the water. These are slides they're not going to get presented unless someone asks, but they're there."

This approach allows you to start strategic and go tactical only when necessary - adapting to the executive team's level of interest and technical understanding.

Measuring and Reporting AI Impact on Developer Experience

AI adoption presents unique challenges for Developer Experience reporting. The rapidly evolving nature of AI tools requires a structured approach to measurement and communication.

"People do get caught up sometimes on trying to find a way to simplify what they're trying to say or trying to package up Gen AI metrics," notes Manzo. "One thing I see is people try to bucket like one big metric or very high-level metrics of all the Gen AI that they've been doing, which actually reduces the ability to tell the right story."

Instead, structure your AI reporting by categorizing different types of AI implementation:

- Code assist tools (GitHub Copilot, Cursor)
  - Measure: Coding time reduction, developer satisfaction
  - Report: "Leveraging GitHub Copilot allowed us to streamline the coding portion of the cycle time"

- Code review tools (AI-powered review)
  - Measure: Review time, review quality, defect rates
  - Report: "Instead of having engineers review 10 PRs a day, we had AI review those same PRs in seconds, freeing up engineers for more valuable work"

- AI coding agents (PR generation, documentation)
  - Measure: Percentage of PRs created by AI, time saved, quality metrics
  - Report: "7% of pull requests are now created by AI, representing approximately X hours reinvested in higher-value work"
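The share of agent-generated PRs is a simple ratio. The hours figure is the part that needs an explicit, stated assumption. Here is a minimal sketch that assumes each PR record carries a hypothetical `ai_generated` flag, for example from a bot author or a PR label. It also assumes you have estimated an average number of hours saved per AI-created PR. That estimate should be stated alongside the result, not presented as measured.

```python
from dataclasses import dataclass


@dataclass
class PR:
    number: int
    ai_generated: bool  # hypothetical flag sourced from your tooling or PR labels


def ai_pr_summary(prs: list[PR], est_hours_saved_per_ai_pr: float) -> tuple[float, float]:
    """Return (percentage of PRs created by AI, estimated hours available to reinvest)."""
    if not prs:
        return 0.0, 0.0
    ai_count = sum(pr.ai_generated for pr in prs)  # True counts as 1
    share = 100 * ai_count / len(prs)
    return share, ai_count * est_hours_saved_per_ai_pr


# Illustrative data: every 20th PR out of 200 was created by an AI agent.
prs = [PR(n, ai_generated=(n % 20 == 0)) for n in range(1, 201)]
share, hours = ai_pr_summary(prs, est_hours_saved_per_ai_pr=1.5)
print(f"{share:.1f}% of PRs created by AI, roughly {hours:.0f} hours to reinvest")
```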

Don't focus exclusively on quantitative metrics. "It's not just numbers within the context of quantitative numbers, but also the qualitative data itself," advises Manzo. "What was the experience of the developer and would they use it again?"

This balanced approach provides a complete picture of AI's impact on your development process while addressing executive concerns about the business value of AI investments.

Transforming DevEx Reporting at Engineering Scale

Effective DevEx reporting evolves through deliberate strategy and adaptation. Consider this approach used by a large enterprise that successfully transformed their DevEx reporting to drive continued investment:

- Started with engineering health: They began by establishing baseline metrics for cycle time, planning accuracy, and quality to demonstrate the current state of engineering operations.

- Connected metrics to business outcomes: Each metric was explicitly linked to specific business goals, such as accelerating time to market, improving predictability, and enhancing customer experience.

- Implemented multi-level reporting: They created a tiered reporting system with executive summaries for board meetings, detailed dashboards for VPs, and team-specific metrics for engineering managers.

- Measured AI adoption systematically: Rather than treating AI as a single initiative, they categorized different AI implementations and measured each according to its specific expected outcomes.

- Developed a common language: They created a shared vocabulary that bridged technical and business concepts, ensuring consistent communication across all levels.

The result? A DevEx program that maintained executive support through multiple budget cycles by consistently demonstrating business impact beyond technical improvements.

Putting It All Together: Your DevEx Reporting Framework

Creating an effective DevEx reporting framework requires thoughtful integration of metrics, business context, and communication strategies. Here's a structured approach you can adapt to your organization:

1. Establish Your Metrics Foundation

Start by selecting a balanced set of metrics that cover three essential dimensions:

- Efficiency: Cycle Time and its components, Deployment Frequency

- Effectiveness: Resource Allocation, Accuracy Scores, Investment Profile

- Experience: Developer satisfaction, Merge Frequency, context switching

For each metric, establish:

- Clear definitions

- Reliable measurement methods

- Current baseline values

- Industry benchmarks

- Target improvement goals
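Capturing these five attributes in one structure keeps definitions consistent from report to report. Here is a minimal sketch. The field names and the linear "share of the gap closed" progress formula are assumptions, and the example values are illustrative, not published benchmarks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricSpec:
    name: str
    definition: str
    measurement: str        # how and where the value is collected
    baseline: float
    benchmark: float        # external reference point for context
    target: float
    lower_is_better: bool = True

    def progress(self, current: float) -> float:
        """Fraction of the baseline-to-target gap closed so far (may exceed 1 or go negative)."""
        gap = self.target - self.baseline
        if gap == 0:
            raise ValueError("target equals baseline; progress is undefined")
        return (current - self.baseline) / gap

    def beats_benchmark(self, current: float) -> bool:
        return current < self.benchmark if self.lower_is_better else current > self.benchmark


cycle_time = MetricSpec(
    name="Cycle Time (hours)",
    definition="First commit to production deploy, median per PR",
    measurement="Git provider and CI/CD timestamps",
    baseline=96.0,
    benchmark=80.0,
    target=48.0,
)
print(f"{cycle_time.progress(72.0):.0%} of the way to target; "
      f"beats benchmark: {cycle_time.beats_benchmark(72.0)}")
```

The progress formula works for both higher-is-better and lower-is-better metrics, because the gap's sign follows the direction from baseline to target.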

2. Map Metrics to Business Outcomes

Explicitly define how different metrics connect to specific business outcomes:

- How Cycle Time impacts Time to Market and competitive advantage

- How quality metrics affect customer satisfaction and retention

- How experience metrics influence developer productivity and retention

Create a matrix that executives can easily reference to understand these connections without requiring deep technical knowledge.

3. Design Your Reporting Strategy

Develop a multi-tiered reporting approach:

- Board level: 1-2 slides focusing on high-level engineering health, trends, and business impact

- Executive level: 5-7 slides covering key metrics, benchmarks, and strategic initiatives

- Management level: Detailed dashboards with team-specific metrics and improvement opportunities

For each audience, adapt your language, visual presentation, and level of technical detail to maximize impact.

4. Implement Continuous Feedback and Refinement

DevEx reporting should evolve based on executive feedback and changing business priorities:

- Solicit input from executives on which metrics resonate most

- Track which presentation approaches drive the most productive discussions

- Adjust your reporting framework as business priorities shift

This ongoing refinement ensures your DevEx reporting remains relevant and impactful over time.

From DevEx Metrics to Strategic Value

Translating DevEx metrics to business value is about fundamentally changing how engineering is perceived within your organization. By effectively communicating the ROI of developer experience initiatives, you elevate engineering from a cost center to a strategic driver of business success.

Remember these key principles as you build your DevEx reporting strategy:

- Focus on reinvestment over savings

- Connect technical metrics to business outcomes

- Adapt your language to your audience

- Structure reporting for maximum impact

- Measure AI impact across multiple dimensions

With this approach, you transform DevEx from a technical initiative to a business imperative, securing the support and investment needed to drive continuous improvement in your engineering organization.

Are you ready to transform how you communicate DevEx value to your executives? Start by evaluating your current metrics, mapping them to business outcomes, and designing a reporting framework that resonates with your specific stakeholders.

For more insights on translating DevEx to non-technical stakeholders, download our CTO Board Deck Template and check out our workshop with Guillermo and Yishai.

FAQ: Measuring and Reporting DevEx ROI

How do you quantify the business impact of developer experience improvements?

Quantify DevEx business impact by focusing on three key areas: efficiency gains (through cycle time reduction), quality improvements (through lower change failure rates), and talent outcomes (through retention rates and satisfaction scores). Connect these to financial metrics by calculating the value of faster time to market, reduced downtime costs, and decreased recruitment expenses. Use a balanced scorecard approach that connects technical improvements to tangible business outcomes like revenue acceleration, cost avoidance, and competitive advantage.

What metrics should I include when reporting developer experience to the board?

When reporting to the board, prioritize metrics that directly connect to business outcomes. Include one high-level engineering health metric (like cycle time with industry benchmarks), a productivity metric showing time reinvestment from automation/AI, a quality indicator demonstrating stability, and a planning accuracy metric showing predictability. Supplement these with trend indicators and business impact statements that explicitly link engineering performance to strategic objectives. Remember that with boards, less is often more – focus on 3-5 impactful metrics rather than creating metric overload.

How do you translate cycle time metrics into business language?

Translate cycle time to business language by framing it as "time to market" or "speed of innovation." Break down cycle time components and connect them to specific business concerns – coding time affects innovation capacity, pickup/review time impacts team efficiency, and deploy time relates to operational agility. Use competitor benchmarks to contextualize your metrics ("our 2-day cycle time represents a 30% market speed advantage"). Always express improvements in business terms: "By reducing cycle time from 5 days to 2 days, we can respond to market changes and customer feedback in 60% less time."
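Phrases like "X% faster" are easy to misstate, so it helps to compute the time reduction and the speedup separately. Here is a small sketch applied to the 5-day to 2-day example above.

```python
def cycle_time_improvement(before_days: float, after_days: float) -> tuple[float, float]:
    """Return (percentage reduction in elapsed time, speedup factor)."""
    if before_days <= 0 or after_days <= 0:
        raise ValueError("cycle times must be positive")
    reduction_pct = 100 * (before_days - after_days) / before_days
    speedup = before_days / after_days
    return reduction_pct, speedup


reduction, speedup = cycle_time_improvement(5, 2)
print(f"{reduction:.0f}% less time, {speedup:.1f}x faster turnaround")
```

Going from 5 days to 2 days means each change reaches customers in 40% of the previous time. That is a 60% reduction, or equivalently a 2.5x speedup. Either phrasing is defensible as long as you say which one you mean.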

What's the difference between developer productivity and developer experience?

Developer productivity focuses on output and efficiency metrics (PRs merged, story points completed, Cycle Time), while developer experience addresses the quality of developers' daily work environment (satisfaction, friction points, cognitive load). They're interconnected but distinct – productivity measures what developers accomplish, while experience examines how they feel while doing it. The most effective engineering organizations measure both, recognizing that superior developer experience drives sustainable productivity, while focusing exclusively on productivity metrics without considering experience leads to burnout and diminishing returns.

How can I measure the ROI of AI tools in my development process?

Measure AI tool ROI through a multi-dimensional approach rather than seeking a single metric. Track time savings through before/after comparisons of coding, review, and documentation time. Measure quality impacts by monitoring defect rates and change failure percentage to ensure automation doesn't compromise reliability. Assess developer experience through satisfaction surveys specifically addressing AI tool usage. Most importantly, document where time savings are reinvested – calculate value generated from freed capacity directed toward feature development or innovation. Remember that successful AI adoption requires measuring both immediate productivity gains and longer-term quality considerations.

What are the most important developer experience metrics for non-technical executives?

For non-technical executives, focus on DevEx metrics with clear business relevance: time to market (cycle time), delivery predictability (planning accuracy), quality stability (change failure rate), and capacity utilization (investment profile). Express these in business language – instead of "PR size decreased by 30%," report the outcome that change produced, such as "feature delivery speed increased by 40% through workflow optimization." Include a developer satisfaction metric (NPS or eNPS) connected to retention cost savings. The key is presenting metrics that directly answer executive questions about speed, quality, cost, and competitive advantage without requiring technical context.

How do you create an effective investment profile for engineering resources?

Create an effective engineering investment profile by categorizing work into four key buckets: new value creation (features, products), technical debt reduction, maintenance/operations, and developer experience improvements. Track actual time spent in each category through work item tagging and repository analysis. Compare your current allocation against industry benchmarks and your strategic objectives – most organizations target 40-60% new value creation. Use the profile in executive discussions to show how DevEx investments shift resources toward value-creating activities over time. Regularly review your profile with product and business leaders to ensure alignment with company priorities and market conditions.

What's the connection between developer satisfaction and business outcomes?

Developer satisfaction directly impacts three critical business outcomes: retention (reducing recruitment costs and knowledge loss), productivity (improving output quality and velocity), and innovation capacity (enabling creative problem-solving). Research shows that teams with high developer satisfaction deliver 28% higher productivity and experience 30% lower turnover rates. Track this connection by correlating satisfaction scores with retention rates, cycle time, and innovation metrics. When reporting to executives, translate satisfaction improvements into financial terms by calculating reduced hiring costs, productivity gains, and accelerated time to market that result from maintaining a positive developer experience.

How frequently should DevEx metrics be reported to executives?

Report DevEx metrics to executives monthly or quarterly depending on your organization's reporting cadence. Monthly reporting works best for rapidly evolving initiatives like AI implementation, while quarterly reporting provides better trend visibility for stable metrics like cycle time or investment profile. Regardless of frequency, maintain consistent measurement definitions to enable accurate trend analysis. Between formal reports, consider sending brief "pulse updates" on high-visibility initiatives to maintain executive awareness. Adjust your cadence based on feedback – if executives request more frequent updates on specific metrics, be prepared to provide them while maintaining the broader reporting schedule.

What benchmarks should we use for developer experience metrics?

Use industry-standard benchmarks from research organizations like DORA or platform-specific benchmarks from tools like LinearB's Engineering Benchmarks Report. For cycle time, elite performers average under 24 hours, while good performers range from 24-80 hours. For developer satisfaction, benchmark both internally (tracking team-by-team variation) and externally (industry averages typically range from 20-60 NPS). When benchmarking, ensure appropriate contextualization – compare your metrics against organizations of similar size, industry, and product complexity. Most importantly, benchmark against yourself over time,