Every engineering leader investing in AI coding tools faces a pivotal question: Can these agents truly improve code quality and developer productivity, or are they just expensive autocomplete with a refactoring feature? The stakes are high, as organizations embed AI deeply into development workflows, with expectations ranging from automated technical debt reduction to wholesale codebase modernization.

New research from Nara Institute of Science and Technology and Queen's University offers a data-driven answer. By analyzing 15,451 refactoring operations performed by leading AI coding agents across real-world open-source projects, the study reveals a nuanced reality: today's agents are highly proficient at routine code maintenance but contribute far less to architectural transformation.

For VPs and Directors of Engineering, these findings provide a strategic blueprint. AI agents can deliver immediate productivity gains by automating tedious cleanup work, but expecting them to function as software architects will lead to disappointment and wasted investment.

AI coding agents see widespread adoption but lack architectural depth

AI coding agents like OpenAI Codex, Claude Code, Cursor, and Devin are no longer experimental curiosities. They are active contributors in production codebases, autonomously planning and executing development tasks with minimal human intervention. Unlike traditional prompt-based tools, where developers guide every step, these agents operate as collaborative teammates: they propose changes, run tests, and submit pull requests.

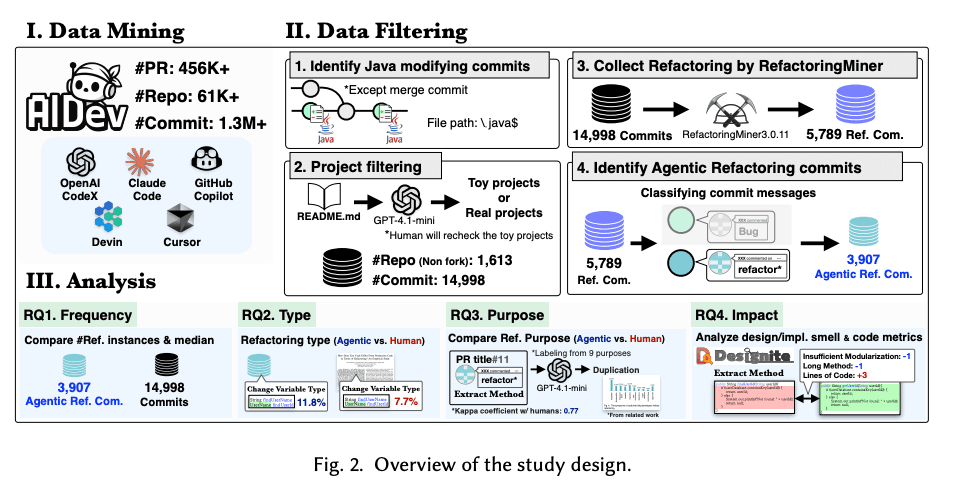

The research examined 12,256 pull requests and 14,998 commits from 1,613 Java repositories to understand how agents participate in refactoring. The scale of adoption is striking, as developers merged 86.9% of agent-generated pull requests, demonstrating substantial acceptance of AI-generated code in real-world projects.

But acceptance does not equal architectural sophistication. The study reveals that 26.1% of agent commits explicitly target refactoring, a significant portion of their activity, yet the nature of these refactorings tells a more constrained story.

AI agents transform code hygiene with routine maintenance

The data shows agents are highly proficient at low-level, consistency-oriented refactoring. 35.8% of agent refactorings are low-level operations, compared to just 24.4% for human developers. These operations include:

- Change Variable Type (11.8%): Updating variable declarations for type consistency

- Rename Parameter (10.4%): Standardizing parameter names across methods

- Rename Variable (8.5%): Improving local variable naming conventions

These three operations alone account for 30.7% of all agent refactoring instances. Agents also perform medium-level refactorings, such as extracting methods or changing parameter types, at rates similar to humans (21.2% for agents vs. 20.7% for humans).
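To make these three operations concrete, here is a minimal before-and-after sketch. The study analyzed Java code; the example is transposed to Python for brevity, and the function and variable names are hypothetical rather than taken from the research.

```python
# Before: terse names, and a list used for membership checks.
def dedupe_before(l):
    s = []
    out = []
    for x in l:
        if x not in s:
            s.append(x)
            out.append(x)
    return out


# After: the same logic with three low-level refactorings applied.
#   Rename Parameter:     l -> items
#   Rename Variable:      s -> seen, out -> unique, x -> item
#   Change Variable Type: seen is now a set instead of a list
#                         (assumes the items are hashable)
def dedupe_after(items):
    seen = set()
    unique = []
    for item in items:
        if item not in seen:
            seen.add(item)
            unique.append(item)
    return unique


# Refactoring should preserve behavior: both versions agree on the same input.
sample = ["a", "b", "a", "c", "b"]
assert dedupe_before(sample) == dedupe_after(sample)
```

Each change is local to one function and leaves its callers untouched, which is what makes this category of work comparatively safe to delegate.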

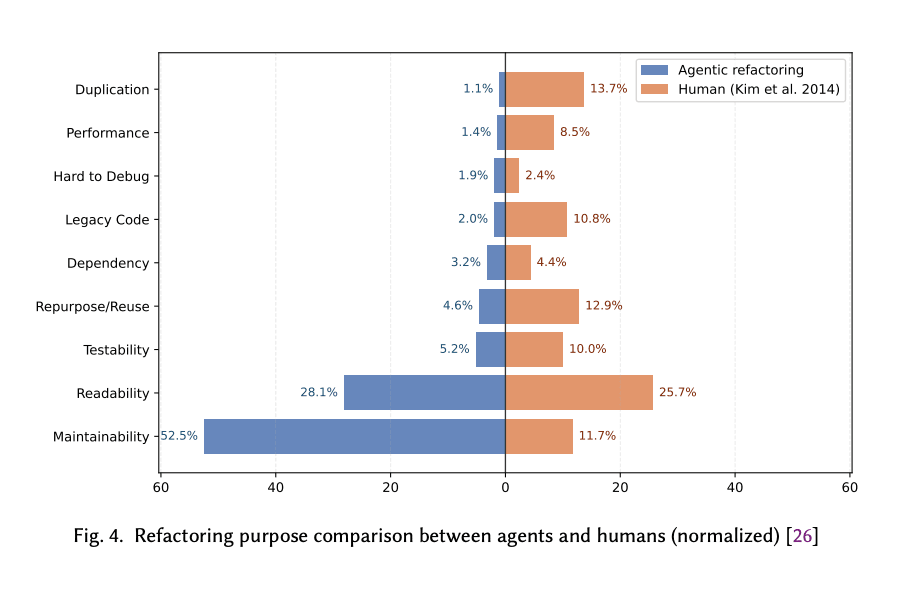

The motivations behind these refactorings are overwhelmingly focused on internal quality. 52.5% of agent refactorings target maintainability, far exceeding the 11.7% rate for human developers. An additional 28.1% focus on readability, closely matching human priorities. Together, these two motivations account for over 80% of all agent refactoring activity.

For engineering leaders, this represents a clear value proposition: agents can systematically handle the unglamorous but essential work of code hygiene, such as renaming for clarity, enforcing type consistency, and maintaining coding standards. These tasks consume developer time but don't require deep domain knowledge or architectural insight.

When agents perform medium-level refactorings with maintainability intent, the quality improvements are measurable. The research found that agents achieve a median reduction of 15.25 lines in Class Lines of Code and a median reduction of 2.07 in Weighted Methods per Class, which are concrete indicators of simplified code structure.
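For readers unfamiliar with these two metrics, the sketch below shows roughly how they can be computed. It assumes one common definition of Weighted Methods per Class (the sum of each method's cyclomatic complexity) and approximates complexity by counting branching nodes; the study's own tooling may weight methods differently. The `Cart` class and the helper names are hypothetical, and Python's standard `ast` module stands in for a Java analyzer.

```python
import ast

# Node types counted as one extra decision point each (a simplification).
_BRANCH_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler, ast.IfExp, ast.BoolOp)


def method_complexity(func: ast.FunctionDef) -> int:
    """Approximate cyclomatic complexity: 1 plus the number of branching nodes."""
    return 1 + sum(isinstance(node, _BRANCH_NODES) for node in ast.walk(func))


def class_metrics(source: str, class_name: str) -> dict:
    """Return class lines of code and WMC for one class in the given source."""
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.ClassDef) and node.name == class_name:
            methods = [n for n in node.body if isinstance(n, ast.FunctionDef)]
            return {
                "loc": node.end_lineno - node.lineno + 1,
                "wmc": sum(method_complexity(m) for m in methods),
            }
    raise ValueError(f"class {class_name!r} not found")


BEFORE = """
class Cart:
    def total(self, items):
        result = 0
        for item in items:
            if item.taxable:
                result += item.price * 1.2
            else:
                result += item.price
        return result
"""

# After a hypothetical Extract Method refactoring.
AFTER = """
class Cart:
    def total(self, items):
        return sum(self.price_with_tax(item) for item in items)

    def price_with_tax(self, item):
        return item.price * 1.2 if item.taxable else item.price
"""

before, after = class_metrics(BEFORE, "Cart"), class_metrics(AFTER, "Cart")
print({name: after[name] - before[name] for name in before})
```

A negative delta means the class became smaller or simpler. Tracking the two numbers separately matters, because a refactoring can shrink a class without lowering its total complexity.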

AI agents still struggle to deliver architectural refactoring

The limitations become apparent when examining high-level, architectural refactorings. Only 43.0% of agent refactorings are high-level operations, compared to 54.9% for human developers. This gap suggests that agents struggle with refactorings that require system-wide understanding or strategic design decisions.

Human developers more often perform API-level changes such as Rename Method (4.4%) and Add Parameter (4.3%), which ripple through codebases and require careful consideration of downstream impacts. Agents, by contrast, prioritize more localized changes like Rename Attribute (6.0%) and Change Method Access Modifier (3.8%).

Even more telling, agents rarely address design-level concerns that experienced developers prioritize. Only 1.1% of agent refactorings target code duplication, and just 4.6% focus on modularity and reuse, compared to 13.7% and 12.9% respectively for human developers.

The impact on code smells is similarly limited. Despite the focus on maintainability, agents show no significant reduction in design or implementation smell counts. The median change in both categories is 0.00, meaning that while agents can improve structural metrics like code size and complexity, they consistently fail to eliminate the deeper quality issues that experienced developers target.

Tangled commits increase review complexity and hidden costs

A critical finding that engineering leaders must consider is that 53.9% of agent refactorings occur in "tangled commits," which are changes bundled with feature work or bug fixes rather than isolated refactoring efforts. This pattern increases code review complexity, as reviewers must validate both the primary task and the incidental refactorings to ensure behavior preservation.

This tangling suggests agents often refactor opportunistically while implementing other changes, rather than executing focused quality improvement initiatives. For teams scaling AI adoption, this creates a hidden review burden that can offset productivity gains if not properly managed.

Engineering leaders must align AI agent use with strategic outcomes

These findings have direct implications for how organizations should deploy AI coding agents:

Delegate routine cleanup strategically. Agents deliver the highest ROI when assigned to low-level maintenance: variable renaming, type consistency enforcement, and code formatting. These tasks consume significant developer time but don't require architectural judgment. By systematically delegating this work to agents, engineering leaders can free senior developers to focus on complex design problems and business logic.

Enforce commit hygiene policies. To mitigate the review burden of tangled commits, require agents to submit refactoring changes separately from features and bug fixes. This discipline, already a best practice for human developers, becomes even more critical when agents contribute at scale. Separate refactoring PRs are faster to review and easier to validate, and they build reviewer trust in AI contributions.
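One way to enforce such a policy is a pre-merge check that flags tangled commits. The sketch below is a minimal illustration, not the study's detection method. It assumes each commit has already been labeled with the kinds of change it contains, for example by a commit-message convention or a classifier, and the `Commit` type and label names are hypothetical.

```python
from dataclasses import dataclass

REFACTORING = "refactoring"


@dataclass(frozen=True)
class Commit:
    sha: str
    # Hypothetical labels, e.g. {"refactoring", "feature", "bugfix"}.
    change_kinds: frozenset


def is_tangled(commit: Commit) -> bool:
    """A commit is tangled if it mixes refactoring with any other kind of change."""
    return REFACTORING in commit.change_kinds and len(commit.change_kinds) > 1


def tangled_commits(commits: list) -> list:
    """Return the SHAs a reviewer (or the agent) should split before merging."""
    return [commit.sha for commit in commits if is_tangled(commit)]


pull_request = [
    Commit("a1", frozenset({"refactoring"})),
    Commit("b2", frozenset({"feature"})),
    Commit("c3", frozenset({"refactoring", "bugfix"})),
]
print(tangled_commits(pull_request))
```

A check like this could run in CI and ask the agent to resubmit the flagged commits as separate changes. Its usefulness depends entirely on how reliable the labels are.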

Reserve architectural work for human expertise. High-level refactorings, such as extracting classes, restructuring inheritance hierarchies, and managing dependencies, require domain knowledge and strategic intent that current agents lack. Delegating these tasks to AI is likely to produce superficial changes that fail to address underlying design issues. Keep experienced engineers in the driver's seat for architectural decisions.

Monitor quality outcomes rigorously. While agents can improve structural metrics, they don't consistently reduce code smells or prevent technical debt accumulation. Engineering leaders should implement regular quality audits that track both immediate metrics (LOC, complexity) and long-term indicators (defect density, maintenance effort) to ensure AI-driven refactoring delivers sustained value rather than cosmetic improvements.
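A quality audit of this kind can start small. The sketch below compares metric snapshots taken before and after each agent refactoring and flags the case where structural metrics improve while smell counts do not. The metric names, the snapshot format, and the sample numbers are all hypothetical and purely illustrative; they are not data from the study.

```python
from statistics import median


def median_deltas(snapshots: list) -> dict:
    """snapshots: list of (before, after) dicts keyed by metric name."""
    metrics = snapshots[0][0].keys()
    return {
        name: median(after[name] - before[name] for before, after in snapshots)
        for name in metrics
    }


def cosmetic_only(
    deltas: dict,
    structural=("class_loc", "wmc"),
    smells=("design_smells", "implementation_smells"),
) -> bool:
    """True when structure improved but no smell category shows a median reduction."""
    structure_improved = any(deltas[name] < 0 for name in structural)
    smells_not_reduced = all(deltas[name] >= 0 for name in smells)
    return structure_improved and smells_not_reduced


# Illustrative, made-up snapshots for three agent refactoring PRs.
snapshots = [
    ({"class_loc": 120, "wmc": 30, "design_smells": 4, "implementation_smells": 6},
     {"class_loc": 100, "wmc": 27, "design_smells": 4, "implementation_smells": 6}),
    ({"class_loc": 200, "wmc": 40, "design_smells": 2, "implementation_smells": 3},
     {"class_loc": 190, "wmc": 38, "design_smells": 2, "implementation_smells": 3}),
    ({"class_loc": 80, "wmc": 12, "design_smells": 1, "implementation_smells": 5},
     {"class_loc": 80, "wmc": 12, "design_smells": 1, "implementation_smells": 4}),
]

deltas = median_deltas(snapshots)
print(deltas, cosmetic_only(deltas))
```

Long-term indicators such as defect density and maintenance effort would need their own data sources; this sketch covers only the immediate before-and-after comparison.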