When we started testing AI code review tools, the market was full of promises: “AI-powered reviews,” “automated insights,” “intelligent feedback.” AI code review is everywhere now, but which tools actually work?

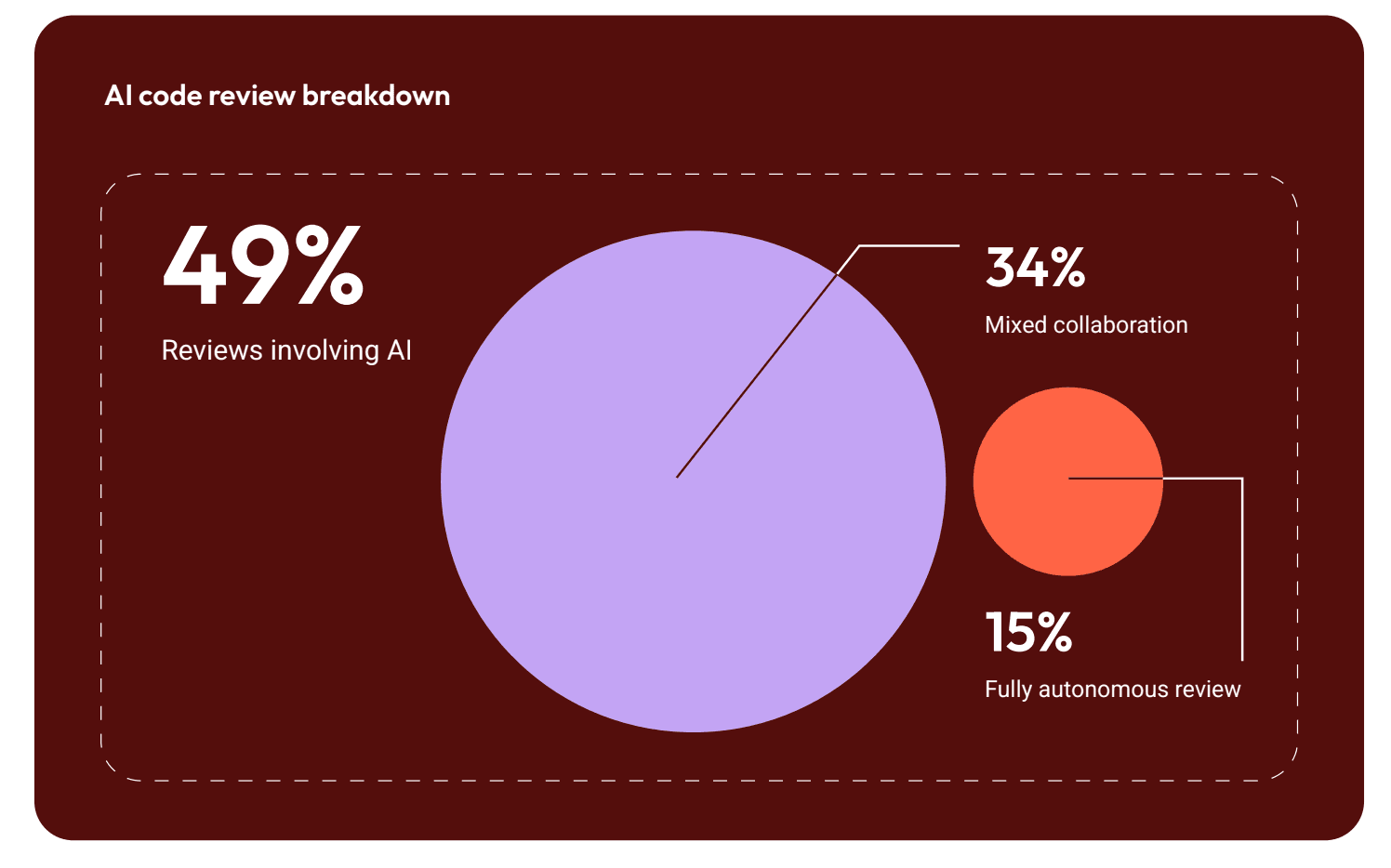

The numbers were striking: 49% of code reviews involved AI in some form, yet no one could say how these systems performed under the same conditions. Even we couldn’t. So we built something that didn’t exist yet: a controlled evaluation framework for AI code review tools.

What we observed

Patterns emerged quickly. Some reviewers flooded pull requests with redundant suggestions that were technically accurate but practically useless. Others went off track the moment the diff grew complex. A few kept repeating the same outdated advice even after the issue was fixed, like a broken record.

Across all 16 bug types we identified, LinearB produced the best signal-to-noise ratio, meaning the highest share of valid findings among all the comments it posted. And because our platform attaches metadata to each recommendation, it can re-evaluate its own opinion after a code change. This statefulness is the difference between an AI that reviews code and one that collaborates.
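The stateful behavior described above can be sketched roughly as follows. This is a minimal illustration under our own assumptions, not how our platform implements it. `ReviewComment`, `fingerprint`, and `reevaluate` are hypothetical names. Each comment is assumed to flag a single line, and a comment is withdrawn when the exact line it flagged no longer appears in the file at the new commit.

```python
import hashlib
from dataclasses import dataclass


def fingerprint(line: str) -> str:
    """Hash a flagged line (ignoring surrounding whitespace) so later commits can be compared."""
    return hashlib.sha256(line.strip().encode("utf-8")).hexdigest()


@dataclass
class ReviewComment:
    # Hypothetical metadata a stateful reviewer might store with each comment.
    comment_id: int
    path: str
    line_hash: str
    message: str
    status: str = "open"  # "open" or "withdrawn"


def reevaluate(comments: list[ReviewComment], files: dict[str, str]) -> list[ReviewComment]:
    """Re-check open comments against file contents at the newest commit.

    A comment stays open only if the line it flagged still exists in its file;
    otherwise it is withdrawn rather than repeated on the new commit.
    """
    for comment in comments:
        if comment.status != "open":
            continue
        source = files.get(comment.path, "")
        current = {fingerprint(line) for line in source.splitlines() if line.strip()}
        if comment.line_hash not in current:
            comment.status = "withdrawn"
    return comments


if __name__ == "__main__":
    # Two comments raised on the first commit of a PR (file contents are illustrative).
    c1 = ReviewComment(1, "main.go", fingerprint("unused := 42"), "unused variable")
    c2 = ReviewComment(2, "main.go", fingerprint("x := compute()"), "error not checked")
    # The next commit deletes the unused variable but leaves the other line alone.
    reevaluate([c1, c2], {"main.go": "x := compute()\nuse(x)\n"})
    print(c1.status, c2.status)  # withdrawn open
```

Withdrawing a comment whenever its flagged line disappears is deliberately crude. A production reviewer would more likely re-run the original check against the changed code before deciding the issue is actually resolved.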

To understand why this behavior matters, we turned those observations into a structured benchmark. The evaluation covered 16 bugs across two phases, from simple defects like unused variables to complex issues such as Go channel deadlocks and interface-nil semantics. Each system was tested not just on what it caught, but on how it communicated and evolved through a PR’s lifecycle.

How tools stacked up

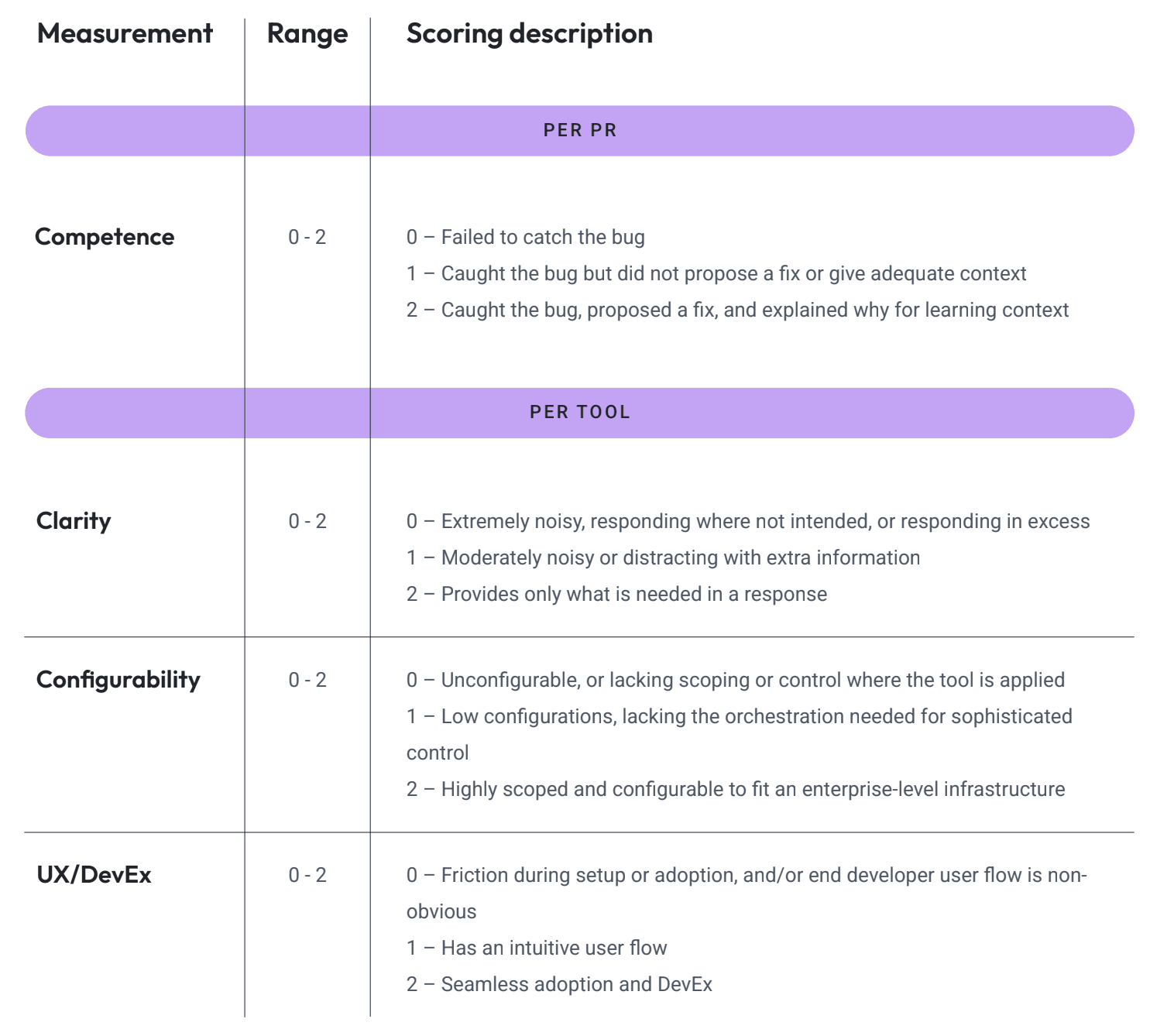

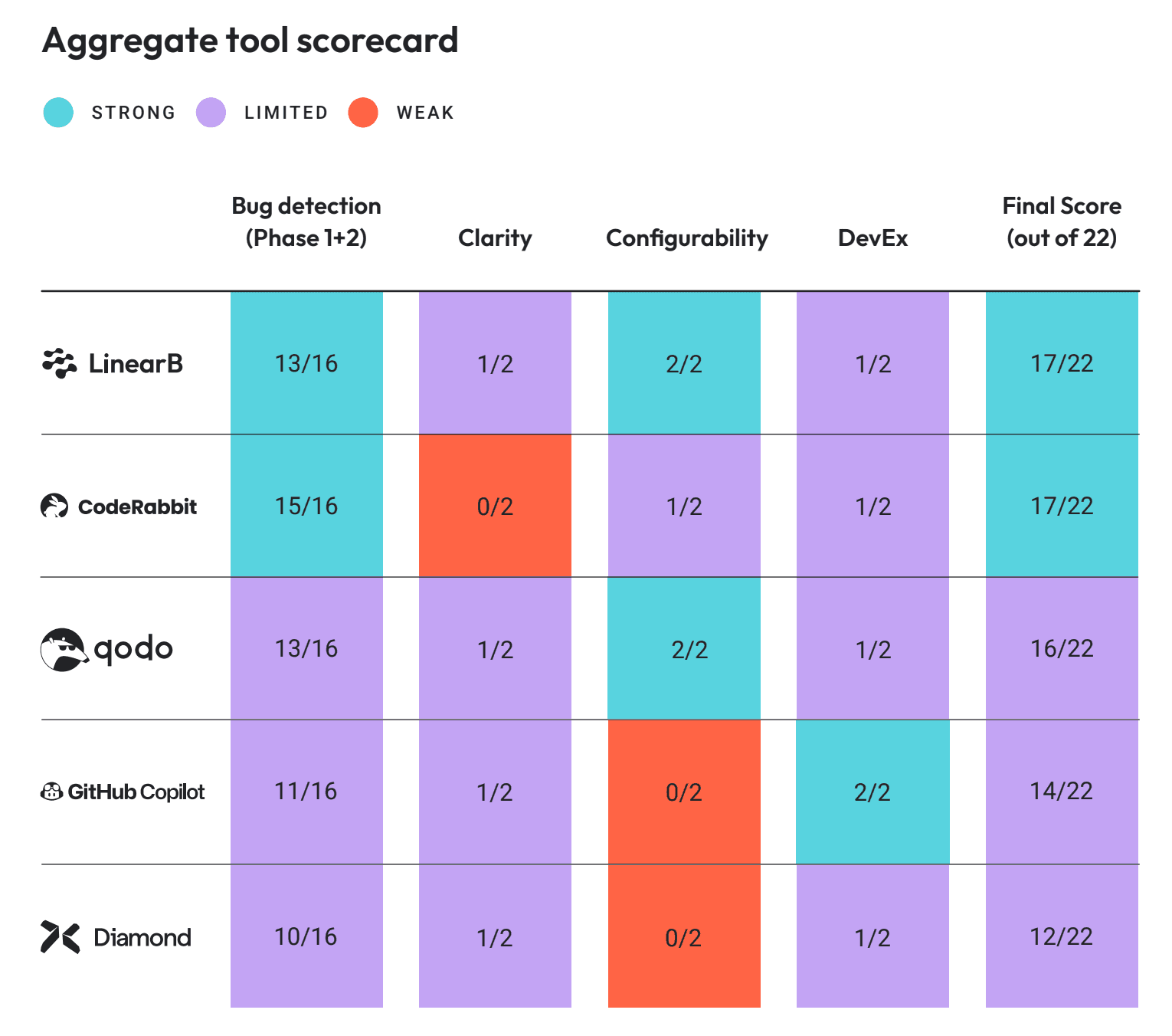

In evaluating each tool across 16 bugs and multiple dimensions of DevEx, we identified meaningful patterns in how these tools behave under real-world PR workflows. Each tool was scored on its competency in finding issues, the clarity of its feedback, the configurability of its behavior and rules, and the overall developer experience i.e. how naturally it fits into everyday review workflows. Together, these categories capture both the technical accuracy of the AI and the practical usability that determines whether teams actually adopt it:

With those dimensions in mind, the next chart brings everything together into an aggregate view that combines every scoring category. It shows how each AI reviewer performs when clarity, configurability, and DevEx are weighted equally against the sum competency:

The results revealed clear strengths and trade-offs between tools:

- LinearB stood out for its statefulness (the ability to revise or withdraw outdated comments) and for maintaining the best signal-to-noise ratio across scenarios.

- CodeRabbit caught the most total issues but generated heavy noise, often flagging the same pattern repeatedly without context.

- Qodo offered broad coverage and strong explanations yet struggled to adapt when code evolved between commits.

- GitHub Copilot delivered consistently relevant suggestions but worked with shallow context, often missing issues that required reasoning across multiple files.

- Graphite Diamond performed weakest overall, showing limited detection and minimal contextual awareness.

These contrasts show that “accuracy” alone doesn’t guarantee a better developer experience: the best reviewer says more with less. Three dimensions drove those scores:

- Statefulness: how well the tool tracks context across commits and withdraws outdated advice.

- Noise ratio: the proportion of valid findings among all comments, a proxy for how much “signal” a reviewer adds to a PR.

- Time-to-useful-signal: the average time from PR open to the first correct, actionable comment.
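The two quantitative metrics defined in the list above can be computed in a few lines. This is a minimal sketch that assumes each comment has already been graded as valid (correct and actionable) or not and carries a timestamp. The `Comment` record and the helper names are hypothetical, not the benchmark’s actual schema, and the timestamps in the usage example are illustrative.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean
from typing import Optional


@dataclass
class Comment:
    # Hypothetical record: "valid" means a grader judged the comment correct and actionable.
    posted_at: datetime
    valid: bool


def noise_ratio(comments: list[Comment]) -> float:
    """Share of comments that are valid findings (higher means more signal, less noise)."""
    if not comments:
        return 0.0
    return sum(c.valid for c in comments) / len(comments)


def time_to_useful_signal(opened_at: datetime, comments: list[Comment]) -> Optional[timedelta]:
    """Time from PR open to the first valid comment, or None if no comment was valid."""
    valid_times = [c.posted_at for c in comments if c.valid]
    return min(valid_times) - opened_at if valid_times else None


def mean_time_to_useful_signal(prs: list[tuple[datetime, list[Comment]]]) -> Optional[timedelta]:
    """Average time-to-useful-signal over PRs that received at least one valid comment."""
    deltas = []
    for opened_at, comments in prs:
        delta = time_to_useful_signal(opened_at, comments)
        if delta is not None:
            deltas.append(delta.total_seconds())
    return timedelta(seconds=mean(deltas)) if deltas else None


if __name__ == "__main__":
    opened = datetime(2025, 1, 1, 9, 0)
    comments = [
        Comment(opened + timedelta(minutes=2), valid=False),
        Comment(opened + timedelta(minutes=5), valid=True),
        Comment(opened + timedelta(minutes=9), valid=True),
        Comment(opened + timedelta(minutes=12), valid=False),
    ]
    print(noise_ratio(comments))  # 0.5
    print(time_to_useful_signal(opened, comments))  # 0:05:00
```

This sketch excludes PRs with no valid comment from the average. A stricter harness might instead penalize them, since a reviewer that never says anything useful should not look fast.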

Tools that performed best on these axes produced leaner, more human-like reviews that improved with each commit instead of starting over.

What engineering leaders should look for in an AI code review tool

The results of this benchmark highlight a broader truth: choosing the right AI reviewer is about improving the flow of work. Accuracy matters, but the tools that drive measurable impact share a different set of strengths. Here’s what stood out as the most useful lessons for buyers:

- Contextual precision over raw detection. A good AI reviewer doesn’t just identify problems; it understands the intent of the code. Tools that chased quantity often produced redundant or irrelevant comments, eroding trust with developers. LinearB’s system, for instance, prioritized signal-to-noise.

- Statefulness across commits. Real-world pull requests evolve. Reviewers that treat every commit as a clean slate force engineers to re-litigate resolved issues. Statefulness shortens review cycles and builds confidence that the AI is actually “following along” instead of starting over.

- Configurability as a control surface. Different teams have different tolerances for verbosity, tone, and enforcement. Tools that let teams tune these behaviors through rules, YAML files, or slash commands created a noticeably smoother developer experience.

- Developer experience as the deciding factor. Beyond metrics, usability determined adoption. Reviewers that integrated seamlessly with existing GitHub and GitLab workflows, formatted comments cleanly, and respected team conventions were consistently rated higher. No matter how capable the model, friction in UX or comment style quickly erodes trust.
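Configurability as a control surface can be pictured as a small per-repo policy applied to the AI’s findings before anything is posted. The sketch below is illustrative only. It assumes the team’s YAML rules have already been parsed into a plain dictionary. The keys `min_severity`, `ignore_paths`, and `max_comments`, the severity levels, and the `Finding`/`apply_policy` names are hypothetical, not any vendor’s schema.

```python
from dataclasses import dataclass
from fnmatch import fnmatch

# Hypothetical severity scale, lowest to highest.
SEVERITY_ORDER = {"nit": 0, "minor": 1, "major": 2, "critical": 3}


@dataclass
class Finding:
    path: str
    severity: str
    message: str


def apply_policy(findings: list[Finding], policy: dict) -> list[Finding]:
    """Filter and cap findings using a team policy (assumed to come from a parsed YAML file)."""
    floor = SEVERITY_ORDER[policy.get("min_severity", "nit")]
    ignored = policy.get("ignore_paths", [])
    kept = [
        f for f in findings
        if SEVERITY_ORDER[f.severity] >= floor
        and not any(fnmatch(f.path, pattern) for pattern in ignored)
    ]
    # Most severe first (the sort is stable, so ties keep their original order),
    # then cap the total number of comments to limit noise.
    kept.sort(key=lambda f: SEVERITY_ORDER[f.severity], reverse=True)
    return kept[: policy.get("max_comments", len(kept))]


if __name__ == "__main__":
    # Parsed from a hypothetical per-repo YAML file.
    policy = {"min_severity": "minor", "ignore_paths": ["vendor/*"], "max_comments": 2}
    findings = [
        Finding("api/handler.go", "nit", "rename variable"),
        Finding("vendor/lib/x.go", "major", "unchecked error"),
        Finding("core/chan.go", "major", "possible channel deadlock"),
        Finding("api/handler.go", "critical", "typed nil returned as error interface"),
        Finding("core/util.go", "minor", "unused variable"),
    ]
    for f in apply_policy(findings, policy):
        print(f.severity, f.path, f.message)
```

Capping the comment count trades recall for signal. Whether a team wants that trade is exactly the kind of choice this sort of configuration leaves to them.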

For leaders evaluating AI code review tools, these dimensions should carry as much weight as detection accuracy. The right tool will fit naturally into your process and reinforce good engineering habits across the entire team.

The lessons that changed the way we build

We originally built the experiment to measure progress, but the resulting framework ended up reshaping how we engineer our own AI systems. Here’s what we learned and implemented:

- Configurability matters as much as accuracy. Teams differ in how much “useful noise” they want. We made review rules customizable in simple YAML so every repo can calibrate its own signal level.

- State tracking should be native, not an add-on. The same logic that lets LinearB withdraw outdated comments now powers a continuous re-evaluation loop. The reviewer checks code and checks itself.

- Evaluation is a feature, not a project. The benchmark harness we built for this study can now run inside our own build pipelines. It’s our way of ensuring each model iteration gets better, or at least stays consistently good.
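Treating evaluation as a feature could look something like the regression gate below, run by a build pipeline after the benchmark harness has scored a candidate model. This is a sketch under stated assumptions, not our actual pipeline. The metric names, the 0.02 tolerance, and the `regressions` helper are hypothetical. Every metric is assumed to be normalized so that higher is better.

```python
# Hypothetical tolerance: how far a metric may drop before the build fails.
TOLERANCE = 0.02


def regressions(baseline: dict[str, float], candidate: dict[str, float],
                tolerance: float = TOLERANCE) -> list[str]:
    """Return metrics where the candidate falls more than `tolerance` below the baseline.

    Assumes every metric is scored so that higher is better; a metric missing
    from the candidate's results also counts as a regression.
    """
    failed = []
    for metric, base in baseline.items():
        score = candidate.get(metric)
        if score is None or score < base - tolerance:
            failed.append(metric)
    return failed


if __name__ == "__main__":
    # Made-up scores for illustration only; these are not benchmark results.
    baseline = {"bugs_caught": 0.81, "signal_to_noise": 0.64, "withdrawal_accuracy": 0.90}
    candidate = {"bugs_caught": 0.83, "signal_to_noise": 0.60, "withdrawal_accuracy": 0.91}
    print("regressed:", regressions(baseline, candidate))  # regressed: ['signal_to_noise']
```

In a CI job, the step would exit with a non-zero status whenever this list is non-empty. A model iteration that regresses on any tracked metric then cannot ship until someone looks at why.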

What began as an external comparison project became an internal discipline loop for evaluating and improving our AI product.

Why benchmarks and evals matter for agentic systems

Benchmarks are uncomfortable by design. They turn opinion into evidence. They also move the industry forward. When every vendor claims “AI-powered reviews,” the only responsible response is to measure.

Our benchmark showed that AI code review comes down to signal-to-noise. The best systems know when not to speak. They remember what’s been fixed. They adapt to context, not just syntax.

That’s why we’re sharing the full methodology, bug list, and data in our latest whitepaper. If you’re evaluating tools (or even building your own), you can use the same framework we did. Inside, you’ll find all 16 test cases, scoring criteria, and comparative results.

👉 Download the 2025 AI Code Review Buyer’s Guide