Slack alerts are necessary for dev team success. Which ones should your team be using?

As an engineering team lead at a software company, it’s my responsibility to fend off distractions and keep my developers focused on the goals of the sprint. Too much noise from the business or from our tools interrupts our workflows and pulls the team away from its priorities. Slack alerts are one of those distractions that can become noisy very quickly. Whether they come from Jira, GitHub, Docker, or LinearB, I am constantly evaluating which Slack alerts are actually useful to my team. Here is what I’ve learned.

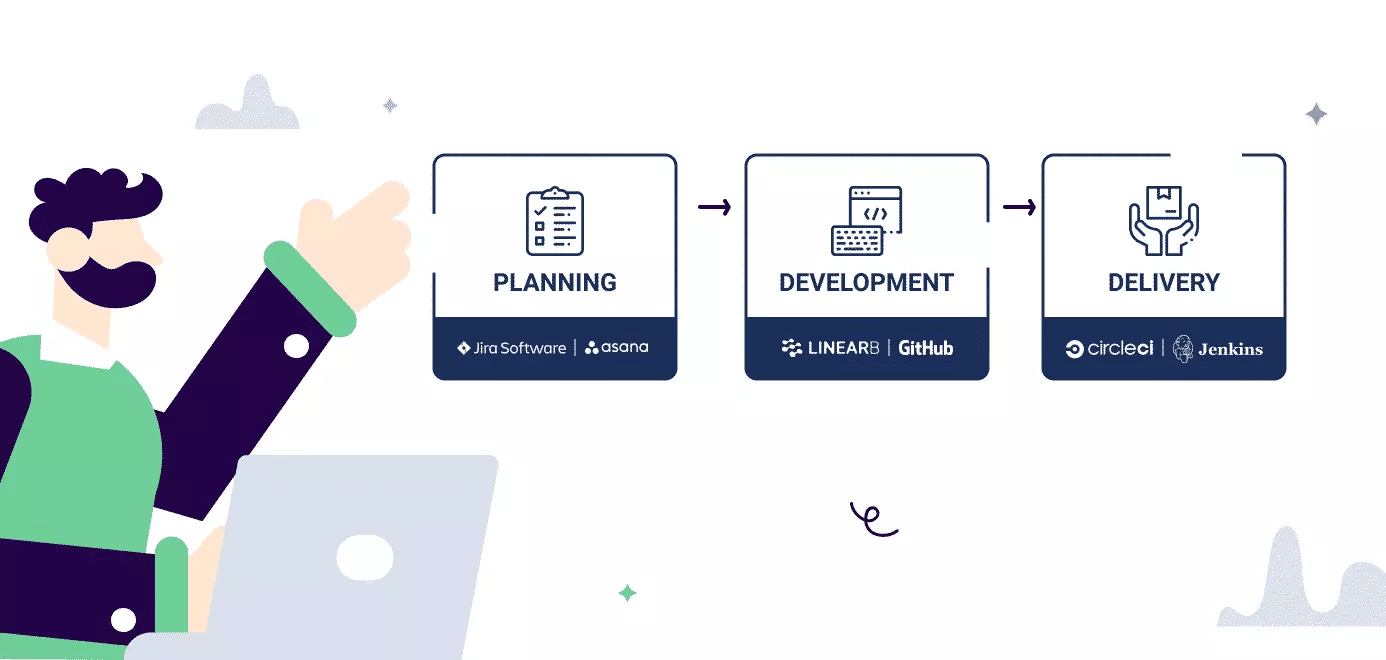

The most common Slack alerts for software developers are continuous integration and continuous deployment (CI/CD) alerts. Automation servers like Jenkins and CircleCI post in-chat alerts that let dev teams know the outcome of their build, test, and deployment workflows. Deployment alerts have proven so valuable at the team level that they are now a minimum requirement for any CI/CD tool hoping to compete today. Is it possible to replicate the success of deployment alerts in the development phase?

Three Levels of Software Alerts

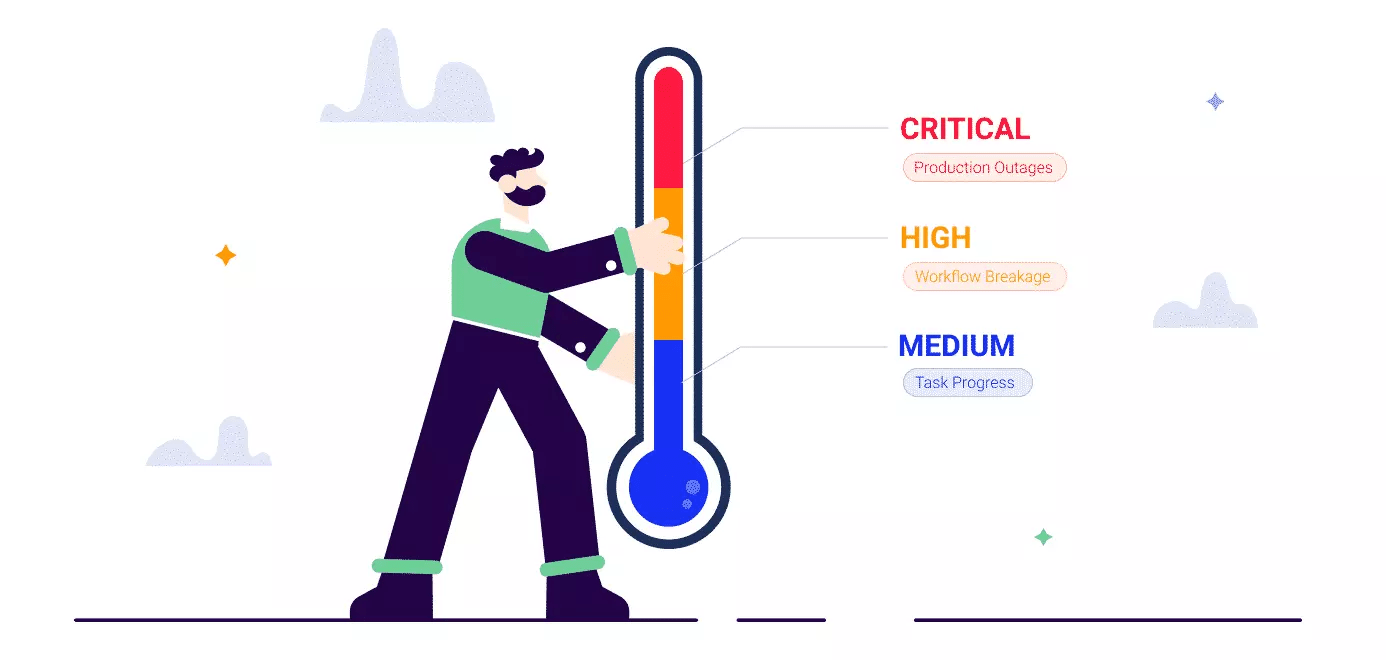

At LinearB we categorize automated software alerts into three distinct levels.

Critical – Production outage alerts. Companies use tools like PagerDuty to notify their dev leads when there is a production outage or service degradation.

High – Workflow breakage alerts. These are the deployment alerts sent by your CI/CD tools, letting your team know whether a deployment succeeded or failed.

Medium – Task progress alerts. These are team alerts based on Jira subtask progress or Git operations. Teams often turn them off because of the sheer amount of noise they produce.

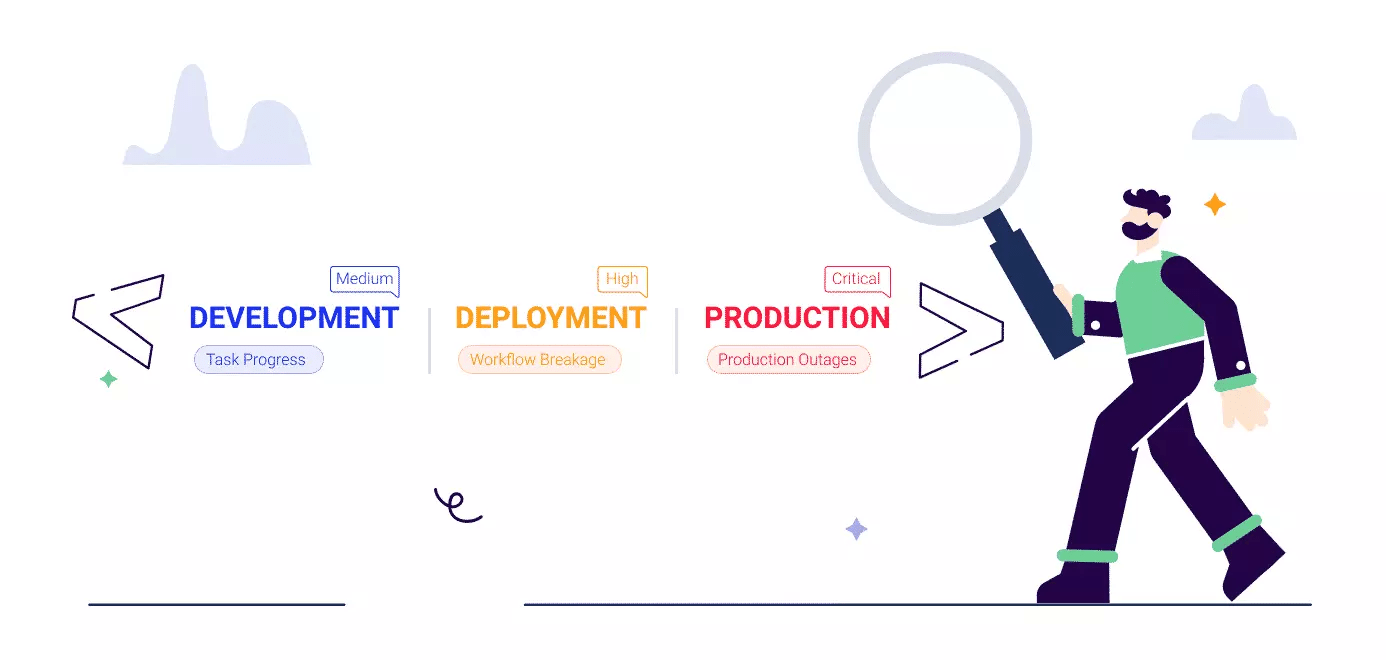

We can also map these levels to the stages they cover: Production – Deployment – Development.

I believe most people agree that production and deployment level alerts are important to the success of engineering teams. But for many companies, the jury is still out on the importance and effectiveness of development process level alerts.

The Sound of a Thousand Branches

Production and deployment level alerts are binary by nature. Production is running or it’s broken. The test passed or it failed. The merge completed or it didn’t.

As we go deeper into the development level, binary alerts become less effective because of their volume and questionable necessity. How many Jira stories get progressed in a week? How many pull requests are issued? For many teams, the volume would be enormous and would bury the alerts that actually matter. Besides, team members generally don’t need to know every time a pull request is issued.

Noise is an efficiency killer. Any team that has turned on out-of-the-box Slack alerts without filters will tell you how unproductive it is to have hundreds of notifications flooding a channel.

To cut through the noise, dev teams carefully curate which alerts come through so that only the most important information is reported. Build start, test status, and merge success alerts should all sound familiar. If you’re just starting out, deployment alerts like these are a good place to begin.

Development Process Level Slack Alerts

How do you identify the 10 development-level alerts that matter when there are 500 per week? How do you find a needle in a haystack? Parameters.

Your first question should be: what does the needle look like? What exactly are we looking for among these hundreds of development-level alerts? At LinearB, we developed parameters that allow us to identify at-risk and stuck work.

Identifying stuck and at-risk work during development places these alerts somewhere between high and critical on the importance scale. Stuck work can easily delay other dependent branches or cause them to be deployed out of order, resulting in workflow issues. Work-at-risk Slack alerts provide early identification of code that has the potential to break production.

Risk

Identifying risk at the development process level can be incredibly useful. Whether it’s a massive PR merge or a PR with a large amount of refactoring, it helps to notify the team to keep an eye out when high-risk actions take place.

Top Slack alerts for identifying work at risk include:

- Substantial PR merged without review

- Substantial branch with high rework or refactor rate

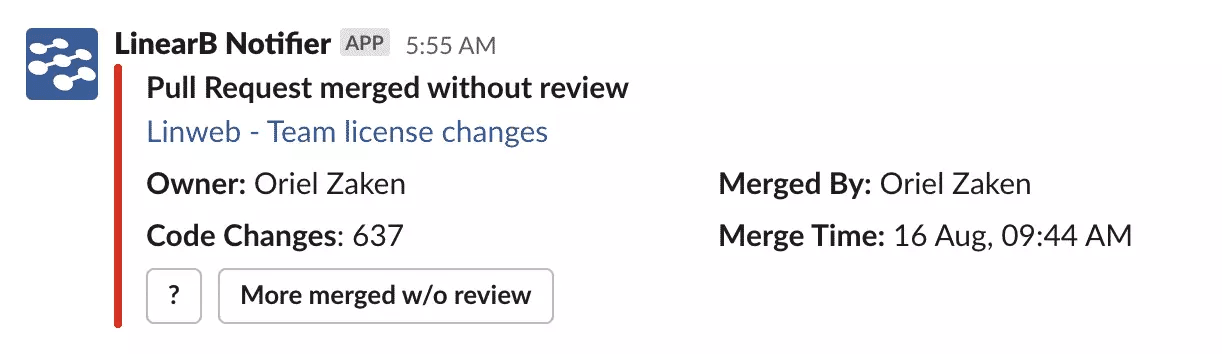

LinearB Slack Alert, Pull Request merged without review

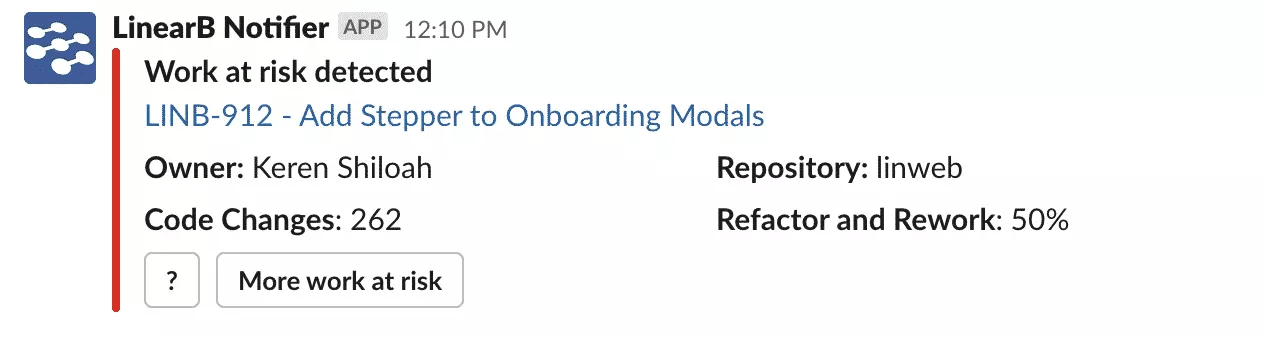

LinearB Work-at-risk Slack Alert

These preemptive notifications allow engineers to open a discussion about the work before it becomes a problem. Earlier discovery almost always saves time during the iteration. Automated Slack alerts for high-risk actions also improve the way your team works. Calling out an action like a pull request merged without review draws attention to a harmful habit, and once a habit is identified, it can be improved.
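
To make the idea of "parameters" concrete, here is a minimal sketch of how the two work-at-risk alerts above could be expressed as filters over pull request data. Everything in it is hypothetical and chosen for illustration: the `PullRequest` fields, the `risk_reasons` helper, the 500-changed-lines cutoff for "substantial", and the 50% rework ratio. It is not LinearB's actual implementation or thresholds.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical thresholds, chosen only for illustration.
SUBSTANTIAL_LINES = 500   # lines changed before a PR counts as "substantial"
HIGH_REWORK_RATIO = 0.5   # share of changed lines that rewrite recently written code


@dataclass
class PullRequest:
    title: str
    lines_changed: int
    reworked_lines: int   # hypothetical metric: changed lines touching recent code
    merged: bool
    approvals: int


def risk_reasons(pr: PullRequest) -> List[str]:
    """Return the reasons a PR should trigger a work-at-risk alert (empty if none)."""
    reasons: List[str] = []
    if pr.lines_changed < SUBSTANTIAL_LINES:
        return reasons  # small changes never alert, which keeps the noise down
    if pr.merged and pr.approvals == 0:
        reasons.append("substantial PR merged without review")
    if pr.reworked_lines / pr.lines_changed >= HIGH_REWORK_RATIO:
        reasons.append("substantial branch with high rework rate")
    return reasons


merged_prs = [
    PullRequest("Fix typo", 3, 0, merged=True, approvals=0),
    PullRequest("New billing flow", 1200, 100, merged=True, approvals=0),
    PullRequest("Rewrite auth module", 800, 600, merged=False, approvals=1),
]

for pr in merged_prs:
    for reason in risk_reasons(pr):
        print(f"{pr.title}: {reason}")
```

The early return for small PRs acts as the noise filter here: routine changes never reach the alert checks at all, so only the "needles" produce a message.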

Stuck Work

Notifying the team about work that has stagnated in your pipeline increases team efficiency and helps give a voice to shy or distributed developers.

Top development-level Slack alerts for stuck work include:

- PR is waiting for review (Review Request Pending)

- High-interaction review

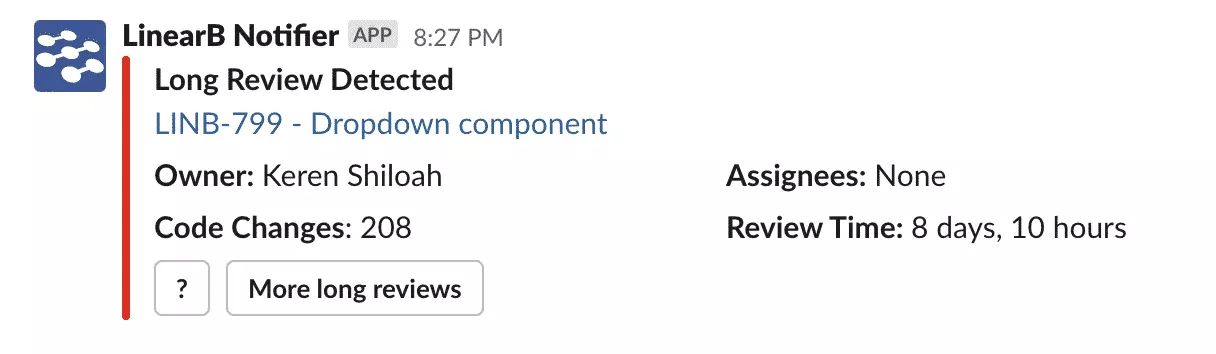

- Review Not Merged (Long Review Detected)

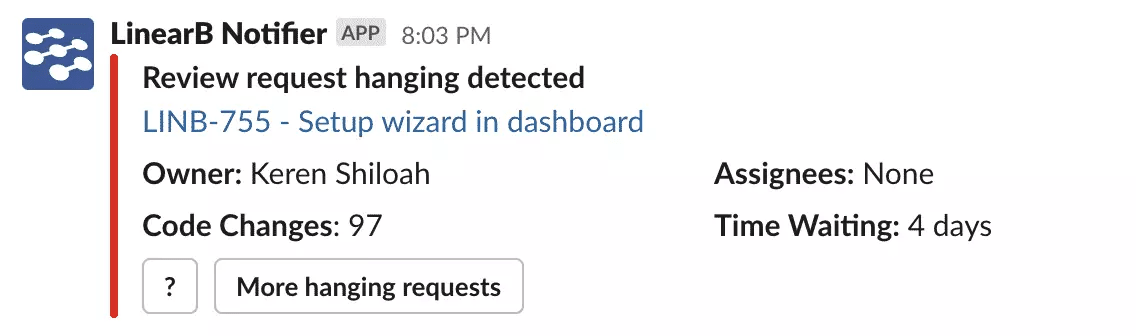

LinearB Slack Alert, Review request hanging

LinearB Slack Alert, Long Review
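
The three stuck-work alerts listed above can be sketched the same way, as time and comment-count thresholds applied to open pull requests. As before, the `OpenPullRequest` fields, the `stuck_reasons` helper, and the thresholds (24 hours waiting for a reviewer, 20 review comments, 5 days in review) are hypothetical values for illustration only, not LinearB's parameters.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional

# Hypothetical thresholds for illustration only.
REVIEW_PENDING_AFTER = timedelta(hours=24)   # no reviewer has picked the PR up
HIGH_INTERACTION_COMMENTS = 20               # unusually long back-and-forth
LONG_REVIEW_AFTER = timedelta(days=5)        # review started but PR still not merged


@dataclass
class OpenPullRequest:
    title: str
    opened_at: datetime
    review_started_at: Optional[datetime]  # None until a reviewer picks it up
    review_comments: int


def stuck_reasons(pr: OpenPullRequest, now: datetime) -> List[str]:
    """Return the stuck-work alerts an open, unmerged PR should trigger."""
    reasons: List[str] = []
    if pr.review_started_at is None:
        # Review Request Pending: nobody has started reviewing yet.
        if now - pr.opened_at >= REVIEW_PENDING_AFTER:
            reasons.append("review request pending")
        return reasons
    if pr.review_comments >= HIGH_INTERACTION_COMMENTS:
        reasons.append("high-interaction review")
    if now - pr.review_started_at >= LONG_REVIEW_AFTER:
        reasons.append("long review detected (not merged)")
    return reasons


now = datetime(2021, 3, 10, 12, 0)
open_prs = [
    OpenPullRequest("Add caching layer", now - timedelta(hours=30), None, 0),
    OpenPullRequest("Refactor payments", now - timedelta(days=8),
                    now - timedelta(days=6), 25),
    OpenPullRequest("Update docs", now - timedelta(hours=2), None, 0),
]

for pr in open_prs:
    for reason in stuck_reasons(pr, now):
        print(f"{pr.title}: {reason}")
```

In practice you would also want to alert only once per PR per condition, so the same stuck review does not repeat in the channel every hour; that deduplication is left out to keep the sketch short.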

Back when we worked in an office, if someone issued a PR and no one picked it up for review within the week, they had to bring it up during synchronous moments like stand-up or lunch, or by interrupting someone’s work. Now that everyone is distributed, it can be even harder to identify stuck work in our development process, or to know when I can reach out without interrupting anyone. Slack alerts are an easy, asynchronous way to flag that something needs attention without anyone having to reach out personally or wait until the next stand-up, which could be 20 hours away.

Two other major benefits of asynchronous stuck-work Slack alerts are balancing the team’s workload and driving discussion. Asking someone to drop what they are doing to follow up on your request is bad form. An alert, however, can be read when someone takes a break and picked up by whoever has time.

These alerts also create an opportunity for a focused Slack or Zoom discussion that might not otherwise have happened. Giving developers the chance to provide context around an alert is an easy way to make sure your devs are heard, and to learn how you can best support your teammates.

The Future of Software Development Slack Alerts

At LinearB, we’ve worked hard to provide development teams with all of the Slack alerts mentioned above. Our risk and stuck-work alerts have changed the way my team works, and I hope they will help yours as well. With that in mind, we are focused on developing even smarter alerts, and not just in Slack. Coming soon from the LinearB development team: custom alerts based on specific stories, repositories, or branches, as well as alerts that help teams tackle work in the right order to keep the iteration on schedule.

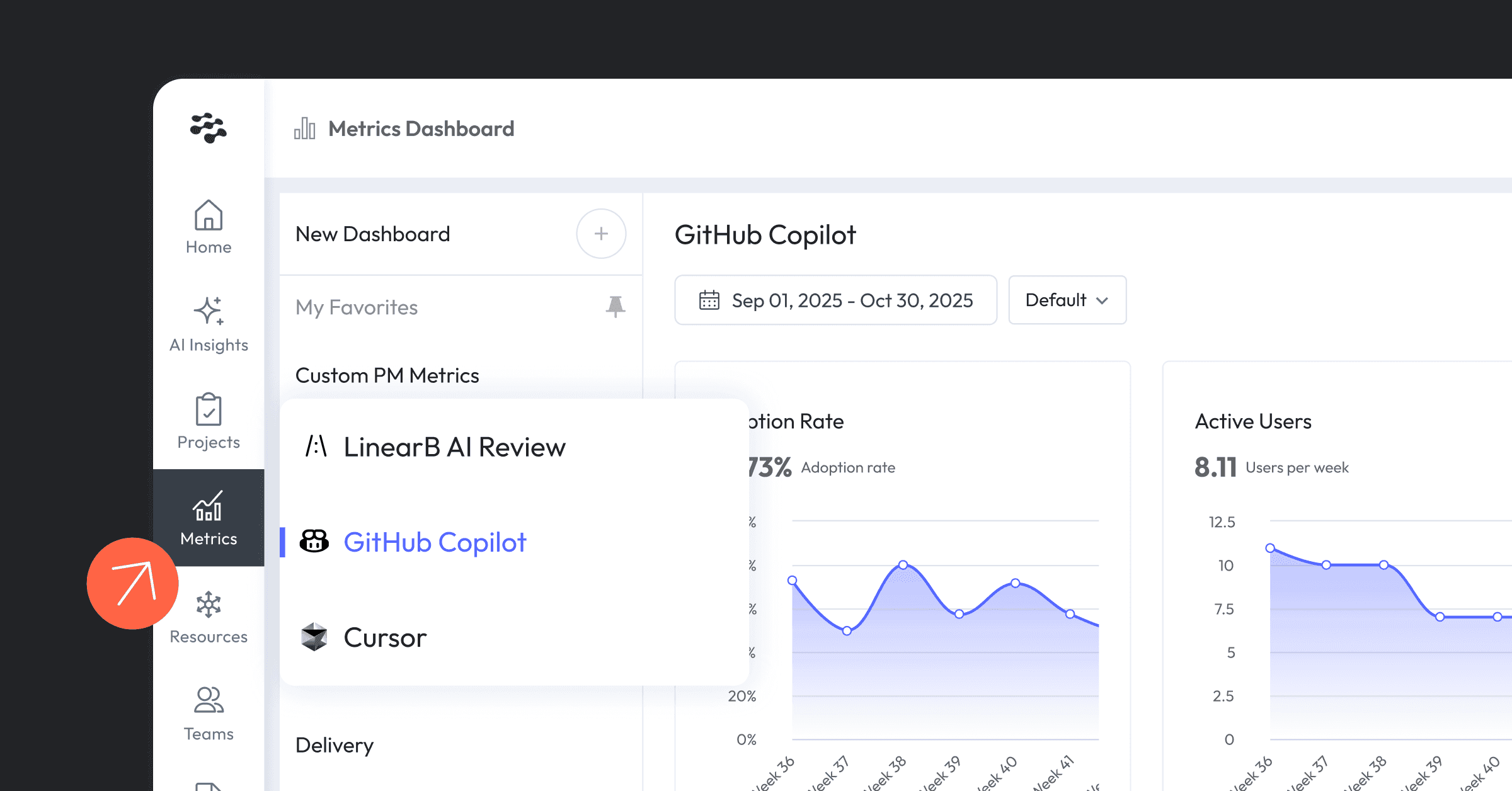

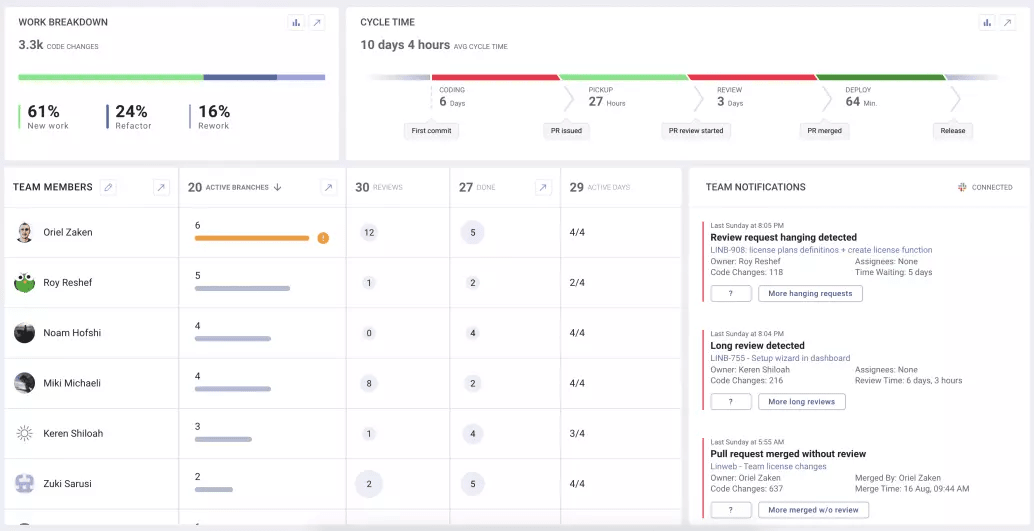

LinearB High-level View

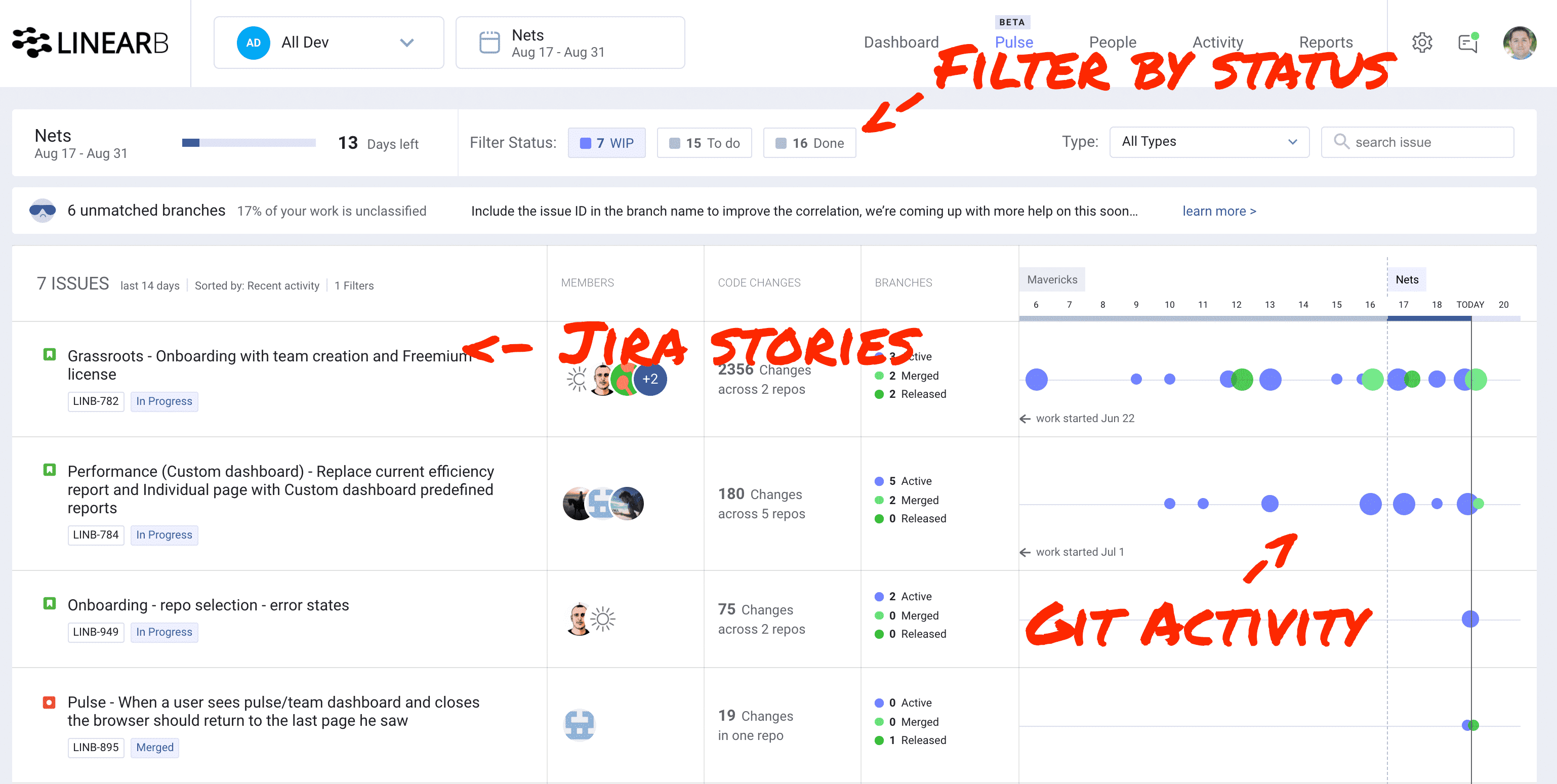

If Slack isn’t your thing, the LinearB dashboard view shows team notifications in the bottom right corner to help teams focus during scrum ceremonies. Along the same lines, if your team doesn’t have a Slack alert culture, LinearB also offers the team Pulse view, which correlates actions from Jira and Git into a single view across the whole team.

LinearB Pulse View

If you want to start playing with development-level Slack alerts or see what your team looks like in Pulse, we invite you to try the LinearB teams dashboard for free.