Driving continuous improvement and delivering more value starts with measuring team performance. Basic metrics like lines of code fail to provide meaningful insights into team effectiveness, and the most successful engineering organizations focus on key performance indicators (KPIs) that directly correlate with business outcomes and engineering excellence.

Don’t know where to start with software development KPIs? We've identified 15 essential KPIs that every engineering leader should track. These measures are categorized into Developer Experience (DevEx) and Developer Productivity (DevProd) dimensions, providing a balanced view of your engineering organization's health and performance.

This expanded guide will help you understand:

- What metrics to set goals against.

- How to interpret metrics to evaluate current performance.

- How to leverage KPIs to drive sustained improvement.

What Are Software Development KPIs?

Software development KPIs (Key Performance Indicators) are quantifiable measurements used to evaluate the success and efficiency of your development processes. KPIs are directly tied to strategic objectives and provide actionable insights into team performance.

Effective software development KPIs share several important characteristics:

- Measurable: They can be quantified with numerical values that allow for objective assessment

- Clear: Every team member understands what the KPI is measuring and why it matters

- Key: They significantly impact project success and align with business objectives

- Actionable: They provide insights that can drive specific improvements

- Balanced: They measure both short-term output and long-term value creation

Why You Should Measure Software Development KPIs

KPIs help you identify bottlenecks, optimize processes, and make data-driven decisions that improve developer experience and productivity. Engineering leaders who leverage KPIs gain significant advantages over those who rely on subjective assessments or anecdotal evidence. These advantages include:

- Objective Performance Assessment: KPIs provide an unbiased view of team performance.

- Bottleneck Identification: Pinpoint exactly where your development process is slowing down, whether it's in code review, deployment, or another phase.

- Data-Driven Decision Making: Base decisions on concrete data rather than gut feelings for more effective process improvements.

- Team Alignment: Clear metrics help align your team around common goals and create shared understanding of what success looks like.

- Continuous Improvement: Regular KPI tracking establishes a feedback loop that drives ongoing optimization of your development processes.

Limitations of Software Development KPIs

- Context Dependency: Performance that's "good" for one team might be "poor" for another, depending on team size, project complexity, and organizational goals. Without industry benchmarks, it's difficult to determine if your metrics indicate strong or weak performance.

- Balance Requirement: Focusing too heavily on one metric can lead to unintended consequences.

- Cultural Considerations: KPIs should support a healthy engineering culture, not create unhealthy competition or pressure.

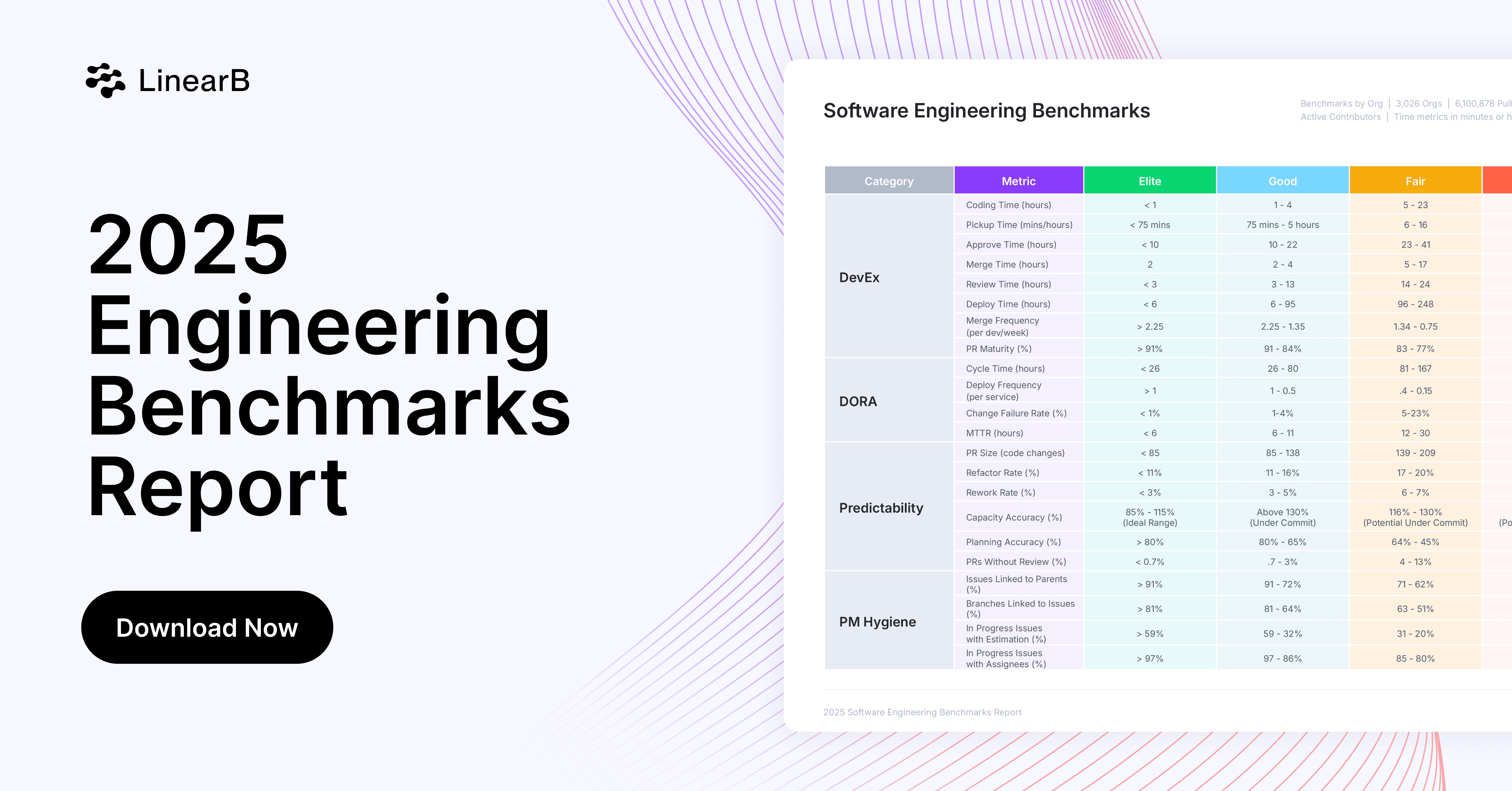

To derive meaningful insights from KPIs, you need benchmarks that contextualize your metrics and help you understand whether your performance is excellent, adequate, or needs improvement. We analyzed almost 3,000 dev teams and 6.1 million PRs to establish benchmarks for key engineering metrics. These benchmarks provide important context for interpreting your KPIs and setting realistic improvement goals.

15 Essential Software Development KPIs

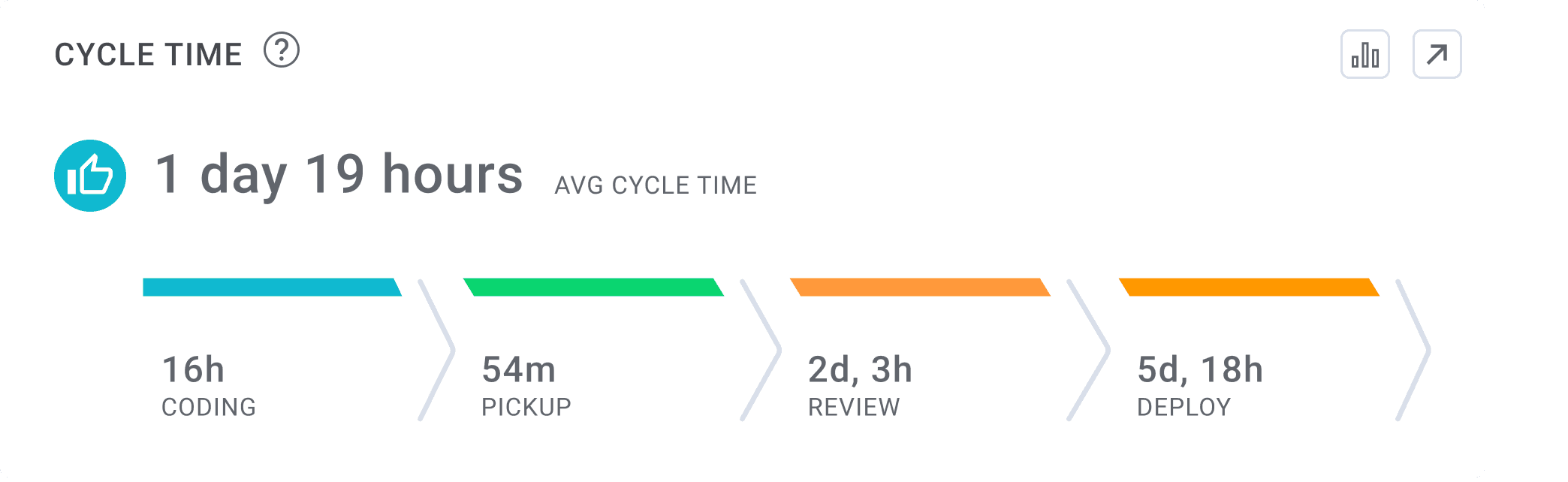

Cycle Time

Cycle Time measures the total time from first commit to production release. It's the most comprehensive velocity metric because it captures your entire development pipeline efficiency. According to our 2025 benchmarks research, elite teams are pushing code from commit to production in under 26 hours, while teams needing improvement take over 167 hours.

What's fascinating is that Cycle Time is closely linked to code quality. Our research found that teams with longer Cycle Times have significantly higher Change Failure Rates. Why? The extended process makes it difficult for developers to keep track of concurrent changes in the codebase, which increases the likelihood of conflicts, outdated code, and fragile dependencies.

To improve Cycle Time, focus on breaking work into smaller chunks and optimizing each phase of your development process, particularly code review and deployment.
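
As a rough illustration of how Cycle Time can be split into phases, here is a minimal sketch. The `PullRequest` class and its field names are hypothetical and not taken from any particular tool, and the sketch assumes the review phase ends at merge.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class PullRequest:
    # Hypothetical field names; map them to whatever your Git provider exposes.
    first_commit: datetime
    pr_opened: datetime
    first_review: datetime
    merged: datetime
    released: datetime


def phase_breakdown(pr: PullRequest) -> dict[str, timedelta]:
    """Split a PR's lifetime into four phases that add up to Cycle Time."""
    return {
        "coding": pr.pr_opened - pr.first_commit,
        "pickup": pr.first_review - pr.pr_opened,
        # Assumption: the review phase is treated as ending at merge.
        "review": pr.merged - pr.first_review,
        "deploy": pr.released - pr.merged,
    }


def cycle_time(pr: PullRequest) -> timedelta:
    """Total time from first commit to production release."""
    return pr.released - pr.first_commit


pr = PullRequest(
    first_commit=datetime(2025, 3, 3, 9, 0),
    pr_opened=datetime(2025, 3, 3, 11, 0),
    first_review=datetime(2025, 3, 3, 12, 0),
    merged=datetime(2025, 3, 3, 14, 30),
    released=datetime(2025, 3, 3, 18, 0),
)
phases = phase_breakdown(pr)
slowest = max(phases, key=phases.get)  # the phase to look at first
```

In this made-up example the total is 9 hours and the slowest phase is `deploy`, which is the kind of signal that tells you where to focus first.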

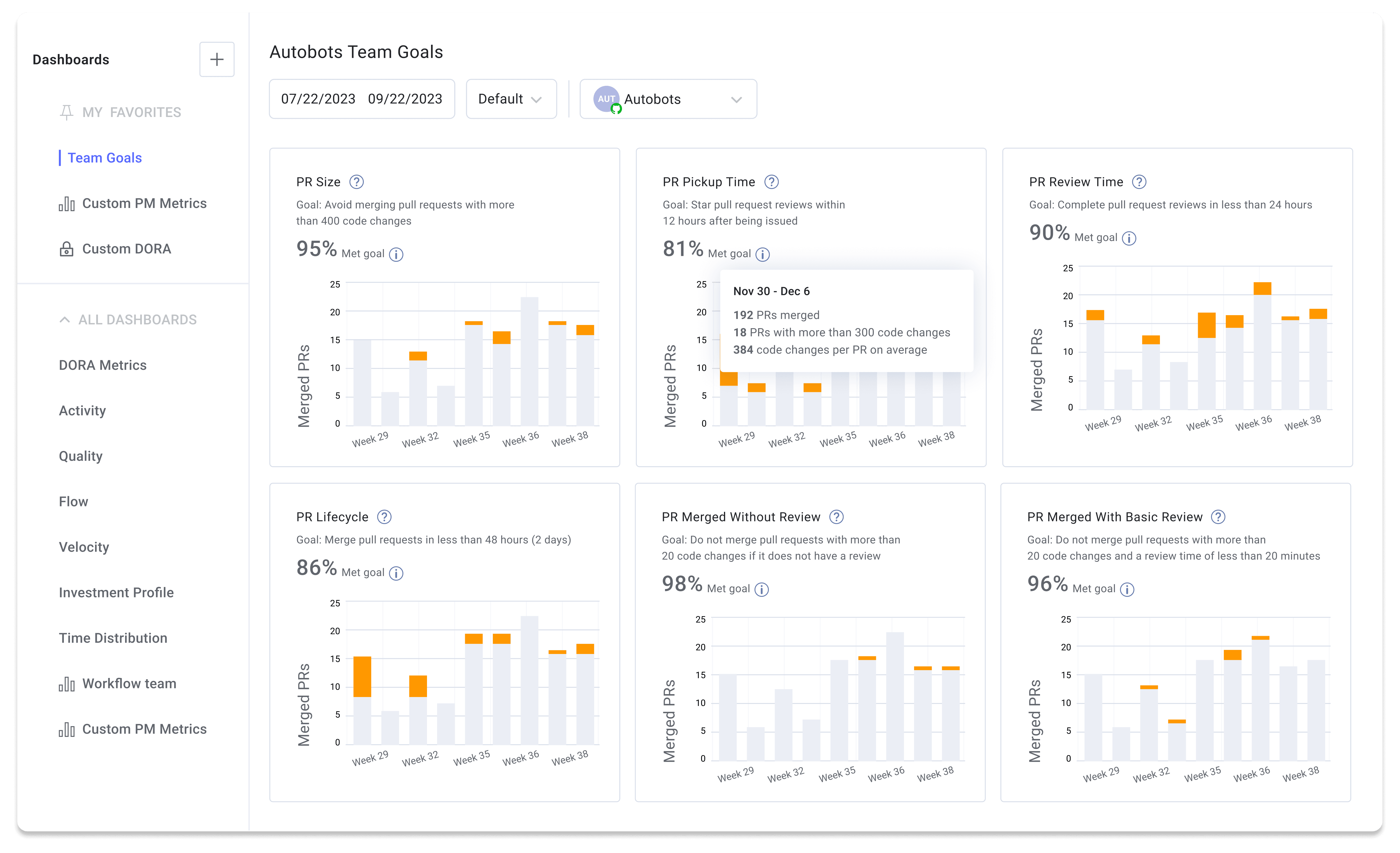

PR Size

PR Size measures the number of code lines modified in a pull request, and our data shows it's a critical driver of engineering velocity.

Here's why PR Size matters so much: Large PRs create bottlenecks at every stage of your development process. They wait longer for review, take longer to approve, and are modified more heavily during the review process. Our data shows a clear correlation between PR Size and Cycle Time.

Elite teams keep their PRs under 85 code changes, while teams needing improvement typically exceed 209 changes. The impact is dramatic - smaller PRs move through your pipeline up to 5x faster. The best way to reduce PR Size is to encourage developers to break work into smaller, more focused changes. Bot Assistants can help by sending notifications when PRs exceed your target size.
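
A size check like the one a bot might run could look roughly like this. It is only a sketch: the function names are hypothetical, and it assumes PR Size is counted as added plus deleted lines.

```python
ELITE_MAX_CHANGES = 85        # "under 85 code changes" in the benchmarks above
NEEDS_IMPROVEMENT_MIN = 209   # "exceed 209 changes" in the benchmarks above


def pr_size(additions: int, deletions: int) -> int:
    """Assumption: PR Size is the number of added plus deleted lines."""
    return additions + deletions


def size_band(changes: int) -> str:
    """Place a PR relative to the two benchmark cutoffs quoted above."""
    if changes < ELITE_MAX_CHANGES:
        return "elite"
    if changes > NEEDS_IMPROVEMENT_MIN:
        return "needs improvement"
    return "in between"


def should_notify(changes: int, target: int = ELITE_MAX_CHANGES) -> bool:
    """True when a PR has reached or passed the team's target size."""
    return changes >= target
```

A team would likely pick its own `target` rather than the elite cutoff; the default here is just a starting point.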

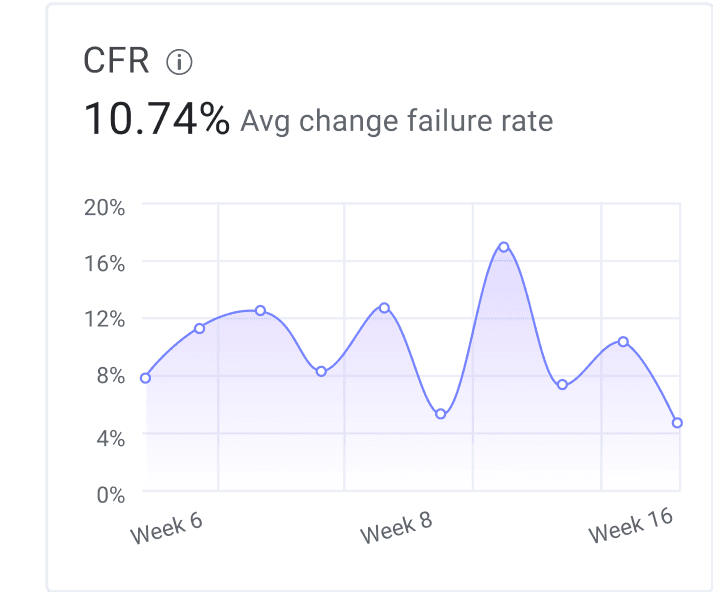

Change Failure Rate

Change Failure Rate (CFR) measures the percentage of deployments causing failures in production. It's a key indicator of code quality and reliability. What makes CFR so valuable is that it's objective - either your deployment broke something or it didn't. Unlike subjective quality metrics, CFR gives you a concrete measure of how stable your code is.

According to our benchmarks, elite teams maintain a CFR below 1%, while teams needing improvement exceed 23%. High CFRs not only disrupt user experience but also create additional work as your team scrambles to fix production issues. Interestingly, our research found that faster teams (those with shorter Cycle Times) actually have lower CFRs. This contradicts the common belief that moving quickly means sacrificing quality.
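
The calculation itself is simple. As a minimal sketch, assuming you already have a yes/no failure flag for each production deployment (how a "failure" is detected is left out here):

```python
def change_failure_rate(deployments: list[bool]) -> float:
    """Percentage of deployments that caused a production failure.

    Each entry is True if that deployment caused a failure, False otherwise.
    """
    if not deployments:
        raise ValueError("need at least one deployment")
    return 100.0 * sum(deployments) / len(deployments)


# Hypothetical month: 200 deployments, 3 of which caused a failure.
history = [True] * 3 + [False] * 197
cfr = change_failure_rate(history)
```

With these made-up numbers the CFR is 1.5%, just above the elite cutoff quoted above.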

Deployment Frequency

Deployment Frequency measures how often your team pushes code to production. It's a key indicator of your team's agility and ability to deliver value quickly.

Elite teams deploy more than once per day per service, while teams needing improvement typically deploy less than once per week. More frequent, smaller deployments mean faster feedback loops and the ability to respond more quickly to changing requirements. They also reduce risk by limiting the scope of each change and make it easier to identify and fix issues when they arise.

Startups typically have higher Deployment Frequencies than enterprises, likely due to their leaner infrastructure and less complex approval processes. But even large organizations can achieve high deployment frequencies by implementing continuous delivery practices.
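
Because the benchmark is expressed per service, it helps to compute the rate that way too. The following is a sketch under the assumption that you can export a log of (service, date) pairs for production deployments; the service names are invented.

```python
from collections import Counter
from datetime import date, timedelta


def deployments_per_day(
    deploys: list[tuple[str, date]], start: date, end: date
) -> dict[str, float]:
    """Average production deployments per day for each service in [start, end]."""
    days = (end - start).days + 1  # inclusive window
    counts = Counter(service for service, day in deploys if start <= day <= end)
    return {service: n / days for service, n in counts.items()}


week_start = date(2025, 3, 3)
week_end = date(2025, 3, 9)
# Hypothetical log: "api" ships twice a day, "billing" once in the whole week.
log = [("api", week_start + timedelta(days=i % 7)) for i in range(14)]
log.append(("billing", date(2025, 3, 5)))

rates = deployments_per_day(log, week_start, week_end)
```

Here `api` averages two deployments per day and clears the once-per-day bar, while `billing` does not.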

Mean Time to Recovery

Mean Time to Recovery (MTTR) measures how quickly your team can restore service after a production failure. It's a critical metric for reliability and user experience.

Elite teams restore service in less than 6 hours, while teams needing improvement take more than 30 hours. Fast recovery requires not only technical capabilities but also effective incident response processes. To improve MTTR, ensure your team has robust monitoring, clear incident response procedures, and the necessary access and tools to diagnose and fix issues quickly.
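
As a minimal sketch of the calculation, assuming each incident is recorded as a (started, restored) timestamp pair:

```python
from datetime import datetime, timedelta


def mean_time_to_recovery(
    incidents: list[tuple[datetime, datetime]]
) -> timedelta:
    """Average time between a failure starting and service being restored."""
    if not incidents:
        raise ValueError("need at least one incident")
    total = sum(
        (restored - started for started, restored in incidents), timedelta()
    )
    return total / len(incidents)


# Hypothetical incidents lasting 2, 4, and 9 hours.
incidents = [
    (datetime(2025, 3, 1, 10, 0), datetime(2025, 3, 1, 12, 0)),
    (datetime(2025, 3, 8, 22, 0), datetime(2025, 3, 9, 2, 0)),
    (datetime(2025, 3, 15, 6, 0), datetime(2025, 3, 15, 15, 0)),
]
mttr = mean_time_to_recovery(incidents)
```

The mean here is 5 hours. Note that a single long outage can dominate the average, so it may be worth looking at the individual durations as well.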

Merge Frequency

Merge Frequency tells you how many PRs are merged per developer per week. It's a direct measure of how often developers are completing work and integrating it into the codebase.

Elite teams achieve more than 2.25 merges per developer per week, while teams needing improvement fall below 0.75. Higher merge frequencies correlate with more frequent value delivery and typically indicate a healthy, productive engineering organization.

Our data shows that merge frequency is closely tied to PR size - smaller PRs are easier to complete and merge, leading to higher merge frequencies. It's also strongly correlated with PR maturity, as well-prepared PRs move through the review process more quickly.
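
The metric normalizes merged PRs by both team size and time. A small sketch, with hypothetical parameter names:

```python
def merge_frequency(merged_prs: int, developers: int, weeks: float) -> float:
    """Merged PRs per developer per week over a reporting window."""
    if developers <= 0 or weeks <= 0:
        raise ValueError("developers and weeks must be positive")
    return merged_prs / developers / weeks


# Hypothetical team: 8 developers merged 80 PRs over 4 weeks.
rate = merge_frequency(merged_prs=80, developers=8, weeks=4)
```

This made-up team lands at 2.5 merges per developer per week, above the elite cutoff of 2.25.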

PR Maturity

PR Maturity measures how "ready" pull requests are when they're submitted for review. Specifically, it's the share of a PR's total changes that were already in place when it was published, so the fewer changes added after publication relative to the total changes in the PR, the higher the maturity.

Elite teams maintain PR Maturity above 91%, while teams needing improvement typically fall below 77%. Higher PR maturity correlates with faster reviews and higher merge frequencies. Our research found that PRs with higher maturity ratios experience shorter pickup times, as reviewers are more willing to review well-prepared code. This creates a virtuous cycle - developers who submit high-quality PRs get faster feedback, which helps them ship more code.
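
One way to compute this is sketched below. It assumes maturity is read as the share of a PR's changes that already existed at publication, so that higher means more ready, in line with the benchmarks above. The parameter names are hypothetical.

```python
def pr_maturity(changes_at_publish: int, changes_after_publish: int) -> float:
    """Percentage of a PR's total changes that were present when it was published."""
    total = changes_at_publish + changes_after_publish
    if total == 0:
        raise ValueError("PR has no changes")
    return 100.0 * changes_at_publish / total


# Hypothetical PR: 184 changed lines at publication, 16 more added during review.
maturity = pr_maturity(changes_at_publish=184, changes_after_publish=16)
```

This example PR scores 92%, just above the 91% elite cutoff.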

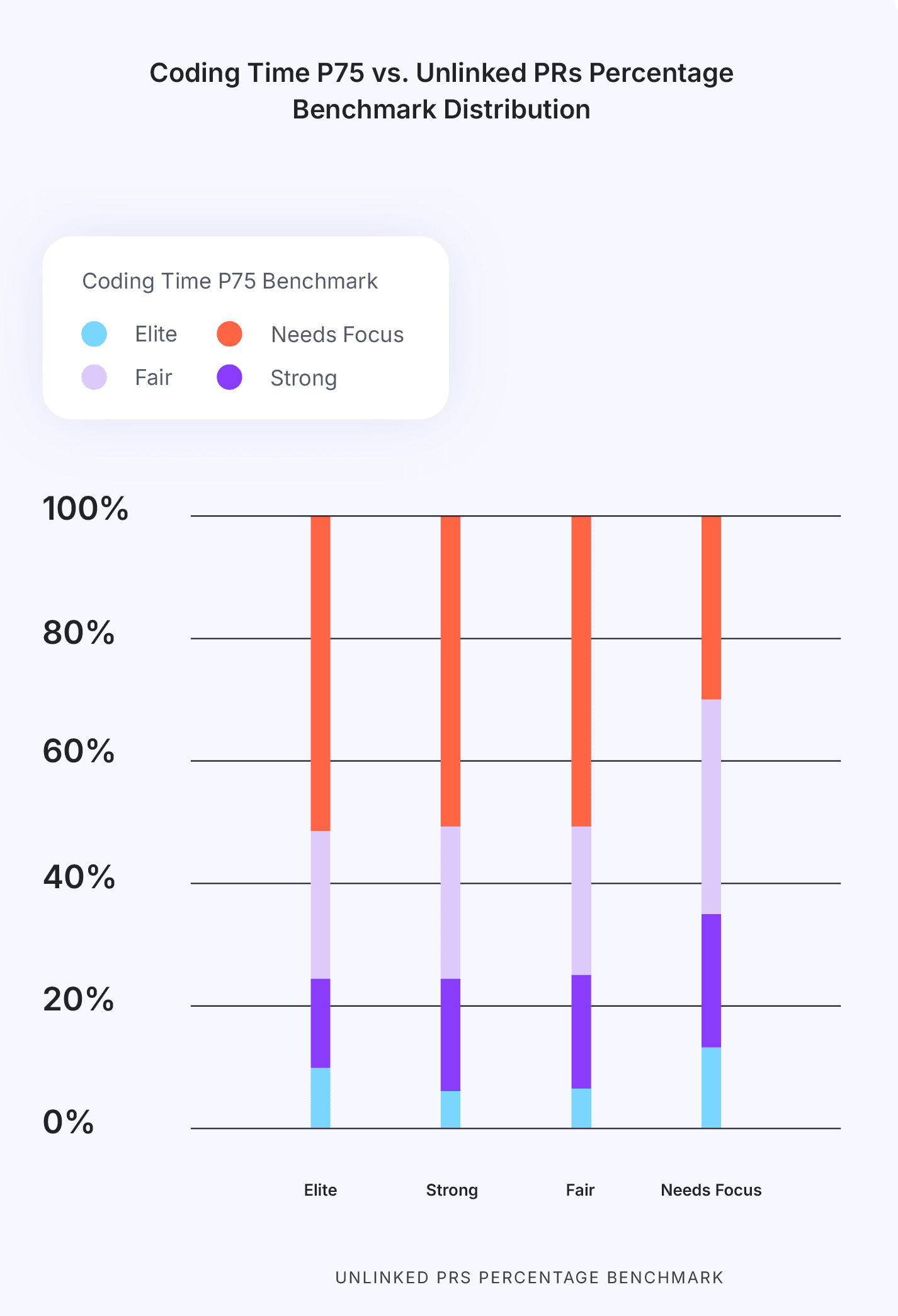

Coding Time

Coding Time measures the time from first commit to PR creation. Elite teams maintain coding times under 1 hour, while teams needing improvement typically exceed 23 hours. Extended coding times often indicate unclear requirements, excessive work-in-progress, or technical challenges.

One surprising finding from our research is that teams with poor project management hygiene (like branches not linked to issues) often have shorter coding times. While this might seem positive, it typically comes at the cost of reduced visibility and predictability.

To improve coding time, ensure requirements are clear before work begins, limit work-in-progress, and address technical debt that might be slowing down implementation.

Pickup Time

Pickup Time measures how long a PR waits for someone to start reviewing it, and is often a major bottleneck in development pipelines.

Elite teams achieve pickup times under 75 minutes, while teams needing improvement typically exceed 16 hours. Long Pickup Times not only extend Cycle Time but can also demotivate developers waiting for feedback.

Our research found that larger PRs wait significantly longer for review, creating a compounding effect on Cycle Time. We also discovered that PRs with higher maturity ratios get picked up faster, as reviewers prefer to review well-prepared code. To reduce pickup time, consider implementing automated review assignments, setting clear expectations for review timeliness, and using notifications to alert reviewers of pending PRs.
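
A reviewer reminder can be as simple as listing PRs that have waited too long. This sketch assumes you can fetch the open, not-yet-reviewed PRs with their publication times; the PR identifiers and the default limit (the elite cutoff above) are illustrative.

```python
from datetime import datetime, timedelta


def awaiting_pickup(
    open_prs: dict[str, datetime],
    now: datetime,
    limit: timedelta = timedelta(minutes=75),
) -> list[str]:
    """IDs of unreviewed PRs that have waited longer than `limit`, longest wait first."""
    waiting = {
        pr_id: now - opened
        for pr_id, opened in open_prs.items()
        if now - opened > limit
    }
    return sorted(waiting, key=waiting.get, reverse=True)


now = datetime(2025, 3, 3, 15, 0)
open_prs = {
    "PR-101": datetime(2025, 3, 3, 14, 30),  # 30 minutes, still within the limit
    "PR-102": datetime(2025, 3, 3, 9, 0),    # 6 hours
    "PR-103": datetime(2025, 3, 2, 15, 0),   # 24 hours
}
overdue = awaiting_pickup(open_prs, now)
```

The result lists `PR-103` before `PR-102`, so the PR that has waited longest is surfaced first.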

Review Time

Review Time measures how long it takes to complete a code review, from first review to PR approval. Efficient reviews are crucial for maintaining development velocity.

Elite teams complete reviews in less than 3 hours, while teams needing improvement typically take more than 24 hours. Our data shows that PR size significantly impacts Review Time, with larger PRs taking substantially longer to review.

To improve Review Time, encourage smaller PRs, provide clear review guidelines, and consider implementing automated checks that can reduce manual review burden. Creating a culture where reviews are prioritized can also help reduce delays.

Deploy Time

Deploy Time measures how long it takes to get code from merge to production. It reflects the efficiency of your deployment process.

Elite teams deploy in less than 6 hours, while teams needing improvement typically take more than 248 hours (over 10 days). Our research found that longer deploy times correlate with higher Change Failure Rates, suggesting that code that sits waiting to be deployed may become outdated or conflict with other changes.

To reduce Deploy Time, invest in deployment automation, implement continuous deployment where appropriate, and streamline approval processes. The goal should be to make deployments routine, low-risk events rather than major operations.

Rework Rate

Rework Rate measures the percentage of changes to recently modified code (less than 21 days old). It indicates how often developers need to fix or modify their recent work.

Elite teams maintain rework rates below 3%, while teams needing improvement typically exceed 7%. High rework rates not only reduce productivity but can also demotivate developers who must repeatedly revisit the same code.

Rework is often caused by unclear requirements, inadequate testing, or rushed implementation. To reduce rework, ensure requirements are clear before work begins, implement thorough code reviews, and consider pair programming for complex changes.

Planning Accuracy

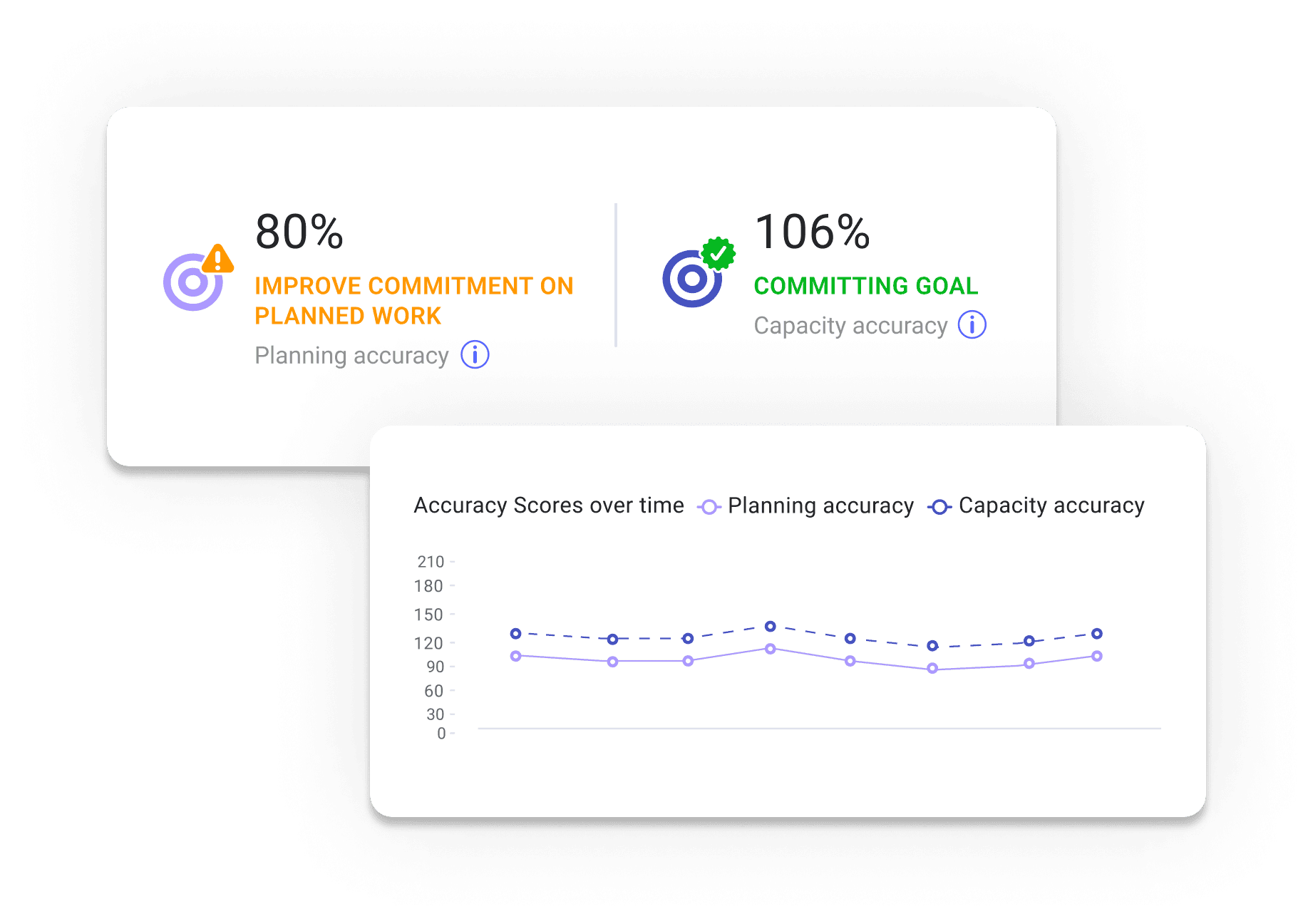

Planning Accuracy measures how well your team delivers on its commitments. Specifically, it's the ratio of planned work that is actually delivered to the total work planned for a sprint or iteration.

Elite teams achieve planning accuracy above 80%, while teams needing improvement typically fall below 45%. High Planning Accuracy enables more reliable forecasting and builds trust with stakeholders.

Our research found that over half of engineering projects under-commit in their iteration plans, with fewer than 20% hitting the ideal range. This suggests many teams are playing it safe with their estimates to ensure they meet commitments. To improve Planning Accuracy, track historical performance, break work into smaller, more predictable units, and regularly review and refine estimation practices.

Capacity Accuracy

Capacity Accuracy measures how many issues a team completed in an iteration compared to the amount planned. It indicates how well your team understands its own capabilities and workload.

The ideal range for Capacity Accuracy is 85-115%, indicating that teams are neither significantly over-committing nor under-utilizing their capacity. Our research found that nearly 70% of teams under-commit in their Capacity Planning, potentially leaving productivity on the table.

Unlike Planning Accuracy, which focuses on completing specific planned items, Capacity Accuracy looks at total work completed versus planned. Together, these metrics give you a complete picture of your team's predictability.
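
The difference between the two metrics is easiest to see side by side. The sketch below assumes issues are identified by ID and counted equally; the issue IDs are invented.

```python
def planning_accuracy(planned: set[str], completed: set[str]) -> float:
    """Percentage of the planned issues that were actually completed."""
    if not planned:
        raise ValueError("nothing was planned")
    return 100.0 * len(planned & completed) / len(planned)


def capacity_accuracy(planned: set[str], completed: set[str]) -> float:
    """All completed issues, planned or not, as a percentage of the number planned."""
    if not planned:
        raise ValueError("nothing was planned")
    return 100.0 * len(completed) / len(planned)


# Hypothetical sprint: 10 issues planned; 7 of them done, plus 4 unplanned issues.
planned = {f"ISSUE-{i}" for i in range(1, 11)}
completed = {f"ISSUE-{i}" for i in range(1, 8)} | {
    f"UNPLANNED-{i}" for i in range(1, 5)
}

planning = planning_accuracy(planned, completed)
capacity = capacity_accuracy(planned, completed)
```

This sprint scores 70% on Planning Accuracy but 110% on Capacity Accuracy: the team had the capacity it expected, but unplanned work displaced part of the plan.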

Refactor Rate

Refactor Rate measures the percentage of changes to legacy code (older than 21 days). It indicates how much effort your team is investing in improving existing code versus developing new features.

Elite teams maintain Refactor Rates below 11%, while teams needing improvement typically exceed 20%. Too low a Refactor Rate might indicate neglect of technical debt, while too high a rate might signal excessive legacy code issues.

Finding the right balance for Refactor Rate depends on your specific context. For teams building new products, a lower Refactor Rate is expected. For teams maintaining mature products, a higher rate might be appropriate as they focus on improving existing functionalities.
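
Rework Rate and Refactor Rate both depend on the age of the code being changed, so they can be derived in one pass. This is a sketch only: it assumes you know, for each changed line, the age in days of the line it replaces (for example from blame data), and it treats a line exactly 21 days old as legacy.

```python
from collections import Counter
from typing import Optional

AGE_CUTOFF_DAYS = 21  # boundary between "recent" and "legacy" code in this guide


def classify_change(replaced_line_age_days: Optional[int]) -> str:
    """Label one changed line by the age of the code it replaces (None = new line)."""
    if replaced_line_age_days is None:
        return "new"
    if replaced_line_age_days < AGE_CUTOFF_DAYS:
        return "rework"
    return "refactor"  # assumption: exactly 21 days counts as legacy


def work_breakdown(ages: list[Optional[int]]) -> dict[str, float]:
    """Percentage of changed lines that are new work, rework, and refactor."""
    if not ages:
        raise ValueError("no changed lines")
    counts = Counter(classify_change(age) for age in ages)
    return {
        kind: 100.0 * counts[kind] / len(ages)
        for kind in ("new", "rework", "refactor")
    }


# Hypothetical week: 85 new lines, 5 edits to 3-day-old code, 10 to 90-day-old code.
ages = [None] * 85 + [3] * 5 + [90] * 10
breakdown = work_breakdown(ages)
```

For this made-up sample the breakdown is 85% new work, 5% rework, and 10% refactor.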

Using KPIs to Drive Sustained Improvement

Tracking KPIs is just the first step. To drive meaningful improvement, you need to use these metrics effectively:

- Establish Baselines: Before setting goals, measure your current performance to establish a baseline for each KPI.

- Set Realistic Goals: Use benchmarks to set achievable improvement targets. Focus on moving up one tier (e.g., from "Fair" to "Good") over a quarter rather than trying to reach "Elite" immediately.

- Balance Your Metrics: Ensure you're tracking both Developer Experience and Developer Productivity metrics to maintain a healthy engineering organization.

- Communicate Clearly: Share KPIs and goals with your team, explaining why each metric matters and how it connects to broader objectives.

- Review Regularly: Schedule regular reviews of your KPIs to assess progress and identify areas for improvement.

- Take Targeted Action: Use KPI insights to implement specific, focused improvements rather than broad, sweeping changes.

- Iterate and Adapt: As your team improves, adjust your goals and potentially introduce new KPIs to address evolving challenges.
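
The first two steps above, establishing a baseline and aiming one tier up, can be sketched as follows. The tier names follow the "Fair"/"Good"/"Elite" wording used above. The 26-hour and 167-hour Cycle Time cutoffs come from this guide, but the 80-hour boundary between "Good" and "Fair" is a placeholder invented for illustration, not a published benchmark.

```python
from statistics import median
from typing import Optional

# (tier name, upper bound in hours), ordered best to worst, for a
# lower-is-better metric. The 80.0 boundary is a PLACEHOLDER, not a benchmark.
CYCLE_TIME_TIERS = [("Elite", 26.0), ("Good", 80.0), ("Fair", 167.0)]


def baseline(samples: list[float]) -> float:
    """Use the median of recent measurements so one outlier does not skew the baseline."""
    return median(samples)


def tier_for(value: float, tiers: list[tuple[str, float]]) -> str:
    """Name of the first tier whose upper bound the value falls under."""
    for name, upper in tiers:
        if value < upper:
            return name
    return "Needs improvement"


def next_tier_target(value: float, tiers: list[tuple[str, float]]) -> Optional[float]:
    """Upper bound of the tier one step better, or None if already in the best tier."""
    better = None
    for _name, upper in tiers:
        if value < upper:
            return better
        better = upper
    return better


# Hypothetical weekly Cycle Times in hours.
current = baseline([110, 130, 120, 95, 140])
tier = tier_for(current, CYCLE_TIME_TIERS)
target = next_tier_target(current, CYCLE_TIME_TIERS)
```

With these sample values the baseline is 120 hours, which falls in "Fair", so the quarterly goal would be to get under the next boundary rather than jumping straight to the elite cutoff.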

Remember that KPIs are tools for improvement, not weapons for criticism. Use them to identify opportunities and celebrate progress, not to punish or create unhealthy competition. Learn how elite engineering teams set goals to drive continuous improvement in the Engineering Leader's Guide to Goals and Reporting.

Conclusion

The 15 KPIs outlined in this guide provide a comprehensive framework for measuring and improving both Developer Experience and Developer Productivity. By tracking these metrics, establishing benchmarks, and implementing targeted improvements, you can create a more efficient, effective engineering organization that delivers high-quality software at speed.

The goal isn't to optimize for metrics in isolation, but to create a balanced, healthy engineering culture that delivers value to users while providing a positive experience for developers. With the right KPIs and a thoughtful approach to improvement, you can achieve both objectives and lead your team to sustained success.