Identify PR Policy Inefficiencies

PR reviews are a critical safeguard against security vulnerabilities, performance regressions, and compliance risks. However, without clear policies in place, important checks can be missed and review processes can break down. Common challenges include:

- Security risks. Sensitive files may be modified without a security review, increasing exposure to vulnerabilities.

- Lack of compliance enforcement. Without automation, internal security policies have no reliable way to ensure that required review steps are followed.

- Unreviewed PRs merged to main. Code changes are pushed without proper scrutiny, bypassing essential peer review and jeopardizing SOC2 compliance.

- Test coverage gaps. PRs that do not update or add tests may degrade code reliability over time.

- Oversized PRs. Large, complex changes are harder to review thoroughly, increasing the chance of undetected issues.

- Lack of AI policy enforcement. AI-generated code, such as GitHub Copilot-assisted contributions, can introduce security risks or bypass team conventions. Without clear labeling, reviewers may not realize which PRs include AI-written code and therefore fail to apply additional scrutiny.

Deploy PR Policy Automations

Implementing automated PR policies with LinearB ensures that critical checks are enforced consistently, reducing security risks and improving compliance.

Automate enforcement of key review policies

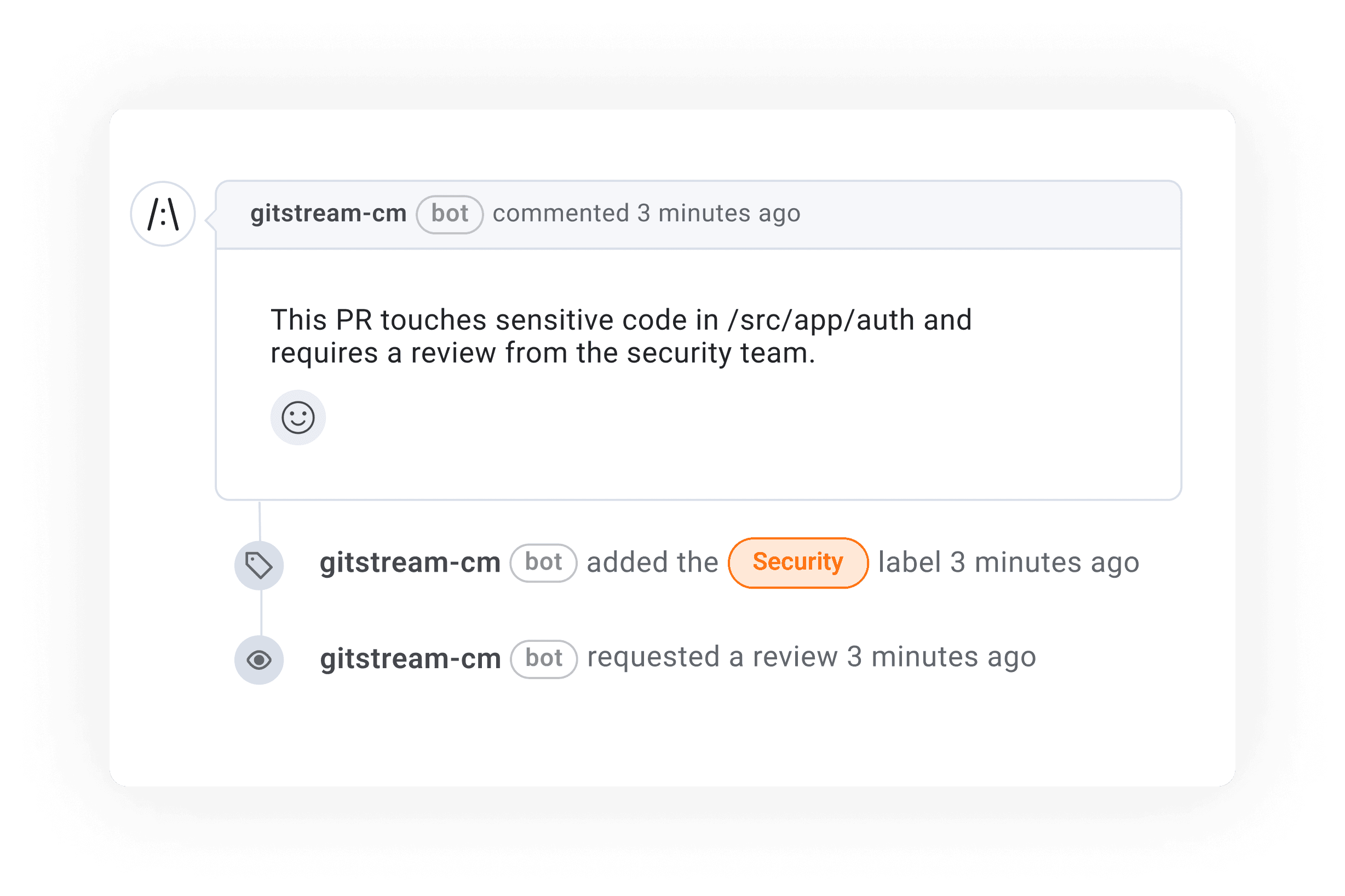

- Review Sensitive Files: Requires security reviews when changes are made to sensitive areas of the codebase.

- Assign Reviewers by Directory: Routes PRs to the appropriate teams based on the affected file paths, ensuring expert oversight.

- Notify Watchlist: Alerts relevant stakeholders when PRs affect critical areas of the codebase.

- Flag Code Merged Without Review: Sends automated alerts when PRs are merged without approval, so unreviewed code that reaches production is surfaced instead of slipping through unnoticed. This is needed for SOC2 compliance.
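As a rough illustration, the first two policies above could be expressed in a gitStream configuration along these lines. This is a minimal sketch, not LinearB's published automation: the `sensitive_paths` list, the `src/frontend/` directory, and the `your-org/security` and `your-org/frontend` team slugs are hypothetical placeholders, and it assumes gitStream's `files` context, the `match` and `some` filters, and the `add-reviewers@v1` and `set-required-approvals@v1` actions.

```yaml
manifest:
  version: 1.0

# Hypothetical paths that count as sensitive; replace with your own
sensitive_paths:
  - "src/auth/"
  - "src/payments/"
  - "config/secrets/"

automations:
  review_sensitive_files:
    if:
      # True when any changed file path contains one of the sensitive paths
      - {{ files | match(list=sensitive_paths) | some }}
    run:
      # Request a review from the (hypothetical) security team
      - action: add-reviewers@v1
        args:
          reviewers: [your-org/security]
      # Require a second approval before merge
      - action: set-required-approvals@v1
        args:
          approvals: 2

  assign_frontend_reviewers:
    if:
      # True when any changed file path contains the (hypothetical) frontend directory
      - {{ files | match(term='src/frontend/') | some }}
    run:
      - action: add-reviewers@v1
        args:
          reviewers: [your-org/frontend]
```

Keeping one small `assign_*` automation per directory makes each routing rule easy to audit and change independently.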

As covered in Find Code Experts, you can assign reviewers by directory, use a custom watchlist, or configure rules to review sensitive files so that security teams are looped in when needed without adding unnecessary friction. This lets PR policies scale to the needs of each PR, on demand, whereas enforcing security and compliance standards manually is error-prone and slows down delivery. For example, flagging code merged without review ensures that unreviewed changes cannot bypass the intended review process unnoticed, which your organization needs in order to be SOC2 compliant. The following gitStream configuration labels, announces in Slack, and comments on any PR merged without approvals:

```yaml
manifest:
  version: 1.0

# === Slack settings ===
slack_webhook_url: "{{ env.SLACK_WEBHOOK }}"
security_team: "your-org/security"

automations:
  flag_merged_without_review:
    on:
      - merge
    if:
      # Check if PR was merged without approvals
      - {{ pr.approvals | length == 0 }}
    run:
      # Apply a visible label
      - action: add-label@v1
        args:
          label: "merged-without-review"
          color: 'F6443B'
      # Notify team in Slack for audit/compliance visibility
      - action: send-slack-message@v1
        args:
          message: |
            ⚠️ PR #{{ pr.number }} - {{ pr.title }} was merged without peer review.
            PR: https://github.com/{{ repo.owner }}/{{ repo.name }}/pull/{{ pr.number }}
          webhook_url: "{{ slack_webhook_url }}"
      # Add comment in PR for permanent visibility
      - action: add-comment@v1
        args:
          comment: |
            ⚠️ This PR was merged without peer review.
            Tagging @{{ security_team }} for awareness.
```

Ensure PR quality with automated checks

- Ask AI to Suggest Tests: Uses AI to suggest additional test cases for uncovered or modified functions in the PR, including edge cases.

- Label PRs Without Tests: Tags PRs that don’t include test updates, helping teams maintain test coverage standards.

- Label Deleted Files: Identifies PRs that remove files, ensuring these changes receive proper attention.

- Additional Review for Large PRs: Requires multiple reviewers for complex changes to prevent oversights and maintain quality.

- Automatically Label GitHub Copilot PRs: Flags PRs that were assisted by GitHub Copilot, based on a list of known Copilot users, PR tags, or the author's own answer. This makes it easier to enforce AI-generated code policies and apply appropriate review scrutiny.

One of the easiest ways to prevent regressions is to label PRs without tests, prompting developers to add tests before merging. Similarly, use an automation to label deleted files so they receive appropriate scrutiny. For complex changes, requiring an additional review for large PRs lets multiple reviewers weigh in, giving larger updates the attention they need.
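A minimal gitStream sketch of the three checks just described might look like the following. It is modeled on common gitStream patterns rather than LinearB's exact published automations; the label names, colors, and the 500-line threshold for a "large" PR are hypothetical choices, and it assumes the `files`, `source.diff.files`, and `branch.diff.size` context variables and the `set-required-approvals@v1` action are available in your gitStream setup.

```yaml
manifest:
  version: 1.0

# Reusable conditions referenced by the automations below
has:
  # A deleted file appears in the diff with /dev/null as its new path
  deleted_files: {{ source.diff.files | map(attr='new_file') | match(term='/dev/null') | some }}
is:
  # Hypothetical threshold: more than 500 changed lines counts as large
  large_pr: {{ branch.diff.size > 500 }}

automations:
  label_missing_tests:
    if:
      # No changed file path contains "test" or "spec"
      - {{ files | match(regex=r/(test|spec)/) | nope }}
    run:
      - action: add-label@v1
        args:
          label: "missing-tests"
          color: "E94637"

  label_deleted_files:
    if:
      - {{ has.deleted_files }}
    run:
      - action: add-label@v1
        args:
          label: "deleted-files"
          color: "DF9C04"

  double_review_large_prs:
    if:
      - {{ is.large_pr }}
    run:
      - action: add-label@v1
        args:
          label: "large-pr"
          color: "FBCA04"
      # Require two approvals instead of one
      - action: set-required-approvals@v1
        args:
          approvals: 2
```

Defining `has.deleted_files` and `is.large_pr` as named conditions keeps each automation's `if` block readable. Note that a required-approvals rule is likely only enforced (rather than advisory) when gitStream is configured as a required status check in your branch protection settings, which is an assumption about your repository setup.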

AI-generated code is becoming more prevalent, but visibility remains a challenge. Without clear indicators, reviewers might assume a PR was written entirely by a human and miss opportunities to catch AI-related errors or inconsistencies. By automatically labeling GitHub Copilot PRs, teams can apply additional scrutiny where necessary, ensuring AI-assisted code meets security and quality standards before merging.

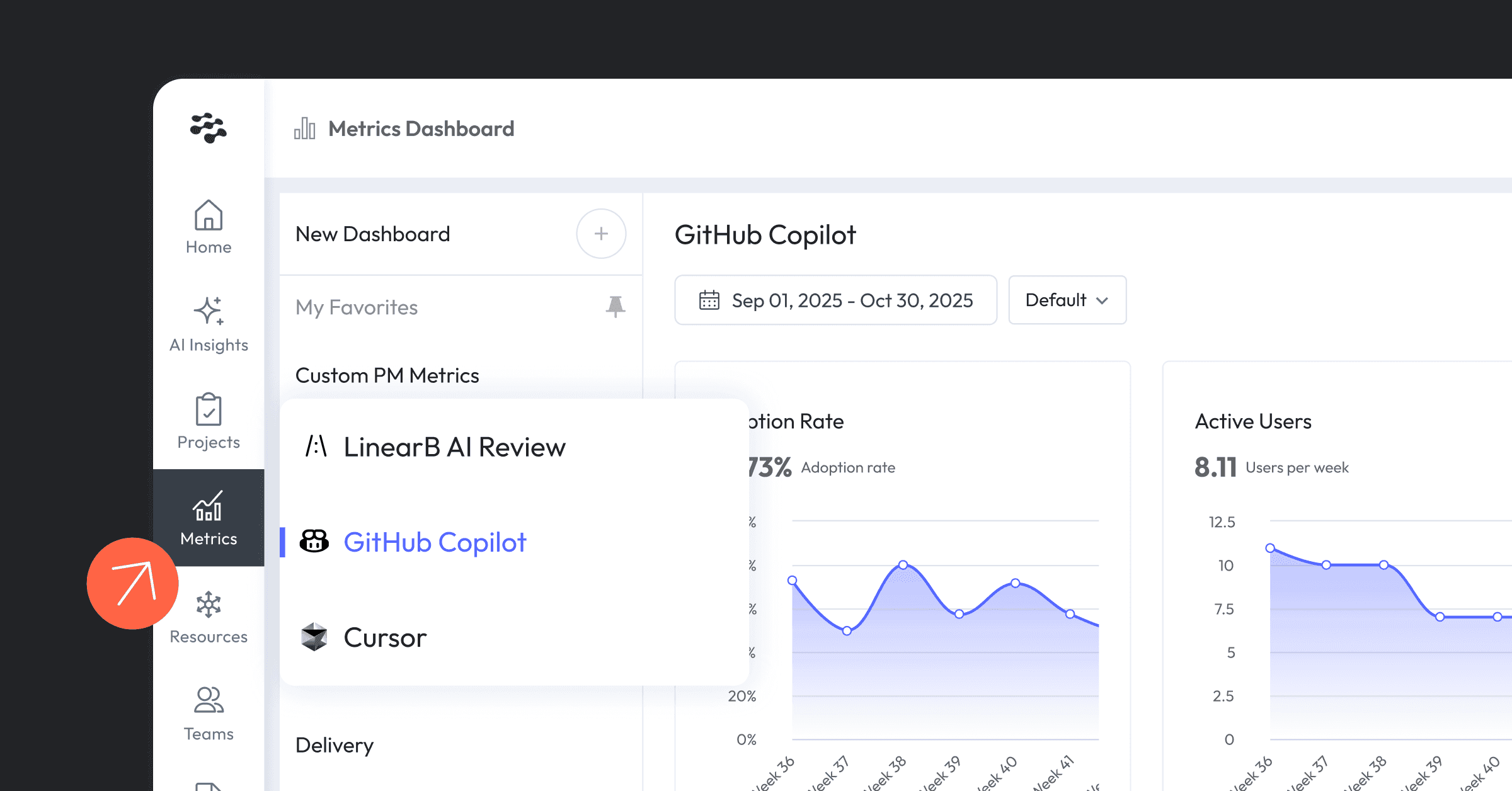

For example, you could progressively track GitHub Copilot adoption by doing the following:

- Start by labeling PRs from a known list of early Copilot adopters with gitStream.

- Transition to prompting all contributors to self-report Copilot usage: use gitStream to add a checkbox comment to each PR, then apply a label based on which box the author checks.

To achieve the first step, you would start with a config like this:

```yaml
manifest:
  version: 1.0

# === Known early Copilot testers ===
copilot_users:
  - "alice"
  - "bob"
  - "carol"

automations:
  flag_copilot_early_adopters:
    if:
      # Check if PR author is a known Copilot early adopter
      - {{ pr.author | match(list=copilot_users) | some }}
    run:
      # Apply a label
      - action: add-label@v1
        args:
          label: "copilot-assisted"
          color: "BFDADC"
      # Add comment to remind reviewers
      - action: add-comment@v1
        args:
          comment: |
            🤖 This PR was flagged as AI-assisted based on known Copilot early adopters.
```

Over time, this type of configuration can evolve as AI-assisted coding is rolled out across your organization. Eventually, every PR could receive an automated comment that lets contributors flag Copilot-assisted contributions, which then applies a label:

```yaml
manifest:
  version: 1.0

automations:
  comment_copilot_prompt:
    # Run when the PR is created
    on:
      - pr_created
    # Prompt PR author to self-report Copilot usage
    if:
      - true
    run:
      - action: add-comment@v1
        args:
          comment: |
            Please indicate whether you used Copilot to assist with this PR:
            - [ ] Copilot Assisted
            - [ ] Not Copilot Assisted
```

In a separate configuration file, you would write a second automation that checks whether the "Copilot Assisted" checkbox in the comment above has been marked:

```yaml
manifest:
  version: 1.0

automations:
  label_copilot_pr:
    # Look for author’s self-reported Copilot confirmation
    if:
      - {{ pr.comments | filter(attr='commenter', term='gitstream-cm') | filter(attr='content', regex=r/\- \[x\] Copilot Assisted/) | some }}
    run:
      - action: add-label@v1
        args:
          label: "🤖 Copilot"
```

If there are PR characteristics unique to your organization that you want to track (for example, PRs that impact a specific app in a monorepo or PRs that change database migrations), you can write your own labeling automation in YAML for gitStream, tailored to your team’s needs. These policies can then enrich PR Notifications for your engineers.
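For instance, custom labels for the two cases just mentioned could be sketched as follows. This assumes, hypothetically, that migrations live under a `migrations/` directory and that the monorepo app lives under `apps/billing/`; the paths, label names, and colors are illustrative placeholders, not values from LinearB.

```yaml
manifest:
  version: 1.0

automations:
  label_db_migrations:
    if:
      # True when any changed file path contains "migrations/" (hypothetical layout)
      - {{ files | match(term='migrations/') | some }}
    run:
      - action: add-label@v1
        args:
          label: "db-migration"
          color: "5319E7"

  label_billing_app:
    if:
      # True when any changed file path contains the hypothetical billing app directory
      - {{ files | match(term='apps/billing/') | some }}
    run:
      - action: add-label@v1
        args:
          label: "app: billing"
          color: "0E8A16"
```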

Track PR Policy Impact with Key Metrics

Automated PR review policies let your organization’s oversight scale to fit each change, de-risking work across your engineering organization. They ensure every high-risk PR (whether it touches sensitive files, lacks tests, or involves AI-generated code) gets the scrutiny it needs.

Use PR policies to reduce change failure rate

Change failure rate (CFR), the percentage of deployments that cause a failure in production, often rises when risky changes slip through review. Without enforced policies, critical checks get missed.
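Written as a formula, using the definition above:

$$
\text{CFR} = \frac{\text{deployments that cause a failure in production}}{\text{total deployments}} \times 100\%
$$

For example, a hypothetical team with 3 failed deployments out of 60 in a given period would have a CFR of $3/60 \times 100\% = 5\%$.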

Routing PRs to the right experts, blocking unapproved merges, and requiring extra reviews for large changes all contribute to more thorough reviews and fewer failures in production. This lowers CFR, boosts reliability, and builds confidence in every release.

Reduce rework with clear PR policies

High rework rates commonly result from reviews that miss key issues before merge. Automated PR policies close these gaps upfront: routing reviews by expertise and requiring extra reviewers for complex changes catch potential problems early. This reduces back-and-forth, shortens review cycles, and ensures code quality standards are met the first time.

Improve review depth with automated PR policies

When expectations aren’t defined, reviewers may give complex or high-risk changes only a cursory look, which shows up as shallow review depth. This metric is crucial because it highlights the thoroughness of code reviews, which directly influences code quality, and it offers insight into the level of collaboration among team members. Automated PR policies help ensure that every PR gets the appropriate level of scrutiny for your organization.

Rather than relying on manual enforcement, these automated policies allow teams to shift from reactive fixes to proactive governance. This enables faster development cycles, improved security posture, and reduced operational risk, all while scaling best practices across the organization. When review policies are automated, teams can focus on delivering value without worrying about compliance gaps.