Identify PR Blindspots

Pull requests (PRs) are the heartbeat of modern software development, but without clear context, they can become blockers or even hazards. Lack of visibility slows down reviews, increases cognitive load for developers (“what’s going on with this one?”), and creates ambiguity around PR status and impact. Common challenges include:

- PRs lack contextual details. Reviewers often open a PR only to find little or no description, making it difficult to understand what changes were made and why. This leads to unnecessary back-and-forth, delaying approvals.

- Poor PR descriptions create confusion and slow down reviews. Writing a maximally helpful description takes sustained, uninterrupted focus. Only the code author can capture that context, and only within a narrow window of time. Disjointed processes lose that context before a review even starts.

- Difficulty tracking the impact and lifetime of AI-assisted code. As AI-generated code (e.g., from GitHub Copilot) becomes more common, teams struggle to monitor how and where it's being used. AI already generates an estimated 41% of all code, with 256 billion lines written in 2024 alone. That's up from an earlier estimate of 20% of all code, or 1 in every 5 lines. Without labeling, there's no clear way to assess its impact, maintainability, or potential risks over time.

- Teams miss opportunities to improve PR efficiency, quality, and governance. Automated PR labels bring clarity, helping teams move faster and build trust in their development processes.

Deploy PR Labeling Automations

Automated PR labeling with LinearB’s gitStream provides instant visibility into PR status, review progress, and AI-assisted contributions, removing guesswork for reviewers and improving the team’s velocity.

Improve visibility of PR status indicators

- Add PR Description Using LinearB's AI: Auto-generates and appends a summary to PRs, reducing the burden on developers to manually provide context.

- Label the Number of Approvals: Labels PRs with the number of approvals received, providing instant visibility into approval progress.

- Label Unresolved Review Threads: Flags PRs that have unresolved comments, ensuring open discussions are addressed before merging.

- Provide Estimated Time to Review: Assigns a time estimate label to each PR, helping reviewers plan their workload more effectively.

- Request Screenshot: If a PR involves UI changes but lacks an image, applies a "no-screenshot" label and requests one.

- Label Modified Resources: Labels PRs based on the types of resources modified (e.g., sub-systems, libraries, infrastructure). This provides immediate visibility into what parts of the codebase are affected.

- Automatically Label GitHub Copilot PRs: Flags PRs that were assisted by GitHub Copilot, using a predefined list of Copilot users, PR tags, or author confirmation.
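To make the idea concrete, here is a minimal Python sketch of how status labels like the ones above could be derived from a PR's state. It is not gitStream's implementation or configuration syntax: the `PullRequest` fields, the `UI_EXTENSIONS` tuple, the label strings, and the externally supplied review-time estimate are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical PR record; field names are illustrative, not gitStream's context schema.
@dataclass
class PullRequest:
    approvals: int
    unresolved_threads: int
    changed_files: list[str]
    description: str
    est_review_minutes: int  # assumed to come from some separate estimator

# Assumption: files with these extensions count as UI changes.
UI_EXTENSIONS = (".css", ".scss", ".html", ".jsx", ".tsx")

def status_labels(pr: PullRequest) -> list[str]:
    """Return the status labels a labeling automation might apply to this PR."""
    labels = [f"approvals: {pr.approvals}"]  # direct status: approval progress
    if pr.unresolved_threads > 0:
        labels.append("unresolved-threads")  # open discussions block merging
    touches_ui = any(path.endswith(UI_EXTENSIONS) for path in pr.changed_files)
    has_image = "![" in pr.description or "<img" in pr.description
    if touches_ui and not has_image:
        labels.append("no-screenshot")  # UI change without a screenshot
    labels.append(f"{pr.est_review_minutes} min review")  # derived status
    return labels

pr = PullRequest(
    approvals=1,
    unresolved_threads=2,
    changed_files=["src/Button.tsx", "README.md"],
    description="Refactor button",
    est_review_minutes=15,
)
print(status_labels(pr))  # ['approvals: 1', 'unresolved-threads', 'no-screenshot', '15 min review']
```

Keeping direct statuses (approvals, threads) and derived statuses (the review estimate) in one function mirrors how the labels read together as a single at-a-glance summary on the PR.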

Automated labels act as a real-time dashboard for PRs, reducing manual tracking and status confusion. Instead of digging through comments or guessing whether a PR is ready, teams get instant visibility, accelerating cycle time while maintaining quality. Your team benefits not only from direct statuses like approvals and unresolved threads, but also from derived statuses like the estimated time to review.

Labels for modified resources offer two key benefits. First, reviewers can quickly understand which parts of your codebase are impacted, making it easier to assign appropriate reviewers or anticipate testing requirements. Second, these labels unlock deeper insights when paired with LinearB metrics. For example, you can compare cycle time, pickup time, or review depth across resource types, such as “TypeScript”, “mobile”, or “backend”, and pinpoint where bottlenecks exist. This allows you to optimize specific areas of the delivery process.
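As a rough illustration of the comparison described above, the Python sketch below groups merged PRs by resource label and reports the median pickup time per label. The PR records and hours are made-up numbers, and the input shape is a hypothetical export rather than a LinearB API; the LinearB platform performs this kind of filtering for you.

```python
from collections import defaultdict
from statistics import median

# Hypothetical export of merged PRs: (resource labels, pickup time in hours).
prs = [
    ({"TypeScript", "backend"}, 4.0),
    ({"mobile"}, 20.0),
    ({"backend"}, 6.0),
    ({"mobile"}, 16.0),
    ({"TypeScript"}, 2.0),
]

def median_by_label(rows):
    """Median pickup time for each label; a PR with several labels counts toward each."""
    buckets = defaultdict(list)
    for labels, hours in rows:
        for label in labels:
            buckets[label].append(hours)
    return {label: median(values) for label, values in buckets.items()}

# Print the slowest resource types first to surface likely bottlenecks.
for label, hours in sorted(median_by_label(prs).items(), key=lambda kv: kv[1], reverse=True):
    print(f"{label}: {hours:.1f} h median pickup")
```

The median is used instead of the mean so that a single stalled PR does not dominate a label's result.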

Save the submitting developer time and accelerate the pickup of the review by adding an AI-generated description of the proposed changes to every human-submitted PR. You can achieve this by configuring simple YAML in your repo’s .cm/gitstream.cm file:

```yaml
manifest:
  version: 1.0

automations:
  pr_description:
    # Trigger when PR is created or updated with new commits
    on:
      - pr_created
      - commit
    # Skip generating descriptions for draft PRs or bot accounts
    if:
      - {{ not pr.draft }}
      - {{ pr.author | match(list=['github-actions', 'dependabot', '[bot]']) | nope }}
    run:
      - action: describe-changes@v1
        args:
          # The mode to add the description, can be replace, append, or prepend
          concat_mode: append
```

With these wins in place, some of your next goals likely include PR Enrichment so you can sync these efforts to your project management platform, elevating the dashboard-like insights to your internal product-led organization.

Track and manage AI-assisted contributions

Labeling AI-assisted PRs is not about blocking, but about tracking and learning (without adding friction), so teams can refine their approach and gain a decision-making advantage. By automatically labeling AI-assisted PRs, organizations can monitor adoption trends, assess code maintainability, and build governance policies around AI usage. Tracking key metrics such as PR size, rework rate, and PRs merged without review (see the next section) can help leaders understand whether AI-generated code is improving productivity or introducing risks.
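The metrics named above can be compared directly once AI-assisted PRs carry a label. This Python sketch is illustrative only: the `MergedPR` fields are hypothetical, and "reworked lines" is a simplified stand-in for rework (LinearB's own metric definitions may differ).

```python
from dataclasses import dataclass

@dataclass
class MergedPR:
    ai_assisted: bool    # e.g., the PR carries a Copilot label
    lines_changed: int   # PR size
    reworked_lines: int  # assumption: lines that rewrite recently written code
    reviews: int         # number of reviews before merge

def summarize(prs):
    """PR size, rework rate, and share merged without review for one group."""
    n = len(prs)
    total_lines = sum(p.lines_changed for p in prs)
    return {
        "avg_pr_size": total_lines / n,
        "rework_rate": sum(p.reworked_lines for p in prs) / total_lines,
        "merged_without_review": sum(p.reviews == 0 for p in prs) / n,
    }

def compare(prs):
    """Summarize AI-assisted and other PRs separately, skipping empty groups."""
    groups = {
        "ai_assisted": [p for p in prs if p.ai_assisted],
        "other": [p for p in prs if not p.ai_assisted],
    }
    return {name: summarize(group) for name, group in groups.items() if group}

# Made-up sample data for illustration.
sample = [
    MergedPR(True, 300, 60, 1),
    MergedPR(True, 100, 20, 0),
    MergedPR(False, 120, 12, 2),
    MergedPR(False, 80, 8, 1),
]
for name, stats in compare(sample).items():
    print(name, stats)
```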

Track Label Impact with Key Metrics

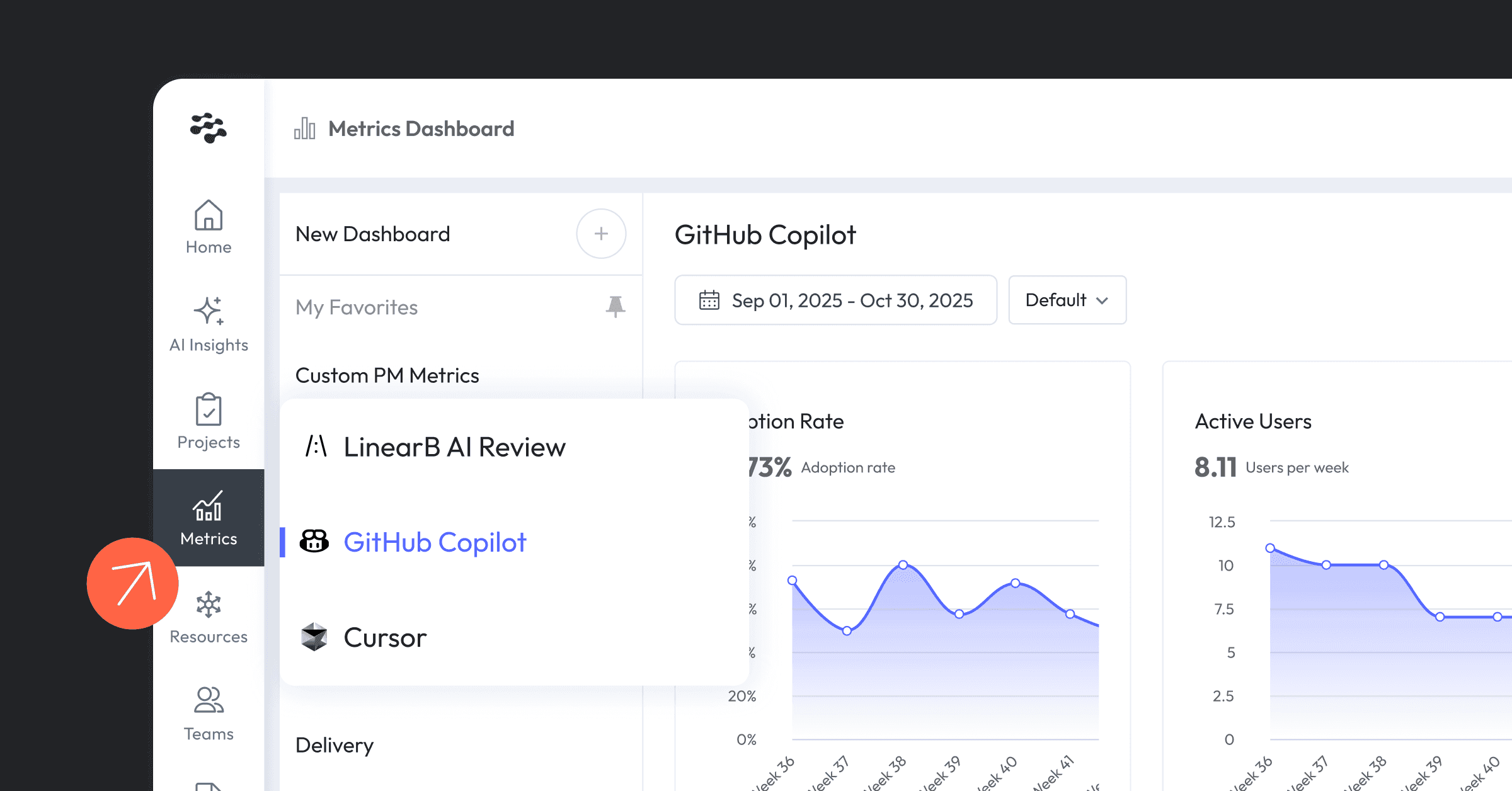

To measure the impact of automated label assignment, the LinearB platform connects labels to developer productivity outcomes. By tying label automation efforts to these key metrics, you can demonstrate a clear ROI to stakeholders. This section provides some examples of how you can use metrics to drive productivity improvements with automatic PR labels.

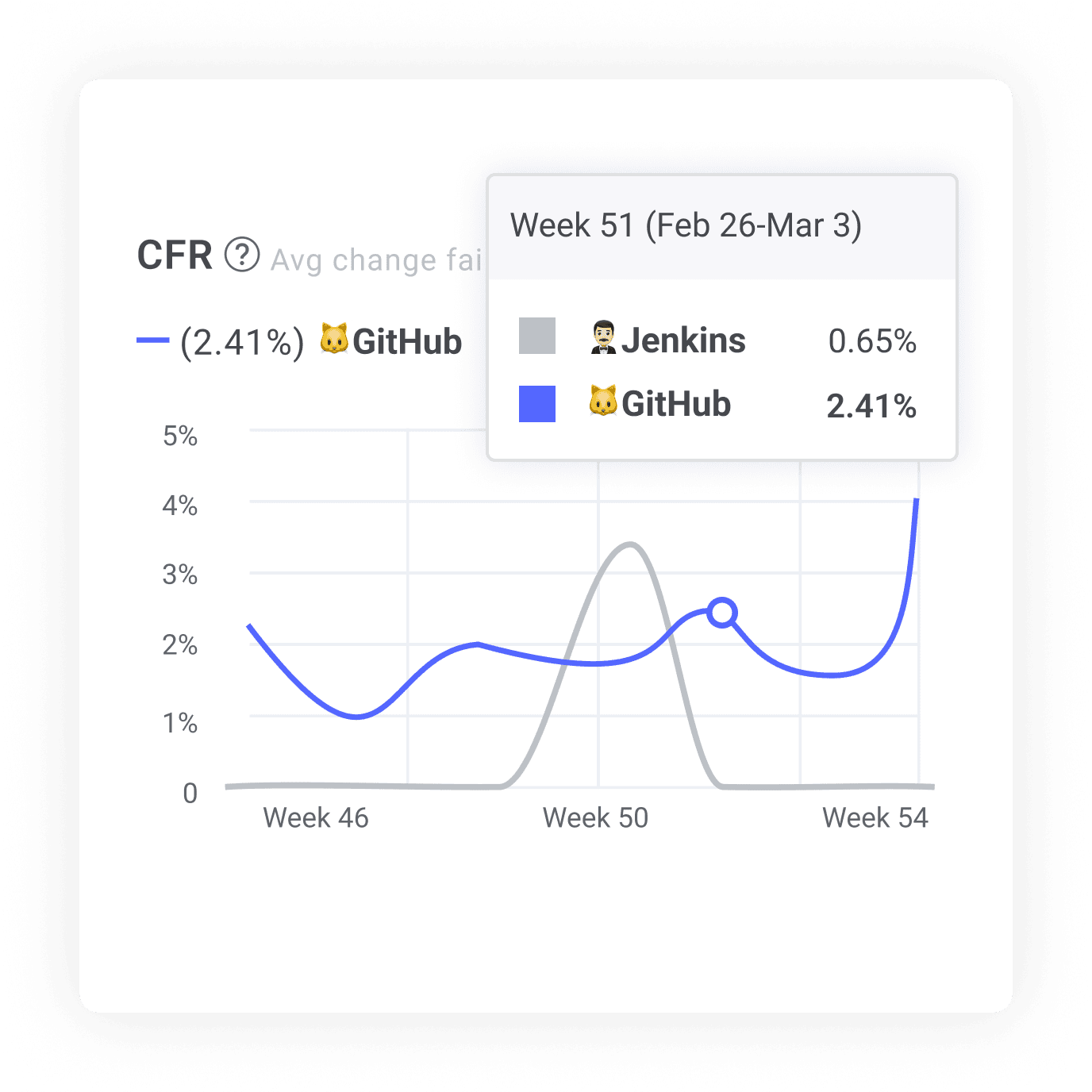

Use CFR to A/B test new tools

Change failure rate (CFR) measures the percentage of deployments that fail, and is an effective way to measure software quality risks. It can help you pinpoint services, frameworks, libraries, and other tools that may be introducing quality problems to your organization.

By filtering CFR by label (e.g., different CI/CD systems), leaders can pinpoint which pipelines introduce more failures. High CFR exposes problems with testing, quality gates, or infrastructure, guiding teams to improve stability, build developer trust, and reduce operational risk.
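Since CFR is simply failed deployments divided by total deployments, filtering by label amounts to computing that ratio per label. A minimal Python sketch, assuming a hypothetical deployment log in which each entry records a pipeline label and whether the deployment failed (labels simplified to plain text, data invented):

```python
from collections import Counter

# Hypothetical deployment log: (pipeline label, deployment failed?).
deployments = [
    ("GitHub", False), ("GitHub", True), ("GitHub", False), ("GitHub", False),
    ("Jenkins", True), ("Jenkins", False), ("Jenkins", True), ("Jenkins", False),
]

def cfr_by_label(events):
    """Change failure rate, in percent, for each label."""
    total, failed = Counter(), Counter()
    for label, did_fail in events:
        total[label] += 1
        failed[label] += did_fail  # True counts as 1, False as 0
    return {label: 100 * failed[label] / total[label] for label in total}

print(cfr_by_label(deployments))
```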

For example, you could use CFR to A/B test CI/CD services by doing the following:

- Split comparable teams or services into two groups, each using a different CI/CD tool.

- Use gitStream to automatically label PRs based on the CI/CD services they activate.

- Filter your CFR chart in the LinearB platform based on the CI/CD labels and monitor a fixed number of deployments or sprints to compare pipeline quality.
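Step 3 can be sketched in Python as follows: compare CFR over the first fixed number of deployments for each group. The two-proportion z-test is an addition of this sketch, not a LinearB feature; it is one standard way to check whether a CFR gap is larger than chance would explain. The input lists are hypothetical.

```python
import math

def ab_compare_cfr(group_a, group_b, window):
    """Compare CFR of two groups over their first `window` deployments each.

    group_a, group_b: sequences of booleans, True meaning the deployment failed.
    """
    if window <= 0:
        raise ValueError("window must be positive")
    if len(group_a) < window or len(group_b) < window:
        raise ValueError("not enough deployments yet for the chosen window")
    fails_a = sum(group_a[:window])
    fails_b = sum(group_b[:window])
    # Pooled failure rate under the hypothesis that both tools fail equally often.
    pooled = (fails_a + fails_b) / (2 * window)
    se = math.sqrt(pooled * (1 - pooled) * (2 / window))
    z = (fails_a - fails_b) / window / se if se > 0 else 0.0
    # Two-sided p-value from the standard normal distribution: 2 * (1 - Phi(|z|)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return {
        "cfr_a": 100 * fails_a / window,
        "cfr_b": 100 * fails_b / window,
        "z": z,
        "p_value": p_value,
    }

# Hypothetical results after 50 deployments per CI/CD tool.
github = [True] * 5 + [False] * 45
jenkins = [True] * 12 + [False] * 38
print(ab_compare_cfr(github, jenkins, window=50))
```

With these invented numbers, the CFR gap looks large (10% vs. 24%), yet the p-value lands just above 0.05. This is a reminder to choose a window long enough for the comparison to be meaningful.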

To achieve step 2, write a YAML configuration that distinguishes the work of the two teams. Because each team uses a single CI/CD service, the PR author's team can serve as a proxy for the pipeline. For example:

```yaml
manifest:
  version: 1.0

variables:
  ci_cd_groups:
    - label: "🐱 GitHub"
      team: "team-swan"
    - label: "🤵🏻‍♂️ Jenkins"
      team: "team-flamingo"

automations:
  label_ci_cd_group:
    forEach: {{ ci_cd_groups }}
    if:
      - {{ pr.author_teams | match(term=item.team) | some }}
    run:
      - action: add-label@v1
        args:
          label: {{ item.label }}
```

With that automation in place, you would be able to filter by that label across all of your LinearB metrics, including CFR. This approach allows you to aggregate data across different teams to measure the impact of changes.
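For readers less familiar with gitStream's filter syntax, the team-to-label logic of the automation above can be mirrored in a short Python sketch. It assumes that `match(term=...)` checks whether each author team name contains the term and that `some` is true when at least one check succeeds; consult the gitStream filter documentation for the exact semantics.

```python
# Mirrors the ci_cd_groups list from the YAML configuration above.
CI_CD_GROUPS = [
    {"label": "🐱 GitHub", "team": "team-swan"},
    {"label": "🤵🏻‍♂️ Jenkins", "team": "team-flamingo"},
]

def ci_cd_labels(author_teams, groups=CI_CD_GROUPS):
    """Labels the automation would add for a PR whose author belongs to author_teams."""
    labels = []
    for item in groups:  # one pass per group, like forEach
        # Approximates: pr.author_teams | match(term=item.team) | some
        if any(item["team"] in team for team in author_teams):
            labels.append(item["label"])
    return labels

print(ci_cd_labels(["team-swan"]))
```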

Minimize review time

Review time measures how long it takes to approve a PR once review starts. PR labels streamline this by surfacing clear, actionable status indicators. In fact, this metric is where you will likely see the biggest impact from implementing automatic PR labels with gitStream.

Labels like unresolved threads, estimated review time, and modified resources give reviewers instant visibility. Reviewers can then focus on approvals instead of manual checks or clarification requests, which reduces review time.

When PRs are clearly labeled with their current status (such as needing screenshots), reviewers can make faster, better-informed decisions about where to spend their limited time and attention. This builds momentum for both them and the broader team.
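Because review time runs from the start of review to approval, it can be computed from two timestamps per PR. The Python sketch below compares median review time across two periods, before and after rolling out labels. The timestamps are invented for illustration. A simple before/after split does not control for other changes in the same period, so treat the result as a rough signal.

```python
from datetime import datetime
from statistics import median

def review_hours(review_started: str, approved: str) -> float:
    """Review time in hours, from first review activity to approval (ISO 8601 strings)."""
    start = datetime.fromisoformat(review_started)
    end = datetime.fromisoformat(approved)
    return (end - start).total_seconds() / 3600

def median_review_hours(prs):
    """Median review time over (review_started, approved) pairs."""
    return median(review_hours(start, end) for start, end in prs)

# Hypothetical PRs before and after enabling automatic labels.
before = [("2024-03-04T09:00", "2024-03-05T09:00"), ("2024-03-06T10:00", "2024-03-06T22:00")]
after = [("2024-05-06T09:00", "2024-05-06T15:00"), ("2024-05-07T13:00", "2024-05-07T17:00")]

print(f"before: {median_review_hours(before):.1f} h, after: {median_review_hours(after):.1f} h")
```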

Use cycle time to measure efficiency gains

Automatically labeling GitHub Copilot-assisted PRs gives teams the visibility needed to track how AI is impacting their development process without adding friction for developers. By distinguishing AI-assisted work, organizations can analyze cycle time specifically for these PRs and compare it to manually written code.

This helps identify whether AI tools like Copilot are truly speeding things up, or whether extra review time is needed. The insight provides a foundation for optimizing AI tool usage, developing governance policies, and identifying opportunities to further reduce review bottlenecks.
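A minimal Python sketch of that comparison, assuming each merged PR is exported as a hypothetical `(is_copilot_labeled, cycle_time_hours)` pair. It reports the median cycle time for each group and the relative change; the sample values are made up.

```python
from statistics import median

def cycle_time_comparison(prs):
    """Return (Copilot median, manual median, percent change vs. manual)."""
    copilot = [hours for labeled, hours in prs if labeled]
    manual = [hours for labeled, hours in prs if not labeled]
    m_copilot, m_manual = median(copilot), median(manual)
    change_pct = 100 * (m_copilot - m_manual) / m_manual  # negative means faster
    return m_copilot, m_manual, change_pct

# Hypothetical cycle times in hours.
sample = [(True, 20), (True, 30), (True, 40), (False, 40), (False, 50), (False, 60)]
copilot_h, manual_h, change = cycle_time_comparison(sample)
print(f"Copilot: {copilot_h} h, manual: {manual_h} h, change: {change:+.0f}%")
```

A negative change suggests Copilot-labeled PRs move faster. Pairing this with review time shows whether any speedup survives review or is offset by extra scrutiny.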